Data Collection in Breast Cancer Research: Study Design and Methodology

Introduction to Data Collection

In contemporary breast cancer research, particularly for early-stage breast cancer (BCa), rigorous data collection and study design are essential. This article describes the data-gathering methodology of two clinical trials completed at Ghent University Hospital. The trials, registered on ClinicalTrials.gov as NCT04343079 and NCT04999917, were approved by the Ghent University Hospital ethics committee and conducted in accordance with the Declaration of Helsinki.

Study Design Overview

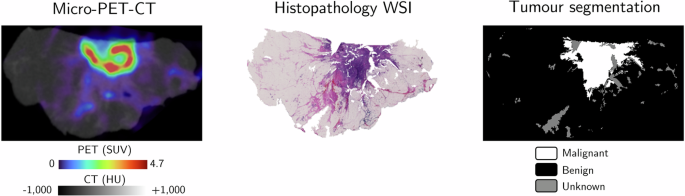

The primary data comprised micro-PET-CT images and histopathological data from patients diagnosed with early-stage breast cancer, categorized as invasive carcinoma of no special type (NST), invasive lobular carcinoma (ILC), or ductal carcinoma in situ (DCIS). Patients undergoing breast-conserving surgery (BCS) gave written informed consent to participate in the study and to the publication of its findings.

Micro-PET-CT Specimen Images

Participants received a preoperative injection of the radiotracer [¹⁸F]FDG at a dose of either 0.8 MBq/kg or 4 MBq/kg. Imaging was performed with the β-CUBE® and X-CUBE® (MOLECUBES NV, Belgium), which are designed for preclinical in vivo imaging, and with the AURA 10® (XEOS Medical NV, Belgium), which is designed specifically for ex vivo specimen scanning. Although the scanners serve different purposes, their similar specifications allow all of them to produce high-quality images with sub-millimeter spatial resolution.

To maintain consistency across the data, all PET images were reconstructed to simulate a uniform acquisition time of 10 minutes, using 20 iterations of the maximum likelihood expectation maximization (MLEM) algorithm and an isotropic voxel size of 400 μm. The corresponding CT images were acquired in 1 to 3 minutes and reconstructed to an isotropic voxel size of 100 μm.
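For readers unfamiliar with MLEM, the update rule can be illustrated on a toy problem. The sketch below is not the scanners' reconstruction software: the system matrix `A`, the activity vector `x_true`, and the noiseless measurements `y` are made-up stand-ins, and only the iteration count of 20 is taken from the text.

```python
import numpy as np

def mlem(system_matrix, counts, n_iter=20, eps=1e-12):
    """Toy MLEM: x <- x / (A^T 1) * A^T (y / (A x))."""
    A = np.asarray(system_matrix, dtype=float)
    y = np.asarray(counts, dtype=float)
    sensitivity = A.sum(axis=0)            # A^T 1, one value per voxel
    x = np.ones(A.shape[1])                # strictly positive starting image
    for _ in range(n_iter):
        projection = A @ x                 # forward-project current estimate
        ratio = y / np.maximum(projection, eps)
        x = x / np.maximum(sensitivity, eps) * (A.T @ ratio)
    return x

# Hypothetical toy problem: 40 detector bins, 8 voxels.
rng = np.random.default_rng(0)
A = rng.uniform(0.0, 1.0, size=(40, 8))
x_true = rng.uniform(0.5, 2.0, size=8)
y = A @ x_true                             # noiseless measurements
x_hat = mlem(A, y, n_iter=20)
```

The multiplicative form keeps the estimate non-negative, and each iteration preserves the total number of projected counts, which is why MLEM is a common choice for emission data.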

Image Preprocessing

Several preprocessing steps were applied to the micro-PET-CT images before they were passed to the machine learning models. The 3D micro-CT images were expressed in Hounsfield units (HU), while the micro-PET images were converted to standardized uptake values (SUVs) normalized to lean body mass, with lean body mass estimated using the Janmahasatian formulation. Attenuation correction and median filtering were also applied to improve image quality.
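The lean body mass normalization can be sketched as follows. The Janmahasatian coefficients are the published ones; everything else is an assumption made for illustration, namely that the PET values are in Bq/mL, that the injected activity is decay-corrected to scan time with the ¹⁸F half-life, and the function and parameter names themselves.

```python
F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18 in minutes

def lean_body_mass_janmahasatian(weight_kg, height_m, sex):
    """Lean body mass in kg from weight, height and sex (Janmahasatian)."""
    bmi = weight_kg / height_m ** 2
    if sex == "female":
        return 9270.0 * weight_kg / (8780.0 + 244.0 * bmi)
    if sex == "male":
        return 9270.0 * weight_kg / (6680.0 + 216.0 * bmi)
    raise ValueError("sex must be 'female' or 'male'")

def suv_lbm(conc_bq_per_ml, injected_bq, minutes_since_injection, lbm_kg):
    """SUV normalized to lean body mass (assumes tissue density of 1 g/mL)."""
    # Decay-correct the injected activity to the time of the scan.
    decayed_bq = injected_bq * 2.0 ** (-minutes_since_injection / F18_HALF_LIFE_MIN)
    return conc_bq_per_ml * (lbm_kg * 1000.0) / decayed_bq

# Hypothetical patient and voxel value, for illustration only.
lbm = lean_body_mass_janmahasatian(weight_kg=70.0, height_m=1.65, sex="female")
value = suv_lbm(conc_bq_per_ml=5000.0, injected_bq=56e6,
                minutes_since_injection=120.0, lbm_kg=lbm)
```

The same function can be applied voxel-wise to a NumPy array of activity concentrations, since it uses only arithmetic operators.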

To establish binary tissue masks, Otsu’s method was used for image thresholding. Only the largest connected tissue structures were retained, and the background was removed.
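The masking step can be approximated with a few lines of NumPy. This is a minimal sketch rather than the study's pipeline: it assumes face connectivity and a 256-bin histogram, neither of which is stated in the text, and the synthetic image is invented for the example.

```python
from collections import deque
import numpy as np

def otsu_threshold(image, n_bins=256):
    """Threshold that maximizes the between-class variance of the histogram."""
    hist, edges = np.histogram(np.ravel(image), bins=n_bins)
    hist = hist.astype(float)
    centers = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(hist)                       # weight of the lower class
    w1 = np.cumsum(hist[::-1])[::-1]           # weight of the upper class
    m0 = np.cumsum(hist * centers) / np.maximum(w0, 1e-12)
    m1 = (np.cumsum((hist * centers)[::-1]) / np.maximum(w1[::-1], 1e-12))[::-1]
    between = w0[:-1] * w1[1:] * (m0[:-1] - m1[1:]) ** 2
    return centers[int(np.argmax(between))]

def largest_component(mask):
    """Keep only the largest face-connected foreground region (2D or 3D)."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=int)
    offsets = []
    for axis in range(mask.ndim):
        for step in (-1, 1):
            off = [0] * mask.ndim
            off[axis] = step
            offsets.append(tuple(off))
    best_label, best_size, current = 0, 0, 0
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue                           # already part of a component
        current += 1
        labels[seed] = current
        queue, size = deque([seed]), 0
        while queue:                           # breadth-first flood fill
            voxel = queue.popleft()
            size += 1
            for off in offsets:
                nb = tuple(v + o for v, o in zip(voxel, off))
                inside = all(0 <= c < s for c, s in zip(nb, mask.shape))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = current
                    queue.append(nb)
        if size > best_size:
            best_label, best_size = current, size
    if best_label == 0:
        return np.zeros_like(mask)
    return labels == best_label

# Synthetic slice: one large "specimen" and a small piece of debris.
image = np.zeros((20, 20))
image[2:12, 2:12] = 100.0
image[15:17, 15:17] = 100.0
tissue_mask = largest_component(image > otsu_threshold(image))
```

For full-size volumes, a library routine for connected-component labeling would be much faster than this pure-Python flood fill.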

Ground Truth Image Labels

We derived two distinct datasets of ground truth labels from histopathological findings. The first dataset comprised 2D micro-PET-CT slices of lumpectomy specimens, annotated with the true tumor locations. This dataset was used for the supervised training and testing of deep learning (DL) models for tumor segmentation.

In the preparation of this dataset, fresh tumor specimens underwent immediate processing and were sectioned into lamellas. Each lamella, approximately 2-3 mm thick, was imaged before being subjected to hematoxylin and eosin (H&E) staining, with a corresponding whole-slide image (WSI) obtained for analysis.

Annotations and Co-Registration

An experienced pathologist annotated the NST, ILC, and DCIS tumors in the WSIs, with the aim of distinguishing (pre)malignant tissues from benign. Specific protocols were followed to ensure precise co-registration of the micro-PET-CT images and WSIs. A specialized algorithm was developed in-house for this purpose, yielding a single 2D micro-PET-CT image per lamella, accurately aligned with its respective WSI.

The second dataset comprised 3D micro-PET-CT images with defined histopathological margin statuses, utilized for evaluating the performance of the DL models in margin assessment. Following standard care protocols, the histopathology department processed lumpectomy specimens to ascertain margin statuses as per ASCO-CAP guidelines.

Deep Learning Model Architecture

For our segmentation tasks, we used the Residual U-Net (ResU-Net) architecture, which is well established for semantic segmentation of biomedical images. Built upon the foundational U-Net structure, ResU-Net adds residual blocks to improve convergence and mitigate issues such as vanishing gradients.

Model Input and Training

The model was trained on datasets utilizing binary tumor segmentation labels, employing a five-fold cross-validation strategy. Preprocessing of the dataset involved normalizing and scaling images, one-hot encoding true tumor segmentations, and incorporating various augmentations to bolster model robustness.
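Two of the steps above, one-hot encoding and five-fold splitting, can be sketched in NumPy. The helper names are hypothetical, and the text does not say whether folds were formed per slice or per patient; the split below simply shuffles sample indices and would need to operate on patient identifiers if slices from one patient must stay in the same fold.

```python
import numpy as np

def one_hot(mask, n_classes=2):
    """Turn an integer label mask into a trailing one-hot channel axis."""
    return np.eye(n_classes, dtype=np.float32)[np.asarray(mask, dtype=int)]

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train, folds[i]

# Binary tumor mask (0 = background, 1 = tumor) for a tiny 2x2 slice.
encoded = one_hot(np.array([[0, 1], [1, 0]]))

# Five train/test splits over 23 hypothetical samples.
splits = list(k_fold_indices(23, k=5))
```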

The training aimed to optimize hyperparameters for maximum segmentation accuracy, particularly focused on distinguishing between NST tumors and their DCIS components.

Intensity Thresholding Model for Baseline Comparison

To evaluate the effectiveness of the ResU-Net model, we established a conventional intensity thresholding model as a baseline. Using a stratified five-fold approach, sets of optimal thresholds for both micro-CT and micro-PET images were derived to perform segmentation, providing a comparative measure against the performance of our DL models.
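A threshold baseline of this kind can be sketched for a single modality. The text does not describe how the micro-CT and micro-PET thresholds were combined or which criterion defined "optimal", so the example below assumes one image channel and picks the candidate threshold with the highest mean Dice score on the training fold; the synthetic slices are invented.

```python
import numpy as np

def dice(pred, truth):
    """Dice similarity coefficient of two binary masks."""
    pred, truth = np.asarray(pred, dtype=bool), np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, truth).sum() / denom

def best_threshold(images, masks, candidates):
    """Candidate threshold with the highest mean Dice on the training set."""
    scores = [np.mean([dice(img >= t, m) for img, m in zip(images, masks)])
              for t in candidates]
    return candidates[int(np.argmax(scores))]

rng = np.random.default_rng(0)

def synthetic_slice():
    """Noisy background around 1.0 with a brighter 'tumor' around 5.0."""
    truth = np.zeros((32, 32), dtype=bool)
    truth[10:20, 12:22] = True
    image = rng.normal(1.0, 0.2, truth.shape)
    image[truth] = rng.normal(5.0, 0.2, int(truth.sum()))
    return image, truth

train = [synthetic_slice() for _ in range(4)]
test_image, test_truth = synthetic_slice()

candidates = np.linspace(0.0, 6.0, 25)
threshold = best_threshold([img for img, _ in train],
                           [m for _, m in train], candidates)
held_out_dice = dice(test_image >= threshold, test_truth)
```

In a five-fold setting, this selection would be repeated once per fold, with the threshold fitted on four folds and evaluated on the fifth.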

Performance Evaluation Metrics

Model performance for tumor segmentation was assessed using metrics such as the Dice Similarity Coefficient (DSC) and Precision-Recall Area Under Curve (PR-AUC). High DSC indicates strong overlap between predicted and true segmentations, which is crucial for accurate margin assessments.
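Both metrics are straightforward to compute from a predicted probability map and a ground truth mask. In this sketch the PR-AUC is estimated as average precision, which is one common estimator; the text does not say which estimator was used, and the tiny arrays are illustrative only.

```python
import numpy as np

def dice_coefficient(pred, truth):
    """DSC = 2 |P ∩ T| / (|P| + |T|) for binary masks."""
    pred, truth = np.asarray(pred, dtype=bool), np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, truth).sum() / denom

def average_precision(scores, labels):
    """Mean of the precision values at the rank of each positive sample."""
    scores = np.ravel(np.asarray(scores, dtype=float))
    labels = np.ravel(np.asarray(labels, dtype=bool))
    order = np.argsort(-scores, kind="stable")      # highest score first
    ranked = labels[order]
    precision = np.cumsum(ranked) / np.arange(1, ranked.size + 1)
    return float(precision[ranked].sum() / ranked.sum())

pred_mask = np.array([[1, 1], [0, 0]])
true_mask = np.array([[1, 0], [0, 0]])
dsc = dice_coefficient(pred_mask, true_mask)         # 2*1 / (2+1)

probabilities = np.array([0.9, 0.8, 0.7, 0.6])
pixel_labels = np.array([1, 0, 1, 0])
pr_auc = average_precision(probabilities, pixel_labels)
```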

For margin evaluation, predicted statuses were determined by calculating minimum distances between predicted tumor segmentations and specimen contours, allowing for sensitivity, specificity, precision, and F1 scores to be derived.
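The margin computation can be sketched as a minimum distance between predicted tumor voxels and the specimen surface, followed by the usual confusion-matrix metrics. The brute-force distance search, the definition of the surface as specimen voxels with a face neighbor outside the specimen, and the 0.4 mm voxel size in the example are assumptions; the cutoff that turns a distance into a positive or negative margin is deliberately left out because the text does not state it.

```python
import numpy as np

def surface_voxels(mask):
    """Specimen voxels with at least one face neighbor outside the mask."""
    mask = np.asarray(mask, dtype=bool)
    padded = np.pad(mask, 1, constant_values=False)
    core = (slice(1, -1),) * mask.ndim
    interior = np.ones_like(mask)
    for axis in range(mask.ndim):
        for shift in (-1, 1):
            interior &= np.roll(padded, shift, axis=axis)[core]
    return mask & ~interior

def min_margin_mm(tumor_mask, specimen_mask, voxel_mm):
    """Smallest tumor-to-surface distance; brute force, fine for small arrays."""
    tumor = np.argwhere(tumor_mask)
    surface = np.argwhere(surface_voxels(specimen_mask))
    if len(tumor) == 0 or len(surface) == 0:
        return float("inf")
    diffs = tumor[:, None, :] - surface[None, :, :]
    return float(np.sqrt((diffs ** 2).sum(axis=-1)).min() * voxel_mm)

def binary_metrics(pred, truth):
    """Sensitivity, specificity, precision and F1 for binary margin calls."""
    pred, truth = np.asarray(pred, dtype=bool), np.asarray(truth, dtype=bool)
    tp, tn = int((pred & truth).sum()), int((~pred & ~truth).sum())
    fp, fn = int((pred & ~truth).sum()), int((~pred & truth).sum())
    ratio = lambda num, den: num / den if den else float("nan")
    return {"sensitivity": ratio(tp, tp + fn),
            "specificity": ratio(tn, tn + fp),
            "precision": ratio(tp, tp + fp),
            "f1": ratio(2 * tp, 2 * tp + fp + fn)}

specimen = np.zeros((12, 12), dtype=bool)
specimen[1:11, 1:11] = True
tumor = np.zeros_like(specimen)
tumor[5:7, 5:7] = True
margin = min_margin_mm(tumor, specimen, voxel_mm=0.4)

# Hypothetical predicted vs. histopathological margin statuses (True = positive).
metrics = binary_metrics([1, 1, 0, 0, 1], [1, 0, 0, 0, 1])
```

For full-resolution 3D volumes, a Euclidean distance transform would replace the pairwise search, which scales with the product of the two voxel counts.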

Statistical Analysis Framework

Statistical tests, including the Wilcoxon signed-rank test and McNemar’s test, were used to compare the performance of our model with that of conventional practices, and to compare different model inputs.
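As an illustration of the paired comparison, McNemar's test looks only at the cases where two methods disagree. The sketch below implements the exact (binomial) two-sided version using the standard library; whether the study used the exact or the chi-square variant is not stated, and the per-specimen correctness flags are invented.

```python
from math import comb

def discordant_counts(correct_a, correct_b):
    """Count cases where exactly one of two paired methods is correct."""
    b = sum(1 for a, o in zip(correct_a, correct_b) if a and not o)
    c = sum(1 for a, o in zip(correct_a, correct_b) if o and not a)
    return b, c

def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the discordant counts b and c."""
    n = b + c
    if n == 0:
        return 1.0                      # the methods never disagree
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)

# Hypothetical per-specimen correctness of two methods (1 = correct call).
model_correct    = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1]
baseline_correct = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
b, c = discordant_counts(model_correct, baseline_correct)
p_value = mcnemar_exact(b, c)
```

The Wilcoxon signed-rank test plays the analogous role for paired continuous scores, such as per-image DSC values from two models.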

With this methodology and data collection process, we aim to advance the detection and segmentation of tumors in breast cancer using both deep learning techniques and traditional image processing methods. Continue following the developments to see how these models may enhance diagnostic accuracy and patient outcomes.