Deep Adaptive Attention Network for Content-Based Image Retrieval (DAAN-CBIR)

Introduction

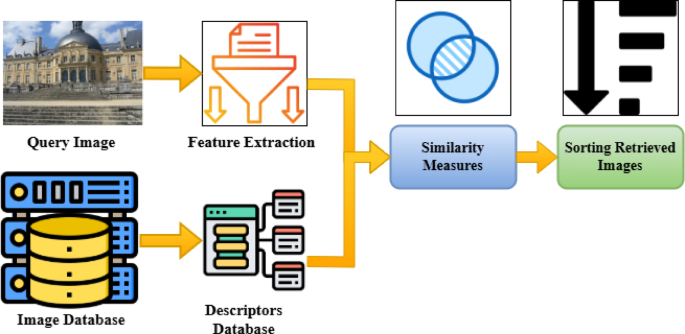

Content-Based Image Retrieval (CBIR) retrieves images based on their visual content rather than textual metadata. Doing so requires a reliable measure of image similarity, which remains one of the hardest problems in CBIR. Human observers judge similarity using attentional focus and semantic context, but translating that judgment into an algorithm is difficult, and many existing techniques struggle to deliver results that are both fast and accurate. The Deep Adaptive Attention Network (DAAN-CBIR) addresses these challenges through multi-scale feature extraction and a hybrid neural architecture.

The Need for Attentional Mechanisms in CBIR

Traditional CBIR systems often struggle because they do not focus on the relevant parts of an image, which hurts both processing efficiency and retrieval accuracy. DAAN-CBIR aims to mimic human visual perception with attention mechanisms that prioritize significant regions and ignore extraneous detail. This focus lets the system extract the critical information from the selected image areas and improves retrieval quality.

Network Model Overview

At the heart of DAAN-CBIR lies its unique structure, which consists of three primary components:

- Visual Attention Model: This model identifies areas of interest within the images based on their content.

- Feature Fusion Module: This component integrates the different feature representations for a more comprehensive processing approach.

- Attention Map Generation: This stage produces attention maps that dynamically highlight the regions in the candidate images that correspond to the query images.

A pre-trained Deep Neural Network (DNN) produces high-dimensional feature maps that capture both spatial and semantic attributes of the input images, which gives the later stages a stronger basis for judging relevance.

Visual Feature Encoding

DAAN-CBIR uses a DNN to extract visual features from the input images. The framework takes a candidate image, denoted \( J_c \), and a query region-of-interest (ROI) image, denoted \( J_q \), which may differ in size. Global Average Pooling (GAP) then reduces the high-dimensional tensors produced by the DNN to compact feature vectors such as \( U_q \).
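
As a minimal sketch of the pooling step, the snippet below applies global average pooling to two small feature maps of different spatial sizes. The nested-list layout (channels, then rows, then columns), the function name, and the toy values are assumptions made for illustration; they are not taken from the DAAN-CBIR implementation.

```python
def global_average_pool(feature_map):
    """Collapse a C x H x W feature map (nested lists) into a length-C vector."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Hypothetical 2-channel feature maps with different spatial sizes.
query_map = [
    [[1.0, 3.0], [5.0, 7.0]],            # channel 0, 2 x 2
    [[0.0, 2.0], [4.0, 6.0]],            # channel 1, 2 x 2
]
candidate_map = [
    [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0]],  # channel 0, 2 x 3
    [[0.0, 0.0, 0.0], [6.0, 6.0, 6.0]],  # channel 1, 2 x 3
]

u_q = global_average_pool(query_map)      # one value per channel
u_c = global_average_pool(candidate_map)  # same length despite the larger map
```

Because the average is taken over all spatial positions, both inputs yield vectors whose length equals the channel count, which is what makes images of different sizes comparable.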

Training on positive and negative pairs teaches the model which features indicate similarity. The resulting representation is more robust and judges relevance by context rather than by surface-level characteristics, which improves retrieval effectiveness.
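
The text does not say which loss is used for the positive and negative pairs. A triplet margin loss is one common choice for this kind of training, and the sketch below uses it purely as an assumption to show how such pairs can shape the feature space. The function names, the margin value, and the toy vectors are all hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_margin_loss(anchor, positive, negative, margin=1.0):
    """Zero once the negative is at least `margin` farther from the anchor
    than the positive is; positive otherwise."""
    gap = euclidean(anchor, positive) - euclidean(anchor, negative)
    return max(0.0, gap + margin)


anchor = [0.0, 0.0]
positive = [3.0, 4.0]        # distance 5 from the anchor
hard_negative = [0.0, 1.0]   # distance 1: closer than the positive
easy_negative = [6.0, 8.0]   # distance 10: already far enough away

loss_hard = triplet_margin_loss(anchor, positive, hard_negative)
loss_easy = triplet_margin_loss(anchor, positive, easy_negative)
```

Only the hard negative produces a non-zero loss here, which is why the choice of negatives matters during training.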

Feature Fusion Mechanism

A central part of the network is its feature fusion mechanism, which combines features from different sources. The module uses multi-scale convolution blocks whose layers have different kernel sizes, so the network can respond to image structure at several spatial scales, from fine detail to broader context.
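
To illustrate the idea of a multi-scale convolution block, the sketch below runs one single-channel image through parallel branches with kernel sizes 1, 3, and 5 and stacks the outputs as channels. The kernel sizes, the fixed averaging kernels (stand-ins for learned weights), and the function names are assumptions; the actual block layout in DAAN-CBIR is not specified in the text.

```python
def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero padding.

    Expects an odd-sized square kernel; the output has the input's size.
    """
    k = len(kernel)
    pad = k // 2
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            total = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < height and 0 <= x < width:
                        total += image[y][x] * kernel[di][dj]
            out[i][j] = total
    return out


def box_kernel(size):
    """Averaging kernel; a placeholder for weights that would be learned."""
    return [[1.0 / (size * size)] * size for _ in range(size)]


def multi_scale_block(image, kernel_sizes=(1, 3, 5)):
    """Apply one filter per kernel size and stack the results as channels."""
    return [conv2d_same(image, box_kernel(s)) for s in kernel_sizes]


image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 9.0  # a single bright pixel in the centre

branches = multi_scale_block(image)
```

The 1x1 branch leaves the bright pixel untouched, while the 5x5 branch spreads its influence to the image corners, showing how larger kernels bring in wider context.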

The attention model is trained to balance the semantics of the candidate image’s feature tensor against those of the query image’s region of interest. Normalizing the feature representations keeps any single source from dominating through scale alone, so the most salient aspects drive the final fused feature.
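
One way to picture the normalization step is to L2-normalize the query vector and the feature vector at every spatial location of the candidate tensor, then compare them by dot product (cosine similarity). This is a sketch under that assumption; the text does not specify which normalization or comparison DAAN-CBIR uses, and all names and values below are hypothetical.

```python
import math


def l2_normalize(vector, eps=1e-12):
    """Scale a vector to unit length; all-zero vectors stay zero."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / max(norm, eps) for v in vector]


def similarity_map(candidate_tensor, query_vector):
    """Cosine similarity between the query vector and each spatial location.

    candidate_tensor is C x H x W (nested lists); query_vector has length C.
    """
    channels = len(candidate_tensor)
    height, width = len(candidate_tensor[0]), len(candidate_tensor[0][0])
    q = l2_normalize(query_vector)
    result = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            local = l2_normalize(
                [candidate_tensor[c][i][j] for c in range(channels)]
            )
            result[i][j] = sum(a * b for a, b in zip(local, q))
    return result


candidate = [
    [[2.0, 0.0], [0.0, 0.0]],  # channel 0
    [[0.0, 5.0], [0.0, 0.0]],  # channel 1
]
query = [10.0, 0.0]  # large magnitude, same direction as location (0, 0)

sim = similarity_map(candidate, query)
```

After normalization the query's large magnitude has no effect: only the direction of each feature vector determines the score.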

Attention Maps and Their Importance

Attention maps are central to DAAN-CBIR: they emphasize the regions where the content of the query and the candidate image overlap most. The maps are learned through supervised training on pairs of annotated images, which lets the model predict probability maps that mark corresponding regions and thereby improves retrieval precision.
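
The supervised training of probability maps can be sketched as follows, assuming that raw per-location scores are squashed with a sigmoid and compared against a binary annotation mask using binary cross-entropy. Both the sigmoid and the loss are assumptions chosen for illustration; the text does not name the functions DAAN-CBIR uses, and the scores and masks below are made up.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def probability_map(score_map):
    """Turn raw per-location scores into probabilities in (0, 1)."""
    return [[sigmoid(s) for s in row] for row in score_map]


def binary_cross_entropy(prob_map, target_mask, eps=1e-7):
    """Mean binary cross-entropy between a probability map and a 0/1 mask."""
    total, count = 0.0, 0
    for prob_row, target_row in zip(prob_map, target_mask):
        for p, t in zip(prob_row, target_row):
            p = min(max(p, eps), 1.0 - eps)  # guard against log(0)
            total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
            count += 1
    return total / count


scores = [[4.0, -4.0], [-4.0, -4.0]]  # hypothetical raw attention scores
matching_mask = [[1, 0], [0, 0]]      # annotation that agrees with the scores
opposite_mask = [[0, 1], [1, 1]]      # annotation that disagrees everywhere

probs = probability_map(scores)
good_loss = binary_cross_entropy(probs, matching_mask)
bad_loss = binary_cross_entropy(probs, opposite_mask)
```

The loss is small when the highlighted region matches the annotation and large when it does not, which is the signal a supervised attention map would be trained on.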

The Role of Adaptation in Attention Mechanisms

A notable feature of DAAN-CBIR is its ability to adapt dynamically to variations in query types and datasets. Its attention mechanisms prioritize the visual attributes that matter in a given context, which reduces false positives and improves the quality of the retrieved images.

Training and Model Optimization

The model is trained with Stochastic Gradient Descent (SGD), among other techniques, and learns from both positive and negative samples. At every training iteration, a batch sampling strategy varies the negative matches the model sees, which gives it a more robust understanding of feature context.
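
The sketch below shows one plausible shape for such a training loop component: a sampler that draws a fresh set of negatives for each anchor, plus a plain SGD update. The sampling scheme, the function names, the labels, and the learning rate are all assumptions; the text does not describe the specific strategy used by DAAN-CBIR.

```python
import random


def sample_batch(labels, batch_size, negatives_per_anchor, rng):
    """Draw (anchor, positive, negatives) index triples from labelled items.

    Assumes at least one class has two or more items and that enough
    items of other classes exist; otherwise the loop would not terminate.
    """
    indices = list(range(len(labels)))
    batch = []
    while len(batch) < batch_size:
        anchor = rng.choice(indices)
        positives = [
            i for i in indices if labels[i] == labels[anchor] and i != anchor
        ]
        negatives = [i for i in indices if labels[i] != labels[anchor]]
        if not positives or len(negatives) < negatives_per_anchor:
            continue
        batch.append(
            (anchor, rng.choice(positives),
             rng.sample(negatives, negatives_per_anchor))
        )
    return batch


def sgd_step(weights, gradients, learning_rate=0.01):
    """One plain SGD update: move each weight against its gradient."""
    return [w - learning_rate * g for w, g in zip(weights, gradients)]


labels = ["cat", "cat", "dog", "dog", "car", "car"]  # hypothetical classes
rng = random.Random(0)  # seeded so the sketch is repeatable
batch = sample_batch(labels, batch_size=4, negatives_per_anchor=2, rng=rng)
updated = sgd_step([1.0, -2.0], [0.5, -0.5], learning_rate=0.1)
```

Resampling negatives on every call means the same anchor is paired with different non-matching images across iterations, which is one simple way to obtain the variability described above.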

Empirical Verification and Results

A suite of empirical tests was conducted to evaluate the effectiveness of DAAN-CBIR, with metrics such as recall and precision tracked to assess performance. Additional methods, such as channel shuffling and the attention modules, offer further refinement and improve the model’s overall performance in image retrieval tasks.
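
For reference, precision and recall for a ranked retrieval result are often computed at a cutoff k. The sketch below shows that calculation on made-up image identifiers; the cutoff values and the data are hypothetical and do not represent results reported for DAAN-CBIR.

```python
def precision_recall_at_k(ranked_ids, relevant_ids, k):
    """Precision and recall over the top-k items of a ranked result list."""
    top_k = ranked_ids[:k]
    hits = sum(1 for item in top_k if item in relevant_ids)
    precision = hits / k
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


# Hypothetical ranking returned for one query, best match first.
ranked = ["img7", "img2", "img9", "img4", "img1"]
# Hypothetical ground truth: images that truly match the query.
relevant = {"img2", "img4", "img8"}

p_at_3, r_at_3 = precision_recall_at_k(ranked, relevant, 3)
p_at_5, r_at_5 = precision_recall_at_k(ranked, relevant, 5)
```

Raising k from 3 to 5 finds a second relevant image, so recall increases, while precision reflects how many of the returned images were actually relevant.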

Overall, DAAN-CBIR combines attention mechanisms, multi-scale feature extraction, and feature fusion to approximate the way humans attend to visual information. Its approach to attention and feature extraction promises more intelligent and efficient image retrieval, and this line of work on attention mechanisms could bring retrieval systems closer to the nuance of human perception.