Enhanced Feature Extraction for Millimeter-Wave Images: A Deep Dive

Processing and interpreting millimeter-wave images with learned models is increasingly important in fields such as security and surveillance. This article examines three techniques (multi-scale convolution, the Task-Integrated Block (TIB), and the Text-Image Interaction Module (TIIM)) that together improve feature extraction and the alignment between natural-language and visual features.

Multi-Scale Convolution

Multi-scale convolution is a powerful strategy that employs several parallel branches with convolutional kernels of varying sizes within a single layer. The essence of this approach lies in its ability to capture features at different scales simultaneously. Smaller kernels focus on fine details, while larger ones detect broader, macro-level features.

In a typical application of multi-scale convolution, each branch independently applies its convolutional operations to the same input. The feature maps extracted by the branches are then fused into a single multi-scale output. This helps the model recognize objects and their details, particularly in complex visual environments. For instance, integrating this technique into a framework such as YOLOv8 can improve the detector's ability to find diverse targets in millimeter-wave imagery and make it more robust.

Mathematical Representation

The convolution operation for each scale can be expressed as:

\[ n_i = I \ast K_i, \quad i = 1, \dots, k \]

Where:

- \( I \) is the input feature map

- \( K_i \) represents the convolutional kernel for scale \( i \)

- \( k \) is the number of scales (parallel branches)

The final output \( N \) is computed by combining all scale outputs:

\[ N = [n_1, n_2, \dots, n_k] \]
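The two formulas above can be illustrated with a minimal pure-Python sketch. It is not the article's implementation: the averaging kernels from the hypothetical helper `mean_kernel` stand in for learned kernels \( K_i \), the branch sizes 1, 3 and 5 are assumed, and zero padding is used so that every branch output keeps the input size.

```python
def conv2d_same(image, kernel):
    """Zero-padded, stride-1 2D cross-correlation; output size equals input size.

    Deep-learning "convolution" is cross-correlation; for the symmetric
    kernels used below the two operations give the same result.
    """
    h, w = len(image), len(image[0])
    k = len(kernel)              # assumes a square kernel with odd side length
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - pad, x + dx - pad
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[dy][dx]
            out[y][x] = acc
    return out


def mean_kernel(size):
    """Hypothetical stand-in for a learned kernel K_i: a size x size averaging filter."""
    value = 1.0 / (size * size)
    return [[value] * size for _ in range(size)]


def multi_scale_conv(image, kernel_sizes=(1, 3, 5)):
    """Compute n_i = I * K_i for each branch and return the branch outputs as the list N."""
    return [conv2d_same(image, mean_kernel(s)) for s in kernel_sizes]


# A 5x5 input feature map with a single bright pixel in the centre.
image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 9.0

N = multi_scale_conv(image)
```

In this toy input the 1×1 branch keeps the bright pixel sharp, while the 3×3 and 5×5 branches spread it over progressively larger neighbourhoods, which is the fine-versus-broad behaviour the section describes. A real detector would typically concatenate the branch outputs along the channel axis rather than keep them in a list.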

This multi-scale output matters in settings where traditional single-scale convolution may falter, particularly when small objects are lost amid larger background distractions.

Task-Integrated Block (TIB)

The Task-Integrated Block is designed to make better use of image feature information, which is particularly beneficial for detecting small objects. It combines cross-attention and self-attention mechanisms to refine feature extraction, thereby improving localization and detection performance.

Self-Attention Mechanism

Within the TIB, self-attention dynamically assigns different weights to different regions of the input feature map. This lets the model focus on the most informative features and strengthens the correlation between high-level semantics and low-level details. For instance, when detection maps at three different scales are processed, the small-object map is treated as the most important one and is designated the primary weight map in the feature integration process.

Cross-Attention Mechanism

Cross-attention facilitates the correlation between distinct detection maps. It works by referencing the small object detection map to enhance the information captured from medium and large object scales. This comprehensive integration is pivotal in crafting a feature representation that is not only detailed but also accurate in object localization.
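Both attention steps of the TIB can be sketched with one scaled dot-product routine. This is a simplified illustration under stated assumptions, not the block's actual design: learned query, key and value projections are omitted, the feature tokens are made-up values, and the choice to let medium-scale tokens query the small-object map and add the result back as a residual is one possible reading of the description above.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, weights), one row per query."""
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wi * v[d] for wi, v in zip(w, values))
                        for d in range(len(values[0]))])
    return outputs, weights


# Hypothetical feature tokens (one vector per spatial region), channel width 2.
small_map = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # small-object detection map
medium_map = [[2.0, 0.0], [0.0, 2.0]]              # medium-object detection map

# Self-attention: the small-object map attends to its own regions.
self_out, self_weights = attention(small_map, small_map, small_map)

# Cross-attention (assumed direction): medium-scale tokens query the
# small-object map; the attended result is added back as a residual.
cross_out, cross_weights = attention(medium_map, small_map, small_map)
enhanced_medium = [[m + c for m, c in zip(m_row, c_row)]
                   for m_row, c_row in zip(medium_map, cross_out)]
```

Each row of `self_weights` and `cross_weights` sums to one, so the weights can be read as how strongly a region draws on every region of the small-object map. The same call with a large-object map as the query would cover the third scale.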

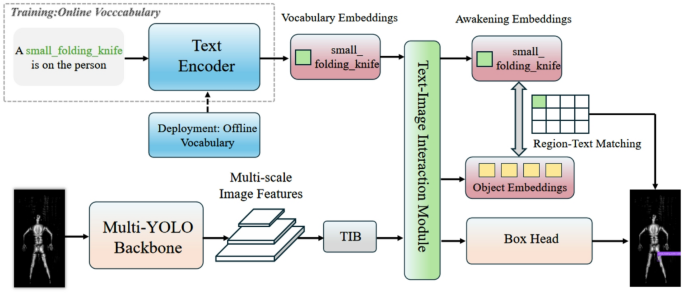

Text-Image Interaction Module (TIIM)

The Text-Image Interaction Module targets object detection in diverse environments, especially open scenarios with high variability. It eases the traditional limitations of closed-set detection systems by letting text and image features interact dynamically.

I-TM Layer and T-IA Layer

The TIIM comprises two main components: the I-TM layer, which updates image content with text features, and the T-IA layer, which refreshes text information based on the refined image data. The I-TM layer leverages attention mechanisms to superimpose text on image features, effectively enhancing the expressiveness of the visual data.

In the other direction, the T-IA layer updates text embeddings by integrating salient information drawn from the image features. This reciprocal enhancement yields a richer representation, improving the model's ability to recognize complex and subtle objects within the imagery.
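The exchange between the two layers can be sketched as a pair of attention updates. The exact form of the I-TM and T-IA layers is not given here, so everything below is an assumption: both layers are modeled as plain softmax attention with a residual connection, learned projections are omitted, the text update reads from the already refined image features, and `tiim_step`, `attend` and `add_rows` are hypothetical names.

```python
import math


def attend(queries, context):
    """Each query takes a softmax-weighted average of the context vectors."""
    scale = math.sqrt(len(context[0]))
    result = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, c)) / scale for c in context]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        result.append([sum(e / total * c[d] for e, c in zip(exps, context))
                       for d in range(len(context[0]))])
    return result


def add_rows(a, b):
    """Element-wise residual sum of two equally shaped lists of vectors."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def tiim_step(image_feats, text_embeds):
    """One text-image exchange: I-TM updates the image, then T-IA updates the text."""
    # I-TM (assumed form): image regions attend to the text embeddings.
    image_updated = add_rows(image_feats, attend(image_feats, text_embeds))
    # T-IA (assumed form): text embeddings attend to the refined image regions.
    text_updated = add_rows(text_embeds, attend(text_embeds, image_updated))
    return image_updated, text_updated


# Hypothetical inputs: 3 image regions and 2 text prompts, embedding width 2.
image_feats = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
text_embeds = [[1.0, 0.0], [0.0, 1.0]]
image_updated, text_updated = tiim_step(image_feats, text_embeds)
```

Both outputs keep the shapes of their inputs, so the step can be stacked or repeated. Image regions that align with a prompt are pulled further toward it, and each prompt in turn absorbs the regions that match it best.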

Efficient Model Deployment

The TIIM can efficiently reparameterize offline vocabulary embeddings into model weights suitable for convolutional or linear layers, ensuring that the model remains adaptable to complex scenes while maintaining high performance in object detection tasks.
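One way to picture this reparameterization is a bias-free linear layer whose weight rows are the cached text embeddings, so the text encoder is no longer needed at inference time. The sketch below assumes L2-normalized embeddings and cosine-style scoring; the class `ReparameterizedHead`, the two vocabulary entries and all numeric values are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


class ReparameterizedHead:
    """Linear classification head whose weight rows are frozen text embeddings."""

    def __init__(self, vocab_embeddings):
        # Done once, offline: after this step the text encoder is not called again.
        self.weight = [l2_normalize(e) for e in vocab_embeddings]

    def __call__(self, region_feature):
        # A bias-free linear layer: one dot product per vocabulary entry.
        x = l2_normalize(region_feature)
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weight]


# Hypothetical offline vocabulary embeddings (2 entries, width 3).
vocab_embeddings = {"knife": [0.9, 0.1, 0.0], "phone": [0.0, 0.2, 0.8]}
labels = list(vocab_embeddings)
head = ReparameterizedHead(list(vocab_embeddings.values()))

# Hypothetical visual embedding of one detected region.
region_feature = [0.8, 0.2, 0.1]
logits = head(region_feature)
predicted = labels[logits.index(max(logits))]
```

Applied at every spatial position of a feature map, the same weight matrix acts as a 1×1 convolution, which is why the embeddings suit either layer type. Changing the vocabulary only requires rebuilding the weight rows, not retraining the head.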

Text Detector Integration

Integrating a pre-trained Transformer text encoder further boosts the capabilities of our model by generating versatile text embeddings, crucial for linking visual objects with their textual descriptors. By replacing traditional instance annotations with region-text pairs, the revamped approach allows for an enriched interaction between image and text features during model training.
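The move from instance annotations to region-text pairs can be sketched as a small data conversion. The record layouts, class names and box coordinates below are hypothetical and only illustrate the idea of pairing each box with free text instead of a fixed class index.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceAnnotation:
    """Closed-set label: a box plus an index into a fixed class list."""
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels
    class_id: int


@dataclass(frozen=True)
class RegionText:
    """Open-vocabulary label: a box plus the text that describes it."""
    box: tuple
    text: str


def to_region_text(annotations, class_names):
    """Convert instance annotations to region-text pairs via the class-name list."""
    return [RegionText(a.box, class_names[a.class_id]) for a in annotations]


# Hypothetical class list and annotations for one millimeter-wave image.
class_names = ["knife", "phone"]
annotations = [
    InstanceAnnotation((40, 60, 80, 120), 0),
    InstanceAnnotation((150, 200, 190, 230), 1),
]
pairs = to_region_text(annotations, class_names)

# Unique texts that would be passed to the text encoder for this image.
prompts = sorted({p.text for p in pairs})
```

Because the text field is free-form, the same structure can hold longer descriptions or phrases that never appeared in a fixed class list, which is what allows varied prompts at inference.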

This innovative framework not only enables more accurate detection by evaluating similarities between text and enriched visual embeddings but also enhances the model’s adaptability to varied prompts during inference.

Together, multi-scale convolution, the Task-Integrated Block, and the Text-Image Interaction Module strengthen the interaction between visual and textual information and improve the recognition capabilities needed for real-world applications, particularly in complex environments.