Exploring the OpenDeID v2 Corpus: A Deep Dive into Deidentification Methods and Performance Metrics

The OpenDeID v2 corpus is a significant resource for machine learning research on health data privacy. Built to evaluate machine learning and hybrid deidentification methods in the SREDH/AI CUP 2023 competition, it extends the OpenDeID v1 corpus, which was sourced from the Health Science Alliance (HSA) biobank at the Lowy Cancer Research Center, University of New South Wales, Australia. After additional curation, OpenDeID v2 contains 3,244 pathology reports, split into training, validation, and test sets of 1,734, 560, and 950 reports, respectively.

Structure and Content of the OpenDeID v2 Corpus

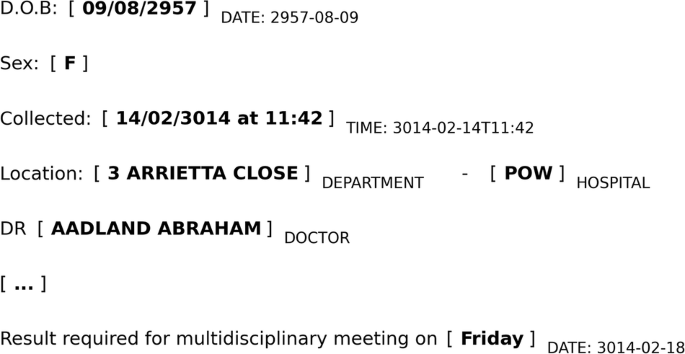

The corpus annotates six top-level sensitive health information (SHI) categories: NAME, LOCATION, AGE, DATE, CONTACT, and ID. Every category except AGE is further divided into subcategories, which allows finer-grained detection and labeling. The DATE category, for instance, is subdivided into DATE, TIME, DURATION, and SET, and every temporal expression is normalized to the ISO 8601 standard, so that equivalent dates and times written in different surface forms share one canonical value. Detailed annotation guidelines and a documented annotation process accompany the dataset, supporting its reliability and reuse.
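To make the normalization step concrete, the sketch below converts two kinds of temporal expressions into ISO 8601 strings. It is a minimal illustration, not the corpus’s annotation tooling: the function names are hypothetical, only day-first numeric dates (as in 3/11/2019) and simple durations (as in 3 weeks) are handled, and the day-first convention is our assumption rather than a rule taken from the OpenDeID guidelines.

```python
import re
from datetime import date

# Hypothetical normalizers for two temporal SHI subcategories (DATE, DURATION).
# Assumption: numeric dates are written day-first (D/M/YYYY or D.M.YYYY).
_DATE_RE = re.compile(r"^(\d{1,2})[/.](\d{1,2})[/.](\d{4})$")
_DURATION_RE = re.compile(r"^(\d+)\s*(day|week|month|year)s?$", re.IGNORECASE)
_DURATION_UNITS = {"day": "D", "week": "W", "month": "M", "year": "Y"}


def normalize_date(text: str) -> str:
    """Convert a day-first numeric date such as '3/11/2019' to 'YYYY-MM-DD'."""
    m = _DATE_RE.match(text.strip())
    if m is None:
        raise ValueError(f"unrecognized date: {text!r}")
    day, month, year = (int(g) for g in m.groups())
    # date() rejects impossible calendar values; isoformat() yields YYYY-MM-DD.
    return date(year, month, day).isoformat()


def normalize_duration(text: str) -> str:
    """Convert a simple duration such as '3 weeks' to ISO 8601 form ('P3W')."""
    m = _DURATION_RE.match(text.strip())
    if m is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    amount, unit = m.groups()
    return f"P{int(amount)}{_DURATION_UNITS[unit.lower()]}"


print(normalize_date("3/11/2019"))    # 2019-11-03
print(normalize_duration("3 weeks"))  # P3W
```

Real reports contain many more surface forms (month names, partial dates, relative expressions), and TIME and SET values need their own rules, so a practical normalizer would need far broader coverage than this sketch.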

Supplementary documents describe the dataset’s structure in more detail, including how SHI instances are distributed across categories and subcategories and how those categories relate to one another.

In-Context Learning Performance with Pythia Models

The Pythia model suite served as a baseline for evaluating the corpus and was tested on both subtasks to assess its few-shot learning capabilities. Instead of relying solely on previously used example-selection methods, the evaluation applied a k-nearest neighbor (kNN) approach to in-context learning (ICL): for each input, the five training instances closest to it were chosen as demonstrations, on the premise that similar examples elicit better model responses.
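The kNN selection step can be sketched as follows. This is an illustrative reconstruction rather than the organizers’ code: it assumes each report has already been mapped to an embedding vector by some sentence encoder (not specified in the source), ranks training instances by cosine similarity, and keeps the top k = 5. Placing the closest demonstration last, next to the query, is our own assumption about how closer instances might be emphasized; the helper names and the Input/Output prompt format are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_demonstrations(query_vec: Sequence[float],
                          train_vecs: Sequence[Sequence[float]],
                          k: int = 5) -> List[int]:
    """Indices of the k most similar training instances, closest one last."""
    ranked = sorted(range(len(train_vecs)),
                    key=lambda i: cosine(query_vec, train_vecs[i]),
                    reverse=True)  # stable: ties keep training order
    # Assumption: put the nearest example right before the query in the prompt.
    return list(reversed(ranked[:k]))


def build_prompt(query_text: str,
                 train_pairs: Sequence[Tuple[str, str]],
                 demo_idx: Sequence[int]) -> str:
    """Hypothetical prompt format: Input/Output demonstrations, then the query."""
    parts = [f"Input: {train_pairs[i][0]}\nOutput: {train_pairs[i][1]}"
             for i in demo_idx]
    parts.append(f"Input: {query_text}\nOutput:")
    return "\n\n".join(parts)
```

In practice `train_vecs` would hold the encoded training reports and `train_pairs` their (report text, annotated output) pairs; because Python’s sort is stable, instances with equal similarity keep their original training order.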

Pythia models with more than 1 billion parameters achieved results comparable to the average of all competition submissions on Subtask 1 (SHI recognition), but fell short of the average F1 score on Subtask 2 (temporal normalization), which proved more challenging. Even the 160-million-parameter model closely approached the average macro-averaged score on Subtask 1, indicating that smaller models retain some effectiveness on specific tasks.
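Since the comparison rests on F1 scores, the difference between micro- and macro-averaging matters: micro-F1 pools counts over all SHI types, while macro-F1 averages per-type F1 and therefore weights rare types as heavily as common ones. The sketch below assumes exact matching of document, span offsets, and label, and represents annotations as hypothetical `(doc_id, start, end, label)` tuples; the competition’s official scorer and matching criteria may differ.

```python
from collections import Counter
from typing import Set, Tuple

Span = Tuple[str, int, int, str]  # (doc_id, start, end, label), hypothetical format


def prf(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from counts; undefined ratios become 0.0."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f


def micro_macro_f1(gold: Set[Span], pred: Set[Span]) -> Tuple[float, float]:
    """Exact-match span scoring; macro averages per-label F1 over all labels seen."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for span in pred:
        if span in gold:
            tp[span[3]] += 1
        else:
            fp[span[3]] += 1
    for span in gold - pred:
        fn[span[3]] += 1
    labels = {span[3] for span in gold | pred}
    micro = prf(sum(tp.values()), sum(fp.values()), sum(fn.values()))[2]
    if not labels:
        return micro, 0.0
    macro = sum(prf(tp[lab], fp[lab], fn[lab])[2] for lab in labels) / len(labels)
    return micro, macro
```

For a normalization subtask, one plausible adaptation is to append the normalized value to each tuple, so that a prediction counts as correct only when both the span and its ISO 8601 value match.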

Across model sizes from 70 million to 12 billion parameters, a clear trend emerged: larger models generally performed better, particularly on more complex tasks such as temporal normalization.

Fine-Tuning Strategies and Their Impacts

Model performance was further examined under two fine-tuning strategies: full-parameter fine-tuning and LoRA (low-rank adaptation). Fine-tuning generally produced a marked improvement in recall on both subtasks, but the gains diminished beyond a certain model size, especially for models with more than 2.8 billion parameters.

LoRA fine-tuning proved particularly practical, training efficiently while still improving performance. Smaller models such as the 70-million-parameter version, however, were limited by their small number of learnable parameters, which may prevent them from capturing the complexity the task requires.
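The parameter argument can be made concrete. LoRA freezes a pretrained weight matrix W and learns a low-rank update, so the layer computes h = Wx + (α/r)·BAx, with A of shape r × d_in and B of shape d_out × r. The sketch below counts the trainable parameters this adds and implements the forward pass in plain Python; the rank r = 8 and the square widths 512 and 5120 (to our understanding, the hidden sizes of Pythia-70M and Pythia-12B) are illustrative choices, not the configuration used in the experiments.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """LoRA trains A (r x d_in) and B (d_out x r) for one frozen d_out x d_in weight."""
    return r * (d_in + d_out)


def full_trainable_params(d_in: int, d_out: int) -> int:
    """Full fine-tuning trains every entry of the d_out x d_in weight."""
    return d_in * d_out


def matvec(m: Matrix, x: Sequence[float]) -> List[float]:
    """Plain-Python matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Sequence[float],
                 alpha: float, r: int) -> List[float]:
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    scale = alpha / r
    return [h + scale * d for h, d in zip(base, delta)]


# Illustrative rank and widths (assumptions, not the experimental configuration).
for width in (512, 5120):
    print(width, lora_trainable_params(width, width, r=8),
          full_trainable_params(width, width))
```

With r = 8, adapting one 512 × 512 projection trains 8,192 parameters instead of 262,144, whereas a 5120 × 5120 projection trains 81,920 instead of 26,214,400. The smallest model therefore has far fewer learnable parameters in absolute terms, consistent with the limitation described above.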

Highlights from the SREDH/AI CUP 2023 Competition

Held on the CodaLab platform, the SREDH/AI CUP 2023 competition drew 721 participants in 291 teams. Teams developed their systems using the OpenDeID v2 corpus, and each participant could make up to six submissions on the final test set.

Performance varied substantially across teams, and especially between subtasks: results on SHI recognition were more consistent than those on temporal normalization. Among the top competitors, 77.2% integrated large language models (LLMs) into their systems, reflecting the current trend toward LLMs in clinical natural language processing (NLP).

Techniques and Approaches: Insights from Top Teams

Top competitors used a variety of strategies, including:

- Ensemble Learning: Many teams combined the outputs of several models in an ensemble to improve performance, particularly for categories with limited training data.

- Hybrid Approaches: Many successful teams paired rule-based systems with LLM-based methods, chiefly to recognize and normalize age-related and temporal SHIs.

- Data Augmentation: Augmenting the training data, especially for underrepresented SHI types, was common. Some teams used generative models such as ChatGPT to create additional training instances, which improved their models’ performance.
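One simple way to combine several systems’ outputs, as in the ensemble approaches listed above, is span-level majority voting. The source does not say which combination rules the teams used, so the sketch below is only an illustration: each system contributes a set of hypothetical `(start, end, label)` tuples, and a span is kept when at least `min_votes` systems predicted it exactly (a strict majority by default).

```python
from collections import Counter
from typing import List, Optional, Set, Tuple

Span = Tuple[int, int, str]  # (start, end, label) within one document, hypothetical format


def majority_vote(predictions: List[Set[Span]],
                  min_votes: Optional[int] = None) -> Set[Span]:
    """Keep spans predicted exactly by at least min_votes systems.

    Defaults to a strict majority of the systems.
    """
    if min_votes is None:
        min_votes = len(predictions) // 2 + 1
    # Each system is a set, so it can vote for a given span at most once.
    votes = Counter(span for system in predictions for span in system)
    return {span for span, count in votes.items() if count >= min_votes}
```

Lowering `min_votes` favors recall and raising it favors precision; weighted votes or per-category model selection are common variants when some SHI types have little training data.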

Commercial LLMs such as OpenAI’s ChatGPT were another prominent theme, and teams also explored other proprietary systems for data augmentation and fine-tuning.

Understanding the Results

The competition results underscored the gap in difficulty between the two subtasks: recognizing and categorizing SHIs versus normalizing temporal information. Average scores showed that temporal normalization was especially difficult, reinforcing the view that sophisticated model designs and innovative techniques are crucial for advancing clinical NLP research. Teams with well-defined methodologies and a clear understanding of their models’ strengths and weaknesses dominated the leaderboard, underscoring the value of strategy and adaptability in machine learning competitions.

The Future of Deidentification in Machine Learning

By providing a comprehensive, well-annotated dataset for evaluating deidentification techniques, the OpenDeID v2 corpus lays the groundwork for future advances in health data privacy and encourages innovation among researchers and competition participants alike. The participants’ efforts to refine their approaches and push AI-driven health research forward bode well for future solutions for medical data privacy.