Datasets Collection, Experimental Environment, and Parameter Setting

Introduction to the Datasets

In image classification and deep learning (DL) research, the choice of dataset can significantly influence experimental outcomes. This study uses three datasets: two widely used benchmarks, CIFAR-10 and ImageNet, and a Custom Dataset tailored to the specific goals of this research.

CIFAR-10 is a well-established benchmark dataset comprising 60,000 color images in 10 categories, with 6,000 images per category. It is split into 50,000 training images and 10,000 test images, each measuring 32 × 32 pixels. Its moderate scale and diverse content make CIFAR-10 a suitable resource for evaluating image classification algorithms. The dataset is available from the official CIFAR-10 homepage.

ImageNet is a large-scale visual database containing over 14 million annotated images across more than 20,000 categories. This experiment uses the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012 subset, which covers 1,000 categories and serves as a standard benchmark for image classification and localization. The dataset is available from the official ImageNet website.

The Custom Dataset was built to meet the particular needs of this research. It is derived from the GitHub project Train_Custom_Dataset, which provides a complete pipeline for data construction and annotation and supports classification, detection, and segmentation tasks. The dataset covers three primary categories (daily objects, office supplies, and electronic devices) and contains 3,600 images in total: 2,700 for training, 450 for validation, and 450 for testing. All images are standardized to 224 × 224 pixels to ensure a consistent input size, and the number of images is balanced across categories. The dataset is available from the project's GitHub repository.
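The 2,700 / 450 / 450 split of 3,600 images corresponds to 75% / 12.5% / 12.5%. The sketch below shows one way to produce such a split while keeping the categories balanced, by splitting each class separately. It is a minimal pure-Python illustration, not the Train_Custom_Dataset project's own tooling; it assumes 1,200 images per category (3,600 divided evenly over three categories), and the `stratified_split` helper and file names are hypothetical.

```python
import random
from collections import defaultdict

def stratified_split(samples, train_frac=0.75, val_frac=0.125, seed=0):
    """Split (path, label) pairs into train/val/test, class by class.

    Splitting each class separately keeps the class proportions equal
    across the three subsets (hypothetical helper for illustration).
    """
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append((path, label))

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for label in sorted(by_class):
        items = by_class[label]
        rng.shuffle(items)
        n_train = round(len(items) * train_frac)
        n_val = round(len(items) * val_frac)
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Hypothetical file list: 1,200 images for each of the three categories.
categories = ["daily_objects", "office_supplies", "electronic_devices"]
samples = [(f"{c}/img_{i:04d}.jpg", c) for c in categories for i in range(1200)]

train, val, test = stratified_split(samples)
print(len(train), len(val), len(test))
```

With 1,200 images per class, each class contributes 900 / 150 / 150 images, giving the 2,700 / 450 / 450 totals reported above.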

Experimental Environment

The experiments are run on a high-performance computer configured for deep learning workloads, with the following specifications:

- CPU: Intel Core i7-12700K with 12 cores, 20 threads, and a base frequency of 3.6 GHz, providing the processing capacity required by the computations in deep learning pipelines.

- Graphics Card: NVIDIA GeForce RTX 3090 with 24 GB of video memory, whose parallel computing capability supports the intensive training and inference of multi-scale convolutional neural networks (MSCNNs).

- Memory: 32 GB of DDR5 RAM, sufficient for handling large datasets without performance bottlenecks.

- Storage: 1 TB NVMe SSD, whose fast read and write speeds improve the efficiency of both training and experiments.

- Operating System: 64-bit Windows 11 Professional, which supports current deep learning frameworks.

- Development Framework: PyTorch 2.0, which provides a flexible platform for implementing the algorithms.
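To make results traceable to the configuration above, the hardware and software environment can be recorded at the start of each run. The following is a minimal sketch, assuming a standard Python installation and, optionally, PyTorch; the `describe_environment` helper is hypothetical and not part of the original study. It uses only `platform`, `os.cpu_count()`, and PyTorch's `torch.cuda.is_available()` and `torch.cuda.get_device_properties()`.

```python
import os
import platform

def describe_environment():
    """Collect basic hardware/software facts for an experiment log
    (hypothetical helper; PyTorch fields are filled only if it is installed)."""
    info = {
        "os": platform.platform(),
        "python": platform.python_version(),
        "logical_cpus": os.cpu_count(),  # counts threads, not physical cores
    }
    try:
        import torch
    except ImportError:
        info["torch"] = None  # PyTorch is not installed in this interpreter
        return info
    info["torch"] = torch.__version__
    info["cuda_available"] = torch.cuda.is_available()
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(0)  # first visible GPU
        info["gpu"] = props.name
        info["gpu_memory_gib"] = round(props.total_memory / 1024**3, 1)
    return info

for key, value in describe_environment().items():
    print(f"{key}: {value}")
```

Because `os.cpu_count()` reports logical processors, a 12-core, 20-thread CPU such as the one listed would be expected to appear as 20.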

Parameter Settings

The parameter settings of the proposed optimized model are summarized below. Because each parameter affects the model's performance, the settings were configured carefully so that the evaluation is accurate and consistent with the research objectives.

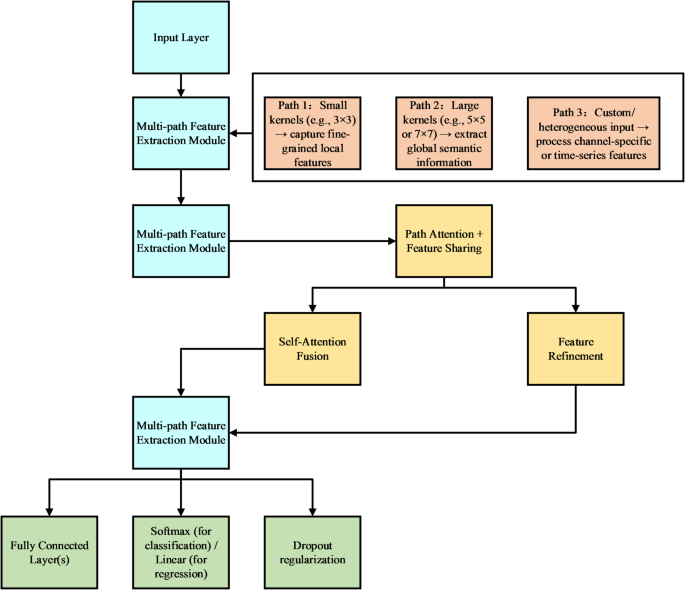

The choice of comparison models is equally important. Swin Transformer, ConvNeXt (A ConvNet for the 2020s), and EfficientNetV2 are selected as baselines, representing a hierarchical vision transformer, a modernized pure convolutional network, and an efficiency-oriented convolutional network, respectively. Comparing against these strong models enables a well-rounded assessment of the proposed approach's improvements in multi-path feature extraction, feature fusion mechanisms, and lightweight design.

Performance Evaluation Metrics

The performance comparison covers two primary dimensions: model performance and computational efficiency. The metrics are:

- Accuracy: A fundamental measure reflecting the model’s classification capability.

- Precision and Recall: These metrics give deeper insight, especially under class imbalance. Precision is the proportion of positive predictions that are correct, while recall is the proportion of actual positives that the model correctly identifies.

- F1 Score: The harmonic mean of precision and recall, which is particularly informative for classification tasks on imbalanced datasets.

- Training Time and Inference Time: These indicators are vital for understanding the model’s efficiency and usability in real-world applications.

- Number of Parameters: This reflects the model’s size and scalability, directly affecting training resources and deployment feasibility.

- GPU Memory Usage: Essential for understanding the model’s hardware resource consumption, especially in multi-path architectures.
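The classification metrics listed above can be computed from per-class counts of true positives, false positives, and false negatives. The section does not state how per-class scores are averaged for the multi-class setting, so the sketch below assumes macro averaging (each class weighted equally); the `classification_metrics` helper and the toy labels are hypothetical.

```python
from collections import Counter

def classification_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall and F1.

    Macro averaging gives every class equal weight, so a missed
    minority class lowers the score as much as a missed majority class.
    """
    classes = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but the prediction was wrong
            fn[t] += 1  # true class t was missed

    precisions, recalls, f1s = [], [], []
    for c in classes:
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0  # harmonic mean
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    n = len(classes)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Toy example in which the under-represented class "c" is never recognized.
y_true = ["a", "a", "a", "a", "b", "b", "b", "c"]
y_pred = ["a", "a", "a", "b", "b", "b", "a", "a"]
print(classification_metrics(y_true, y_pred))
```

In this toy case accuracy is 0.625, while the macro F1 score drops to 4/9 (about 0.44) because class "c" contributes an F1 of zero; this is the kind of gap between accuracy and F1 that matters under class imbalance.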

Together, these metrics show how the optimized model compares with contemporary models in both predictive quality and computational cost.

Simulation Experiment: Model Robustness and Scalability

A simulation experiment is conducted to probe the effectiveness of the optimized model, focusing on two key dimensions: model robustness and scalability. Evaluation metrics include:

- Noise Robustness: Testing the model’s resilience against noisy inputs reflective of real-world scenarios.

- Occlusion Sensitivity: Gauging performance when input data is incomplete or obscured.

- Class Imbalance Impact: Evaluating the model’s ability to identify minority classes in cases of data imbalance.

- Resistance to Adversarial Attacks: Assessing how well the model withstands inputs deliberately perturbed to cause misclassification.

- Data Scalability Efficiency: A measure of how well the model can adapt to larger datasets.

- Task Adaptability and Resource Scalability Requirements: Measuring the model's flexibility across different tasks and how its demand for additional resources grows as it scales.

- Training Parallelism Efficiency: A vital metric for evaluating computational efficiency in multi-processor environments.
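Noise robustness and occlusion sensitivity are commonly measured by perturbing test inputs and comparing accuracy before and after. The section does not specify its noise model or occlusion scheme, so the sketch below assumes additive Gaussian noise and a square occluding patch on grayscale images stored as lists of rows with values in [0, 1]. The helpers `add_gaussian_noise`, `occlude`, and `accuracy_under`, the noise level, the patch size, and the brightness-threshold classifier are all hypothetical stand-ins for the real model and data.

```python
import random

def add_gaussian_noise(image, sigma, rng):
    """Copy of the image with zero-mean Gaussian noise (std sigma), clipped to [0, 1]."""
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]

def occlude(image, top, left, size, fill=0.0):
    """Copy of the image with a size x size square at (top, left) set to `fill`."""
    out = [row[:] for row in image]
    for r in range(top, min(top + size, len(out))):
        for c in range(left, min(left + size, len(out[r]))):
            out[r][c] = fill
    return out

def accuracy_under(perturb, classify, images, labels):
    """Accuracy of `classify` after applying `perturb` to every input image."""
    correct = sum(classify(perturb(img)) == y for img, y in zip(images, labels))
    return correct / len(labels)

def toy_classify(img):
    """Hypothetical stand-in for a trained model: thresholds mean brightness."""
    mean = sum(map(sum, img)) / sum(len(r) for r in img)
    return "bright" if mean > 0.5 else "dark"

rng = random.Random(0)
images = ([[[0.8] * 8 for _ in range(8)] for _ in range(4)]
          + [[[0.2] * 8 for _ in range(8)] for _ in range(4)])
labels = ["bright"] * 4 + ["dark"] * 4

clean = accuracy_under(lambda x: x, toy_classify, images, labels)
noisy = accuracy_under(lambda x: add_gaussian_noise(x, 0.1, rng),
                       toy_classify, images, labels)
patch = accuracy_under(lambda x: occlude(x, 2, 2, 4), toy_classify, images, labels)
full = accuracy_under(lambda x: occlude(x, 0, 0, 8), toy_classify, images, labels)
print(clean, noisy, patch, full)
```

The drop from clean accuracy to perturbed accuracy is the robustness signal. Here a 4 × 4 patch leaves the toy classifier unaffected, while occluding the whole image halves its accuracy. In a PyTorch pipeline the same idea would operate on tensors rather than nested lists.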

Discussion on Performance and Robustness

The performance comparison shows that the optimized model outperforms the baseline models on most metrics, particularly classification accuracy, inference time, and memory consumption. This advantage is attributed mainly to the path cooperation mechanism integrated into the multi-branch parallel architecture, which dynamically weights the features from the different paths to improve the quality of feature fusion.
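The section does not give the formula of the path cooperation mechanism. One common way to weight branch features dynamically is to compute a score per path from the input, normalize the scores with a softmax so the weights are positive and sum to 1, and take the weighted sum of the branch outputs. The pure-Python sketch below illustrates that idea under those assumptions; it is not the authors' mechanism, and `path_scores`, `fuse_paths`, the gate vector, and the feature values are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def path_scores(path_features, gate):
    """Hypothetical gating: score each path by a dot product with a gate vector.
    In a trained network the gate would be learned parameters, so the scores
    (and hence the weights) change with the input features."""
    return [sum(g * x for g, x in zip(gate, f)) for f in path_features]

def fuse_paths(path_features, scores):
    """Weighted sum of equal-length branch features using softmax weights."""
    weights = softmax(scores)
    dim = len(path_features[0])
    fused = [sum(w * f[i] for w, f in zip(weights, path_features)) for i in range(dim)]
    return fused, weights

# Three hypothetical branches (e.g. different kernel sizes) producing 4-d features.
features = [[1.0, 0.0, 2.0, 1.0],
            [0.0, 1.0, 1.0, 3.0],
            [2.0, 2.0, 0.0, 0.0]]

# A zero gate gives equal weights, so the fusion reduces to a plain average.
fused_avg, w_avg = fuse_paths(features, path_scores(features, [0.0, 0.0, 0.0, 0.0]))
# A gate that reacts to the last feature strongly favours the second branch.
fused_dyn, w_dyn = fuse_paths(features, path_scores(features, [0.0, 0.0, 0.0, 10.0]))
print(w_avg, fused_avg)
print(w_dyn, fused_dyn)
```

The contrast between the two calls shows why such weighting is called dynamic: the same branches are fused differently depending on the scores derived from their features, rather than with fixed averaging.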

In the simulation experiments, the optimized model shows particular strengths in noise robustness and adaptability, which can be attributed to its inter-path feature-sharing mechanism. This design enables it to handle real-world challenges such as dynamic tasks and variable resource availability, making it a robust choice for intelligent perception and computing tasks.

Although competing models such as Swin Transformer, ConvNeXt, and EfficientNetV2 each have their own strengths, they fall short in training efficiency and in scalability on complex data structures.

Taken together, the datasets, experimental environment, parameter settings, performance evaluation, and robustness experiments indicate that the optimized model is an advanced solution adaptable to a wide range of practical applications in image classification and beyond.