Exploring the Intersection of Ethics and Machine Learning in Medical Studies

Ethical Foundation of the Study

Conducting medical research requires adherence to strict ethical guidelines to protect the welfare of participants. The study discussed here was approved by the local ethics committee of the Medical Faculty of the University of Leipzig (026/18-ek) and registered with ClinicalTrials.gov (NCT04230603), which provides transparency and facilitates monitoring. Written informed consent, including consent for publication, was obtained from all participating patients, so that they were aware of the study’s nature, risks, and benefits. All methods followed the Declaration of Helsinki, which emphasizes respect for human rights in medical research.

Ensuring Compatibility between Combinations

The integrity of machine learning (ML) studies hinges on clarity and control over different components of the analytical pipeline. A machine learning pipeline can essentially be segmented into four independent components: Data, Preprocessing, Model, and Cross-validation. Each of these components must be controlled to accurately assess the impact of variations in one area without interference from others.

In this study, data order, model state, and cross-validation were held constant across trials so that preprocessing methods could be evaluated in isolation. Any performance difference between runs can then be attributed to the preprocessing technique alone, as elaborated in the detailed checklist presented in Table 1.
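One common way to hold data order constant is to derive every shuffle from a fixed seed. The following is a minimal sketch of that idea, not the study's own code; the seed value and the helper name `shuffled_indices` are assumptions made for illustration.

```python
import numpy as np

SEED = 42  # hypothetical value; any fixed integer works


def shuffled_indices(n_samples: int, seed: int = SEED) -> np.ndarray:
    """Return a reproducible permutation of sample indices."""
    rng = np.random.default_rng(seed)
    return rng.permutation(n_samples)


# Two runs that differ only in preprocessing see the samples in the same order.
order_run_a = shuffled_indices(10)
order_run_b = shuffled_indices(10)
```

Model state can be pinned in a similar way by seeding the framework's random number generators before the model is built, for example with `tf.keras.utils.set_random_seed` in recent TensorFlow versions.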

Sampling a Small but Representative Dataset

The first stage in the analytical framework involved creating a representative subset of the data. This was accomplished with the following key steps:

- Random Selection: 1% of data from each patient and class was randomly selected.

- Kolmogorov–Smirnov Test: This statistical test checked that the empirical distribution of the reduced dataset did not differ significantly from that of the full dataset.

- Iteration for Representation: The two steps above were repeated until all wavelengths in the reduced subset were deemed representative.

With these methods, the small dataset mirrored the full dataset’s characteristics, allowing for the application of diverse preprocessing combinations without introducing bias.
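The sampling loop described above can be sketched as follows. This is an illustration under stated assumptions rather than the study's implementation: the significance level `alpha`, the retry limit `max_tries`, and the function name are hypothetical, and the test is applied to each wavelength separately using SciPy's two-sample Kolmogorov–Smirnov test.

```python
import numpy as np
from scipy.stats import ks_2samp


def sample_representative(spectra, fraction=0.01, alpha=0.05, max_tries=100, seed=0):
    """Draw a random subset whose per-wavelength distributions pass a KS check.

    spectra: array of shape (n_pixels, n_wavelengths) for one patient and class.
    Returns the row indices of the accepted subset.
    """
    rng = np.random.default_rng(seed)
    n_pixels, n_wavelengths = spectra.shape
    n_keep = max(1, int(round(fraction * n_pixels)))
    for _ in range(max_tries):
        idx = rng.choice(n_pixels, size=n_keep, replace=False)
        subset = spectra[idx]
        # Accept only if no wavelength differs significantly from the full data.
        if all(ks_2samp(subset[:, w], spectra[:, w]).pvalue > alpha
               for w in range(n_wavelengths)):
            return idx
    raise RuntimeError("no representative subset found within max_tries")


# Synthetic stand-in for the pixels of one patient and class.
data = np.random.default_rng(1).normal(size=(2000, 4))
idx = sample_representative(data)
```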

Preprocessing

Pipeline Overview

The preprocessing pipeline for the selected dataset consisted of carefully structured steps, crucial for refining data before it undergoes analysis:

- Reading Data: Importing the hyperspectral datacube along with corresponding ground truth labels.

- Scaling: Applying normalization or standardization to bring data values onto a common scale.

- Smoothing: Implementing techniques to reduce noise in data, enhancing clarity.

- Filtering: Removing unwanted artifacts, particularly those from blood and light reflections that could mislead the analysis.

- Patch Extraction: Extracting 3D patches from labeled pixels for training neural networks, with specified patch sizes to ensure uniformity in input shapes.
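The patch extraction step, the last in the list above, can be illustrated with a short NumPy sketch. The patch size of 3, the reflect padding at image borders, and the function name `extract_patches` are assumptions; the source only states that 3D patches of a fixed size are taken around labeled pixels.

```python
import numpy as np


def extract_patches(cube, labeled_pixels, patch_size=3):
    """Cut (patch_size, patch_size, n_bands) patches centred on labeled pixels.

    cube: hyperspectral datacube of shape (height, width, n_bands).
    labeled_pixels: iterable of (row, col) coordinates.
    patch_size: odd spatial edge length of each patch.
    """
    half = patch_size // 2
    # Reflect-pad the spatial axes so border pixels also get full-sized patches.
    padded = np.pad(cube, ((half, half), (half, half), (0, 0)), mode="reflect")
    patches = [padded[r:r + patch_size, c:c + patch_size, :]
               for r, c in labeled_pixels]
    return np.stack(patches)


cube = np.arange(5 * 5 * 4, dtype=float).reshape(5, 5, 4)
patches = extract_patches(cube, [(0, 0), (2, 2)])
```

Every patch has the same shape regardless of where the pixel sits in the image, which is what gives the network uniform input shapes.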

Scaling Techniques

Scaling serves two purposes: it brings data values into a common range, which improves convergence, and it reduces bias caused by individual spectral characteristics. This study employed both normalization and standardization. Figure 2 shows how the different scaling methods alter the spectral characteristics.
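The difference between the two methods can be seen in a small sketch. It assumes that normalization means min-max scaling and that both methods are applied to each spectrum separately; the source does not state either detail, so both are illustrative choices.

```python
import numpy as np


def normalize(spectra, eps=1e-12):
    """Min-max scale each spectrum (row) to the range [0, 1]."""
    lo = spectra.min(axis=1, keepdims=True)
    hi = spectra.max(axis=1, keepdims=True)
    return (spectra - lo) / (hi - lo + eps)


def standardize(spectra, eps=1e-12):
    """Shift each spectrum (row) to zero mean and scale it to unit variance."""
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, keepdims=True)
    return (spectra - mean) / (std + eps)


spectra = np.array([[1.0, 2.0, 3.0], [10.0, 20.0, 40.0]])
normalized = normalize(spectra)
standardized = standardize(spectra)
```

After either transform, the two example spectra occupy the same value range even though their raw intensities differ by an order of magnitude.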

Smoothing Techniques

Noise was reduced with 1D, 2D, and 3D smoothing methods that target the spectral and/or spatial dimensions of the data. Three smoothing filters (Median Filter, Gaussian Filter, and Savitzky-Golay Filter) were chosen based on their effectiveness in enhancing signal quality and preserving crucial structural information.

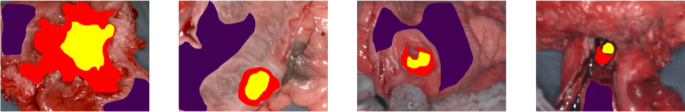
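As a rough illustration of how the three filters map onto 1D, 2D, and 3D smoothing, the sketch below applies SciPy implementations to a synthetic datacube. The window sizes, polynomial order, and sigma values are placeholders, not the parameters used in the study.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, median_filter
from scipy.signal import savgol_filter

rng = np.random.default_rng(0)
cube = rng.random((8, 8, 20))  # synthetic (height, width, bands) datacube

# 1D: smooth only along the spectral axis (last axis).
median_1d = median_filter(cube, size=(1, 1, 5))
savgol_1d = savgol_filter(cube, window_length=7, polyorder=2, axis=-1)

# 2D: smooth only across the two spatial axes, band by band.
gaussian_2d = gaussian_filter(cube, sigma=(1.0, 1.0, 0.0))

# 3D: smooth across spatial and spectral axes together.
gaussian_3d = gaussian_filter(cube, sigma=1.0)
```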

Filtering for Artifacts

Given the challenges faced in pixel classification, particularly with misclassified blood and light reflection pixels, filtering became a pivotal part of the preprocessing stage. A method was employed to segregate these pixels by analyzing their spectral characteristics, utilizing parameters derived from previous studies. The filtering process facilitated a more precise classification of healthy and cancerous tissues in the dataset.
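The source does not give the filtering rule or its parameters, so the following is only a generic sketch of threshold-based artifact masking. The two rules (very high mean reflectance for light reflections, very low reflectance in one band for blood), the function name, and all numeric values are hypothetical stand-ins for the published parameters.

```python
import numpy as np


def artifact_mask(cube, reflection_threshold, blood_band, blood_threshold):
    """Return a boolean (height, width) mask that is True for pixels to keep.

    All three parameters are hypothetical stand-ins for the published values.
    """
    mean_reflectance = cube.mean(axis=2)
    is_reflection = mean_reflectance > reflection_threshold  # very bright pixels
    is_blood = cube[:, :, blood_band] < blood_threshold      # strong absorption in one band
    return ~(is_reflection | is_blood)


cube = np.full((2, 2, 3), 0.5)
cube[0, 0, :] = 0.95   # simulated light reflection
cube[1, 1, 1] = 0.05   # simulated blood absorption in band 1
mask = artifact_mask(cube, reflection_threshold=0.9, blood_band=1, blood_threshold=0.1)
```

Pixels where the mask is False would be excluded before patch extraction and training.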

Addressing Dataset Imbalance

With the dataset comprising 477,670 cancerous and over 4 million non-malignant pixels, a significant imbalance presented itself. This was addressed using class weights during model training, enabling the algorithm to focus on underrepresented classes. Additionally, sample weights were considered to further balance training outcomes, ensuring that even patients with smaller cancer areas could contribute meaningfully to the model’s generalization capability.
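To give a sense of scale, the sketch below computes class weights with the widely used "balanced" heuristic, in which each class is weighted inversely to its frequency. The heuristic itself is an assumption, since the source does not say how the weights were derived, and the non-malignant count is a placeholder because the text only says "over 4 million".

```python
n_cancer = 477_670           # from the text
n_non_malignant = 4_000_000  # placeholder: the text only says "over 4 million"

counts = {"non_malignant": n_non_malignant, "cancer": n_cancer}
total = sum(counts.values())

# "Balanced" heuristic: weight_c = total / (n_classes * count_c).
class_weight = {name: total / (len(counts) * n) for name, n in counts.items()}
```

With these weights, each class contributes the same total weight to the loss, so the rarer cancerous pixels are not drowned out. In Keras, such values can be passed to `Model.fit` through its `class_weight` argument (keyed by integer class index), and per-sample values through `sample_weight`.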

Comprehensive Testing of Combinations

In total, the study explored 1,584 combinations of various preprocessing techniques. Figure 4 illustrates this systematic approach to processing hyperspectral imaging data, delineating different parameters for scaling, smoothing, and filtering techniques. This meticulous organization serves to optimize the data handling process before analysis.
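A grid of preprocessing combinations like this is typically enumerated as a Cartesian product of the options for each step. The sketch below uses a deliberately tiny, hypothetical grid to show the mechanism; it is not the study's parameter set and does not reproduce the 1,584 combinations.

```python
from itertools import product

# Hypothetical, much smaller grid; the study's actual options are shown in its Figure 4.
grid = {
    "scaling": ["normalization", "standardization"],
    "smoothing": [None, "median", "gaussian", "savitzky_golay"],
    "filtering": [False, True],
}

# One dict per combination, e.g. {"scaling": ..., "smoothing": ..., "filtering": ...}.
combinations = [dict(zip(grid, values)) for values in product(*grid.values())]
```

The number of combinations is the product of the option counts per step, which is why adding a few parameter values per technique quickly leads to totals in the thousands.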

Training Architecture and Validation Strategy

Given the limited size of the dataset, a pixel-wise classification strategy was adopted to increase the number of available samples. The Inception-based 3D-CNN architecture was selected for its effectiveness in processing data with diverse feature maps. The study used Leave-One-Out Cross-Validation (LOOCV), in which one unit is held out for testing in each fold while the model is trained on the remaining data, so that every unit is tested exactly once.
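With pixel-wise samples, leave-one-out splitting is usually done at the patient level so that pixels from the same patient never appear in both training and test sets. The sketch below assumes that this is the unit held out here; the function name and the patient IDs are made up for illustration.

```python
import numpy as np


def leave_one_patient_out(patient_ids):
    """Yield (held_out_patient, train_idx, test_idx) for every patient."""
    patient_ids = np.asarray(patient_ids)
    for patient in np.unique(patient_ids):
        test_mask = patient_ids == patient
        yield patient, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)


pixel_patient = ["p1", "p1", "p2", "p3", "p3", "p3"]  # made-up patient ID per pixel
folds = list(leave_one_patient_out(pixel_patient))
```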

Parameters and Infrastructure

The combinations were run using Python and TensorFlow. Key training parameters, such as the optimizer, loss function, and epoch limit, were established up front, and early stopping was used to prevent overfitting.
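The logic behind early stopping can be shown without any framework. The sketch below is a plain-Python illustration of the usual patience rule; the patience value and the loss history are invented for the example, and in TensorFlow the same behaviour is normally obtained with the `tf.keras.callbacks.EarlyStopping` callback.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the epoch at which training would stop."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # patience never exhausted


history = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]  # made-up validation losses
stop_epoch = early_stopping_epoch(history, patience=3)
```

Here training stops at epoch index 5, after three consecutive epochs without improvement over the best loss of 0.60, so the later value of 0.50 is never reached.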

In essence, this study combines ethical diligence with a systematic machine learning methodology, aiming to improve diagnostic accuracy in medical imaging. Its focus on detailed preprocessing, representative sampling, and robust validation provides a basis for future work at the intersection of technology and healthcare.