Exploring the DeepFuse Framework: A Revolutionary Approach to Multimodal Craniofacial Imaging

The DeepFuse framework is an architecture for craniofacial analysis that integrates multimodal imaging data to address two tasks: cephalometric landmark detection and treatment outcome prediction. It processes different types of craniofacial images, such as lateral cephalograms, cone beam computed tomography (CBCT) volumes, and digital dental models, and extracts information from them for clinical applications.

Framework Overview: Three Key Components

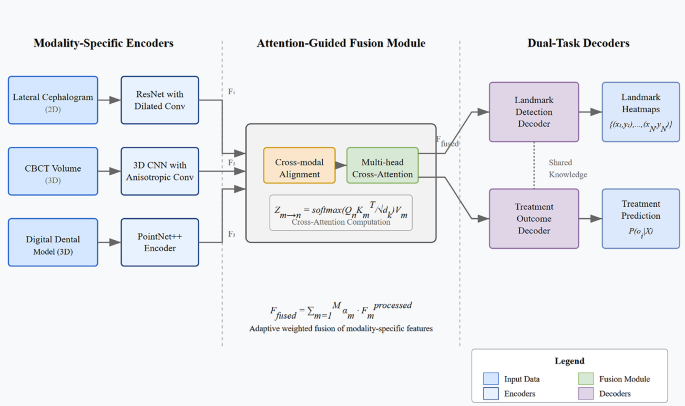

The DeepFuse framework is composed of three principal elements: modality-specific encoders, an attention-guided fusion module, and dual-task decoders. The three-part structure follows from the observation that different imaging modalities yield complementary insights into craniofacial structures and therefore need distinct processing pathways before they are integrated.

Modality-Specific Encoders

The framework accepts three input modalities: lateral cephalograms (2D), CBCT volumes (3D), and digital dental models (3D). Each modality is processed by a dedicated encoder network tailored to the characteristics of its data type.

- For lateral cephalograms, the encoder uses a modified ResNet architecture equipped with dilated convolutions. This design captures multi-scale features while retaining the spatial resolution needed for precise landmark localization.
- The CBCT encoder employs a 3D CNN with anisotropic convolutions to process the volumetric data efficiently. Anisotropic kernels account for the fact that voxel dimensions in clinical CBCT scans can differ between axes.
- Dental model encoders use PointNet++, an architecture suited to unordered point cloud data, which is how the morphology of dental surfaces is represented.
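The effect of dilation on the cephalogram encoder's field of view can be checked with a little arithmetic. The sketch below is not taken from DeepFuse; the layer stacks are hypothetical and assume stride 1 throughout. It compares the receptive field of three plain 3x3 layers with three layers whose dilation doubles each time.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span, in input pixels, covered by one dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def receptive_field(layers) -> int:
    """Receptive field of stacked (kernel_size, dilation) layers, all with stride 1."""
    field = 1
    for kernel_size, dilation in layers:
        field += effective_kernel_size(kernel_size, dilation) - 1
    return field


# Hypothetical stacks: same number of weights, different dilation schedules.
plain = receptive_field([(3, 1), (3, 1), (3, 1)])
dilated = receptive_field([(3, 1), (3, 2), (3, 4)])
print(plain, dilated)  # 7 15
```

Because no layer uses striding or pooling, the feature map keeps the input resolution while each output position sees a wider context, which is the property the cephalogram encoder relies on.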

Attention-Guided Fusion Module

One of the main innovations of DeepFuse is its attention-guided fusion module. This component dynamically integrates features from different modalities and accounts for their varying levels of reliability and relevance. Instead of relying on fixed weights, the module utilizes a multi-head cross-attention mechanism, allowing the framework to prioritize complementary features while mitigating the limitations of individual modalities, such as suboptimal contrast in cephalograms or artifacts in CBCT images.

Dual-Task Decoders

After fusion, the features split into two separate decoder streams, one for landmark detection and one for treatment outcome prediction. The two tasks share the same anatomical basis, but each benefits from feature refinement specific to its objective. The landmark detection decoder generates heatmaps for the target landmarks, and the treatment prediction decoder outputs probability distributions over potential treatment outcomes.
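To make the two output types concrete, here is a minimal sketch of how such outputs are commonly read. It assumes one heatmap per landmark and a vector of raw scores (logits) per patient; the array sizes and function names are illustrative and are not DeepFuse's own.

```python
import numpy as np


def heatmaps_to_landmarks(heatmaps):
    """Convert (K, H, W) heatmaps to a (K, 2) array of (row, col) peak positions."""
    k, h, w = heatmaps.shape
    flat_peaks = heatmaps.reshape(k, -1).argmax(axis=1)
    rows, cols = np.unravel_index(flat_peaks, (h, w))
    return np.stack([rows, cols], axis=1)


def softmax(logits):
    """Turn raw scores into a probability distribution."""
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return exp / exp.sum()


# Toy heatmaps with a single peak each (hypothetical 8x8 resolution).
heatmaps = np.zeros((2, 8, 8))
heatmaps[0, 3, 5] = 1.0
heatmaps[1, 6, 1] = 1.0
landmarks = heatmaps_to_landmarks(heatmaps)

# Toy logits for three hypothetical treatment outcomes.
outcome_probs = softmax(np.array([2.0, 0.5, -1.0]))
```

Taking the argmax gives pixel-level positions only; sub-pixel refinement, if needed, would be a separate step.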

Multi-Source Data Input and Preprocessing

In applying the DeepFuse framework, preprocessing becomes a vital step. The architecture accommodates multiple imaging modalities common in orthodontic and maxillofacial diagnostics, necessitating specific preprocessing techniques for each type to optimize performance.

Lateral Cephalograms

For cephalograms, preprocessing aims to enhance landmark visibility. The initial step typically applies contrast-limited adaptive histogram equalization (CLAHE), which improves local contrast while limiting noise amplification. The result is clearer anatomical features, which accurate landmark detection depends on.
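The contrast-limiting idea behind CLAHE can be illustrated on a single image tile. The sketch below is a simplification and not the DeepFuse preprocessing code: full CLAHE applies this kind of clipped equalization to every tile of a grid and interpolates between neighbouring tiles, which is omitted here. The function name, `clip_limit` value, and tile size are assumptions.

```python
import numpy as np


def clip_limited_equalize(tile, clip_limit=4.0, n_bins=256):
    """Histogram-equalize one uint8 tile after clipping its histogram."""
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(np.float64)
    # Cap every bin at clip_limit times the average bin count.
    ceiling = clip_limit * tile.size / n_bins
    excess = np.clip(hist - ceiling, 0, None).sum()
    # Spread the clipped counts evenly over all bins (single pass, a common simplification).
    hist = np.minimum(hist, ceiling) + excess / n_bins
    cdf = np.cumsum(hist)
    cdf /= cdf[-1]
    lookup = np.round(cdf * (n_bins - 1)).astype(np.uint8)
    return lookup[tile]


# A low-contrast toy tile: intensities confined to the range 100..130.
rng = np.random.default_rng(0)
tile = rng.integers(100, 131, size=(64, 64), dtype=np.uint8)
enhanced = clip_limited_equalize(tile)
```

Clipping bounds the slope of the intensity mapping, so nearly uniform regions are not stretched as aggressively as they would be under plain histogram equalization.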

CBCT Volumes

CBCT scans present unique challenges due to their inherent susceptibility to artifacts and noise, including beam-hardening artifacts. To counter this, DeepFuse implements a modified 3D anisotropic diffusion filter aimed at reducing noise while preserving anatomical boundaries. Additionally, a metal artifact reduction (MAR) algorithm is used, specifically developed to handle streak artifacts frequently encountered in scans of patients with orthodontic appliances.
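As a rough illustration of edge-preserving smoothing, the following sketch implements classic Perona-Malik anisotropic diffusion on a 3D array. It is not the modified filter that DeepFuse uses, whose details are not given here, and it ignores voxel spacing; the parameter names and values (`kappa`, `step`, `n_iter`) are assumptions chosen for the toy volume.

```python
import numpy as np


def anisotropic_diffusion_3d(volume, n_iter=10, kappa=0.1, step=0.1):
    """Perona-Malik diffusion with a 6-neighbour scheme and zero-flux borders."""
    vol = volume.astype(np.float64).copy()
    for _ in range(n_iter):
        update = np.zeros_like(vol)
        for axis in range(3):
            # Differences between each voxel and its next neighbour along this axis.
            diff = np.diff(vol, axis=axis)
            # Edge-stopping function: close to 1 for small differences, close to 0 at edges.
            flux = diff * np.exp(-((diff / kappa) ** 2))
            pad_after = [(0, 0)] * 3
            pad_after[axis] = (0, 1)
            pad_before = [(0, 0)] * 3
            pad_before[axis] = (1, 0)
            # Net change = flux from the next neighbour minus flux to the previous one.
            update += np.pad(flux, pad_after) - np.pad(flux, pad_before)
        vol += step * update  # step <= 1/6 keeps the 3D scheme stable
    return vol


# Toy volume: a sharp boundary at index 8 along the last axis, plus mild noise.
rng = np.random.default_rng(0)
clean = np.zeros((16, 16, 16))
clean[:, :, 8:] = 1.0
noisy = clean + rng.normal(scale=0.02, size=clean.shape)
smoothed = anisotropic_diffusion_3d(noisy)
```

With `kappa` set between the noise amplitude and the boundary contrast, noise inside each region is averaged out while almost nothing diffuses across the boundary.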

Digital Dental Models

Digital dental models, often represented as triangular meshes or point clouds, also go through a series of normalization procedures including uniform resampling and alignment. This ensures each model aligns spatially with others, providing a cohesive dataset for further processing. The preprocessing pipeline also includes simplification of the mesh to balance computational efficiency with detail retention.
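A minimal sketch of this kind of normalization for point clouds follows. It assumes that alignment is reduced to centring and unit scaling, and that uniform resampling is done with farthest point sampling; both are illustrative choices rather than the documented DeepFuse procedure, and the function names are hypothetical.

```python
import numpy as np


def normalize_points(points):
    """Centre the cloud at the origin and scale it into the unit sphere."""
    centred = points - points.mean(axis=0)
    return centred / np.linalg.norm(centred, axis=1).max()


def farthest_point_sample(points, n_samples, seed=0):
    """Select n_samples points that are spread evenly over the cloud."""
    rng = np.random.default_rng(seed)
    chosen = [int(rng.integers(len(points)))]
    # Distance from every point to its nearest already-chosen point.
    dist = np.linalg.norm(points - points[chosen[0]], axis=1)
    for _ in range(n_samples - 1):
        nxt = int(dist.argmax())
        chosen.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(points - points[nxt], axis=1))
    return points[chosen]


# Toy cloud with an arbitrary offset and anisotropic extent.
rng = np.random.default_rng(1)
cloud = rng.normal(size=(500, 3)) * np.array([10.0, 2.0, 1.0]) + np.array([5.0, -3.0, 7.0])
resampled = farthest_point_sample(normalize_points(cloud), n_samples=64)
```

Every model then has the same number of points in the same coordinate range, which is what a point cloud encoder expects as input.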

Automated Registration Process

Accurate registration of the various modalities plays a significant role in the DeepFuse framework. An automated process identifies shared anatomical landmarks (e.g., nasion, sella) across modalities, providing the spatial alignment that feature fusion requires. The registration process comprises four steps: landmark identification, transformation estimation to align the modalities, intensity-based refinement for local accuracy, and quality assessment of the result. The goal is to keep the mean target registration error (TRE) within clinically acceptable limits so that the fused features correspond to the same anatomy.
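The transformation estimation and quality assessment steps can be sketched with the Kabsch algorithm, a standard least-squares method for rigid alignment of paired landmarks. This is an assumption about how such a step could look, not the DeepFuse implementation; the landmark coordinates below are synthetic, and the intensity-based refinement step is omitted.

```python
import numpy as np


def estimate_rigid_transform(source, target):
    """Least-squares rotation R and translation t so that target ~ source @ R.T + t."""
    src_mean, tgt_mean = source.mean(axis=0), target.mean(axis=0)
    covariance = (source - src_mean).T @ (target - tgt_mean)
    u, _, vt = np.linalg.svd(covariance)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    translation = tgt_mean - rotation @ src_mean
    return rotation, translation


def target_registration_error(source, target, rotation, translation):
    """Mean distance between mapped source points and their true positions."""
    mapped = source @ rotation.T + translation
    return float(np.linalg.norm(mapped - target, axis=1).mean())


# Synthetic ground truth: 30 degree rotation about z plus a shift.
angle = np.deg2rad(30.0)
true_rotation = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
true_translation = np.array([4.0, -2.0, 1.5])

rng = np.random.default_rng(0)
fiducials = rng.normal(size=(4, 3)) * 50.0     # landmarks used to fit the transform
held_out = rng.normal(size=(3, 3)) * 50.0      # separate points used to measure TRE

rotation, translation = estimate_rigid_transform(
    fiducials, fiducials @ true_rotation.T + true_translation)
tre = target_registration_error(
    held_out, held_out @ true_rotation.T + true_translation, rotation, translation)
```

TRE is measured on points that were not used to fit the transform, which is why the example keeps the fiducials and the held-out targets separate.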

Multimodal Feature Extraction and Fusion Mechanism

To fully exploit the strengths of each imaging modality, the DeepFuse framework employs a meticulous feature extraction and fusion methodology. Each modality’s encoder captures unique features pertinent to its specific data characteristics.

Individual Feature Extraction

- Cephalogram Encoder: Uses a modified ResNet-50 with dilated convolutions to maintain spatial resolution while capturing hierarchical anatomical information.
- CBCT Encoder: A 3D DenseNet architecture processes the volumetric data and captures features across anisotropic voxel dimensions.
- Dental Encoder: PointNet++ captures geometry and topology from the dental models, using hierarchical layers that preserve fine surface detail.
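The reason a PointNet-style encoder can handle unordered point clouds is that it applies the same small network to every point and then pools with a symmetric function. The sketch below shows only that core idea with random stand-in weights; it is not PointNet++ itself, which applies such blocks hierarchically over local neighbourhoods, and the layer widths are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
# Random matrices stand in for learned weights (hypothetical widths 3 -> 32 -> 64).
w1 = rng.normal(size=(3, 32))
w2 = rng.normal(size=(32, 64))


def global_feature(points):
    """Shared per-point MLP followed by max-pooling over all points."""
    hidden = np.maximum(points @ w1, 0.0)       # ReLU
    per_point = np.maximum(hidden @ w2, 0.0)    # ReLU
    return per_point.max(axis=0)                # symmetric: ignores point order


cloud = rng.normal(size=(128, 3))
shuffled = cloud[rng.permutation(len(cloud))]
order_invariant = np.allclose(global_feature(cloud), global_feature(shuffled))
```

Shuffling the points changes the order of the per-point features but not their maximum, so the pooled descriptor stays the same.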

Fusion Mechanism

Once features are extracted, they undergo alignment in a common embedding space via a learned alignment module. Thus, features from each modality can be meaningfully integrated despite their inherent differences.
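One simple way to picture a common embedding space is a per-modality linear projection followed by normalization. The sketch below makes that assumption; the actual form of the learned alignment module is not specified here. The token counts, feature widths, and `EMBED_DIM` are hypothetical, and random matrices stand in for learned weights.

```python
import numpy as np

rng = np.random.default_rng(0)
EMBED_DIM = 64  # hypothetical shared width

# Hypothetical encoder outputs: (number of tokens, feature width) per modality.
features = {
    "cephalogram": rng.normal(size=(49, 2048)),
    "cbct": rng.normal(size=(27, 512)),
    "dental_model": rng.normal(size=(16, 256)),
}

# One projection matrix per modality; random values stand in for learned weights.
projections = {
    name: rng.normal(size=(feat.shape[1], EMBED_DIM)) / np.sqrt(feat.shape[1])
    for name, feat in features.items()
}


def layer_norm(x, eps=1e-5):
    """Normalize each token to zero mean and unit variance across its features."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


aligned = {name: layer_norm(feat @ projections[name]) for name, feat in features.items()}
tokens = np.concatenate(list(aligned.values()), axis=0)
```

After projection, every modality contributes tokens of the same width and scale, so a downstream fusion step can compare them directly.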

The attention-guided fusion mechanism uses multi-head cross-attention, in which the features of one modality attend to the most relevant features of the others. The resulting weights are not fixed: they adjust dynamically according to the information quality assessed for each modality.
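The following is a minimal NumPy sketch of multi-head cross-attention in its standard scaled dot-product form, with one modality supplying the queries and another supplying the keys and values. It is illustrative only: the token counts, embedding width, and random weights are assumptions, and any quality-assessment logic DeepFuse adds on top is not shown.

```python
import numpy as np


def softmax(x, axis=-1):
    exp = np.exp(x - x.max(axis=axis, keepdims=True))
    return exp / exp.sum(axis=axis, keepdims=True)


def multi_head_cross_attention(query_tokens, context_tokens, w_q, w_k, w_v, w_o, n_heads):
    """Let query_tokens (n_q, d) attend to context_tokens (n_c, d)."""
    n_q, d = query_tokens.shape
    n_c = context_tokens.shape[0]
    d_head = d // n_heads

    def split_heads(x, n):
        # (n, d) -> (n_heads, n, d_head)
        return x.reshape(n, n_heads, d_head).transpose(1, 0, 2)

    q = split_heads(query_tokens @ w_q, n_q)
    k = split_heads(context_tokens @ w_k, n_c)
    v = split_heads(context_tokens @ w_v, n_c)
    # Attention weights per head: (n_heads, n_q, n_c), each row sums to 1.
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))
    merged = (weights @ v).transpose(1, 0, 2).reshape(n_q, d)
    return merged @ w_o, weights


rng = np.random.default_rng(0)
d_model, n_heads = 32, 4                          # hypothetical sizes
ceph_tokens = rng.normal(size=(10, d_model))      # queries: cephalogram features
cbct_tokens = rng.normal(size=(6, d_model))       # keys and values: CBCT features
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model)
                      for _ in range(4))

fused, weights = multi_head_cross_attention(
    ceph_tokens, cbct_tokens, w_q, w_k, w_v, w_o, n_heads)
```

Each row of `weights` shows how strongly one cephalogram token draws on each CBCT token, which is where input-dependent rather than fixed weighting comes from.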

Conclusion

The DeepFuse framework offers a structured approach to integrating multimodal craniofacial imaging data for landmark detection and treatment outcome prediction. By processing each modality with a dedicated encoder and fusing the resulting features through attention, it illustrates how machine learning can support clinical practice in orthodontics and maxillofacial care. The architecture is intended to improve diagnostic accuracy and to remain adaptable to future advances in imaging technology.