Segmentation of Speech Turns into Speech Acts

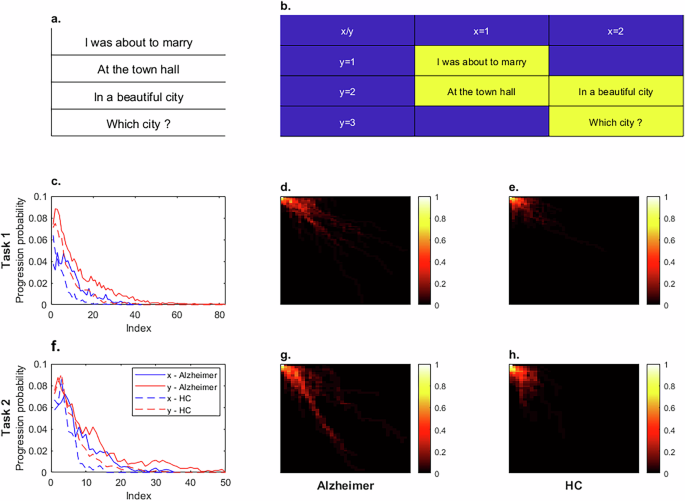

Within the 2TK (Two-Dimensional Topological Kinetics) framework, conversational analysis begins by breaking speech turns down into minimal units of information, known as speech acts. Each speech act is treated as a small, semantically coherent block, which differs from the traditional syntactic segmentation into sentences. The following example is drawn from a dialogue between an interviewer (E) and a participant (P).

For instance, consider a turn from the participant (P2) that can be segmented into two distinct speech acts:

- P2a: This act reflects hesitation and uncertainty, as captured in the phrase, “Um, I’m not sure…”

- P2b: A separate act emerges that conveys surprise or lack of readiness with the statement, “I feel a bit caught off guard.”

Topological Encoding

In the 2TK model, every speech act, denoted as ( \kappa ), is assigned a specific address ( \xi ) that defines its location within the exchange. This address is expressed as ( (x,y) ), capturing two essential dimensions:

- (x): Represents the axis of thematic progression, indicating when a new theme or sub-theme is introduced or linked to a previously discussed theme.

- (y): Corresponds to the axis of informational depth, marking moments when clarifications, details, or examples are solicited regarding an ongoing topic.

Additionally, the ( \varepsilon ) index acts as a temporal marker, organizing the speech acts in their chronological sequence.

To illustrate, let’s analyze another dialogue example between E and P:

- E1 poses a request for the participant to recount “the best memory,” thus initiating a new theme. This act corresponds to ( (x=1,y=1) ).

- P2a continues along the same thematic line by mentioning an initial idea (“I won’t say our wedding”), causing ( y ) to increment to ( (y=2) ) for further elaboration.

- P2b introduces a new perspective (“the liberation”), shifting the ( x ) value from 1 to 2 while maintaining ( y ) at ( (y=2) ).

- E3 seeks clarification (“Liberation from?”), refining the existing theme ( (x=2) ) and increasing ( y ) to ( (y=3) ).

- P4 responds (“Well, the war”), advancing the depth further to ( (y=4) ) within the same thematic context ( (x=2) ).
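The addresses listed above can be written down as a small data structure. The following is a minimal sketch, not the authors' implementation: the `SpeechAct` class and the `moves` helper are hypothetical names, and the ( \varepsilon ) values for E3 and P4 (4 and 5) are assumed from the order of the acts, since the text only states them for E1 and P2b.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeechAct:
    label: str    # speaker and turn, e.g. "P2b"
    epsilon: int  # chronological index
    x: int        # thematic progression
    y: int        # informational depth


# Addresses taken from the worked example; epsilon for E3 and P4 is assumed.
dialogue = [
    SpeechAct("E1", 1, 1, 1),
    SpeechAct("P2a", 2, 1, 2),
    SpeechAct("P2b", 3, 2, 2),
    SpeechAct("E3", 4, 2, 3),
    SpeechAct("P4", 5, 2, 4),
]


def moves(acts):
    """Label each transition between consecutive acts by the axis that changes."""
    ordered = sorted(acts, key=lambda a: a.epsilon)
    labels = []
    for prev, cur in zip(ordered, ordered[1:]):
        dx, dy = cur.x - prev.x, cur.y - prev.y
        if dx and dy:
            labels.append("both")
        elif dx:
            labels.append("lateral")
        elif dy:
            labels.append("vertical")
        else:
            labels.append("none")
    return labels


print(moves(dialogue))
```

Read this way, the example consists of one lateral move (P2a to P2b) and three vertical ones.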

Matrix Conversion

Once situated within the topological space through their addresses ( \xi = (x,y) ), these speech acts are transitioned into a matrix format. Specifically, a two-dimensional matrix is created where columns represent the varying ( x ) values (thematic progression) and rows reflect different ( y ) values (elaboration).

Each cell within this matrix contains identifiers for the speaker, followed by the temporal position ( (\varepsilon) ) of the speech act. For example, in the previously mentioned dialogue, where ( x ) and ( y ) adopt values ( {1,2} ) and ( {1,2,3,4} ) respectively, the resulting matrix may look as follows:

- The cell ( (x=1,y=1) ) includes ( \text{E1(1)} ), marking the speech act performed by E at ( \varepsilon = 1 ).

- The ( (x=2,y=2) ) cell represents ( \text{P2b(3)} ), denoting the action at ( \varepsilon = 3 ).

Notably, the presence of empty cells can convey thematic coherence. If cell ( (x=2,y=2) ) is filled while ( (x=2,y=1) ) remains vacant, it signals a connection returning to the original theme ( (x=1,y=1) ) instead of indicating a discontinuous thematic shift.

Thus, this annotated topological matrix provides a structural map of a conversation that captures thematic anchoring ( (x) ), degree of elaboration ( (y) ), and chronological order ( (\varepsilon) ). Because the abstraction no longer depends on the verbatim transcript, it lends itself to automated analyses such as computing transition probabilities or building image representations for convolutional neural networks.
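The conversion from addresses to an annotated matrix can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name `to_matrix` is hypothetical, empty cells are represented by empty strings, the ( \varepsilon ) values for E3 and P4 are inferred from the order of the acts, and acts that share a cell are joined with a comma, a case the text does not specify.

```python
# Each act is (label, epsilon, x, y); epsilon for E3 and P4 is assumed.
acts = [
    ("E1", 1, 1, 1),
    ("P2a", 2, 1, 2),
    ("P2b", 3, 2, 2),
    ("E3", 4, 2, 3),
    ("P4", 5, 2, 4),
]


def to_matrix(acts):
    """Build a grid with rows indexed by y and columns indexed by x."""
    max_x = max(x for _, _, x, _ in acts)
    max_y = max(y for _, _, _, y in acts)
    grid = [["" for _ in range(max_x)] for _ in range(max_y)]
    for label, eps, x, y in acts:
        entry = f"{label}({eps})"
        current = grid[y - 1][x - 1]
        # Assumption: several acts in one cell are listed together.
        grid[y - 1][x - 1] = entry if not current else f"{current}, {entry}"
    return grid


matrix = to_matrix(acts)
for y, row in enumerate(matrix, start=1):
    print(f"y={y}:", row)
```

In the resulting grid, the cell ( (x=2,y=1) ) stays empty while ( (x=2,y=2) ) holds P2b(3), which is the configuration discussed above.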

Resolving Topological Ambiguity

The 2TK framework resolves ambiguity with a purely topological criterion that is independent of any discursive annotation. The first speech act attached to a super-ordinate element (its anchor) at ( (x,y) ) is positioned at ( (x,y+1) ) relative to that anchor. Subsequent corroborative acts are mapped laterally with increasing ( x ) values, so placement remains clear and deterministic even under significant pragmatic ambiguity.

In some conversations, a speech act may both refine an established theme and introduce a collateral sub-theme, which complicates the usual “vertical versus horizontal” rule. When such ambiguity arises, a provability matrix can be employed: a symmetric table that records the presence (1) or absence (0) of justificatory links between pairs of speech acts.
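A symmetric 0/1 table of this kind can be sketched in a few lines. The function name `provability_matrix` and the links used below are purely illustrative assumptions; the text does not say which pairs of acts are linked or how links are identified.

```python
def provability_matrix(labels, links):
    """Return a symmetric table with 1 where two acts share a justificatory link."""
    index = {label: i for i, label in enumerate(labels)}
    n = len(labels)
    table = [[0] * n for _ in range(n)]
    for a, b in links:
        i, j = index[a], index[b]
        table[i][j] = 1
        table[j][i] = 1  # symmetry: a link between a and b is recorded both ways
    return table


labels = ["E1", "P2a", "P2b", "E3", "P4"]
# Hypothetical links, chosen only to illustrate the structure.
links = [("E1", "P2a"), ("E1", "P2b"), ("P2b", "E3"), ("E3", "P4")]
table = provability_matrix(labels, links)
for label, row in zip(labels, table):
    print(label, row)
```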

Filmstrip Generation

Filmstrips were generated in Python (v3.12.4): each participant’s topological matrix is translated into a sequence of images that jointly represents temporal ( (\varepsilon) ) and spatial ( (x,y) ) progression. First, the maximum dimensions for ( x ) and ( y ) are determined from the stored matrices. Then, for each participant, each non-empty cell ( (x,y) ) receives its own frame in which every cell is set to zero except the relevant one, indicating that at time ( \varepsilon ) the conversation occupies position ( (x,y) ).

These frames are ordered chronologically by their ( \varepsilon ) values, yielding a filmstrip that shows how the conversation evolves. In total, 143 filmstrips were generated from conversations recorded during autobiographical recall tasks.
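The frame-by-frame construction described above can be sketched as follows. This is a minimal illustration rather than the original code: the function name `make_filmstrip` is hypothetical, frames are plain nested lists instead of image files, and the active cell is marked with the value 1, which the text does not specify.

```python
def make_filmstrip(acts, max_x, max_y):
    """Return one frame per speech act, ordered by epsilon.

    acts: iterable of (epsilon, x, y) tuples with 1-based coordinates.
    Each frame is a max_y-by-max_x grid of zeros with a single active cell.
    """
    frames = []
    for _eps, x, y in sorted(acts):  # sorting tuples orders them by epsilon
        frame = [[0] * max_x for _ in range(max_y)]
        frame[y - 1][x - 1] = 1  # assumed marker value for the occupied cell
        frames.append(frame)
    return frames


# Positions from the worked dialogue example, given out of order on purpose.
acts = [(3, 2, 2), (1, 1, 1), (2, 1, 2), (5, 2, 4), (4, 2, 3)]
filmstrip = make_filmstrip(acts, max_x=2, max_y=4)
for frame in filmstrip:
    print(frame)
```

Using shared maximum dimensions for every participant keeps all frames the same size, which image classifiers generally require.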

Model and Training

The resulting filmstrips were analyzed with Teachable Machine v2.0. This involved a MobileNet-V2 convolutional neural network (CNN), an architecture well suited to image data. The model is built from depth-wise separable convolutional blocks, with only the global-average-pooling layer feeding into a newly added dense layer for classification.

Training ran for 50 epochs with a batch size of 16, using the Adam optimizer with an initial learning rate of ( 1 \times 10^{-3} ). The 143 filmstrips (two per participant where applicable) were pooled and labeled by group: Alzheimer’s (AD) or Healthy Control (HC).

Filmstrips from distinct autobiographical tasks were combined in recognition that positive and negative memory recall engage different autobiographical networks and elicit different neurophysiological responses.

Computational Psychometrics Experiment

To evaluate the robustness of this approach, a method inspired by computational psychometrics was implemented. This included defining two conditions: one where topological profiles were accurately associated with their true group (AD vs. HC), and a control condition involving artificially mixed groups (each comprising 50% AD and 50% HC profiles).

The second condition estimates the “background noise”: no class differences are expected there, so any apparent discrimination reflects chance. The same predictive protocol, including the convolutional network, was applied in both conditions, and sensitivity, specificity, and accuracy were calculated for each.
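The control condition and the three metrics can be sketched as follows. This is an assumption-laden illustration: the helper names `mixed_groups` and `classification_metrics` are hypothetical, the pseudo-groups are called "A" and "B" for convenience, and the split yields exactly 50% AD and 50% HC per pseudo-group only when the two classes are the same size.

```python
import random


def mixed_groups(labels, seed=0):
    """Assign profiles to pseudo-groups "A" and "B", splitting each true class in half."""
    rng = random.Random(seed)
    pseudo = [None] * len(labels)
    for true_group in ("AD", "HC"):
        members = [i for i, lab in enumerate(labels) if lab == true_group]
        rng.shuffle(members)
        half = len(members) // 2
        for i in members[:half]:
            pseudo[i] = "A"
        for i in members[half:]:
            pseudo[i] = "B"
    return pseudo


def classification_metrics(y_true, y_pred, positive):
    """Compute sensitivity, specificity, and accuracy for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "accuracy": (tp + tn) / len(pairs),
    }


labels = ["AD"] * 4 + ["HC"] * 4
pseudo = mixed_groups(labels)
metrics = classification_metrics(
    ["AD", "AD", "HC", "HC"], ["AD", "HC", "HC", "HC"], positive="AD"
)
print(pseudo, metrics)
```

Applying `classification_metrics` to predictions on the pseudo-groups gives a chance-level baseline against which the true AD versus HC results can be compared.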

Progression Probabilities

The probability of a speech act occurring at each position was estimated from the topological matrices produced by 2TK encoding, separately for each group. Within each group, the largest matrix dimensions were identified and smaller matrices were resized to match.

The result is an average frequency map for each group, which gives, for every possible ( (x,y) ) position, how often speech acts occur there across conversations.
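The resizing and averaging steps can be sketched as follows. This is a sketch under assumptions: the text does not say how smaller matrices were resized, so zero-padding is used here, and each matrix is assumed to be a binary occupancy grid (1 where a speech act occurs). The names `pad` and `frequency_map` are hypothetical.

```python
def pad(matrix, rows, cols):
    """Zero-pad a matrix on the right and bottom to the requested size."""
    padded = [list(row) + [0] * (cols - len(row)) for row in matrix]
    padded += [[0] * cols for _ in range(rows - len(matrix))]
    return padded


def frequency_map(matrices):
    """Average binary occupancy matrices cell by cell after padding to a common size."""
    rows = max(len(m) for m in matrices)
    cols = max(len(m[0]) for m in matrices)
    padded = [pad(m, rows, cols) for m in matrices]
    n = len(padded)
    return [
        [sum(p[r][c] for p in padded) / n for c in range(cols)]
        for r in range(rows)
    ]


# Two toy conversations of different sizes (rows are y, columns are x).
conversation_a = [[1, 0], [1, 1]]
conversation_b = [[1]]
freq = frequency_map([conversation_a, conversation_b])
print(freq)
```

Each value in the map is the fraction of conversations in the group that occupy that position, so the maps of the two groups can be compared cell by cell.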

Metrics Calculation

Various topological and kinetic metrics were computed for each interaction using Python functions applied to the encoded trajectories. For example, the lateral-to-vertical ratio compared the number of lateral moves (changes in ( x )) with the number of vertical moves (changes in ( y )), while transition entropy measured how varied the transition patterns within the conversation were.
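Two of these metrics can be sketched as follows. The exact definitions used in the study are not given in the text, so the choices below are assumptions: a move counts as lateral whenever ( x ) changes and as vertical when only ( y ) changes, and transition entropy is taken as the Shannon entropy (in bits) of the distribution of step vectors. The function names are hypothetical.

```python
import math
from collections import Counter


def steps(trajectory):
    """Return the (dx, dy) step between each pair of consecutive positions."""
    return [
        (x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(trajectory, trajectory[1:])
    ]


def lateral_to_vertical_ratio(trajectory):
    """Count moves that change x against moves that change only y."""
    moves = steps(trajectory)
    lateral = sum(1 for dx, _ in moves if dx != 0)
    vertical = sum(1 for dx, dy in moves if dx == 0 and dy != 0)
    return lateral / vertical if vertical else float("inf")


def transition_entropy(trajectory):
    """Shannon entropy in bits of the distribution of step vectors."""
    counts = Counter(steps(trajectory))
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Trajectory of the worked dialogue example, ordered by epsilon.
trajectory = [(1, 1), (1, 2), (2, 2), (2, 3), (2, 4)]
ratio = lateral_to_vertical_ratio(trajectory)
entropy = transition_entropy(trajectory)
print(ratio, entropy)
```

For the worked example this gives one lateral move against three vertical ones, and an entropy below one bit because a single step type dominates.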

Additional metrics, such as thematic novelty slope and mean thematic dwell time, further characterized conversation structure. Together, these metrics provide a framework for analyzing conversational dynamics and support further psychometric evaluation.