Enhanced Visual-Language Pre-Training for Chest Radiology Images

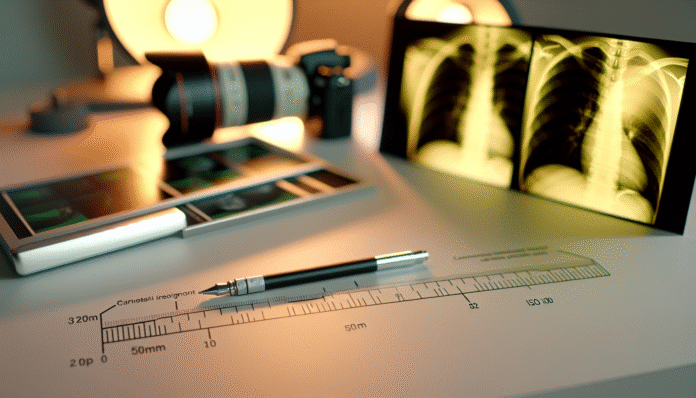

The intersection of advanced machine learning techniques and healthcare offers transformative potential, especially in interpreting chest radiology images. However, the challenge lies in harnessing these technologies to yield actionable insights for clinical practice. Imagine a world where radiologists can instantly access a model trained not just on images, but also on nuanced language descriptions of the findings. This enhanced visual-language pre-training could redefine diagnostic accuracy and efficiency. Recent developments in multimodal models raise critical questions about their implementation and impact on patient outcomes.

Understanding Visual-Language Models: A Primer

Visual-language models (VLMs) integrate image recognition capabilities with natural language processing, allowing systems to comprehend and articulate visual content.

Example: Consider a model trained to analyze chest X-rays. Instead of merely identifying pneumonia or tumors, it articulates a detailed report that includes specifics about the affected lung areas, possible differential diagnoses, and suggested follow-up scans.

The Mechanism Behind VLMs

VLMs operate through deep learning architectures, such as transformers, which process data across multiple modalities.

Comparison Model:

| Feature | Traditional Image Models | Visual-Language Models |
| --- | --- | --- |
| Input | Images only | Images + Text |
| Output | Classifications | Detailed Descriptions |
| Context Awareness | Limited | Enhanced with text |
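To make the idea of processing data across modalities more concrete, here is a minimal sketch of cross-attention, one common way transformers let text tokens draw on image features. It is an illustration under simplifying assumptions, not the architecture of any particular radiology model: there is a single attention head, no learned projection matrices, and the embedding values are made up.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cross_attention(text_tokens, image_patches):
    """Let each text token attend over all image patch embeddings.

    Returns (attended_vectors, attention_weights), one entry per text token.
    """
    dim = len(image_patches[0])
    scale = math.sqrt(dim)  # scaled dot-product attention
    outputs, weights = [], []
    for query in text_tokens:
        scores = [dot(query, patch) / scale for patch in image_patches]
        attn = softmax(scores)
        # Weighted sum of patch embeddings, one value per dimension
        mixed = [
            sum(w * patch[i] for w, patch in zip(attn, image_patches))
            for i in range(dim)
        ]
        outputs.append(mixed)
        weights.append(attn)
    return outputs, weights

# Hypothetical toy embeddings: three image patches, one text token
image_patches = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
text_tokens = [[2.0, 0.0]]
outputs, weights = cross_attention(text_tokens, image_patches)
```

In this toy case the text token places its largest attention weight on the first patch, the one it is most similar to. A real model would learn the embeddings and projections during training.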

Reflection: How might the reliance on textual descriptions limit a model’s effectiveness? It is easy to overlook the need for diverse training data in medical contexts, where the clarity and consistency of report language are critical.

Practical Closure: Implementing VLMs can result in expedited diagnostic processes. Radiologists can leverage these tools to generate comprehensive reports faster, allowing for timely patient management.

The Importance of Pre-Training in Medical Contexts

Pre-training on large, diverse datasets is essential for VLMs to perform effectively in specialized areas like radiology.

Concrete Example: A VLM pre-trained on millions of chest radiology images and corresponding reports can learn intricate relationships between visual patterns and their linguistic descriptions. This empowers the model to generate more accurate interpretations.

Pre-Training Techniques

Several strategies exist for effective pre-training, including self-supervised learning and multi-task training.

- Lifecycle Map:

- Data Collection: Curate diverse chest imaging datasets.

- Self-Supervised Learning: Use unsupervised methods to leverage vast unlabeled data.

- Fine-Tuning: Adjust the model on specific tasks like anomaly detection.
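One widely used self-supervised objective for paired images and reports is a contrastive loss, which pulls matching image and text embeddings together and pushes mismatched pairs apart. The article does not say which objective is intended, so the following is only a sketch of that one option. The function names, the temperature value of 0.07, and the toy embeddings are all assumptions made for illustration.

```python
import math

def normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def log_softmax_at(row, index):
    """Log-probability of position `index` under a softmax over `row`."""
    peak = max(row)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in row))
    return row[index] - log_total

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss; pair i of images matches pair i of texts."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # sims[i][j] = scaled cosine similarity between image i and text j
    sims = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    image_to_text = -sum(log_softmax_at(sims[i], i) for i in range(n)) / n
    text_to_image = -sum(
        log_softmax_at([sims[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2

# Hypothetical embeddings for two X-rays and their two reports
images = [[1.0, 0.0], [0.0, 1.0]]
reports = [[0.9, 0.1], [0.1, 0.9]]
aligned_loss = contrastive_loss(images, reports)
shuffled_loss = contrastive_loss(images, list(reversed(reports)))
```

The loss is lower when each image is paired with its own report than when the reports are shuffled, which is the signal a model would follow during pre-training. No manual labels are involved; the pairing of image and report is the only supervision.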

Reflection: What if the pre-training data is biased? Medical professionals need to assess how biases in training datasets can affect the model’s accuracy in diverse populations.

Practical Closure: Fine-tuning the model with local healthcare data can significantly enhance its relevance and performance in specific clinical settings.

Addressing Challenges in Implementation

Despite the promise of enhanced pre-training methods, challenges remain in integrating VLMs into clinical workflows.

Example: Radiologists may encounter difficulties in interpreting model outputs that lack context or clinical relevance. Clinical governance must ensure that these AI tools complement rather than replace human expertise.

Common Pitfalls and Solutions

- Over-reliance on Model Outputs: Radically altering clinical pathways based solely on AI outputs can lead to misdiagnoses.

- Fix: Keep radiologists in the loop, treating model output as a second reading to be confirmed rather than a final diagnosis.

- Data Privacy Concerns: Patient information cannot be used without consent, and restrictions on sharing it can hamper model development.

- Fix: Federated learning can allow models to learn from decentralized data without raw patient records leaving each institution.
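The federated learning idea can be sketched with federated averaging: each site trains on its own data, and only model weights are sent to a coordinator, which averages them weighted by site size. This is a simplified illustration, not a production protocol. The linear model, the learning rate, and the two hypothetical hospitals are assumptions, and a real deployment would typically add further safeguards such as secure aggregation.

```python
def local_update(weights, examples, lr=0.1, epochs=5):
    """Train on one site's data: gradient descent on mean squared error."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for features, target in examples:
            error = sum(wi * xi for wi, xi in zip(w, features)) - target
            for i, xi in enumerate(features):
                grad[i] += 2 * error * xi / len(examples)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

def federated_average(site_weights, site_sizes):
    """Average per-site weights, weighting each site by its example count."""
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * size for w, size in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]

def federated_round(global_weights, sites):
    """One round: every site trains locally, then only weights are combined."""
    updates = [local_update(global_weights, examples) for examples in sites]
    sizes = [len(examples) for examples in sites]
    return federated_average(updates, sizes)

# Hypothetical sites; each example is (features, target) with target = 2 * x
hospital_a = [([1.0], 2.0), ([2.0], 4.0)]
hospital_b = [([3.0], 6.0)]

weights = [0.0]
for _ in range(20):
    weights = federated_round(weights, [hospital_a, hospital_b])
```

After the rounds complete, the shared weight is close to 2.0 even though neither hospital's examples were ever passed to the other or to the coordinator.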

Reflection: What ethical considerations should guide the deployment of AI in radiology? Understanding patient perspectives on AI usage can be crucial for successful integration.

Practical Closure: Establishing a framework for transparent communication about AI use in clinical settings can foster trust between patients and healthcare providers.

Future Implications: Toward Multimodal Integration

As we look ahead, the evolution of VLMs in interpreting chest radiology images will likely involve ongoing research and adaptation.

Example: Future models may incorporate real-time patient data, such as demographics and clinical history, into their evaluations, providing even richer context for decision-making.

Forward-Looking Strategies

- Invest in cross-disciplinary research to enhance the interplay between the medical community and AI specialists.

- Build comprehensive evaluation frameworks to continually assess the impact of these models on patient outcomes.
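One small building block of such an evaluation framework could be a per-subgroup metric, which makes performance gaps between patient populations visible. The sketch below computes sensitivity (the share of true positive cases the model catches) for each group. The record format, the group labels, and the sample data are hypothetical.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Compute sensitivity per group.

    `records` is an iterable of (group, true_label, predicted_label),
    with labels 1 for finding present and 0 for absent.
    Groups with no positive cases are omitted from the result.
    """
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, truth, predicted in records:
        if truth == 1:
            positives[group] += 1
            if predicted == 1:
                true_positives[group] += 1
    return {group: true_positives[group] / positives[group] for group in positives}

# Hypothetical evaluation records for two patient groups
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 1), ("B", 0, 1),
]
per_group = sensitivity_by_group(records)
```

Here group A has a sensitivity of 1.0 and group B of 0.5, the kind of gap that would prompt a closer look at the training data before wider deployment.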

Reflection: What happens if VLMs evolve without sufficient regulatory oversight? Navigating the balance between innovation and ethical responsibility will be paramount for sustainable growth.

Practical Closure: Engaging in continuous dialogue with regulatory bodies and stakeholders can ensure that AI developments in radiology align with best practices and patient safety.

Audio Summary: In this article, we explored the intricacies of enhanced visual-language pre-training for chest radiology images, addressing the mechanisms behind visual-language models, the importance of pre-training, the challenges of implementation, and future implications.

This fusion of advanced AI and clinical practice not only enhances diagnostic processes but also sparks essential conversations around ethics, collaboration, and continual assessment within medical frameworks.