Experimental Setting in Material Classification

Introduction to the LMT-108 Dataset

To validate the proposed method, we used the LMT-108 dataset, which comprises 108 distinct objects grouped into nine material categories:

- Meshes

- Stones

- Glossy Materials

- Wood Types

- Rubbers

- Fibers

- Foams

- Foils and Papers

- Textiles and Fabrics

What makes LMT-108 particularly valuable is the range of modalities it provides: acceleration, friction, images, metal detection, infrared reflection, and sound. For our experiments, we selected three of them: images, sound, and acceleration. The acceleration signals were recorded with a three-axis ADXL335 accelerometer (±3 g, 10 kHz), and sound was captured with a CMP-MIC8 microphone (44.1 kHz). The images have a resolution of 320 × 480 pixels. Each object is represented by 10 samples, giving 1,080 samples in total (108 × 10). Each object's samples were split into disjoint training and testing sets, so no sample appears in both.

Advancing with the SpectroVision Dataset

To assess the robustness and adaptability of the proposed method, we also evaluated it on the more demanding SpectroVision dataset. This dataset offers 14,400 paired samples, each consisting of a near-infrared (NIR) spectral measurement and a high-resolution (1,600 × 1,200 pixel) texture image of a household object. The data was gathered non-invasively using a PR2 mobile manipulator equipped with a SCiO spectrometer and a 2MP endoscope camera under consistent illumination.

The objects in SpectroVision fall into eight material categories: ceramic, fabric, foam, glass, metal, paper, plastic, and wood. Each object underwent 100 randomized interactions that varied height, roll, and position to better reflect real-world conditions. For testing, we randomly selected four objects from each material category, yielding a test set of 32 objects (4 × 8) that the model never sees during training.
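The object-level hold-out described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the object identifiers, and the count of 18 objects per material are assumptions (18 is what 14,400 samples at 100 interactions per object would give if the eight categories were balanced).

```python
import random


def split_objects_by_material(objects_by_material, n_test=4, seed=0):
    """Hold out n_test whole objects per material so test objects are unseen."""
    rng = random.Random(seed)
    train, test = {}, {}
    for material, objects in sorted(objects_by_material.items()):
        if len(objects) <= n_test:
            raise ValueError(f"{material}: need more than {n_test} objects")
        held_out = set(rng.sample(objects, n_test))
        test[material] = sorted(held_out)
        train[material] = sorted(o for o in objects if o not in held_out)
    return train, test


# Hypothetical inventory: names and per-material counts are placeholders.
MATERIALS = ["ceramic", "fabric", "foam", "glass",
             "metal", "paper", "plastic", "wood"]
inventory = {m: [f"{m}_{i:02d}" for i in range(18)] for m in MATERIALS}

train_objs, test_objs = split_objects_by_material(inventory)
n_test_objects = sum(len(v) for v in test_objs.values())
```

Splitting by object rather than by sample matters here: all 100 interactions with a held-out object stay out of training, so test accuracy reflects generalization to new objects rather than to new views of known ones.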

Feature Representation and Dimension Reduction

Because the number of samples is small, the model needs to generalize well from limited data. We therefore applied conventional dimension reduction methods to the three-axis acceleration data and evaluated four techniques:

- Single Axis (SA): The simplest method, which uses the acceleration data along a single axis (x, y, or z).

- Sum of Components (SoC): Sums the signals from the three axes into one signal. It combines the directional data while remaining simple to compute.

- Magnitude (Mag): Computes the square root of the sum of squares of the three axis signals. The result captures overall signal energy but is always non-negative, so the sign information in the original signals is lost.

- Principal Component Analysis (PCA): A standard dimensionality reduction technique that transforms n-dimensional features into k-dimensional space, ensuring feature orthogonality.

After thorough evaluation, we settled on the SA-z method as the optimal approach for dimension reduction of acceleration signals, reinforcing our earlier findings.
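The four reductions can be sketched on a synthetic three-axis signal. This is a minimal NumPy illustration under stated assumptions: the function names are hypothetical, SoC is taken to be a plain sum over the axes, PCA keeps only the first principal component, and the random array stands in for a real recording.

```python
import numpy as np


def single_axis(acc, axis=2):
    """SA: keep one axis of an (n_samples, 3) signal; axis=2 gives SA-z."""
    return acc[:, axis]


def soc_sum(acc):
    """SoC (assumed here to be a plain sum of the three axes)."""
    return acc.sum(axis=1)


def magnitude(acc):
    """Mag: Euclidean norm across the three axes at each time step."""
    return np.sqrt((acc ** 2).sum(axis=1))


def pca_first_component(acc):
    """PCA: project the centered signal onto its direction of largest variance."""
    centered = acc - acc.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[0]


# Placeholder data standing in for one (n_samples, 3) acceleration recording.
rng = np.random.default_rng(0)
acc = rng.standard_normal((1000, 3))

reduced = {
    "SA-z": single_axis(acc, axis=2),
    "SoC": soc_sum(acc),
    "Mag": magnitude(acc),
    "PCA": pca_first_component(acc),
}
```

Each reduction maps the three-axis recording to a one-dimensional signal of the same length. The Mag output is never negative, which is the loss of sign information noted in the list above.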

Feature Extraction Techniques

Feature extraction is crucial for maximizing the effectiveness of our method. Drawing on analyses from previous experimental results, we employed specific algorithms tailored to extract discriminative features from each modality:

- Local Binary Patterns (LBP) for image data.

- Mel-Frequency Cepstral Coefficients (MFCC) for both sound and acceleration signals.

These techniques are well-suited to capture the nuanced characteristics inherent in the diverse types of data we are working with.
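As an illustration of the image branch, the sketch below computes a basic LBP histogram with NumPy. The source does not state which LBP variant or parameters were used, so the 3 × 3 neighbourhood, the 256-bin histogram, and the function name are assumptions; the random image is a placeholder with the 320 × 480 resolution mentioned earlier.

```python
import numpy as np


def lbp_histogram(gray):
    """Basic 3x3 LBP: threshold the 8 neighbours against the centre pixel,
    pack the results into an 8-bit code, and return a normalized histogram."""
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


# Placeholder grayscale image (rows x columns = 480 x 320).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(480, 320))
features = lbp_histogram(image)
```

The resulting 256-dimensional vector describes how often each local texture pattern occurs, independent of where it occurs in the image. MFCC extraction for the sound and acceleration signals would typically rely on an audio library and is not sketched here.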

Performance Comparison with Traditional Methods

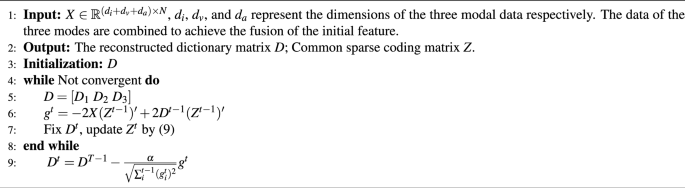

To benchmark our proposed method (Deep Dictionary Learning, or DRDL), we compared it against ten established approaches, including:

- K-SVD: A dictionary learning algorithm that alternates between sparse coding, solved with orthogonal matching pursuit, and SVD-based updates of the dictionary atoms.

- Support Vector Machine (SVM): A supervised learning method that finds the hyperplane that best separates the classes.

- Multi-Layer Perceptron (MLP): A classic feedforward neural network trained via backpropagation.

- Convolutional Neural Network based on Vision (CNN-V): A prevalent choice for texture recognition, leveraging its superior ability to extract features.

The remaining baselines include Greedy Deep Dictionary Learning (GDDL) and Twin-incoherent Self-expressive Latent Dictionary Pair Learning (SLatDPL), among others. The main limitation of these baselines was their inability to capture deeper abstractions in the feature representations, which DRDL is designed to address.
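The sparse coding step that K-SVD relies on, orthogonal matching pursuit (OMP), can be illustrated with a minimal sketch. This is a textbook version written for this illustration, not the implementation used in the experiments; the function name, the random dictionary, and the sparsity level are assumptions.

```python
import numpy as np


def omp(D, x, n_nonzero, tol=1e-10):
    """Greedy sparse code of x over dictionary D (columns assumed unit-norm).

    At each step, pick the atom most correlated with the residual, then
    refit all selected coefficients by least squares.
    """
    x = np.asarray(x, dtype=np.float64)
    coef = np.zeros(D.shape[1])
    selected = np.zeros(D.shape[1], dtype=bool)
    residual = x.copy()
    for _ in range(n_nonzero):
        corr = np.abs(D.T @ residual)
        corr[selected] = -1.0          # never pick the same atom twice
        selected[int(np.argmax(corr))] = True
        idx = np.flatnonzero(selected)
        sol, *_ = np.linalg.lstsq(D[:, idx], x, rcond=None)
        coef[:] = 0.0
        coef[idx] = sol
        residual = x - D[:, idx] @ sol
        if np.linalg.norm(residual) < tol:
            break
    return coef


# Placeholder overcomplete dictionary with unit-norm columns.
rng = np.random.default_rng(0)
D = rng.standard_normal((20, 50))
D /= np.linalg.norm(D, axis=0)
x = rng.standard_normal(20)
code = omp(D, x, n_nonzero=5)
```

The least-squares refit is what makes the pursuit "orthogonal": after each step the residual is orthogonal to every selected atom, so the approximation error never increases as atoms are added.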

Statistical Validation of Results

To confirm that the observed differences were not due to chance, we performed statistical significance testing. Using 10-fold cross-validation, we obtained per-fold accuracy scores for the top-performing methods and applied the Wilcoxon signed-rank test to compare each of them against the DRDL model. All comparisons were significant at the α = 0.05 level, indicating that DRDL's advantage is statistically significant rather than a result of random variation.
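The test procedure can be sketched in pure Python as an exact one-sided Wilcoxon signed-rank test on paired per-fold accuracies. The function name is hypothetical, and the fold accuracies below are made-up placeholders chosen only to show the mechanics; they are not results from the experiments.

```python
from itertools import product


def wilcoxon_signed_rank(a, b):
    """Exact one-sided test of H1: paired values in a tend to exceed those in b.

    Returns (W+, p), where W+ is the sum of ranks of the positive differences
    and p is computed by enumerating all sign assignments.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]   # drop zero differences
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                                      # average ranks for ties
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    as_extreme = sum(
        1 for signs in product((0, 1), repeat=n)
        if sum(r for r, s in zip(ranks, signs) if s) >= w_plus
    )
    return w_plus, as_extreme / 2 ** n


# Hypothetical per-fold accuracies (percent) from 10-fold cross-validation.
drdl_acc = [97.2, 98.1, 97.5, 98.4, 97.9, 97.0, 98.6, 97.7, 98.0, 97.4]
base_acc = [95.1, 96.3, 94.8, 96.9, 95.5, 94.2, 96.0, 95.9, 95.3, 94.6]

w_plus, p_value = wilcoxon_signed_rank(drdl_acc, base_acc)
significant = p_value < 0.05
```

With 10 folds, the smallest one-sided p-value this test can produce is 1/1024, which occurs when one method wins on every fold, as in the placeholder data above.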

Additional Verification: The SpectroVision Dataset Revisited

As part of our validation process, we applied the DRDL model to the SpectroVision dataset. We extracted LBP features from the texture images and applied only PCA to the spectral data. With these inputs, DRDL again achieved state-of-the-art performance, with an accuracy of 89.4%. This further illustrates the model's ability to learn effective features from complex data.

Layer Count Impact on Model Performance

We also explored how the number of layers influenced performance. Configuring the model with 1 to 4 layers, we found that accuracy generally improved with depth, but the 4-layer configuration introduced redundancy and performed worse. This underscores the importance of choosing an appropriate number of layers.

Material Comparisons with the LMT-108 Dataset

Using the LMT-108 dataset's diverse material types, we examined how each method performed on individual material categories. Our DRDL approach achieved a recognition accuracy of 97.8% and surpassed the other methods on every material type except meshes.

Parameter Influence Analysis

We analyzed how the key parameters affect model performance. Varying λ and μ jointly identified the values that gave the highest classification accuracy, and these values guided the subsequent experimental setups.

Ablation Studies for Method Verification

To validate different components of the DRDL framework, we performed three ablation experiments, separating multi-modal inputs and dictionary reconstructions. The results, clearly presented in comparative tables, emphasized the contributions of each framework aspect to overall performance.

Computational Efficiency Considerations

While our DRDL model demonstrated high accuracy, computational efficiency remains a concern, particularly for real-time applications. Classifying a sample took considerably longer than with single-layer methods. Future work will focus on improving efficiency through strategies such as faster solvers, dictionary pruning, and quantization.

Limitations and Generalizability

Despite its strong performance, the DRDL model comes with its computational complexities and potential biases stemming from controlled dataset acquisition environments. Acknowledging these limitations is critical as we seek to expand its applicability to variable real-world scenarios.

This structured approach not only outlines the experimental settings and findings but also illuminates the nuanced decision-making that contributes to advancing material classification through innovative methodologies.