The Intricacies of a CNN Architecture for Keypoint Detection

Overall Architecture

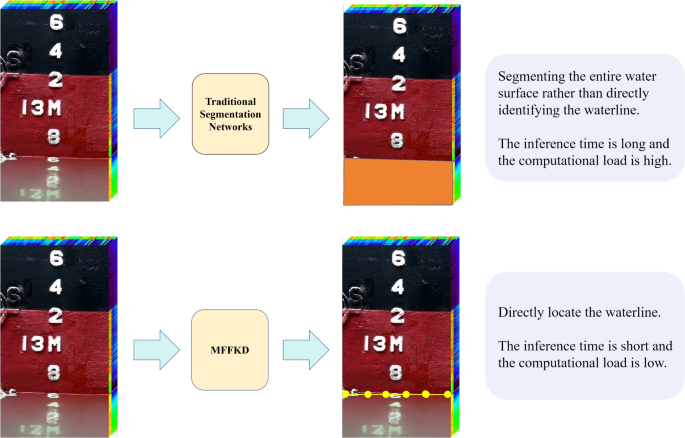

Our model is built on a convolutional neural network (CNN) designed specifically for keypoint detection. It consists of several specialized modules that extract and combine the spatial structures relevant to the visual appearance of waterlines. First, two convolutional blocks extract low-level features from the input. The result then passes through four stages of Dilated Residual-Channel Recalibration Modules (DR-CRM). The output of each stage is further enhanced by a Feature Enhancement Extraction Module (FEEM), and the FEEM outputs are fused across scales by the Multi-Scale Feature Integration module (MFWI). The fused feature maps feed a task-specific keypoint detection head that predicts the locations of the waterline keypoints. Finally, these keypoints are combined with character recognition results in a mathematical model to compute the final waterline prediction.

Backbone Network Design

DR-CRM Block

The backbone is built from DR-CRM blocks, which modify the standard ResNet residual block in two ways: standard convolutions are replaced with dilated convolutions, and a Channel Recalibration Module (CRM) is added to each residual block. The backbone has four DR-CRM stages containing 3, 4, 6, and 3 blocks respectively (the same stage depths as ResNet-34), and all convolutions use 3×3 kernels. Downsampling is performed by giving the first convolution of stages two through four a stride of 2; all other convolutions use a stride of 1.
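The stage layout can be written down as a small configuration and used to trace feature-map sizes. The sketch below is only illustrative: it assumes padding equal to the dilation rate (so stride-1 3×3 convolutions keep the spatial size), two 3×3 convolutions per block, a hypothetical 56×56 input to stage one, and hypothetical dilation rates, none of which are specified in this section.

```python
from dataclasses import dataclass


@dataclass
class StageConfig:
    num_blocks: int
    first_stride: int  # stride of the first convolution in the stage
    dilation: int      # hypothetical dilation rate (not given in the source)


# Block counts (3, 4, 6, 3) and strides follow the text; dilation values are assumptions.
STAGES = [
    StageConfig(num_blocks=3, first_stride=1, dilation=1),
    StageConfig(num_blocks=4, first_stride=2, dilation=2),
    StageConfig(num_blocks=6, first_stride=2, dilation=2),
    StageConfig(num_blocks=3, first_stride=2, dilation=4),
]


def conv_out_size(size: int, kernel: int = 3, stride: int = 1, dilation: int = 1) -> int:
    """Output length of a convolution along one axis, assuming padding = dilation."""
    padding = dilation
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def trace_sizes(input_size: int) -> list[int]:
    """Return the spatial size after each stage (two 3x3 convolutions per block)."""
    sizes = []
    size = input_size
    for stage in STAGES:
        for block in range(stage.num_blocks):
            stride = stage.first_stride if block == 0 else 1
            size = conv_out_size(size, stride=stride, dilation=stage.dilation)
            size = conv_out_size(size, stride=1, dilation=stage.dilation)
        sizes.append(size)
    return sizes


print(trace_sizes(56))  # [56, 28, 14, 7]
```

With padding tied to dilation, the dilation rate changes what each kernel sees but not the output size; only the stride-2 convolutions halve the resolution.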

Dilated convolutions enlarge the receptive field of each kernel without adding parameters, so every output sees more of the input's context, while the CRM emphasizes the most informative features. As illustrated in the accompanying figures, the block captures both global and fine-grained channel information, so that subsequent convolutional layers receive a well-calibrated representation of the input.
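To make the receptive-field claim concrete, the sketch below applies the standard recurrence for stacked convolutions: a k×k kernel with dilation d spans d(k−1)+1 input positions, and each layer grows the receptive field by (effective span − 1) times the product of the strides before it. The layer lists are illustrative only; the actual dilation rates used in DR-CRM are not given here.

```python
def effective_kernel(kernel: int, dilation: int) -> int:
    """Span of a dilated kernel along one axis."""
    return dilation * (kernel - 1) + 1


def receptive_field(layers: list[tuple[int, int, int]]) -> int:
    """Receptive field of a stack of (kernel, stride, dilation) convolutions."""
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent outputs
    for kernel, stride, dilation in layers:
        rf += (effective_kernel(kernel, dilation) - 1) * jump
        jump *= stride
    return rf


# Two 3x3 convolutions, as in one residual block, without and with dilation 2.
plain = [(3, 1, 1), (3, 1, 1)]
dilated = [(3, 1, 2), (3, 1, 2)]
print(receptive_field(plain), receptive_field(dilated))  # 5 9
```

Both stacks have the same number of weights, but the dilated pair covers a 9×9 window instead of 5×5.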

After the block's two dilated convolutions, the features pass through adaptive average pooling and adaptive standard-deviation pooling layers. Each collapses the spatial dimensions to a single value per channel. Average pooling summarizes the overall response of each channel, while standard-deviation pooling captures how much that response varies across spatial positions. Because both layers are adaptive, they produce the same per-channel descriptor size for any input resolution.
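The two pooling statistics are easy to state directly. The sketch below computes, for each channel of a C×H×W feature map stored as nested lists, the spatial mean and the population standard deviation, then turns them into per-channel gates. How the CRM combines the two statistics is not specified in this section: summing them and applying a sigmoid, with none of the learned layers a real module would contain, is an assumption made purely for illustration.

```python
import math


def channel_stats(feature_map: list[list[list[float]]]) -> tuple[list[float], list[float]]:
    """Adaptive average and standard-deviation pooling to one value per channel."""
    means, stds = [], []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        means.append(mean)
        stds.append(math.sqrt(var))
    return means, stds


def recalibrate(feature_map: list[list[list[float]]]) -> list[list[list[float]]]:
    """Scale each channel by a gate in (0, 1). Gate = sigmoid(mean + std) is an assumption."""
    means, stds = channel_stats(feature_map)
    gates = [1.0 / (1.0 + math.exp(-(m + s))) for m, s in zip(means, stds)]
    return [[[v * g for v in row] for row in channel]
            for channel, g in zip(feature_map, gates)]


x = [
    [[1.0, 1.0], [1.0, 1.0]],  # flat channel: std = 0
    [[0.0, 2.0], [0.0, 2.0]],  # varying channel: std = 1
]
means, stds = channel_stats(x)
print(means, stds)  # [1.0, 1.0] [0.0, 1.0]
```

The example shows why the second statistic helps: both channels have the same mean, so average pooling alone cannot tell them apart, but the standard deviation can.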

FEEM

The Feature Enhancement Extraction Module (FEEM) is designed to strengthen feature extraction. The input feature map is first split into three groups along the channel dimension, and each group is processed independently. Each group passes through three parallel branches that use convolutions with 1×1, 3×3, and 5×5 kernels, capturing both fine local detail and wider context.
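A sketch of the two mechanical parts of this step: splitting C channels into three groups, and running parallel 1×1, 3×3, and 5×5 branches whose zero padding of k // 2 keeps every branch output the same spatial size so the results can later be combined. The even-as-possible split rule and the box-filter kernels are assumptions for illustration; in FEEM the kernels are learned and each branch operates on a whole channel group rather than a single channel.

```python
def split_channels(num_channels: int, groups: int = 3) -> list[range]:
    """Split channel indices into contiguous groups whose sizes differ by at most one."""
    base, extra = divmod(num_channels, groups)
    ranges, start = [], 0
    for g in range(groups):
        size = base + (1 if g < extra else 0)
        ranges.append(range(start, start + size))
        start += size
    return ranges


def conv2d_same(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Single-channel 2D cross-correlation with zero padding of k // 2 (odd k keeps H x W)."""
    k = len(kernel)
    pad = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:  # positions outside the image count as zero
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


# Hypothetical box-filter kernels stand in for the learned 1x1, 3x3 and 5x5 branch weights.
image = [[float(i * 6 + j) for j in range(6)] for i in range(6)]
branches = {k: [[1.0 / (k * k)] * k for _ in range(k)] for k in (1, 3, 5)}
outputs = {k: conv2d_same(image, kern) for k, kern in branches.items()}

print([len(g) for g in split_channels(64)])  # [22, 21, 21]
print({k: (len(o), len(o[0])) for k, o in outputs.items()})  # {1: (6, 6), 3: (6, 6), 5: (6, 6)}
```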

The branch outputs are passed through fully connected layers that produce attention weights; these weights re-weight the features to emphasize the most relevant spatial and channel-wise information. The re-weighted feature maps are concatenated and fused by a further 1×1 convolution into a compact, enhanced feature representation. Besides diversifying the extracted features, the module keeps computational cost low by using depthwise separable convolutions.
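The saving from depthwise separable convolutions can be quantified by counting weights. A standard k×k convolution needs k·k·C_in·C_out weights, whereas a depthwise k×k convolution (one filter per input channel) followed by a 1×1 pointwise convolution needs k·k·C_in + C_in·C_out. The 64-channel width below is a hypothetical example, and biases are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """k x k depthwise convolution (one filter per input channel) + 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


for k in (3, 5):
    std = standard_conv_params(64, 64, k)
    sep = depthwise_separable_params(64, 64, k)
    print(f"{k}x{k}: standard={std}, separable={sep}, ratio={sep / std:.3f}")
```

The larger the kernel, the bigger the relative saving, which matters most for the 5×5 branch.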

MFWI

To make full use of multi-scale information, the backbone includes the MFWI module for feature fusion. The network begins with two initial blocks, each consisting of a convolution, batch normalization, and ReLU activation, that extract low-level features. As described above, the data then passes through the four DR-CRM stages, which generate feature maps at different scales for MFWI to process.

Every convolution in MFWI uses a 3×3 kernel with a stride of 2. As depicted in the diagrams, the six feature maps produced by the FEEMs are integrated progressively, combining information across scales. The final output is a weighted fusion of these features, as given in the corresponding equations, which lets the model weight each scale according to its importance.
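The fusion equations are not reproduced in this section. As a stand-in, the sketch below uses fast normalized fusion (the weighted-fusion rule popularized by BiFPN in EfficientDet): each input gets a learnable weight, clipped to be non-negative, and the weights are normalized by their sum plus a small epsilon. This is not necessarily MFWI's exact formula, and the sketch assumes the feature maps have already been brought to the same shape (for example by the stride-2 convolutions) and flattened.

```python
def weighted_fusion(features: list[list[float]], raw_weights: list[float],
                    eps: float = 1e-4) -> list[float]:
    """Fast normalized fusion: O = sum_i w_i * F_i / (eps + sum_j w_j), with w_i = max(raw_i, 0)."""
    if len(features) != len(raw_weights):
        raise ValueError("one weight per feature map is required")
    weights = [max(w, 0.0) for w in raw_weights]  # ReLU keeps weights non-negative
    total = eps + sum(weights)
    length = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) / total for i in range(length)]


# Three same-shaped (flattened) feature maps; a negative raw weight switches its input off.
f1, f2, f3 = [1.0, 2.0], [3.0, 4.0], [5.0, 6.0]
print(weighted_fusion([f1, f2, f3], [1.0, 1.0, -0.5], eps=0.0))  # [2.0, 3.0]
```

Normalizing by the weight sum keeps the fused output on the same scale as its inputs, while the epsilon avoids division by zero if every weight is clipped to zero.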

Task Head Design

Detection Head

The detection head uses a dual-branch design for keypoint detection. Spatial information is processed along two separate paths, one for the X axis and one for the Y axis, and their outputs are then combined to give the keypoint coordinates. First, the input feature map is compressed in dimensionality while retaining the information needed for accurate localization.
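The final integration step amounts to pairing the per-axis predictions. The sketch below assumes, hypothetically, that each branch outputs one normalized value in [0, 1] per keypoint and that these map onto pixel centers 0 to W−1 and 0 to H−1; the actual output encoding of the two branches is not specified here.

```python
def combine_axes(xs: list[float], ys: list[float],
                 width: int, height: int) -> list[tuple[float, float]]:
    """Pair per-axis predictions into (x, y) pixel coordinates.

    Assumes each branch outputs one normalized value in [0, 1] per keypoint.
    """
    if len(xs) != len(ys):
        raise ValueError("X and Y branches must predict the same number of keypoints")
    return [(x * (width - 1), y * (height - 1)) for x, y in zip(xs, ys)]


print(combine_axes([0.0, 0.5], [1.0, 0.25], width=101, height=401))
# [(0.0, 400.0), (50.0, 100.0)]
```

Keeping the axes separate until this last step lets each branch specialize in one direction.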

After compression, each branch applies its own deconvolution (transposed convolution) layers to restore the spatial resolution lost in earlier stages. A series of convolutional layers then refines the details that matter for keypoint localization. Each branch ends in a dedicated fully connected regression layer that maps the high-level features to coordinate values along its axis; together, the two branches give the keypoint locations in the 2D image.
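How much resolution a deconvolution restores follows from the transposed-convolution output-size formula (the convention used by PyTorch's `ConvTranspose2d`). The number of deconvolution layers and their kernel, stride, and padding are not given in this section; the 4×4, stride-2, padding-1 setting below is a common hypothetical choice that exactly doubles the resolution, and the starting size of 7 is also hypothetical.

```python
def deconv_out_size(size: int, kernel: int, stride: int, padding: int = 0,
                    output_padding: int = 0, dilation: int = 1) -> int:
    """Output length of a transposed convolution along one axis."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


size = 7  # hypothetical size of the compressed feature map
for _ in range(3):  # three hypothetical 4x4, stride-2, padding-1 deconvolutions
    size = deconv_out_size(size, kernel=4, stride=2, padding=1)
print(size)  # 56
```

Each such layer doubles the spatial size, so three of them multiply it by eight.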

Loss Function

The keypoint detection model is trained with the mean squared error (MSE) loss, the average squared difference between predicted and ground-truth values. MSE is continuous and differentiable, and squaring the error penalizes large deviations heavily, which matters because small localization errors translate directly into errors in the measured water level.
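A minimal sketch of the loss, assuming the head regresses keypoint coordinates directly and the error is averaged over every x and y value (how the coordinates are normalized during training is not specified here).

```python
def mse_loss(pred: list[tuple[float, float]], target: list[tuple[float, float]]) -> float:
    """Mean squared error over all keypoint coordinates (x and y counted separately)."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and of equal length")
    squared = [(p - t) ** 2 for pp, tt in zip(pred, target) for p, t in zip(pp, tt)]
    return sum(squared) / len(squared)


pred = [(10.0, 20.0), (30.0, 41.0)]
target = [(12.0, 20.0), (30.0, 40.0)]
print(mse_loss(pred, target))  # 1.25
```

The 2-pixel error contributes four times as much as the 1-pixel error, which is the "penalize large deviations" behavior described above.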

Character Recognition Head (CR Head)

The network also includes a character recognition head based on YOLOv5, an object detection model. Trained on several datasets, including difficult examples such as rusted characters and low-light images, it detects the scale numbers and characters painted on ships and provides the supplementary information needed for the final waterline prediction. YOLOv5 is also straightforward to integrate and deploy within the overall architecture.

Mathematical Modeling

To turn the detections into a water-level value, we use a mathematical model that maps image observations to physical measurements. The model uses reference points on the line connecting the detected keypoints and corrects for perspective distortion to calculate the water level. The defined relationships among these points make this correction possible, improving prediction accuracy and yielding a reliable water-level measurement system.
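The model's equations are not given in this section. One standard way to correct perspective for points on a single straight line is the cross-ratio, which any perspective projection preserves. Assuming the draft marks and the waterline point lie on one line in the image, and that three marks with known heights (read by the CR head) have been located on it, the waterline reading follows in closed form. This is a swapped-in technique for illustration, not necessarily the model used here; `project` is a made-up camera mapping used only to exercise the code.

```python
def cross_ratio(a: float, b: float, c: float, d: float) -> float:
    """Cross-ratio (a, b; c, d) of four collinear points given by 1D coordinates."""
    return ((c - a) * (d - b)) / ((c - b) * (d - a))


def waterline_height(marks_px: tuple[float, float, float],
                     marks_m: tuple[float, float, float], waterline_px: float) -> float:
    """Recover the height at the waterline from three reference marks on the same line.

    marks_px: image positions of three draft marks along the hull line (pixels).
    marks_m: heights those marks represent (metres), e.g. as read by a character recognizer.
    """
    h1, h2, h3 = marks_m
    k = cross_ratio(*marks_px, waterline_px)  # equal in image and world coordinates
    a, b = h3 - h1, h3 - h2
    # Solve ((h3 - h1)(hw - h2)) / ((h3 - h2)(hw - h1)) = k for hw.
    return (a * h2 - k * b * h1) / (a - k * b)


def project(h: float) -> float:
    """Hypothetical 1D perspective map from hull height (m) to image row (px)."""
    return (-300.0 * h + 900.0) / (0.2 * h + 1.0)


marks = (2.0, 2.2, 2.4)
true_draft = 1.85
estimate = waterline_height(tuple(project(h) for h in marks), marks, project(true_draft))
print(round(estimate, 6))  # 1.85
```

Under this projection the marks are not evenly spaced in the image, so simple linear interpolation between two marks would be biased, whereas the cross-ratio recovers the true height exactly.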

Two-Phase Training Approach

Phase 1: General Feature Acquisition

The model is first trained on a dataset of daylight images to learn the basic appearance of draft lines. This phase uses a higher learning rate so that the essential visual features are learned quickly, and it lays the groundwork for the specialized training in the second phase.

Phase 2: Specialized Refinement

In the second phase, the model is fine-tuned on three separate image streams, each targeting a specific challenge: nighttime visibility, variation in ship color, and slight variations in daylight. A lower learning rate is used in this phase so that the parameters are adjusted carefully without losing the general features learned in the first phase.
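The two phases can be expressed as a small training schedule. In the sketch below, the stream names, learning rates, and epoch counts are hypothetical placeholders, and sampling the three phase-two streams round-robin is an assumption; the text does not say whether the streams are interleaved or trained one after another.

```python
from dataclasses import dataclass
from itertools import cycle, islice


@dataclass(frozen=True)
class Phase:
    name: str
    streams: tuple[str, ...]
    learning_rate: float
    epochs: int


# Stream names, learning rates, and epoch counts are hypothetical placeholders.
SCHEDULE = (
    Phase("general", ("daylight",), learning_rate=1e-3, epochs=50),
    Phase("refine", ("night", "ship_color", "daylight_variation"), learning_rate=1e-4, epochs=20),
)


def batch_sources(phase: Phase, num_batches: int) -> list[str]:
    """Round-robin over a phase's image streams so each challenge is seen equally often."""
    return list(islice(cycle(phase.streams), num_batches))


print(batch_sources(SCHEDULE[1], 4))  # ['night', 'ship_color', 'daylight_variation', 'night']
```

The key property the text requires is only that phase two uses a lower learning rate than phase one; the rest of the schedule is illustrative.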

This two-phase training approach gives the model both general robustness and precision, helping it perform well across diverse operating conditions and showing how the CNN architecture meets the demands of practical deployment.