Unraveling the Outcomes of Machine Learning and Deep Learning Models for Sentiment Analysis of Deepfake Posts

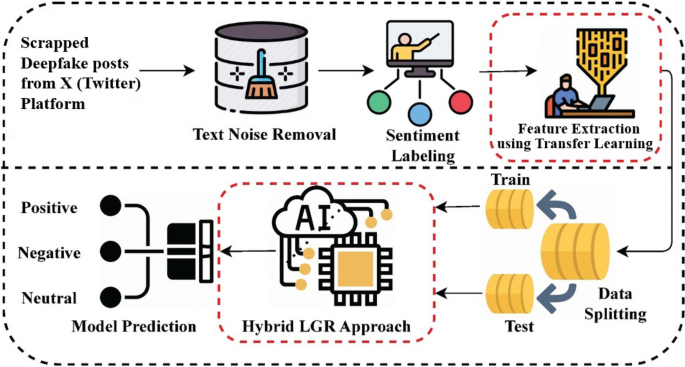

As the digital landscape evolves, deepfake technology presents both intriguing opportunities and significant challenges, particularly for sentiment analysis. This study applies a range of Machine Learning (ML) and Deep Learning (DL) models to classify the sentiment of deepfake-related posts collected from social media, notably Twitter (now X). Posts are first labeled positive, negative, or neutral with TextBlob. The models are then trained on features from several extraction techniques: Bag of Words (BoW), Term Frequency-Inverse Document Frequency (TF-IDF), word embeddings, and a novel transfer learning feature extraction approach.
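The labeling step can be sketched as a threshold on a polarity score. This is a minimal sketch, assuming the common convention that a TextBlob polarity (a value in [-1.0, 1.0]) above 0 is positive, below 0 is negative, and exactly 0 is neutral; the study's exact thresholds are not stated here, and `polarity_to_label` and `label_posts` are hypothetical helper names. Scores are passed in directly so the example runs without TextBlob installed.

```python
# Hypothetical helpers: map TextBlob-style polarity scores in [-1.0, 1.0]
# to three sentiment labels. The zero thresholds are an assumption.
def polarity_to_label(polarity: float) -> str:
    """Return 'positive', 'negative', or 'neutral' for one polarity score."""
    if polarity > 0:
        return "positive"
    if polarity < 0:
        return "negative"
    return "neutral"


def label_posts(polarities: list[float]) -> list[str]:
    """Label a batch of precomputed polarity scores."""
    return [polarity_to_label(p) for p in polarities]


# With TextBlob installed, each score would come from:
#   from textblob import TextBlob
#   polarity = TextBlob(text).sentiment.polarity
print(label_posts([0.35, -0.2, 0.0]))  # ['positive', 'negative', 'neutral']
```

Treating only an exact 0 as neutral means that, under this assumption, posts with even faintly opinionated wording are labeled positive or negative.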

Experimental Design

The experiments were implemented in Python with NLTK, TextBlob, scikit-learn (sklearn), Keras, Pandas, NumPy, TensorFlow, Matplotlib, and Seaborn, and were run on the Google Colab platform. The provided tables detail the experimental environment.

Outcomes Using BoW Features

Among the ML models trained on BoW features, Logistic Regression (LR) performs best, with an accuracy of 87%. LR also leads on precision, recall, F1 score, geometric mean, Cohen's kappa, ROC AUC, and Brier score (where lower is better); its ability to handle high-dimensional data efficiently reduces the risk of overfitting. By contrast, Decision Trees (DT) and the K-Nearest Neighbors Classifier (KNC) reach only 78% and 64% accuracy, respectively. The Support Vector Machine (SVM) struggles most, at 43% accuracy, which is attributed to its computational complexity and sensitivity to variations in the data.
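For readers unfamiliar with BoW, the sketch below turns documents into raw term-count vectors over a shared vocabulary. It assumes simple lowercase whitespace tokenization; the study's preprocessing is not described here, and the function names are hypothetical. In practice scikit-learn's `CountVectorizer` performs this step.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def build_vocabulary(docs: list[str]) -> dict[str, int]:
    """Assign each distinct token a column index, in sorted order."""
    tokens = sorted({tok for doc in docs for tok in tokenize(doc)})
    return {tok: idx for idx, tok in enumerate(tokens)}


def bag_of_words(docs: list[str], vocab: dict[str, int]) -> list[list[int]]:
    """Return one raw term-count vector per document; unseen tokens are dropped."""
    matrix = []
    for doc in docs:
        row = [0] * len(vocab)
        for tok, count in Counter(tokenize(doc)).items():
            if tok in vocab:
                row[vocab[tok]] = count
        matrix.append(row)
    return matrix


docs = ["this deepfake is fake fake", "this video is real"]
vocab = build_vocabulary(docs)
print(vocab)  # {'deepfake': 0, 'fake': 1, 'is': 2, 'real': 3, 'this': 4, 'video': 5}
print(bag_of_words(docs, vocab))  # [[1, 2, 1, 0, 1, 0], [0, 0, 1, 1, 1, 1]]
```

Each row has one column per vocabulary term, so on a real tweet corpus BoW vectors become very high-dimensional and mostly zeros.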

The confusion matrices make the gap concrete: LR makes 18,401 correct predictions out of 21,071 (about 87%), a clear lead over DT and SVM.
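Most of the metrics named in this section can be derived from a confusion matrix. The sketch below is a hypothetical helper, not the study's code. It computes accuracy, macro-averaged precision, recall, and F1, Cohen's kappa, and the geometric mean of per-class recalls for any number of classes. Treating "geometric mean" as the geometric mean of per-class recalls is an assumption. ROC AUC and the Brier score need predicted probabilities, so they are not covered, and the 3x3 matrix is invented for illustration.

```python
import math


def metrics_from_confusion(cm: list[list[int]]) -> dict[str, float]:
    """Summary metrics from a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    Precision, recall, and F1 are macro-averaged (unweighted mean over classes).
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    true_counts = [sum(cm[i]) for i in range(k)]                       # row sums
    pred_counts = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums

    precisions, recalls, f1s = [], [], []
    for c in range(k):
        p = cm[c][c] / pred_counts[c] if pred_counts[c] else 0.0
        r = cm[c][c] / true_counts[c] if true_counts[c] else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)

    accuracy = sum(cm[c][c] for c in range(k)) / total
    # Cohen's kappa: observed agreement corrected for chance agreement,
    # where chance agreement comes from the row and column marginals.
    chance = sum(true_counts[c] * pred_counts[c] for c in range(k)) / total**2
    kappa = (accuracy - chance) / (1 - chance)
    # Geometric mean of per-class recalls; it drops to 0 if any class is never recalled.
    g_mean = math.prod(recalls) ** (1 / k)

    return {"accuracy": accuracy, "precision": sum(precisions) / k,
            "recall": sum(recalls) / k, "f1": sum(f1s) / k,
            "kappa": kappa, "g_mean": g_mean}


# Invented 3-class matrix (negative, neutral, positive), not the study's data.
toy = [[8, 1, 1],
       [2, 6, 2],
       [0, 1, 9]]
m = metrics_from_confusion(toy)
print(round(m["accuracy"], 4), round(m["kappa"], 4))  # 0.7667 0.65

# Accuracy implied by LR's BoW confusion matrix: correct / total.
print(round(18401 / 21071, 4))  # 0.8733
```

Kappa is lower than accuracy here because part of the raw agreement would be expected by chance given how often each class is predicted.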

Outcomes With TF-IDF Features

Switching from BoW to TF-IDF features lowers performance: LR drops to 81% accuracy, with DT close behind at 80%. KNC again lags at 65%, and SVM remains the weakest model at 48%. The confusion matrices show LR still in the lead with 17,220 correct predictions.
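TF-IDF reweights the BoW counts so that terms appearing in many documents count for less. This sketch follows the smoothed formula that scikit-learn's `TfidfVectorizer` uses by default, idf(t) = ln((1 + n) / (1 + df(t))) + 1, followed by L2 normalization of each row. Whether the study kept these defaults is an assumption, and the `tfidf` function is hypothetical.

```python
import math
from collections import Counter


def tfidf(docs: list[str]) -> tuple[list[str], list[list[float]]]:
    """Return (vocabulary, L2-normalized TF-IDF rows) for whitespace-tokenized docs."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    # Smoothed IDF: ln((1 + n) / (1 + df)) + 1, so no term gets zero weight.
    idf = {tok: math.log((1 + n) / (1 + df[tok])) + 1 for tok in vocab}

    rows = []
    for toks in tokenized:
        counts = Counter(toks)  # raw term frequency
        row = [counts[tok] * idf[tok] for tok in vocab]
        norm = math.sqrt(sum(v * v for v in row))
        rows.append([v / norm if norm else 0.0 for v in row])  # L2-normalize
    return vocab, rows


vocab, rows = tfidf(["fake video fake", "real video"])
print(vocab)  # ['fake', 'real', 'video']
print([[round(v, 3) for v in row] for row in rows])
```

In the toy input, `video` appears in both documents and gets idf = 1, so it is down-weighted relative to `fake` and `real`, which each appear in only one document.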

The accompanying plots show the same pattern: DT and LR perform well, while KNC and SVM trail on every metric.

Outcomes With Word Embedding Features

With word-embedding features, the DL models perform strongly: Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) both reach 94% accuracy. Word embeddings give each token a dense vector representation, and the gating mechanisms of LSTM and GRU let these models capture long-term dependencies in text while mitigating the vanishing-gradient problem. LSTM and GRU also lead on the other evaluation metrics, with the simple Recurrent Neural Network (RNN) trailing slightly at 93% accuracy.
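To show what the gating does, here is a minimal, untrained GRU cell in pure Python, fed by an embedding lookup. It is an illustration of the standard GRU equations with biases omitted, not the study's Keras model. The weights and embedding table are random, and the vocabulary and dimensions are hypothetical. Conventions differ on whether z or 1 - z weights the previous state; this sketch uses h_t = (1 - z) * h_{t-1} + z * candidate.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> list[list[float]]:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """Minimal GRU cell with random (untrained) weights and no biases."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        # W_* multiply the current input x_t; U_* multiply the previous state h_{t-1}.
        self.W_z = rand_matrix(hidden_dim, input_dim, rng)
        self.U_z = rand_matrix(hidden_dim, hidden_dim, rng)
        self.W_r = rand_matrix(hidden_dim, input_dim, rng)
        self.U_r = rand_matrix(hidden_dim, hidden_dim, rng)
        self.W_h = rand_matrix(hidden_dim, input_dim, rng)
        self.U_h = rand_matrix(hidden_dim, hidden_dim, rng)

    def step(self, x: list[float], h: list[float]) -> list[float]:
        z = [sigmoid(a + b) for a, b in zip(matvec(self.W_z, x), matvec(self.U_z, h))]  # update gate
        r = [sigmoid(a + b) for a, b in zip(matvec(self.W_r, x), matvec(self.U_r, h))]  # reset gate
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        candidate = [math.tanh(a + b)
                     for a, b in zip(matvec(self.W_h, x), matvec(self.U_h, reset_h))]
        # Where z is near 0 the old state is copied forward almost unchanged,
        # which helps preserve information across many time steps.
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, candidate)]

    def run(self, sequence: list[list[float]]) -> list[float]:
        h = [0.0] * self.hidden_dim
        for x in sequence:
            h = self.step(x, h)
        return h


# Embedding lookup: each token id selects one row of a (random) embedding table.
vocab = {"this": 0, "deepfake": 1, "looks": 2, "real": 3}
embedding = rand_matrix(len(vocab), 4, random.Random(1))
sequence = [embedding[vocab[tok]] for tok in "this deepfake looks real".split()]

cell = GRUCell(input_dim=4, hidden_dim=3)
final_state = cell.run(sequence)
print(len(final_state), final_state)
```

In a trained classifier the final state would feed a dense softmax layer over the three sentiment classes; training is what makes the gates learn when to keep or overwrite the state.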

The plots confirm these findings, with LSTM and GRU standing out for their high precision and F1 scores.

Results With Novel Transfer Features

The novel transfer learning features yield even stronger results. LR leads with 97% accuracy, while DT, SVM, and KNC follow closely at 96%. Every ML model improves markedly over its BoW and TF-IDF results (SVM, for example, rises from 43% and 48% to 96%), which indicates that the novel feature extraction method benefits ML models far more than traditional techniques like BoW and TF-IDF.

Results Using Proposed LGR Model

The study then introduces a hybrid model, LGR, that combines LSTM, GRU, and RNN. LGR achieves 99% accuracy, the highest in the study, and its precision, recall, and F1 score are similarly high.

Cross-Validation Results

K-fold cross-validation supports the robustness of the proposed approach. LR consistently outperforms the other ML models regardless of the features used, while KNC and DT give satisfactory results.
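K-fold cross-validation splits the data into k folds and, in turn, holds each fold out for testing while training on the rest. The sketch below builds contiguous, unshuffled folds sized the way scikit-learn's `KFold` sizes them (the first n mod k folds get one extra sample). The study's value of k and whether it shuffled or stratified are not stated here, and `evaluate` is a hypothetical callback that would fit a model on the training indices and score it on the test indices. The toy labels and majority-class "model" are invented.

```python
from collections import Counter


def k_fold_indices(n_samples: int, k: int) -> list[tuple[list[int], list[int]]]:
    """Return (train_indices, test_indices) pairs for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    base, extra = divmod(n_samples, k)
    fold_sizes = [base + 1 if i < extra else base for i in range(k)]
    splits, start = [], 0
    for size in fold_sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        splits.append((train_idx, test_idx))
        start = stop
    return splits


def cross_validate(n_samples, k, evaluate):
    """Average evaluate(train_idx, test_idx) over all folds."""
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores), scores


# Toy labels and a majority-class baseline standing in for a real classifier.
labels = [0, 0, 1, 0, 1, 0, 0, 1, 0, 0]


def majority_baseline(train_idx, test_idx):
    majority = Counter(labels[i] for i in train_idx).most_common(1)[0][0]
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)


print([len(test) for _, test in k_fold_indices(10, 3)])  # [4, 3, 3]
mean_score, fold_scores = cross_validate(len(labels), 3, majority_baseline)
print(round(mean_score, 4))  # 0.6944
```

Reporting the per-fold scores alongside the mean shows how stable a model is across splits, which is what a robustness claim rests on.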

Computational Cost Analysis

The computational cost analysis shows that KNC trains efficiently on TF-IDF features, but its training time increases with BoW features, so computational demands depend on the feature extraction approach.

Statistical Significance Analysis

To validate the proposed LGR approach, paired t-tests compare it against SVM, DT, KNC, LR, LSTM, GRU, and RNN. The resulting p-values are very low, indicating that LGR's advantage over each of these models is statistically significant.
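A paired t-test compares two models evaluated on the same splits by testing whether the mean of their per-split score differences is zero. The sketch computes only the t statistic and degrees of freedom with the standard library; the p-value then comes from the t distribution, for example via `scipy.stats.ttest_rel`. What the study paired (folds, runs, or something else) is not specified here, so pairing over folds is an assumption, and the per-fold accuracies below are invented.

```python
import math
import statistics


def paired_t_statistic(a: list[float], b: list[float]) -> tuple[float, int]:
    """Return (t, degrees of freedom) for a paired t-test on samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation, n - 1 denominator
    if sd == 0:
        raise ValueError("differences are constant; t is undefined")
    # t = mean difference divided by its standard error.
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1


# Invented per-fold accuracies for two models (not the study's numbers).
model_a = [0.99, 0.98, 0.99, 0.99, 0.98]
model_b = [0.94, 0.93, 0.95, 0.94, 0.93]
t, dof = paired_t_statistic(model_a, model_b)
print(round(t, 2), dof)  # 24.0 4
```

Pairing matters: because both models see the same folds, fold-to-fold difficulty cancels out in the differences, which makes the test more sensitive than comparing two independent sets of scores.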

Error Rate Analysis

An error-rate analysis of the ML models shows that the proposed transfer features increase the proportion of correct predictions. With the new features, LR's error rate is substantially lower than with traditional techniques, further supporting the approach.

Ablation Study Analysis

The ablation study shows that adding the transfer features substantially raises model accuracy over the configurations without them, highlighting the value of this feature extraction step.

State-of-the-Art Comparisons

A comparison with existing state-of-the-art methods shows that the proposed approach achieves the highest accuracy among them for deepfake sentiment analysis.

Practical Deployment for Deepfake Content Detection

Deployed in practice, this sentiment analysis model could monitor emotional responses to fabricated content, detect harmful trends in real time, and act as an early warning system for malicious deepfake content, helping to foster a safer digital ecosystem.

Ethical Considerations

The methodology follows ethical research standards: it uses publicly available data and anonymizes individuals to protect their privacy, and the study commits to guarding against data bias and misrepresentation.

Limitations

Despite these advances, the study has several limitations: imbalance in the dataset, computational costs that may hinder real-time use, and a narrow range of tested languages and platforms.

Future Work

Future work could pivot towards multi-modal integration, sarcasm detection, cross-lingual models, and better explainability in AI-powered sentiment analysis, enabling more nuanced interpretation and broader application to deepfake-related content.

This structured examination of ML and DL models illustrates the dynamic field of sentiment analysis, particularly in grappling with the challenges posed by deepfake content, and paves the way for further innovations and enhancements in the domain.