Vision-Based Gaze Estimation: A Comprehensive Overview

Gaze estimation, the task of determining where a person is looking, has advanced considerably over the past few years. Researchers and engineers have developed techniques that draw on computer vision, deep learning, and physiological signals. This article surveys the state of the art in gaze estimation, drawing on recent literature.

Understanding Gaze Estimation

At its core, gaze estimation means inferring a person's gaze direction, or the point they are looking at, from visual input. Common approaches fall into two broad categories: appearance-based methods and geometric methods. Appearance-based methods estimate gaze from features extracted from images, whereas geometric methods rely on measurements of the eye's anatomy and geometry.
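To make the notion of a "point of gaze" concrete, the sketch below intersects a gaze ray with a planar surface such as a screen. It assumes that an eye position and a gaze direction are already available from some upstream estimator; the function name `point_of_gaze`, the coordinate frame, and the units (metres) are illustrative choices, not taken from any of the cited works.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def point_of_gaze(eye: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Intersect the gaze ray eye + t * direction (t >= 0) with a plane.

    Returns the 3D intersection point, or None when the ray is parallel
    to the plane or the plane lies behind the eye.
    """
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        return None  # gaze ray runs parallel to the surface
    diff = (plane_point[0] - eye[0],
            plane_point[1] - eye[1],
            plane_point[2] - eye[2])
    t = dot(diff, plane_normal) / denom
    if t < 0:
        return None  # surface is behind the viewer
    return (eye[0] + t * direction[0],
            eye[1] + t * direction[1],
            eye[2] + t * direction[2])


# Hypothetical setup: screen is the plane z = 0, eye sits 0.6 m in front of it.
hit = point_of_gaze(eye=(0.0, 0.0, 0.6),
                    direction=(0.1, -0.05, -1.0),
                    plane_point=(0.0, 0.0, 0.0),
                    plane_normal=(0.0, 0.0, 1.0))
print(hit)
```

In a real system the eye position and screen pose would come from camera calibration, which this sketch leaves out.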

Appearance-Based Gaze Estimation

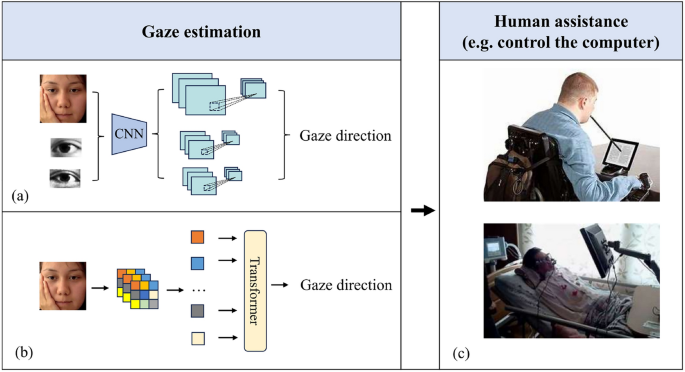

Cheng et al. (2024) provide an extensive overview of appearance-based gaze estimation with deep learning. Their review emphasizes convolutional neural networks (CNNs) combined with more advanced architectures that improve performance across varied lighting conditions and head poses. They also benchmark against existing models and report that contemporary deep learning methods perform better.
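Benchmarks of this kind are commonly reported as the angle between the predicted and the ground-truth gaze direction. The sketch below shows one way to compute that angular error. The pitch/yaw-to-vector convention is an assumption (conventions differ between datasets), and the function names are illustrative rather than taken from the cited review.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def angles_to_vector(pitch: float, yaw: float) -> Vec3:
    """Convert (pitch, yaw) in radians to a unit gaze vector.

    Assumed convention: the camera looks along +z, so a gaze aimed
    straight at the camera maps to (0, 0, -1).
    """
    return (-math.cos(pitch) * math.sin(yaw),
            -math.sin(pitch),
            -math.cos(pitch) * math.cos(yaw))


def angular_error_deg(predicted: Vec3, target: Vec3) -> float:
    """Angle in degrees between two non-zero 3D gaze vectors."""
    dot = sum(p * t for p, t in zip(predicted, target))
    norm = (math.sqrt(sum(p * p for p in predicted))
            * math.sqrt(sum(t * t for t in target)))
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Hypothetical prediction that is off by 10 degrees of yaw.
truth = angles_to_vector(0.0, 0.0)
guess = angles_to_vector(0.0, math.radians(10.0))
print(angular_error_deg(guess, truth))
```

Averaging this value over a test set gives a mean angular error that can be compared across models.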

In an earlier review, Wang et al. (2021) examined several vision-based gaze estimation methodologies and discussed the limitations of traditional methods in dynamic environments. Their analysis highlights a shift toward datasets that cover a range of ethnicities, lighting conditions, and head orientations.

Geometric Methods and Physiological Gaze Estimation

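The geometric approach focuses on the anatomical structure of the eye. Physiological approaches, by contrast, measure signals produced by the eye itself rather than its image. Zeng et al. (2023) proposed a robust gaze estimation method based on electrooculogram (EOG) features, which are derived from the electrical potential recorded by electrodes placed on the skin around the eyes. Their work emphasizes strategic electrode placement to optimize gaze tracking in real-time applications, such as assistive technologies for people with disabilities.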

This intersection between gaze estimation and physiological metrics is particularly important. Using EOG can improve the accuracy of gaze estimation, especially for people with disabilities who rely on assistive technologies (Edughele et al., 2022). Blending appearance-based and geometric approaches, together with physiological signals, opens new avenues for hybrid systems that improve accuracy and reliability.
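As a rough illustration of how an EOG signal might be turned into a gaze angle, the sketch below fits a straight line between electrode voltage and known target angles recorded during a calibration routine. It assumes the relationship is approximately linear over a moderate range of eye rotation; that linearity, the calibration values, and the function names are assumptions for illustration and do not reproduce the method of Zeng et al. (2023).

```python
from typing import Sequence, Tuple


def fit_linear_calibration(voltages_uv: Sequence[float],
                           angles_deg: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of angle = gain * voltage + offset.

    voltages_uv: EOG amplitudes (microvolts) recorded while the user
                 fixates targets at the known angles in angles_deg.
    Returns (gain in degrees per microvolt, offset in degrees).
    """
    n = len(voltages_uv)
    if n < 2 or n != len(angles_deg):
        raise ValueError("need at least two paired calibration samples")
    mean_v = sum(voltages_uv) / n
    mean_a = sum(angles_deg) / n
    var_v = sum((v - mean_v) ** 2 for v in voltages_uv)
    if var_v == 0:
        raise ValueError("calibration voltages must not all be equal")
    cov = sum((v - mean_v) * (a - mean_a)
              for v, a in zip(voltages_uv, angles_deg))
    gain = cov / var_v
    offset = mean_a - gain * mean_v
    return gain, offset


def voltage_to_angle(voltage_uv: float, gain: float, offset: float) -> float:
    """Apply the fitted calibration to a new EOG sample."""
    return gain * voltage_uv + offset


# Invented calibration data: 15 uV per degree plus a 25 uV baseline offset.
targets_deg = [-20.0, -10.0, 0.0, 10.0, 20.0]
readings_uv = [-275.0, -125.0, 25.0, 175.0, 325.0]
gain, offset = fit_linear_calibration(readings_uv, targets_deg)
print(voltage_to_angle(100.0, gain, offset))
```

Real EOG signals drift over time, so a deployed system would likely need periodic recalibration or drift compensation, which is omitted here.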

Applications of Gaze Estimation

Human-Computer Interaction

One prominent application of gaze estimation is human-computer interaction (HCI). Dondi and Porta (2023) discuss how gaze-based interaction can enhance the user experience in museum exhibitions by letting visitors engage with content using their gaze. Such applications improve interactivity and also offer a way to gather visitor insights in real time.

Moreover, gaze metrics can inform the design of more intuitive and responsive interfaces. For instance, gaze-aware displays, which are increasingly used across sectors, allow a system to adapt to the user's interest and engagement.
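One common interaction pattern for gaze-aware displays is dwell-based selection: an item activates once the gaze has rested on it for long enough. The sketch below is a minimal version of that idea. The `Region` class, the `detect_dwell_selections` function, and the 0.8 second threshold are hypothetical and not drawn from Dondi and Porta (2023).

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Region:
    """Axis-aligned rectangle on the display, in display coordinates."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def detect_dwell_selections(samples: Iterable[Tuple[float, float, float]],
                            regions: List[Region],
                            dwell_s: float = 0.8) -> List[Tuple[float, str]]:
    """Return (timestamp, region name) each time a dwell completes.

    samples: (timestamp_s, x, y) gaze points in display coordinates,
             ordered by time. Each continuous visit to a region fires
             at most once.
    """
    selections: List[Tuple[float, str]] = []
    current: Optional[str] = None
    entered_at = 0.0
    fired = False
    for t, x, y in samples:
        hit = next((r.name for r in regions if r.contains(x, y)), None)
        if hit != current:
            # Gaze moved to a different region (or off all regions).
            current, entered_at, fired = hit, t, False
        elif hit is not None and not fired and t - entered_at >= dwell_s:
            selections.append((t, hit))
            fired = True
    return selections
```

The same per-region dwell durations could also be logged as a simple engagement measure, although a real deployment would first need to smooth noisy gaze samples.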

Autonomous Driving

Another growing application area is autonomous driving. Sharma and Chakraborty (2024) reviewed how understanding driver gaze behavior can inform safety protocols and enhance vehicle automation. By analyzing where drivers focus their attention, systems can better anticipate potential hazards and mitigate risk by alerting the driver or taking over control when necessary.
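A simple form of this kind of monitoring is an eyes-off-road timer that raises an alert when the driver's gaze has stayed outside a forward-facing cone for too long. The sketch below illustrates the logic only; the angle limits, the 2 second threshold, and the function name are placeholder assumptions, not values from Sharma and Chakraborty (2024).

```python
from typing import Iterable, List, Optional, Tuple


def off_road_alerts(samples: Iterable[Tuple[float, float, float]],
                    max_yaw_deg: float = 20.0,
                    max_pitch_deg: float = 15.0,
                    max_off_road_s: float = 2.0) -> List[float]:
    """Return timestamps at which an eyes-off-road alert would fire.

    samples: (timestamp_s, yaw_deg, pitch_deg) ordered by time, where
             (0, 0) is assumed to mean looking straight ahead at the road.
    One alert is raised per continuous off-road episode.
    """
    alerts: List[float] = []
    off_since: Optional[float] = None
    alerted = False
    for t, yaw, pitch in samples:
        on_road = abs(yaw) <= max_yaw_deg and abs(pitch) <= max_pitch_deg
        if on_road:
            # Gaze returned to the road: reset the episode.
            off_since, alerted = None, False
            continue
        if off_since is None:
            off_since = t
        if not alerted and t - off_since >= max_off_road_s:
            alerts.append(t)
            alerted = True
    return alerts
```

A production system would likely treat mirror checks and other legitimate glances separately, which this sketch does not attempt.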

Virtual Reality and Gaming

Virtual reality (VR) systems continue to evolve, and gaze estimation plays a key role in enhancing immersion. Liu and Qin (2022) examine how gaze tracking can facilitate self-position estimation in virtual environments. VR experiences that respond dynamically to the user's gaze could substantially change gaming and training simulations.

Future of Gaze Estimation

Gaze estimation continues to be shaped by technological advances and growing datasets. Systems are becoming more sophisticated, combining deep learning algorithms with 3D modeling techniques to improve robustness.

Researchers are exploring a range of architectures, including transformer networks, to improve gaze tracking accuracy. Cheng and Lu (2023) introduce the DVGaze model, which shows the potential of dual-view setups for accurate gaze estimation across different contexts.

Meanwhile, Zhao et al. (2024) go a step further with the Gaze-Swin hybrid network. By combining CNNs with transformer mechanisms, it aims to exploit both spatial and contextual information efficiently.
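The transformer side of such hybrids rests on self-attention, in which every feature token is re-expressed as a weighted mix of all tokens. The sketch below shows plain scaled dot-product self-attention over a handful of feature vectors that stand in for cells of a CNN feature map. It uses identity query, key, and value projections for brevity and is not the Gaze-Swin architecture, which this article does not describe in detail.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity Q/K/V projections.

    tokens: non-empty list of equal-length feature vectors. A trained
            model would apply learned projections before this step.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs: List[Vector] = []
    for query in tokens:
        # Similarity of this token to every token, including itself.
        scores = [scale * sum(q * k for q, k in zip(query, key))
                  for key in tokens]
        weights = softmax(scores)
        # Weighted average of all tokens forms the contextualised output.
        outputs.append([sum(w * value[i] for w, value in zip(weights, tokens))
                        for i in range(dim)])
    return outputs


# Hypothetical 2D features for three spatial cells.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(features):
    print(row)
```

Each output row blends information from all three cells, which is the sense in which attention adds context to the local features a CNN produces.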

Together, these methods and applications point to a growing field with room for innovation. As gaze estimation technology becomes more widespread, its implications for HCI, automotive safety, VR, and other areas are likely to grow.

In summary, advances in gaze estimation sit at the intersection of understanding human behavior and technological innovation. With continued research and development, gaze estimation is likely to play an increasingly influential role across many industries.