Enhanced Fake News Detection through Dual-Stream Feature Extraction

The spread of fake news on social media makes it difficult to separate credible information from misinformation. To address this problem, researchers have proposed a dual-stream feature extraction methodology that combines textual representation learning with graph-based modeling of social context. The approach aims to detect fake news more effectively than traditional methods.

Overview of the Proposed Methodology

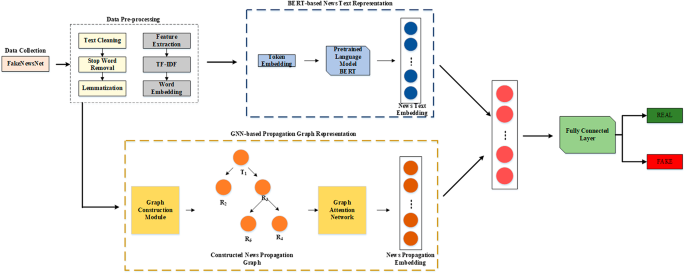

The proposed system integrates two independent streams. The first stream focuses on text analysis, while the second stream examines the social context surrounding the news articles. This dual approach not only enables a deeper understanding of the content itself but also considers how social interactions might amplify or undermine that content. The workflow of the study is depicted in a diagram (see Fig. 1), illustrating the entire process from data preprocessing to classification.

Data Collection

To build a robust foundation for the study, the researchers used the FakeNewsNet dataset, which aggregates news articles, social media interactions, and source metadata. The dataset includes article content, authorship details, and multimedia elements, which makes it well suited to analyzing the linguistic patterns of fake news narratives.

Additionally, the dataset includes social context factors, like user profiles, recent posts, and follower connections. These aspects are crucial for analyzing how fake news propagates through social networks, allowing for a comprehensive study that highlights both content and social behavior.

Data Pre-processing

Once the dataset is collected, it undergoes a thorough preprocessing phase to ensure its quality and relevance for fake news detection. This initial step is critical in cleaning the text and removing noise, which can obscure meaningful patterns. Techniques employed in this phase include:

- Stop Word Removal: This process filters out common words that provide little semantic value for the detection algorithms. For example, removing words like "the," "and," or "is" allows the model to focus on the more substantial content of the articles.

- Lemmatization: This technique reduces words to their base or dictionary form, ensuring that variations of a word are treated as the same entity. It enhances model generalization by recognizing that different forms of a word (such as “claims,” “claiming,” or “claimed”) share the same underlying meaning.

- Feature Extraction: Following the cleaning of the text, the study employs methods like TF-IDF (Term Frequency-Inverse Document Frequency) and word embeddings to transform the cleaned text into structured numerical representations suitable for machine learning algorithms.
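The three steps above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the stop-word set, the lemma lookup table, and the example sentences are made up, and the TF-IDF variant shown (relative term frequency times log(N / df)) is one common formulation, not necessarily the one used in the study.

```python
import math
import re
from collections import Counter

# Tiny illustrative resources (assumptions): a real pipeline would use a
# full stop-word list and a dictionary-based lemmatizer.
STOP_WORDS = {"the", "and", "is", "a", "an", "of", "to", "in", "that"}
LEMMA_TABLE = {"claims": "claim", "claiming": "claim", "claimed": "claim"}


def preprocess(text):
    """Lowercase, tokenize, drop stop words, and map tokens to lemmas."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [LEMMA_TABLE.get(t, t) for t in tokens if t not in STOP_WORDS]


def tfidf(docs):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


# Hypothetical example articles.
articles = [
    "The senator claimed that the vaccine is dangerous",
    "The report claims the vaccine is safe",
]
cleaned = [preprocess(a) for a in articles]
weights = tfidf(cleaned)
```

Terms that occur in every document, such as "claim" and "vaccine" here, receive a weight of zero, while terms that distinguish one article from the other keep a positive weight.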

Textual Representation Using BERT

At the core of the text analysis stream is BERT (Bidirectional Encoder Representations from Transformers), a model that generates deep contextual embeddings for the tokens in the input text. BERT is widely used because it captures the nuances and complexities of language.

- Input Representation: BERT processes a sequence of tokens derived from the articles, creating rich embeddings based on token, segment, and positional information.

- Transformer Layers: BERT’s architecture comprises multiple layers of transformers, incorporating mechanisms like self-attention. This allows the model to evaluate relationships among tokens and grasp the overall context of sentences, capturing dependencies even between non-adjacent words.

- Output Representation: The resulting contextual embeddings for each token serve as the model’s final output, providing a meaningful representation of the text.
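To make the input representation and self-attention steps concrete, here is a minimal pure-Python sketch. It is a simplification, not BERT itself: the embeddings are made-up two-dimensional toy values, the function names are hypothetical, and the learned query, key, and value projections and multiple heads of a real Transformer layer are replaced by identity projections in a single head.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def input_representation(token_emb, segment_emb, position_emb):
    """Element-wise sum of token, segment, and position embeddings."""
    return [
        [t + s + p for t, s, p in zip(tok, seg, pos)]
        for tok, seg, pos in zip(token_emb, segment_emb, position_emb)
    ]


def self_attention(vectors):
    """Single-head scaled dot-product attention with identity projections."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score the query against every token, including non-adjacent ones.
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # Each output is an attention-weighted average of all token vectors.
        outputs.append([
            sum(w * value[k] for w, value in zip(weights, vectors))
            for k in range(dim)
        ])
    return outputs


# Toy 2-dimensional embeddings for a 3-token input (values are made up).
token_emb = [[0.1, 0.3], [0.7, 0.2], [0.4, 0.9]]
segment_emb = [[0.0, 0.1]] * 3  # all tokens belong to the same segment
position_emb = [[0.00, 0.01], [0.01, 0.02], [0.02, 0.03]]

inputs = input_representation(token_emb, segment_emb, position_emb)
contextual = self_attention(inputs)
```

Because every token attends to every other token, each vector in `contextual` mixes information from the whole sequence, which is the property the text stream relies on.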

Graph-Based Context Representation

In addition to textual features, the methodology incorporates a social context model using Graph Neural Networks (GNNs). This approach facilitates a deeper understanding of how fake news propagates through social networks.

- Graph Construction: A heterogeneous graph is built to depict relationships between various entities involved in news dissemination, such as articles, users, and news sources. Each node in this graph has distinct attributes, while edges represent various forms of interactions.

- Learning Node Embeddings: Graph Attention Networks (GATs) are utilized to generate node embeddings that emphasize important connections based on user interactions, thus identifying influential nodes that may perpetuate misinformation.

- Graph Transformers: To capture global context, Graph Transformers let the model draw on long-range dependencies across the graph, which helps identify misleading narratives that span different entities.
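The graph construction and GAT steps can be illustrated with a small pure-Python sketch. Everything specific here is an assumption for illustration: the node IDs, edge types, feature values, and attention vector are invented, the GAT weight matrix is fixed to the identity, and only a single attention head is shown. The Graph Transformer stage is not covered.

```python
import math

# Hypothetical toy heterogeneous graph: typed nodes, typed edges.
node_type = {"a1": "article", "u1": "user", "u2": "user", "s1": "source"}
features = {
    "a1": [1.0, 0.0],
    "u1": [0.5, 0.5],
    "u2": [0.0, 1.0],
    "s1": [1.0, 1.0],
}
edges = [
    ("u1", "shares", "a1"),
    ("u2", "shares", "a1"),
    ("s1", "publishes", "a1"),
]


def neighbors(node):
    """Incoming neighbours of a node plus a self-loop, as in GAT."""
    return [node] + [src for src, _, dst in edges if dst == node]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_update(node, attn_vector):
    """One single-head GAT aggregation with the weight matrix set to identity."""
    h_i = features[node]
    nbrs = neighbors(node)
    # e_ij = LeakyReLU(a . [h_i || h_j]); list '+' concatenates the vectors.
    scores = [
        leaky_relu(sum(a * x for a, x in zip(attn_vector, h_i + features[j])))
        for j in nbrs
    ]
    # Softmax over the neighbourhood gives the attention coefficients.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # New embedding: attention-weighted sum of neighbour features.
    new_h = [
        sum(alpha * features[j][k] for alpha, j in zip(alphas, nbrs))
        for k in range(len(h_i))
    ]
    return dict(zip(nbrs, alphas)), new_h


attn_vector = [0.1, 0.2, 0.3, 0.4]  # would be learned during training
alphas, h_a1 = gat_update("a1", attn_vector)
```

In this toy setup the source node `s1` receives the largest attention coefficient for article `a1`, which shows how the mechanism can single out the connections that matter most for a given node.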

Feature Fusion & Classification

The final component of the proposed methodology is the fusion of the textual and graph embeddings into a unified representation. This multimodal fusion layer employs an attention mechanism that learns the relative importance of each feature set.

- Dynamic Attention Mechanism: By computing scalar weights, the model dynamically adapts the influence of textual and graph-based features, improving the discrimination capability during classification.

- Transformer-Based Classification: The fused representation is then fed into a Transformer-based classification model, which outputs a probability indicating whether a news article is likely to be true or fake.
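As a rough sketch of the fusion step, the snippet below computes one scalar attention weight per stream and forms a weighted sum of the two embeddings. Several things are assumptions: both embeddings are taken to have already been projected to the same dimension, the scoring vector and all numeric values are made up, and a simple logistic layer stands in for the Transformer-based classifier described above.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def fuse(text_vec, graph_vec, score_vec):
    """Attention-weighted sum of the text and graph embeddings."""
    # One scalar relevance score per stream.
    scores = [dot(score_vec, text_vec), dot(score_vec, graph_vec)]
    # Softmax turns the two scores into weights that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    w_text, w_graph = (e / total for e in exps)
    fused = [w_text * t + w_graph * g for t, g in zip(text_vec, graph_vec)]
    return (w_text, w_graph), fused


def classify(fused, weights, bias=0.0):
    """Logistic stand-in for the Transformer classifier; returns P(fake)."""
    return 1.0 / (1.0 + math.exp(-(dot(weights, fused) + bias)))


# Hypothetical embeddings and parameters (would be learned in practice).
text_vec = [0.2, 0.8, 0.1]
graph_vec = [0.9, 0.1, 0.4]
score_vec = [1.0, 0.5, -0.5]
clf_weights = [0.6, -0.4, 0.3]

(w_text, w_graph), fused = fuse(text_vec, graph_vec, score_vec)
p_fake = classify(fused, clf_weights)
```

With these toy values the graph stream scores higher than the text stream, so it contributes more to the fused vector; with trained parameters the balance would shift from article to article.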

The methodology thus combines textual indicators (such as misleading language) with social dynamics (such as suspicious engagement patterns) in a single model for fake news detection.

By integrating textual and social context features into one framework, the dual-stream model is designed to capture the relationships among news articles, users, and sources. This gives researchers and industry professionals a tool for identifying misinformation and limiting its spread.