Evaluation Metrics for Network Model Performance in Remote Sensing: A Detailed Examination

Assessing a network model that detects and analyzes the distinctive architectural attributes of Qiang villages requires a comprehensive set of evaluation metrics, since no single number captures both classification and localization quality. This section describes the metrics and procedures used in this study to obtain reliable, objective assessments.

The Confusion Matrix: A Fundamental Tool

At the heart of model evaluation is the confusion matrix, which tabulates the model’s predictions against the ground truth. For a binary task, the matrix contains four key elements:

- True Positive (TP): The model correctly predicts the positive class.

- True Negative (TN): The model correctly predicts the negative class.

- False Positive (FP): The model predicts the positive class when the actual label is negative.

- False Negative (FN): The model predicts the negative class when the actual label is positive.

Most of the metrics that follow are computed directly from these four counts.
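As a minimal sketch of how the four counts can be tallied, assume binary labels where 1 marks the positive class and 0 the negative class. The function name `confusion_counts` and the sample labels are illustrative assumptions, not taken from the study.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = positive, 0 = negative)."""
    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1  # positive correctly predicted
        elif truth == 0 and pred == 0:
            tn += 1  # negative correctly predicted
        elif truth == 0 and pred == 1:
            fp += 1  # predicted positive, actually negative
        else:
            fn += 1  # predicted negative, actually positive
    return tp, tn, fp, fn


# Illustrative labels only (not data from the study).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)  # 3 3 1 1
```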

Key Evaluation Metrics

1. Precision

Precision measures the accuracy of the positive predictions by taking the ratio of true positives to the total predicted positives. The formula is as follows:

$$

Precision = \frac{TP}{TP + FP}

$$

This metric is vital when false positives carry a high cost, such as in healthcare or security applications.

2. Recall

Recall reflects the model’s ability to identify all relevant instances in the dataset. It is defined as:

$$

Recall = \frac{TP}{TP + FN}

$$

A high recall indicates that the model successfully identifies a large portion of the actual positives, which is often crucial in contexts where missing a positive instance is critical.

3. F1 Score

The F1 Score balances precision and recall by taking their harmonic mean:

$$

F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}

$$

This metric is particularly useful when the class distribution is uneven, because it ignores true negatives and therefore is not inflated by a large negative class.
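The precision, recall, and F1 formulas above can be sketched as a single helper. The function name and the counts in the example are assumptions chosen for illustration; returning 0.0 when a denominator is zero is one common convention, not something the study specifies.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    # Guard against empty denominators (assumed convention: report 0.0).
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


# Illustrative counts only (not results from the study).
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
# precision=0.800 recall=0.667 f1=0.727
```

Note that F1 (0.727) sits closer to the lower of the two values than their arithmetic mean (0.733) would, which is the property that makes the harmonic mean penalize an imbalance between precision and recall.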

4. Intersection over Union (IoU)

IoU evaluates the overlap between the model’s predicted bounding box and the ground truth bounding box, emphasizing the accuracy of predicted locations:

$$

IoU = \frac{Area(GroundTruthBox \cap PredictionBox)}{Area(GroundTruthBox \cup PredictionBox)}

$$

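IoU ranges from 0 (no overlap) to 1 (identical boxes) and is especially relevant in spatial detection tasks, where a prediction is only useful if it is placed in the right location.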
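A minimal sketch of the IoU computation for two axis-aligned boxes follows. It assumes each box is given as `(x_min, y_min, x_max, y_max)`; the box format, function name, and coordinates are illustrative assumptions rather than details from the study.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp to zero when the boxes do not overlap.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
prediction = (5, 5, 15, 15)
print(round(box_iou(ground_truth, prediction), 4))  # 0.1429
```

Here the boxes share a 5 x 5 region, so the intersection is 25 and the union is 100 + 100 - 25 = 175, giving 25 / 175.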

5. Average Precision (AP)

AP extends precision and recall across varying confidence thresholds, measuring detection performance as the area under the smoothed precision-recall curve:

$$

AP = \int_{0}^{1} P_{smooth}(r) \, dr

$$

Because each recall level corresponds to a different confidence threshold, the integral summarizes performance over all thresholds rather than at a single operating point.
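The integral can be approximated numerically. The sketch below assumes one common smoothing choice, the monotone precision envelope (all-point interpolation); the study does not state which smoothing it uses. It also assumes each detection has already been marked as a true or false positive, for example by an IoU threshold. All names and sample values are illustrative.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the smoothed precision-recall curve for one class."""
    if num_ground_truth <= 0 or not scores:
        return 0.0

    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    tp_cum = fp_cum = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / num_ground_truth)
        precisions.append(tp_cum / (tp_cum + fp_cum))

    # Smooth: make precision non-increasing as recall grows (right to left).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Illustrative detections only: 4 predictions, 4 ground-truth objects.
scores = [0.9, 0.8, 0.7, 0.6]
flags = [True, False, True, True]
print(average_precision(scores, flags, num_ground_truth=4))  # 0.625
```

In this example one ground-truth object is never detected, so recall stops at 0.75 and the missing portion of the curve contributes nothing to the area.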

6. Mean Average Precision (mAP)

To summarize detection across multiple categories, mAP averages the AP for each class:

$$

mAP = \frac{\sum_{i=1}^{n} AP_{i}}{n}

$$

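Here n is the number of classes and AP_i is the average precision of class i. The result is a single score for overall detection performance, with every class weighted equally.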
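The averaging step is simple enough to sketch directly. The class names and AP values below are placeholders for illustration only; they are not classes or results from the study.

```python
def mean_average_precision(ap_per_class):
    """Unweighted mean of per-class AP values (dict: class name -> AP)."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Placeholder class names and AP values (hypothetical).
ap_per_class = {"class_a": 0.5, "class_b": 0.75, "class_c": 1.0}
print(mean_average_precision(ap_per_class))  # 0.75
```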

7. Accuracy

Accuracy assesses the overall correctness of the model’s predictions, counting both correctly predicted positives and correctly predicted negatives:

$$

Accuracy = \frac{TP + TN}{TP + TN + FP + FN}

$$

Although straightforward, accuracy can be misleading on imbalanced datasets, where a model that always predicts the majority class still scores highly.
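A small worked example shows why accuracy alone can mislead. It assumes a hypothetical dataset of 100 samples with only 5 positives and a model that predicts the negative class for everything; the numbers are illustrative, not from the study.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that are correct."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total > 0 else 0.0


# Hypothetical case: 5 positives, 95 negatives, model always says "negative".
tp, tn, fp, fn = 0, 95, 0, 5
acc = accuracy(tp, tn, fp, fn)
recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0

print(f"accuracy={acc:.2f} recall={recall:.2f}")
# accuracy=0.95 recall=0.00
```

The model never finds a single positive, yet it reports 95% accuracy, which is why accuracy is read alongside precision, recall, and F1.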

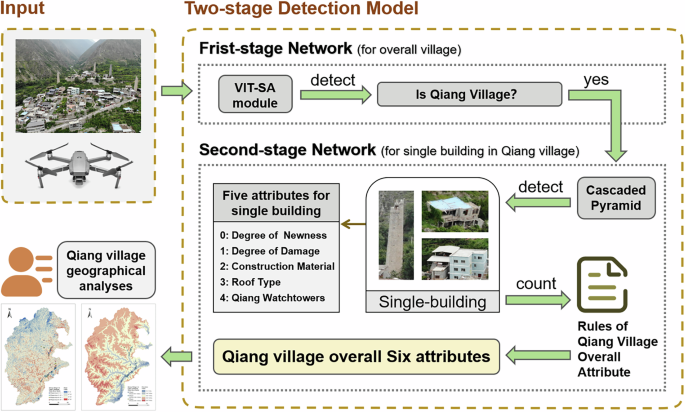

Model Training Configuration and Computational Costs

Our two-stage network employs a distributed training strategy, which is essential for handling the complexities of multi-label annotations. Training begins with independent optimization and is followed by a joint training phase that refines feature interactions. Detailed hyperparameters and computational costs are listed in the accompanying tables, providing transparency regarding resource utilization.

Comparative Analysis of Detection Methods

To compare our model fairly against established detection algorithms, we use the same datasets and experimental settings for every method. The baselines are:

- Sparse RCNN: A two-stage detector emphasizing computational efficiency through high-quality proposals.

- Anchor DETR: A Transformer-based detector that incorporates anchor-based queries to enhance localization accuracy.

- UNet3+: Originally designed for medical imaging yet informative in distinguishing architectural features.

- SRE-YOLOv8: Focused on UAV detection, adept at capturing multi-scale features in aerial imaging.

- IDE-YOLOv9: Designed for weather-adaptive detection with robust localization capabilities.

Each model is tested multiple times to reduce the influence of run-to-run fluctuation, so that the reported results are reliable.
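One way to summarize repeated runs is to report the mean and the sample standard deviation of a metric. This is an assumption about the aggregation, since the study does not say how the repeated runs are combined, and the scores below are placeholders rather than measured results.

```python
from statistics import mean, stdev


def summarize_runs(scores):
    """Return (mean, sample standard deviation) of a metric over repeated runs."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate the spread")
    return mean(scores), stdev(scores)


# Placeholder metric values from three hypothetical runs.
placeholder_scores = [0.5, 0.25, 0.75]
avg, spread = summarize_runs(placeholder_scores)
print(f"{avg:.3f} +/- {spread:.3f}")  # 0.500 +/- 0.250
```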

Results Overview from Qiang Village Detection

Our proposed model outperformed the compared methods on all evaluation metrics. For instance, it achieved a Precision of 98.1%, substantially higher than the competing models. It also performed strongly on IoU and F1 Score, which are crucial for tasks where spatial accuracy is paramount.

Visual outputs, including heat maps, provide intuitive insights into the model’s detection capabilities, highlighting areas of high target concentration relevant to Qiang village characteristics.

Single Building and Attribute Detection

In the second phase, the model transitions to analyzing individual buildings within the Qiang villages and their respective attributes. Attributes such as construction materials, degree of newness, and structural integrity are tagged and assessed rigorously. This multilayered detection approach showcases the model’s adeptness at handling overlapping structures and various building sizes.

The attribute-level results reveal the model’s strengths and areas for improvement. For instance, it excels at identifying construction material types like “rammed earth,” but it struggles to discern “stone masonry” and other closely resembling materials.

Ablation Studies: Dissecting Model Anatomy

To understand the contribution of each component within the network, rigorous ablation studies are conducted. Critical modules, such as the spatial attention mechanism, cascaded pyramid structure, and deconvolution layers, are removed to observe the resulting changes in performance.

The results emphasize the significance of the spatial attention module, which dramatically impacts attribute detection accuracy. This iterative analysis reaffirms the necessity for a tailored architecture capable of addressing intricate detection tasks.

Conclusion

The evaluation metrics and comparative analyses presented here demonstrate the efficacy of our proposed network model for detecting Qiang villages and building attributes. Each metric captures a different aspect of performance, from classification correctness to localization quality, in the context of architectural and environmental nuance. Together, the statistical evaluations and visual interpretations identify the model’s strengths and its potential areas for enhancement, paving the way for future developments in remote sensing and automated detection systems.