Main Structure of the Network for Diagnosing Mouth Cancer

Introduction to the Proposed Model

The burgeoning intersection of deep learning and healthcare is leading to innovative solutions for early disease detection. One notable application is the use of convolutional neural networks (CNNs) for diagnosing mouth cancer. The architecture of the proposed model, illustrated in Fig. 5, is designed to robustly classify images as cancerous or non-cancerous, providing a vital tool for medical professionals.

Architecture Overview

At the heart of this model is a 19-layer architecture that includes 4 convolutional layers and 3 max pooling layers. Successive convolutional layers extract increasingly complex features from the images. The max pooling layers reduce the spatial dimensions of the feature maps while keeping the strongest responses, so that later layers can focus on the most relevant aspects of the data.

The network also includes batch normalization layers with 8, 16, and 32 channels in the third, seventh, and eleventh layers, respectively. Batch normalization standardizes the activations passed to subsequent layers, normalizing each channel to zero mean and unit variance and then applying a learned scale and shift. This stabilizes training and makes learning more consistent.
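To make the layer positions concrete, the sketch below lays out one hypothetical 19-layer stack that matches the counts stated above: 4 convolutional layers, 3 max pooling layers, and the layers with 8, 16, and 32 channels at positions 3, 7, and 11, treated here as batch normalization layers. It then traces the feature-map shape through the stack. The 64 × 64 × 3 input, the 3 × 3 "same"-padded convolutions, the 2 × 2 stride-2 pooling, the 64-channel fourth block, and the ordering of the remaining layers are all assumptions, not details from the source.

```python
# Hypothetical 19-layer stack. The counts (4 conv, 3 max pooling) and the
# 8/16/32-channel normalization layers at positions 3, 7 and 11 follow the text;
# everything else (kernel sizes, input size, fourth block, layer order) is assumed.
LAYERS = [
    ("input", None),
    ("conv", 8),               # 3x3 kernel, 'same' padding (assumed)
    ("batchnorm", 8),          # layer 3
    ("relu", None),
    ("maxpool", None),         # 2x2 window, stride 2 (assumed)
    ("conv", 16),
    ("batchnorm", 16),         # layer 7
    ("relu", None),
    ("maxpool", None),
    ("conv", 32),
    ("batchnorm", 32),         # layer 11
    ("relu", None),
    ("maxpool", None),
    ("conv", 64),              # channel count assumed
    ("batchnorm", 64),
    ("relu", None),
    ("fc", 2),                 # two classes: cancerous / non-cancerous
    ("softmax", None),
    ("classification", None),  # cross-entropy output layer
]


def trace_shapes(layers, height=64, width=64, channels=3):
    """Return (layer_index, kind, shape) per layer; shape is (H, W, C) or (n_classes,)."""
    shape = (height, width, channels)
    trace = []
    for index, (kind, n) in enumerate(layers, start=1):
        if kind == "conv":
            shape = (shape[0], shape[1], n)                   # 'same' padding keeps H and W
        elif kind == "maxpool":
            shape = (shape[0] // 2, shape[1] // 2, shape[2])  # halves H and W
        elif kind == "fc":
            shape = (n,)                                      # flattens to n class scores
        # input, batchnorm, relu, softmax and classification keep the shape
        trace.append((index, kind, shape))
    return trace


for index, kind, shape in trace_shapes(LAYERS):
    print(f"{index:2d} {kind:<15} {shape}")
```

Under these assumptions the three pooling stages shrink a 64 × 64 input to 8 × 8 before the fully connected layer, while the channel count grows from 8 to 64.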

Optimization Procedures

To optimize the network, the model uses Stochastic Gradient Descent with Momentum (SGDM) with a learning rate of 1e-5, and cross-entropy as the loss function. The model was trained for 400 iterations over approximately 8 epochs (roughly 50 mini-batch updates per epoch), and training took 58 seconds.
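The two ingredients named above can be illustrated on a toy problem. The sketch below is a minimal pure-Python example, not the authors' code. It trains a one-feature logistic classifier with SGDM, using the common velocity form of the update (v ← μv − η∇L, then θ ← θ + v). For two classes, a softmax output with cross-entropy is equivalent to a single sigmoid output with binary cross-entropy, which is what the toy uses. The momentum of 0.9, the toy data, and the learning rate of 0.1 are assumptions. The source's rate of 1e-5 would barely move this small model in 400 steps.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy(p, y, eps=1e-12):
    """Binary cross-entropy for a predicted probability p and a label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def mean_loss(w, b, xs, ys):
    return sum(cross_entropy(sigmoid(w * x + b), y) for x, y in zip(xs, ys)) / len(xs)


def sgdm_step(params, grads, velocity, lr, momentum=0.9):
    """SGD with momentum, in place: v <- momentum * v - lr * g, then theta <- theta + v."""
    for i, g in enumerate(grads):
        velocity[i] = momentum * velocity[i] - lr * g
        params[i] += velocity[i]


def train(xs, ys, lr, momentum=0.9, iterations=400):
    params, velocity = [0.0, 0.0], [0.0, 0.0]  # [w, b] and their velocities
    for _ in range(iterations):
        w, b = params
        # Gradient of mean cross-entropy through a sigmoid: (p - y) * x for w, (p - y) for b.
        errs = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        gw = sum(e * x for e, x in zip(errs, xs)) / len(xs)
        gb = sum(errs) / len(xs)
        sgdm_step(params, [gw, gb], velocity, lr, momentum)
    return params


xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]
w, b = train(xs, ys, lr=0.1)
print(f"loss before: {mean_loss(0.0, 0.0, xs, ys):.4f}, after: {mean_loss(w, b, xs, ys):.4f}")
```

The velocity term accumulates past gradients, so consistent gradient directions build up speed while oscillating components partly cancel. This is the usual motivation for choosing SGDM over plain SGD.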

Layer Configuration and Model Testing

The architecture emerged from iterative testing. The initial network had 10 layers and reached a baseline accuracy of 70%. Layers were then added step by step while monitoring for overfitting, and the deeper network substantially improved predictive performance.

Training was performed on Windows 10 using two GeForce Titan GPUs in an SLI configuration and an Intel Core i7 processor. This setup shortened computation time, which made it practical to test many configurations before settling on the final architecture.

Performance Metrics

Performance analysis is a cornerstone of model validation. To rigorously evaluate the proposed architecture, five key metrics were employed:

- Precision: the proportion of positive (cancerous) predictions that are correct:

  \[
  \text{Precision} = \frac{TP}{TP + FP}
  \]

  where TP is the number of true positives and FP the number of false positives.

- Accuracy: the overall proportion of instances correctly classified as cancerous or non-cancerous:

  \[
  \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
  \]

  where TN is the number of true negatives and FN the number of false negatives.

- Specificity: the true negative rate, i.e. the proportion of negative instances that are correctly classified:

  \[
  \text{Specificity} = \frac{TN}{TN + FP}
  \]

- Sensitivity: also called recall or the true positive rate:

  \[
  \text{Sensitivity} = \frac{TP}{TP + FN}
  \]

- F1-score: the harmonic mean of precision and sensitivity, which balances the two:

  \[
  \text{F1-score} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}
  \]

Together, these metrics provide a comprehensive view of the model’s diagnostic capabilities.
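The five formulas can be checked directly from confusion-matrix counts. The helper below is a minimal sketch, and the name `classification_metrics` is hypothetical. It returns 0.0 instead of raising when a denominator is zero, which is a design choice rather than a standard convention.

```python
def _ratio(num, den):
    """Return num / den, or 0.0 when the denominator is zero (e.g. no positive predictions)."""
    return num / den if den else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Compute precision, accuracy, specificity, sensitivity and F1 from binary counts."""
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)        # recall / true positive rate
    specificity = _ratio(tn, tn + fp)        # true negative rate
    accuracy = _ratio(tp + tn, tp + tn + fp + fn)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "precision": precision,
        "accuracy": accuracy,
        "specificity": specificity,
        "sensitivity": sensitivity,
        "f1": f1,
    }


# Made-up counts for illustration only.
print(classification_metrics(tp=8, fp=2, tn=85, fn=5))
```

With these made-up counts, accuracy is 0.93 while sensitivity is only about 0.62. This shows why accuracy alone can be misleading when negative cases dominate a dataset.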

Dataset Utilization

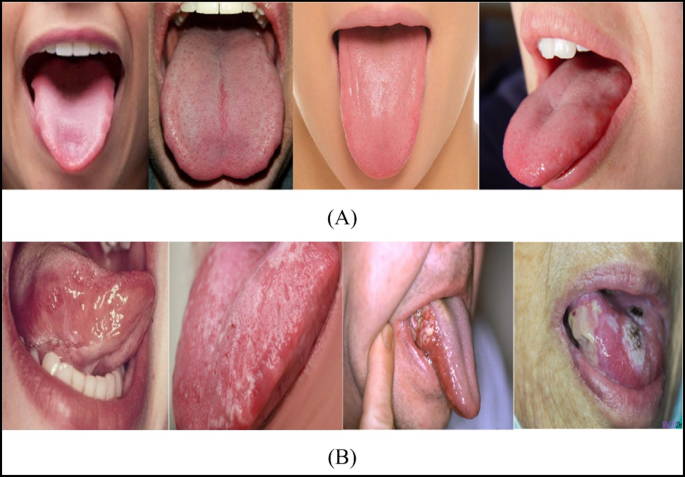

Model training used the Oral Cancer Images (OCI) dataset, split so that 80% of the data was allocated to training and 20% was held out for testing. Evaluating on held-out images that the model never saw during training gives a fairer estimate of how it will perform on new data.
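The source states only the 80/20 proportions. A stratified split is one reasonable way to implement them: it shuffles each class separately so that the training and test sets keep the same ratio of cancerous to non-cancerous images. The stratification, the fixed seed, and the helper name `stratified_split` are assumptions in the sketch below.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return sorted (train_indices, test_indices), splitting each class separately."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


# Hypothetical label list: 50 cancerous and 100 non-cancerous images.
labels = ["cancerous"] * 50 + ["non-cancerous"] * 100
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```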

Comparative Performance Analysis

The proposed CNN achieved an accuracy of 99.54%, outperforming the other contemporary models it was compared with, including Inception, AlexNet, and VGG19. For example, Inception reached 91.06% accuracy and AlexNet 83.44%. These comparisons indicate that the proposed configuration is a strong candidate for oral cancer detection on this dataset.

The proposed method also achieved a sensitivity of 95.73%, further indicating its reliability relative to the other tested architectures. These results are supported by more detailed statistical analyses, including confusion matrices for the training, validation, and test sets.

ROC Curve Analysis

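To visualize diagnostic performance, the Receiver Operating Characteristic (ROC) curve was generated, yielding a mean Area Under the Curve (AUC) of 0.6026. The ROC curve plots the true positive rate against the false positive rate across decision thresholds, making visible the trade-off between them that matters in diagnostic settings. The AUC summarizes this curve in a single number, where 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation.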
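The AUC can be computed without plotting. The area under the empirical ROC curve equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counting one half. The sketch below is a minimal pure-Python version of that rank-based formulation and is not the authors' evaluation code. The source does not say what the "mean" AUC averages over (for example classes, folds, or runs), so the function computes a single AUC of the kind such an average would be built from.

```python
def roc_auc(labels, scores):
    """AUC = P(score of a random positive > score of a random negative), ties count 1/2."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Label 1 = cancerous, 0 = non-cancerous; scores are hypothetical model outputs.
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))
```

The pairwise loop is O(P × N), which is fine for illustration. Sorting-based implementations compute the same value in O(n log n).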

Confusion Matrix Insights

The confusion matrices, presented in Fig. 6, show stable performance across the training, validation, and test sets. By reporting the counts of true and false positives and negatives, they make explicit where the model's correct and incorrect predictions fall.
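A confusion matrix is a tally of the four outcome types. The sketch below counts them from label lists and prints a 2 × 2 layout. The class names and the example predictions are hypothetical, and in practice the same tally would be repeated for each data split.

```python
def confusion_counts(y_true, y_pred, positive="cancerous"):
    """Count (TP, FP, TN, FN) for a binary task, treating `positive` as the positive class."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def format_matrix(tp, fp, tn, fn):
    """Lay the counts out with actual classes as rows and predicted classes as columns."""
    return (
        "            pred pos  pred neg\n"
        f"actual pos {tp:9d} {fn:9d}\n"
        f"actual neg {fp:9d} {tn:9d}"
    )


# Hypothetical predictions for one split.
y_true = ["cancerous", "cancerous", "non-cancerous", "non-cancerous", "cancerous"]
y_pred = ["cancerous", "non-cancerous", "non-cancerous", "cancerous", "cancerous"]
print(format_matrix(*confusion_counts(y_true, y_pred)))
```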

Training and Loss Evaluation

The training dynamics are shown in Figs. 8 and 9. The accuracy and loss curves indicate effective learning and show no clear sign of overfitting: training and validation accuracy converge steadily without significant divergence.
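"No divergence between training and validation curves" can be turned into an automatic check. One simple rule flags the first epoch at which validation loss starts rising for several consecutive epochs while training loss keeps falling. The rule, its `patience` of 3 epochs, and the helper name `overfitting_onset` are illustrative assumptions, not the monitoring procedure used in the study.

```python
def overfitting_onset(train_loss, val_loss, patience=3):
    """Return the epoch where validation loss began rising for `patience` consecutive
    epochs while training loss kept falling, or None if that never happens."""
    streak = 0
    for epoch in range(1, len(val_loss)):
        diverging = (val_loss[epoch] > val_loss[epoch - 1]
                     and train_loss[epoch] < train_loss[epoch - 1])
        streak = streak + 1 if diverging else 0
        if streak >= patience:
            return epoch - patience + 1  # first epoch of the diverging run
    return None


# Hypothetical per-epoch losses: validation loss turns upward from epoch 3.
train_loss = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val_loss = [1.0, 0.85, 0.7, 0.72, 0.75, 0.8, 0.9]
print(overfitting_onset(train_loss, val_loss))
```

Curves like those described for the proposed model, where both losses keep decreasing together, would return `None`.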

Addressing Limitations and Future Work

Despite its strong performance, this study has limitations. Its reliance on the OCI dataset alone may limit generalizability, so the findings should be validated on more diverse datasets. Moreover, the absence of explainable AI techniques limits interpretability, which is crucial for real-world adoption in clinical settings.

Future work should incorporate interpretability methods such as Grad-CAM, which highlights the image regions that most influence a prediction, to improve transparency and trust in clinical applications.
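Grad-CAM weights the feature maps of a late convolutional layer by how strongly the class score responds to them. A deep learning framework's automatic differentiation would supply the activations A^k and the gradients ∂y^c/∂A^k. Each weight α_k is the spatial average of its gradient map, and the heatmap is ReLU(Σ_k α_k A^k). The sketch below implements only this combination step on plain nested lists. Extracting activations and gradients from the proposed network is outside its scope. The final rescaling to [0, 1] is a common display convention rather than part of the definition.

```python
def grad_cam(activations, gradients):
    """Combine K feature maps A^k (each H x W) with gradients dy_c/dA^k into a heatmap."""
    h, w = len(activations[0]), len(activations[0][0])

    # alpha_k: global-average-pooled gradient for each feature map
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]

    # Weighted sum of the feature maps
    cam = [[0.0] * w for _ in range(h)]
    for alpha, a in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * a[i][j]

    # ReLU keeps only regions with a positive influence on the class score
    cam = [[max(v, 0.0) for v in row] for row in cam]

    # Rescale to [0, 1] for display when the map is not all zeros
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Two hypothetical 2x2 feature maps: the first raises the class score, the second lowers it.
activations = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(activations, gradients))
```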

Practical Deployment Considerations

Beyond its diagnostic performance, the proposed 19-layer CNN appears feasible to integrate into everyday clinical workflows. Because the network is relatively small, it should be able to run on standard clinical hardware, which would make it a practical tool for oral cancer screening and diagnosis. Integration with existing hospital systems would further streamline the path from image capture to decision support.

In conclusion, this model offers a glimpse of what AI-driven tools may bring to healthcare, driving advances in diagnosis and treatment methodologies.