Collecting and Preprocessing Coal Gangue Image Data

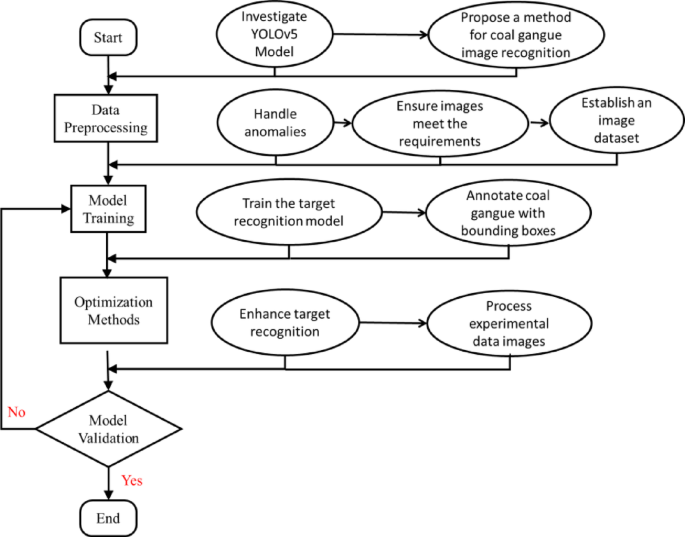

Introduction

Data quality and quantity largely determine how well a machine learning model performs in image recognition. This article describes how we collected and preprocessed coal gangue image data to train and test models that can reliably distinguish coal from gangue. The resulting dataset comprises 3,200 unique images of coal and gangue used for model training and testing.

Image Collection

The images were captured with an in-house camera so that the dataset reflects the real-world variation in the appearance of coal and gangue. As Fig. 3 shows, they cover a range of lighting and environmental conditions. This diversity is essential for a model that generalizes well beyond its training images.

Data Augmentation Techniques

Because the original dataset is limited in size, we enriched it with data augmentation: rotation, scaling, flipping, and color adjustments were applied systematically to increase its diversity. Augmentation compensates for the small original dataset and helps the model learn features that are robust to changes in orientation, scale, and illumination, which is crucial for accurate classification and detection.
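The four augmentation families named above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the pipeline used in this study: images are assumed to be `H × W × C` `uint8` arrays, rotation is restricted to 90° steps, scaling uses nearest-neighbour sampling, and the color adjustment is a simple brightness/contrast change. The text does not say whether augmentation happened before or after labeling, so the geometric functions also transform bounding boxes, given as hypothetical `(xmin, ymin, xmax, ymax)` pixel tuples; if augmentation precedes annotation, the box handling can be ignored.

```python
import numpy as np

# Assumption for this sketch: boxes are (xmin, ymin, xmax, ymax) in pixel coordinates.

def hflip(image, boxes):
    """Mirror the image left-to-right and mirror the boxes with it."""
    w = image.shape[1]
    flipped = image[:, ::-1].copy()
    new_boxes = [(w - x1, y0, w - x0, y1) for x0, y0, x1, y1 in boxes]
    return flipped, new_boxes


def rotate90(image, boxes):
    """Rotate 90 degrees counter-clockwise (np.rot90) and move the boxes accordingly."""
    w = image.shape[1]
    rotated = np.rot90(image).copy()
    # A point (x, y) in the original image maps to (y, w - x) in the rotated one.
    new_boxes = [(y0, w - x1, y1, w - x0) for x0, y0, x1, y1 in boxes]
    return rotated, new_boxes


def scale(image, boxes, factor):
    """Resize by `factor` with nearest-neighbour sampling and rescale the boxes."""
    h, w = image.shape[:2]
    nh, nw = max(1, round(h * factor)), max(1, round(w * factor))
    rows = np.minimum((np.arange(nh) / factor).astype(int), h - 1)
    cols = np.minimum((np.arange(nw) / factor).astype(int), w - 1)
    resized = image[rows[:, None], cols]  # picks the nearest source pixel for each output pixel
    sx, sy = nw / w, nh / h
    new_boxes = [(x0 * sx, y0 * sy, x1 * sx, y1 * sy) for x0, y0, x1, y1 in boxes]
    return resized, new_boxes


def adjust_color(image, brightness=1.0, contrast=1.0):
    """Scale contrast around the mean intensity, then brightness; boxes are unaffected."""
    img = image.astype(np.float32)
    mean = img.mean()
    img = (img - mean) * contrast + mean
    img = img * brightness
    return np.clip(img, 0, 255).astype(np.uint8)
```

In practice each transform would be applied with randomly drawn parameters per sample; libraries such as Albumentations offer bounding-box-aware versions of these operations.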

Annotation Process

Before training, every image had to be labeled with the location and category of each target it contains. We used the LabelImg tool for this purpose, which makes annotation efficient and straightforward, as shown in Fig. 4. The bounding boxes delineate the coal and gangue targets in each image and provide the ground truth from which the model learns.
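LabelImg can save annotations either as Pascal VOC XML or directly in the YOLO text format, and the text does not say which was used. If the labels were saved as VOC XML, they must be converted to YOLO's one-line-per-object format (`class x_center y_center width height`, all normalized by the image size). The following is a minimal standard-library sketch of that conversion; the class list `CLASSES`, its order, and the sample annotation values are hypothetical.

```python
import xml.etree.ElementTree as ET

CLASSES = ["coal", "gangue"]  # hypothetical class names; list order defines the YOLO class ids


def voc_to_yolo(xml_text, classes=CLASSES):
    """Convert one Pascal VOC annotation (as written by LabelImg) into YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        cls_id = classes.index(obj.find("name").text)
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(t).text) for t in ("xmin", "ymin", "xmax", "ymax"))
        # YOLO stores the box centre and size, normalized to [0, 1].
        xc = (xmin + xmax) / 2 / img_w
        yc = (ymin + ymax) / 2 / img_h
        bw = (xmax - xmin) / img_w
        bh = (ymax - ymin) / img_h
        lines.append(f"{cls_id} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}")
    return lines


SAMPLE = """<annotation>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>gangue</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>300</xmax><ymax>360</ymax></bndbox>
  </object>
</annotation>"""

print(voc_to_yolo(SAMPLE))  # ['1 0.312500 0.500000 0.312500 0.500000']
```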

Once annotation was complete, we organized the data into folders: training images in a ‘train’ directory, validation images in a separate ‘val’ directory, and the corresponding label files in a ‘labels’ folder, as illustrated in Fig. 5. This structure lets the training code locate each image and its labels directly.
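YOLOv5 finds each image's label file by replacing the last `images` directory component of the image path with `labels` and the image extension with `.txt`. One layout it accepts is therefore `images/train`, `images/val`, `labels/train`, and `labels/val`. The sketch below mirrors that convention and builds a matching dataset YAML. The root path, file names, and class names are hypothetical, and the exact folder names shown in Fig. 5 may differ.

```python
from pathlib import PurePosixPath


def label_path_for(image_path):
    """Map .../images/<split>/<name>.jpg to .../labels/<split>/<name>.txt (YOLOv5-style).

    Raises ValueError if the path has no 'images' component.
    """
    parts = list(PurePosixPath(image_path).parts)
    idx = len(parts) - 1 - parts[::-1].index("images")  # position of the last 'images'
    parts[idx] = "labels"
    return str(PurePosixPath(*parts).with_suffix(".txt"))


def dataset_yaml(root, class_names):
    """Build a minimal YOLOv5 dataset file; train/val paths are relative to `root`."""
    return "\n".join([
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        f"nc: {len(class_names)}",
        f"names: {list(class_names)}",
    ]) + "\n"


print(label_path_for("datasets/coal_gangue/images/train/img_0001.jpg"))
print(dataset_yaml("datasets/coal_gangue", ["coal", "gangue"]))
```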

Experimental Setup

The experiments were run on Windows 10 with an Intel Core i7-8700 CPU and an NVIDIA GeForce RTX 2070 GPU. The software environment consisted of Python 3.8.5, CUDA 10.2.89, cuDNN 7.6.5, and PyTorch 1.6.0, and all models were based on the YOLOv5 architecture.

We used transfer learning, initializing the model from pre-trained weights to speed up training. The model was trained with stochastic gradient descent (SGD) using a batch size of 16, an initial learning rate of 0.01 (1 × 10⁻²), a momentum of 0.934, and a weight decay of 0.0005. These parameters are summarized in Table 1.
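To make the role of each hyperparameter concrete, the following plain-Python sketch applies classic SGD with momentum and L2 weight decay, following the update rule documented for PyTorch's `torch.optim.SGD` with `dampening=0` and `nesterov=False`. The hyperparameter values come from the text; the scalar parameter and gradient are illustrative. YOLOv5's training script may configure the optimizer differently (for example Nesterov momentum, separate parameter groups, or a learning-rate schedule with warm-up).

```python
# Hyperparameters reported in the text (Table 1).
# batch_size is listed for completeness; it does not enter the update rule itself.
HYP = {"batch_size": 16, "lr0": 1e-2, "momentum": 0.934, "weight_decay": 5e-4}


def sgd_step(params, grads, velocity, lr=HYP["lr0"], momentum=HYP["momentum"],
             weight_decay=HYP["weight_decay"]):
    """One SGD-with-momentum step on lists of scalar parameters."""
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p       # L2 weight decay adds lambda * p to the gradient
        v = momentum * v + g           # momentum buffer accumulates past gradients
        new_params.append(p - lr * v)  # step against the smoothed gradient
        new_velocity.append(v)
    return new_params, new_velocity


params, velocity = [1.0], [0.0]
params, velocity = sgd_step(params, [0.5], velocity)
print(params, velocity)
```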

Evaluation Metrics and Performance Analysis

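Each trained model was evaluated on the ARDs-5-TE dataset with a set of standard metrics. On the test images, precision (P) and recall (R) were computed by comparing the detection results with the ground-truth labels from our annotations. Here TP (true positives) is the number of detections that match an annotated target of the same class, FP (false positives) is the number of detections with no matching target, and FN (false negatives) is the number of annotated targets the model missed; F1 is the harmonic mean of P and R: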

\[
P = \frac{TP}{TP + FP} \quad (1)
\]

\[
R = \frac{TP}{TP + FN} \quad (2)
\]

\[
F_{1} = 2 \times \frac{P \times R}{P + R} \quad (3)
\]
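Equations (1) to (3) translate directly into code. The counts in the example are made-up numbers for illustration, not results from this study; returning 0.0 when a denominator is zero is a design choice of this sketch.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from detection counts, per Eqs. (1)-(3)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


# Hypothetical counts: 90 correct detections, 10 false alarms, 30 missed targets.
print(precision_recall_f1(90, 10, 30))
```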

We also report the mean average precision (mAP), which summarizes performance across categories: the average precision (AP) of each class is the area under its precision-recall curve, and mAP is the mean of the per-class AP values.
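A minimal sketch of the AP and mAP computation follows. It assumes the detections for one class have already been matched to the ground truth, so that each is flagged as a true or false positive (typically via an IoU threshold, which the text does not specify). It uses all-point interpolation: precision is made monotonically non-increasing, then the area under the resulting step curve is summed. This is one common convention; YOLOv5's own evaluation code may interpolate differently.

```python
def average_precision(detections, num_gt):
    """AP for one class; detections is a list of (confidence, is_true_positive)."""
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Sentinel points at recall 0 and recall 1.
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    # Precision envelope: each point takes the maximum precision to its right.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; segments where recall does not change contribute zero.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_ap(per_class_ap):
    """mAP: the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


# Hypothetical class with 2 ground-truth objects and 3 ranked detections.
print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))
```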

Computational Complexity

Understanding the computational overhead of our models is also important, so we measured the number of parameters (Par, in Mb) and the floating-point operations (FLOPs, in G). A larger Par means a larger model that needs more memory and generally longer training, while FLOPs quantify the computation required for one forward pass during inference. We aimed to keep FLOPs low to improve operational efficiency, and we report inference time in milliseconds per image on the RTX 2070.
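For a single convolution layer, Par and FLOPs follow from simple counting, and inference time can be measured with a wall-clock timer. The sketch below illustrates both. The layer sizes are hypothetical, the code counts one multiply-accumulate (MAC) as two FLOPs (conventions differ between profiling tools), and it is not the tool used for the reported numbers. When timing a model on a GPU with PyTorch, `torch.cuda.synchronize()` should be called before reading the clock, because CUDA kernels run asynchronously.

```python
import time


def conv2d_cost(c_in, c_out, k, out_h, out_w, bias=True):
    """Parameter count and FLOPs for a k x k convolution producing an out_h x out_w map."""
    params = c_out * (c_in * k * k + (1 if bias else 0))
    macs = c_out * out_h * out_w * c_in * k * k  # one MAC per kernel weight per output pixel
    flops = 2 * macs                              # counting a MAC as one multiply plus one add
    return params, flops


def mean_latency_ms(fn, inputs, warmup=3):
    """Average wall-clock time per call of fn, in milliseconds, after a few warm-up calls."""
    for x in inputs[:warmup]:
        fn(x)
    start = time.perf_counter()
    for x in inputs:
        fn(x)
    return (time.perf_counter() - start) * 1000 / len(inputs)


params, flops = conv2d_cost(c_in=3, c_out=16, k=3, out_h=320, out_w=320)
print(params, flops / 1e9, "GFLOPs")
print(mean_latency_ms(lambda x: sum(range(x)), [10_000] * 20), "ms per call")
```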

Experimental Results

Four models were trained and compared on this dataset: the YOLOv5 basic model, YOLOv5-MCA, YOLOv5-CARAFE, and the optimal YOLOv5 model. For each model, 2,472 images were used for training and 309 for validation, and 310 images were used for evaluation.
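The text gives the subset sizes (2,472 training, 309 validation, and 310 evaluation images) but not how images were assigned to them. A seeded random split is one reproducible way to do this; the function below is a hypothetical sketch, and the file names in the example are synthetic.

```python
import random


def split_dataset(items, n_train, n_val, n_test, seed=0):
    """Shuffle reproducibly and cut into disjoint train/val/test lists of the given sizes."""
    if n_train + n_val + n_test > len(items):
        raise ValueError("requested split is larger than the dataset")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # same seed gives the same split every run
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:n_train + n_val + n_test]
    return train, val, test


files = [f"img_{i:04d}.jpg" for i in range(2472 + 309 + 310)]
train, val, test = split_dataset(files, 2472, 309, 310)
print(len(train), len(val), len(test))  # 2472 309 310
```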

The validation results show that the models can identify coal and gangue of various types and sizes against different backgrounds. In the examples shown in Fig. 6, all targets were detected, demonstrating the model's ability to distinguish multiple types and sizes of coal and gangue.

Results Analysis

We analyzed precision (P), recall (R), and mAP for the two classes, coal and rock, as well as for all classes combined. The results are summarized in Table 2 and illustrated in Fig. 7. The YOLOv5 optimal model improved precision, recall, and mAP in every category.

For instance, the optimal model's P value increased from 0.963 to 0.966, a relative improvement of 0.31%. Similarly, the R value rose from 0.954 to 0.959, a relative increase of 0.52%, indicating that fewer targets were missed. The mAP value rose from 0.975 to 0.977, a relative increase of 0.21%. These small but consistent gains across all three metrics support the improved recognition of coal and gangue.
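The relative improvements above are computed as (new - old) / old. The short check below reproduces them from the reported values. For mAP the relative gain is about 0.205%, which rounds to 0.21%; the absolute gain is 0.002, or 0.2 percentage points.

```python
def relative_gain_pct(old, new):
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100


# Values reported in the text (before -> after).
reported = {"P": (0.963, 0.966), "R": (0.954, 0.959), "mAP": (0.975, 0.977)}
for name, (old, new) in reported.items():
    print(f"{name}: {relative_gain_pct(old, new):.3f}%")
```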

Comparative Analysis

Fig. 9 compares the recognition results of the four model variants (YOLOv5 basic, YOLOv5-MCA, YOLOv5-CARAFE, and YOLOv5 optimal), including the confidence levels of their detections. Of the four, the optimal structure shows the strongest recognition ability, supporting its practical use in real-world applications.

Systematic image collection, preprocessing, and rigorous evaluation form the backbone of our approach to coal gangue image recognition and classification, and provide a basis for further work in this field.