A New Dawn in Robotics: The Power of Vision-Based Control

In a quiet office at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), a soft robotic hand curls its fingers around a small object. The mechanical design is intriguing, but the more striking feature is what the hand lacks: an array of embedded sensors. Instead, a single camera observes the hand’s movements, and that visual data is used to control them. The result points to a shift in how robots can engage with their environments.

Neural Jacobian Fields: A Game-Changer

The cornerstone of this advancement is an approach known as Neural Jacobian Fields (NJF). Developed by researchers at CSAIL, it lets a robot learn how its own body responds to control commands through observation alone, without pre-programmed models or complex sensor systems. According to Sizhe Lester Li, a PhD student and lead researcher on this project, the technology signifies a fundamental shift from programming robots to teaching them. Instead of meticulously engineering every robotic function, we might soon simply show robots how to act and let them work out the mechanics of their movements autonomously.

The work rests on a reframing of the problem: the major hurdle to affordable, adaptable robotics is not the hardware itself but the way we control it. Traditional robotics often leans on rigid structures and comprehensive sensor arrays, which are chosen because they make the robot easier to model. In contrast, NJF lets robots construct their own internal models from what they observe, regardless of their shape or material.

Learning Through Action: The Mechanism Behind NJF

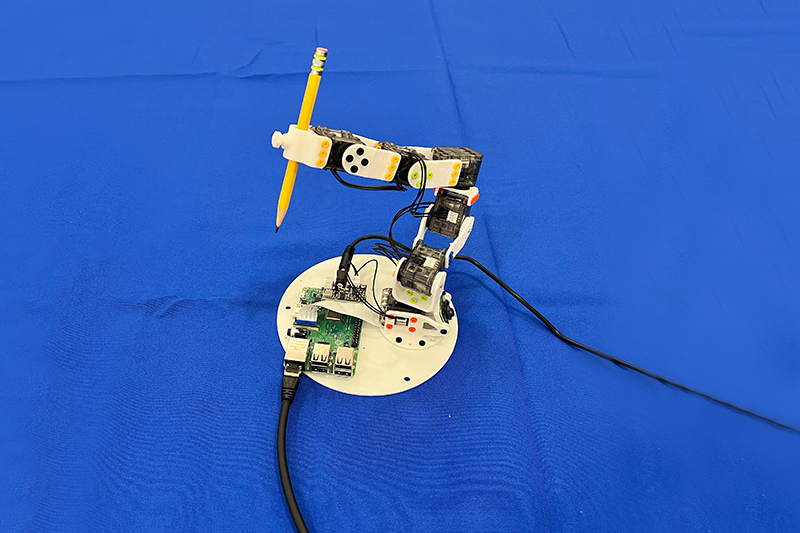

What does this learning process look like? It mirrors human development. Think of how you learn to control your fingers: you wiggle them, observe the results, and adapt based on what you see. NJF works in a similar fashion. The robot executes random movements while its motion is captured on camera, and from that footage the system learns how different parts of the robot respond to each control command. The method has been demonstrated across a range of robotic platforms, from pneumatic soft robotic hands to rigid arms.
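To make the "wiggle and watch" idea concrete, here is a minimal sketch in Python. It is not the researchers' method: NJF trains a neural network on video, whereas this toy assumes a single tracked point whose motion depends linearly on the command change, and fits that relationship with ordinary least squares. The function name, the three-actuator setup, and the numbers in `true_j` are all hypothetical.

```python
import numpy as np

def estimate_jacobian(commands, displacements):
    """Least-squares fit of J such that displacement is approximately J @ command.

    commands:      (n_samples, n_actuators) random command changes
    displacements: (n_samples, n_dims) observed motion of one tracked point
    returns:       (n_dims, n_actuators) estimated Jacobian
    """
    # Solve commands @ J.T = displacements for J.T in the least-squares sense.
    jt, *_ = np.linalg.lstsq(commands, displacements, rcond=None)
    return jt.T

rng = np.random.default_rng(0)

# Hypothetical ground truth: one 2D image point driven by three actuators.
true_j = np.array([[0.5, -0.2, 0.0],
                   [0.1,  0.3, 0.4]])

# "Wiggle": issue random command changes. "Watch": record noisy point motion.
commands = rng.uniform(-1.0, 1.0, size=(200, 3))
noise = rng.normal(0.0, 0.01, size=(200, 2))
displacements = commands @ true_j.T + noise

est_j = estimate_jacobian(commands, displacements)
```

With enough random motions, `est_j` lands close to `true_j`. A real soft robot is nonlinear and has many points to track, which is the gap the learned field is meant to fill.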

Unleashing Creativity in Design

One of the most thrilling aspects of NJF is its potential to free robotic design from conventional constraints. Without the need for sensors or intricate designs that ensure accurate control, engineers can explore innovative forms and functions. This could lead to a new generation of soft, bio-inspired robots capable of agile movements in unpredictable environments. The NJF framework encourages designers to think beyond traditional specifications, promoting a creative exploration of robotics without the burden of precise modeling.

Real-Time Feedback in Motion

At the heart of NJF lies a sophisticated neural network designed to capture two critical aspects of a robot’s functionality: its three-dimensional geometry and how it responds to control signals. Central to this system is the concept of a Jacobian field, which predicts how various points on the robot will move according to motor commands.
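As a rough illustration of what a Jacobian field provides, the sketch below assigns each 3D point on a robot a small matrix that maps a change in motor commands to that point's displacement. The field here is a hand-written toy, not a trained network: the name `toy_jacobian_field`, the two-actuator layout, and the "slide" and "bend" behaviors are assumptions made up for the example.

```python
import numpy as np

def toy_jacobian_field(points):
    """Hypothetical stand-in for a learned field.

    points:  (n_points, 3) positions on the robot
    returns: (n_points, 3, 2) one 3x2 Jacobian per point, for two actuators
    """
    n = points.shape[0]
    field = np.zeros((n, 3, 2))
    field[:, 0, 0] = 1.0           # actuator 0 slides every point along x
    field[:, 2, 1] = points[:, 0]  # actuator 1 lifts points in z, more so far from the base
    return field

def predict_motion(points, command_change):
    """Predicted displacement of each point for a small command change."""
    field = toy_jacobian_field(points)
    # Per-point matrix-vector product: (n, 3, 2) times (2,) gives (n, 3).
    return np.einsum("pij,j->pi", field, command_change)

points = np.array([[0.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [2.0, 0.0, 0.0]])
motion = predict_motion(points, np.array([0.1, 0.5]))
```

In this toy, the point at the base only slides, while the point farthest from the base also lifts the most. The key property is that different points respond differently to the same command.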

During training, the robot performs a series of random motions while cameras record the outcomes. This process requires no human supervision or prior knowledge of the robot’s structure. Once trained, the robot can be controlled in real time with just a single camera, running at around 12 Hertz for continuous observation and action planning.
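One common way to use a Jacobian for closed-loop control is to invert it: given where a tracked point is and where it should be, solve for the command change that closes part of the gap, then repeat with the next observation. The sketch below shows that loop under strong assumptions, namely a single tracked 2D point, a constant and exactly known Jacobian, and a simulated robot in place of a camera. It is not presented as the controller used in the NJF work, and every name and number in it apart from the 12 Hz rate is hypothetical.

```python
import numpy as np

CONTROL_RATE_HZ = 12  # rate reported in the article; not enforced in this offline simulation

def control_step(jacobian, position, target, gain=0.5):
    """Return a command change that moves the tracked point toward the target."""
    error = target - position
    # The pseudo-inverse gives the smallest command change that yields gain * error.
    return gain * np.linalg.pinv(jacobian) @ error

# Hypothetical setup: one tracked 2D image point driven by three actuators.
jacobian = np.array([[0.5, -0.2, 0.0],
                     [0.1,  0.3, 0.4]])
position = np.array([0.0, 0.0])
target = np.array([1.0, -0.5])

for _ in range(30):  # 30 steps corresponds to 2.5 s at 12 Hz
    command_change = control_step(jacobian, position, target)
    # Simulated robot: a real system would act, then read the next camera frame.
    position = position + jacobian @ command_change

final_error = np.linalg.norm(target - position)
```

Because the simulated robot follows the same Jacobian the controller uses, the error halves at every step. On real hardware the model is only approximate, which is why the loop keeps re-observing instead of computing a single command up front.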

Expanding Applications Beyond the Laboratory

The implications of NJF extend far beyond the confines of the laboratory. With further development, robots using this technology could execute complex tasks in areas like agriculture and construction, where precision and adaptability are crucial but often challenging with traditional robotics. The NJF framework empowers robots to navigate changing landscapes without requiring an extensive network of sensors, drastically lowering the costs and complexities involved in deploying robotic solutions in real-world scenarios.

The Future of Robotics: Accessible and Adaptive

Despite its groundbreaking capabilities, the current iteration of NJF faces limitations. Training currently requires multiple cameras, but the researchers are already envisioning a more accessible future. Imagine hobbyists recording a robot’s movements with a basic smartphone, much like capturing a video of a rental car before driving. This democratization of robotics technology could open doors for amateur innovators across various fields.

NJF does not yet generalize across different robot types, and it does not incorporate tactile feedback. Ongoing research aims to address both limitations and to let robots work efficiently in more intricate environments.

Embracing an Era of Self-Awareness in Robotics

As the research team delves deeper into this subject, they highlight an exciting prospect: NJF could offer robots a sense of “embodied self-awareness,” akin to what humans experience in understanding their own movements. This quality not only simplifies the learning process for robots but also lays the groundwork for the development of flexible and intuitive robotic systems.

With contributions from specialists in both computer vision and robotics, this research marks a pivotal moment. Support from notable organizations further underscores its potential impact.

By moving away from rigid programming and traditional modeling approaches, NJF encapsulates a broader trend in robotics: a future where robots learn through observation, interaction, and, ultimately, a more natural understanding of their environments.