EgoVision: A Robust YOLO-ViT Hybrid for Egocentric Object Recognition

Understanding Egocentric Object Recognition

Egocentric object recognition identifies objects seen from a first-person perspective, typically captured by wearable cameras or smartphones. This technology is crucial for applications such as augmented reality, assistive technologies, and human-computer interaction.

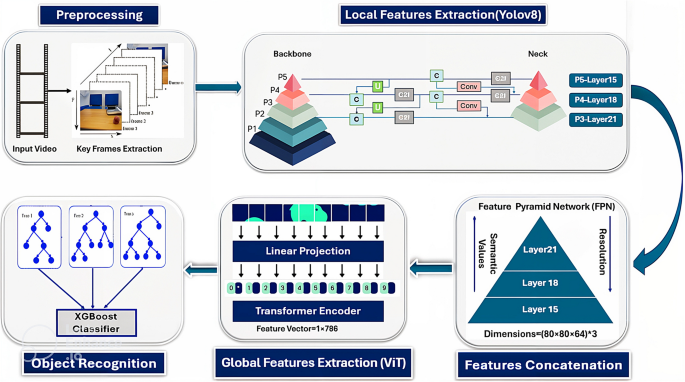

EgoVision combines two model families: YOLO (You Only Look Once) and the Vision Transformer (ViT). YOLO performs fast object detection by localizing objects within a scene, while ViT applies self-attention across image patches to capture global contextual relationships. Together they address challenges specific to egocentric footage, such as occlusion and constantly shifting viewpoints.
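
To make the "global context" idea concrete, here is a minimal sketch of the first steps of a ViT. The image is cut into non-overlapping patches, and self-attention lets every patch token weigh every other patch. It assumes a tiny grayscale image stored as a list of rows. It also omits the learned linear projections, position embeddings, and multiple heads of a real ViT (the standard ViT-B/16 uses 16×16 patches). `image_to_patches` and `self_attention` are hypothetical helper names, not EgoVision code.

```python
import math

def image_to_patches(image, patch_size):
    """Split a 2-D grayscale image (list of rows) into flattened,
    non-overlapping square patches, in row-major patch order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([image[r][c]
                            for r in range(top, top + patch_size)
                            for c in range(left, left + patch_size)])
    return patches

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention.
    Simplification: queries, keys, and values are the raw tokens
    (identity projections instead of learned weight matrices)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs, weights = [], []
    for query in tokens:
        # Every token is scored against every token: global receptive field.
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        attn = softmax(scores)
        weights.append(attn)
        outputs.append([sum(a * value[d] for a, value in zip(attn, tokens))
                        for d in range(dim)])
    return outputs, weights
```

Each row of the returned attention weights spans all patches. This is what lets a ViT relate an object to distant parts of the frame, whereas a single convolution only sees a local neighborhood.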

Core Components of EgoVision

Central to EgoVision’s architecture are three main components: key-frame extraction, feature extraction, and classification.

- Key-Frame Extraction: Selecting key frames from egocentric video streams lets the model retain only contextually significant moments, reducing redundancy and noise from irrelevant frames.

- Feature Extraction: YOLOv8 captures local object features while ViT models global spatial relationships. Combining the two improves recognition accuracy under motion blur and occlusion.

- Classification: A Random Forest classifier evaluates fused features from both YOLO and ViT for final object categorization.

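Together, these components keep the system robust and efficient in dynamic environments. Key-frame extraction limits how much data is processed, the two feature extractors supply complementary cues, and the classifier makes the final decision.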
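
The text does not specify how key frames are chosen. One common heuristic is frame differencing, which keeps a frame only when it differs enough from the last kept key frame. The sketch below assumes grayscale frames flattened into lists of pixel intensities. `select_key_frames` and its `threshold` default are hypothetical and would need tuning on real footage.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute per-pixel difference between two equally sized
    grayscale frames, each given as a flat list of intensities."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)

def select_key_frames(frames, threshold=10.0):
    """Return indices of key frames: always the first frame, then every frame
    whose difference from the most recently kept key frame exceeds
    `threshold` (a hypothetical default, not an EgoVision setting)."""
    if not frames:
        return []
    keys = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[keys[-1]]) > threshold:
            keys.append(i)
    return keys
```

The sketch compares each frame against the last kept key frame rather than the immediately preceding frame. As a result, slow, gradual changes still accumulate until they cross the threshold.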

The Step-by-Step Process of EgoVision

EgoVision employs a structured process to achieve its object recognition goals:

- Key-Frame Selection: The system processes continuous video streams to select relevant frames that include human-object interactions. This reduces computational load while maintaining essential visual information.

- Data Annotation: Each selected frame is manually annotated with tools such as the Computer Vision Annotation Tool (CVAT) to produce high-quality training data.

- Feature Extraction: The YOLO model captures local features from detected objects, while ViT focuses on contextual information.

- Fusing Features: A Feature Pyramid Network (FPN) aligns the outputs from both models, creating a unified feature set.

- Classification: The fused feature set is input into a Random Forest classifier, which categorizes the objects based on learned attributes.

Each stage feeds the next. Key frames limit the input, annotations supply training labels, and the fused features give the classifier both local and global cues.
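
The fusion step can be illustrated with a deliberately simple stand-in. The text describes an FPN aligning the two outputs. The sketch below instead uses plain late fusion: it mean-pools per-object YOLO feature vectors, L2-normalizes each stream, and concatenates the results. Feature vectors are assumed to be plain lists of floats, and the function names are hypothetical.

```python
import math

def mean_pool(vectors):
    """Average equal-length vectors, e.g. one local YOLO feature per detected object."""
    if not vectors:
        raise ValueError("need at least one vector to pool")
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def l2_normalize(vector, eps=1e-12):
    """Scale a vector to unit length; an all-zero vector stays all zeros."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / max(norm, eps) for v in vector]

def fuse_frame(local_features, global_feature):
    """Late fusion (a simple stand-in for FPN-based fusion): pool the
    per-object local features, normalize each stream, then concatenate."""
    local = l2_normalize(mean_pool(local_features))
    glob = l2_normalize(global_feature)
    return local + glob
```

Normalizing each stream before concatenation keeps one backbone's larger activation magnitudes from dominating the fused vector.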

Practical Examples of EgoVision in Action

EgoVision is well suited to wearable applications, especially hands-on tasks such as cooking or assembly. In a cooking application, for instance, EgoVision could identify kitchen utensils and ingredients seen from the user’s point of view. This would enable smart recipe suggestions or guidance for users with limited mobility.

Compared with systems based solely on YOLO or ViT, EgoVision’s hybrid architecture is better equipped for the complex interactions users encounter in daily life, which broadens its practical applicability.

Common Pitfalls and Solutions

Implementing EgoVision involves several common pitfalls. One is overfitting caused by small datasets, which produces models that perform well on training data but fail in real-world scenarios.

To mitigate this, data augmentation can increase the dataset’s variability. Small distortions and transformations, such as flips, brightness changes, and synthetic occlusions, help the model generalize across different lighting and occlusion conditions.
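
Here is a minimal augmentation sketch, assuming 8-bit grayscale frames stored as lists of rows. It applies a horizontal flip, a clamped brightness shift, and a cutout-style random erase that paints a rectangle to imitate occlusion. The parameter ranges are illustrative assumptions, and the helper names are hypothetical. If frames carry bounding-box labels, the boxes must be transformed along with the pixels (a flip mirrors their x-coordinates). This sketch handles pixels only.

```python
import random

def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def adjust_brightness(image, delta):
    """Add `delta` to every pixel, clamping to the 8-bit range [0, 255]."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]

def random_erase(image, rng, max_fraction=0.5, fill=0):
    """Cutout-style synthetic occlusion: overwrite one random rectangle,
    at most `max_fraction` of each side, with the value `fill`."""
    height, width = len(image), len(image[0])
    erase_h = rng.randint(1, max(1, int(height * max_fraction)))
    erase_w = rng.randint(1, max(1, int(width * max_fraction)))
    top = rng.randint(0, height - erase_h)
    left = rng.randint(0, width - erase_w)
    out = [row[:] for row in image]  # copy so the input is not modified
    for r in range(top, top + erase_h):
        for c in range(left, left + erase_w):
            out[r][c] = fill
    return out

def augment(image, rng):
    """Apply a random combination of the transforms above to one frame."""
    out = [row[:] for row in image]
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    out = adjust_brightness(out, rng.randint(-30, 30))  # illustrative range
    if rng.random() < 0.5:
        out = random_erase(out, rng)
    return out
```

Passing an explicit `random.Random(seed)` makes each augmented copy reproducible, which helps when debugging a training run.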

Another challenge is the computational overhead of running two distinct networks and fusing their features. Using an efficient detector such as YOLOv8 and a lightweight classifier such as Random Forest helps keep inference near real time. The ViT branch still adds cost compared with a detector-only pipeline.
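
The overhead question is mostly arithmetic against a per-frame budget of 1000 / fps milliseconds (about 33.3 ms at 30 fps). The stage timings below are hypothetical placeholders, not EgoVision measurements. They show how running the YOLO and ViT branches one after the other versus concurrently changes the total.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

def pipeline_latency_ms(yolo_ms, vit_ms, fusion_ms, classifier_ms, parallel=False):
    """Per-frame latency. The two backbones either run sequentially (their times
    add) or concurrently (the slower branch dominates); fusion and
    classification then run after both branches finish."""
    backbone_ms = max(yolo_ms, vit_ms) if parallel else yolo_ms + vit_ms
    return backbone_ms + fusion_ms + classifier_ms

def fits_real_time(latency_ms, fps):
    """True if one frame can be processed within the per-frame budget."""
    return latency_ms <= frame_budget_ms(fps)

# Hypothetical stage timings in milliseconds (placeholders, not measurements).
sequential = pipeline_latency_ms(8.0, 15.0, 1.0, 0.5)
concurrent = pipeline_latency_ms(8.0, 15.0, 1.0, 0.5, parallel=True)
print(sequential, fits_real_time(sequential, 30), fits_real_time(sequential, 60))  # 24.5 True False
print(concurrent, fits_real_time(concurrent, 60))  # 16.5 True
```

Key-frame selection relaxes the budget further. If the heavy stages run only on key frames, the average cost per incoming frame drops in proportion to how many frames are skipped.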

Tools and Frameworks in Practice

EgoVision utilizes several crucial tools and frameworks:

- YOLOv8: Offers rapid local detection through a multi-scale convolutional backbone, allowing for efficient feature extraction in real-time settings.

- Vision Transformer (ViT): Models global context and spatial relationships, essential for disambiguating objects under dynamic conditions.

- Random Forest Classifier: Handles high-dimensional feature sets well, which makes it suitable for the fused features derived from egocentric data.

- Data Annotation Tools: Platforms like CVAT ensure high-quality annotations necessary for training robust models.

Together, these tools cover detection, context modeling, classification, and data labeling for egocentric object recognition.
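
For illustration, here is a stripped-down random-forest-style classifier over fused feature vectors. Each tree is trained on a bootstrap sample using a random subset of features, and predictions are combined by majority vote. To keep it short, each "tree" is a single Gini-based split (a decision stump), whereas a real Random Forest grows deeper trees. In practice one would use a library implementation such as scikit-learn's `RandomForestClassifier`. The function names here are hypothetical.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def majority(labels):
    """Most common label (ties resolved by first occurrence)."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(X, y, feature_ids):
    """Find the (feature, threshold) split among `feature_ids` with the lowest
    weighted Gini impurity; return (feature, threshold, left_label, right_label)."""
    n = len(y)
    best = None
    for f in feature_ids:
        for threshold in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= threshold]
            right = [label for row, label in zip(X, y) if row[f] > threshold]
            if not left or not right:
                continue
            impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or impurity < best[0]:
                best = (impurity, f, threshold, majority(left), majority(right))
    if best is None:  # no valid split: predict the majority label everywhere
        label = majority(y)
        return (feature_ids[0], float("inf"), label, label)
    return best[1:]

def fit_forest(X, y, n_trees=25, max_features=None, seed=0):
    """Train `n_trees` stumps, each on a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n_samples, n_features = len(X), len(X[0])
    k = max_features or max(1, int(n_features ** 0.5))
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n_samples) for _ in range(n_samples)]  # bootstrap
        feats = rng.sample(range(n_features), k)  # random feature subset
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest

def predict(forest, row):
    """Majority vote over all stumps."""
    votes = [left if row[f] <= t else right for f, t, left, right in forest]
    return majority(votes)
```

The bootstrap samples and random feature subsets make individual stumps disagree. Voting over many of them is what reduces variance compared with a single tree.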

Exploring Variations and Alternatives

There are several variations and alternatives to EgoVision, depending on available resources and project requirements. If real-time processing is not crucial, a ViT-only pipeline may offer better contextual understanding at the cost of speed. Conversely, a pure YOLO implementation may suffice for applications that prioritize speed over contextual understanding, such as real-time tracking in sports.

The choice among these approaches is often dictated by the specific use case, desired accuracy, and available computational resources.

FAQ

What benefits does EgoVision provide over traditional models?

EgoVision combines local detection capabilities with global context awareness, which improves recognition accuracy in dynamic environments, addressing challenges like occlusion and varied perspectives.

Is there a trade-off between speed and accuracy in EgoVision?

Although EgoVision is designed for real-time efficiency, running both YOLO and ViT adds some latency compared with pure YOLO systems. In exchange, the hybrid gains accuracy and robustness in complex scenes.

What industries can benefit from EgoVision technology?

Industries such as healthcare, automotive (for driver assistance), and consumer electronics (like wearable devices) stand to gain significantly from the robust capabilities of EgoVision in interpreting egocentric data.

How does EgoVision handle occlusion?

EgoVision’s hybrid model uses YOLO for local object detection and ViT for global context, enabling better discernment of objects even when partially obscured.