Experiment Setup: Evaluating Weakly Supervised Semantic Segmentation

We evaluated the proposed semantic segmentation method on two well-established datasets, Potsdam and Vaihingen. This section describes the datasets, the evaluation metrics, and the experimental configuration.

Datasets

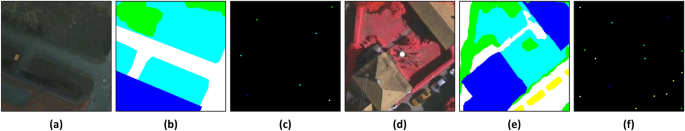

Potsdam Dataset

The Potsdam dataset is part of the ISPRS 2D Semantic Labeling Contest and comprises 38 high-resolution aerial image tiles of 6000 × 6000 pixels each. Each tile provides four spectral bands, near-infrared (NIR), red (R), green (G), and blue (B), along with a Digital Surface Model (DSM) and a normalized DSM. In this study, we used only the RGB bands for both training and testing. The dataset is divided into 24 training images and 14 test images and is annotated with six semantic classes: five foreground categories and one background class.

Vaihingen Dataset

Like the Potsdam dataset, the Vaihingen dataset is provided by the ISPRS 2D Semantic Labeling Contest. It contains 33 aerial image tiles, each with three spectral bands (red, green, and near-infrared) at a spatial resolution of 9 cm. We used 16 labeled tiles for training and the remaining 17 tiles for testing. The dataset has the same six-class structure as the Potsdam dataset.

Evaluation Metrics

We evaluated the proposed method with four widely used metrics: Overall Accuracy (OA), Intersection over Union (IoU), F1-score, and Boundary F1-score (BF1). Each assesses a different aspect of segmentation performance.

Overall Accuracy (OA)

Overall Accuracy measures the proportion of correctly classified pixels across the entire dataset, defined mathematically as:

\[
\text{OA} = \frac{TP + TN}{Num}
\]

where \(TP\) (True Positives) and \(TN\) (True Negatives) represent correctly classified positive and negative pixels, respectively, and \(Num\) is the total pixel count.
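As a minimal sketch of how OA can be computed in the multi-class case, the snippet below builds a confusion matrix with NumPy and divides the number of correctly classified pixels (its trace) by the total pixel count. The function names `confusion_matrix` and `overall_accuracy` and the toy label maps are illustrative assumptions, not the implementation used in our experiments.

```python
import numpy as np

def confusion_matrix(gt, pred, num_classes):
    """Rows index ground-truth classes, columns index predicted classes."""
    gt = np.asarray(gt).ravel()
    pred = np.asarray(pred).ravel()
    # Encode each (gt, pred) pair as a single integer and count occurrences.
    idx = num_classes * gt + pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def overall_accuracy(cm):
    """Correctly classified pixels (diagonal) divided by all pixels."""
    return np.trace(cm) / cm.sum()

# Toy 2 x 3 label maps with three classes (illustrative values only).
gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])

cm = confusion_matrix(gt, pred, num_classes=3)
oa = overall_accuracy(cm)
```

In this toy example four of the six pixels are labeled correctly, so `oa` evaluates to 4/6.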

Intersection over Union (IoU)

IoU, or the Jaccard Index, evaluates the overlap between predicted and ground truth regions, providing a class-specific accuracy measure defined as:

\[
\text{IoU} = \frac{TP}{TP + FP + FN}
\]

where \(FP\) (False Positives) denotes pixels wrongly assigned to the class and \(FN\) (False Negatives) denotes pixels of the class that were missed.
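The per-class computation can be sketched as follows, counting TP, FP, and FN for each class directly from the label maps. The helper name `per_class_iou` and the toy data are hypothetical, and averaging over classes (mean IoU) is a common convention that we assume here for illustration.

```python
import numpy as np

def per_class_iou(gt, pred, num_classes):
    """IoU for each class; NaN when a class is absent from both maps."""
    gt = np.asarray(gt)
    pred = np.asarray(pred)
    ious = []
    for c in range(num_classes):
        tp = np.sum((pred == c) & (gt == c))  # predicted c, truly c
        fp = np.sum((pred == c) & (gt != c))  # predicted c, truly another class
        fn = np.sum((pred != c) & (gt == c))  # truly c, predicted another class
        denom = tp + fp + fn
        ious.append(tp / denom if denom > 0 else float("nan"))
    return np.array(ious)

# Toy 2 x 3 label maps with three classes (illustrative values only).
gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])

ious = per_class_iou(gt, pred, num_classes=3)
mean_iou = np.nanmean(ious)  # ignores classes that never occur
```

For these toy maps the per-class IoU values are 1/3, 2/3, and 1/2, giving a mean IoU of 0.5.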

F1-Score

The F1-score is the harmonic mean of precision and recall:

\[
\text{F1} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
\]

Precision refers to the ratio of true positives to all predicted positives, while Recall reflects the ratio of true positives to all actual positives.
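Substituting \(\text{Precision} = TP / (TP + FP)\) and \(\text{Recall} = TP / (TP + FN)\) expresses F1 directly in terms of pixel counts and makes its relation to IoU explicit. This short derivation is added for illustration and follows from the definitions above:

\[
\text{F1} = \frac{2\,TP}{2\,TP + FP + FN} = \frac{2\,\text{IoU}}{1 + \text{IoU}}
\]

For example, a class with an IoU of 0.5 has an F1-score of 2/3. Because this mapping is monotonic, F1 and IoU rank the results for a single class identically, although their averages over classes can differ.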

Boundary F1-Score (BF1)

The BF1 evaluates the accuracy of boundary localization by comparing predicted and ground truth contours:

\[
\text{BF1} = \frac{2 \times \text{Boundary Precision} \times \text{Boundary Recall}}{\text{Boundary Precision} + \text{Boundary Recall}}
\]

Here, Boundary Precision and Boundary Recall are based on boundary pixels from both predicted and ground truth masks with a tolerance of 3 pixels to account for minor misalignments.
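A minimal sketch of this computation for one binary class mask is given below. It rests on several assumptions not specified above: boundary pixels are mask pixels with a 4-neighbor outside the mask, the tolerance is measured as Euclidean distance, and an empty boundary yields a score of 0. The function names are hypothetical, and the brute-force distance computation is only suitable for small masks.

```python
import numpy as np

def boundary_pixels(mask):
    """Coordinates (row, col) of mask pixels with a 4-neighbor outside the mask."""
    mask = np.asarray(mask, dtype=bool)
    # Edge padding keeps the image border itself from counting as a boundary.
    padded = np.pad(mask, 1, mode="edge")
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1] &
                padded[1:-1, :-2] & padded[1:-1, 2:])
    return np.argwhere(mask & ~interior)

def matched_fraction(src, dst, tol):
    """Fraction of points in src within tol pixels of some point in dst."""
    if len(src) == 0 or len(dst) == 0:
        return 0.0  # assumed convention for empty boundaries
    # Pairwise Euclidean distances between the two point sets.
    d = np.linalg.norm(src[:, None, :] - dst[None, :, :], axis=-1)
    return float(np.mean(d.min(axis=1) <= tol))

def boundary_f1(gt_mask, pred_mask, tol=3):
    gt_b = boundary_pixels(gt_mask)
    pred_b = boundary_pixels(pred_mask)
    precision = matched_fraction(pred_b, gt_b, tol)  # predicted boundary near GT
    recall = matched_fraction(gt_b, pred_b, tol)     # GT boundary near prediction
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Toy example: a 10 x 10 square and the same square shifted by 2 pixels.
gt_mask = np.zeros((20, 20), dtype=bool)
gt_mask[5:15, 5:15] = True
pred_mask = np.zeros((20, 20), dtype=bool)
pred_mask[5:15, 7:17] = True

bf1_tol3 = boundary_f1(gt_mask, pred_mask, tol=3)
bf1_tol1 = boundary_f1(gt_mask, pred_mask, tol=1)
```

With a 2-pixel shift, every boundary pixel has a counterpart within the 3-pixel tolerance, so `bf1_tol3` is 1.0, whereas the stricter 1-pixel tolerance penalizes the misalignment.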

Together, these metrics give a complementary view of segmentation performance. OA reflects overall classification accuracy, IoU measures spatial overlap, the F1-score captures the balance between precision and recall, and BF1 focuses on the quality of boundary alignment.

Experimental Setting

The experiments were implemented in the open-source PyTorch framework and run on a server equipped with an NVIDIA RTX A5000 GPU.

Data Preprocessing

Data preprocessing differed between the two datasets. Potsdam images were cropped into non-overlapping patches of 256 × 256 pixels using a sliding window, whereas Vaihingen images were cropped with a 50% overlap between adjacent patches to increase the diversity and volume of the data. Data augmentation techniques such as random flipping and rotation were employed during testing, along with mean normalization and standardization of input images.
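The two cropping schemes can be sketched as a single sliding-window routine in which the stride equals the patch size for Potsdam and half the patch size for Vaihingen. The function names are hypothetical, and dropping the trailing remainder when the tile size is not a multiple of the stride is an assumption of this sketch; the handling of such remainders is not specified above.

```python
def window_origins(length, patch=256, stride=256):
    """Start offsets of full windows along one axis; a trailing remainder is dropped."""
    return list(range(0, length - patch + 1, stride))

def crop_boxes(height, width, patch=256, overlap=0.0):
    """Return (top, left, bottom, right) boxes for a sliding-window crop."""
    stride = int(patch * (1.0 - overlap))
    return [(top, left, top + patch, left + patch)
            for top in window_origins(height, patch, stride)
            for left in window_origins(width, patch, stride)]

# Potsdam-style cropping: 6000 x 6000 tile, no overlap (stride 256).
potsdam_boxes = crop_boxes(6000, 6000, patch=256, overlap=0.0)

# Vaihingen-style cropping with 50% overlap (stride 128) on a toy 512 x 512 tile.
toy_boxes = crop_boxes(512, 512, patch=256, overlap=0.5)
```

Under these assumptions a 6000 × 6000 tile yields 23 × 23 = 529 patches, and the 50% overlap turns a 512 × 512 toy tile into 9 patches instead of 4.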

Model Configuration

For the segmentation task, we used a ResNet-18 backbone pre-trained on ImageNet. The network was trained with mini-batch Stochastic Gradient Descent (SGD) using an initial learning rate of 0.001, a momentum of 0.9, and a weight decay of 0.0005. Training ran for 100 epochs with a cosine annealing learning rate schedule, and horizontal and vertical flipping were applied during training to reduce overfitting. The batch size was set to 32, a choice guided by memory constraints and validation performance, and all experiments used 3-channel input images of 256 × 256 pixels.
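To illustrate the learning rate schedule, the sketch below evaluates the standard closed-form cosine annealing rule for the stated initial rate of 0.001 over 100 epochs. The minimum learning rate `eta_min = 0` and the absence of warm restarts are assumptions, since neither is specified above, and the function name is hypothetical.

```python
import math

def cosine_annealing_lr(epoch, base_lr=0.001, total_epochs=100, eta_min=0.0):
    """Learning rate at a given epoch under cosine annealing without restarts."""
    cos_term = 1.0 + math.cos(math.pi * epoch / total_epochs)
    return eta_min + 0.5 * (base_lr - eta_min) * cos_term

# Learning rate at the start of each epoch, plus the final value at epoch 100.
schedule = [cosine_annealing_lr(e) for e in range(101)]
```

The rate starts at 0.001, falls to half that value at epoch 50, and approaches `eta_min` at epoch 100. In PyTorch, a comparable setup would pair `torch.optim.SGD` (with `lr=0.001`, `momentum=0.9`, `weight_decay=0.0005`) with `torch.optim.lr_scheduler.CosineAnnealingLR`, where `T_max=100` is again an assumed setting.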

Pseudo-Label Generation with SAM Branch

Within our framework, the Segment Anything Model (SAM) was used in a zero-shot setting, without fine-tuning, to generate initial pseudo-labels from positive and negative point prompts. To improve the quality of these pseudo-labels and their compatibility with the Pseudo-Generated Branch, we applied prompt engineering: one point served as the positive prompt and the others as negative prompts. This approach aims for robust generalization while avoiding the computational cost of fine-tuning SAM.
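One way to read this prompting scheme is sketched below: given a set of annotated points, each point in turn is marked as foreground while all remaining points are marked as background. The helper `build_point_prompts`, the example coordinates, and the choice to cycle through every point are illustrative assumptions. The label convention (1 for foreground, 0 for background) and the (x, y) coordinate order follow the official `segment_anything` package.

```python
import numpy as np

def build_point_prompts(points, positive_index):
    """Mark one annotated point as foreground (1) and all others as background (0)."""
    coords = np.asarray(points, dtype=np.float32)  # shape (N, 2), (x, y) in pixels
    labels = np.zeros(len(coords), dtype=np.int32)
    labels[positive_index] = 1
    return coords, labels

# Hypothetical point annotations inside a 256 x 256 patch.
points = [(40, 60), (128, 200), (220, 30)]

# One prompt set per annotated point: that point positive, the rest negative.
prompt_sets = [build_point_prompts(points, i) for i in range(len(points))]
```

With the official package, each `(coords, labels)` pair could then be passed to `SamPredictor.predict` as `point_coords` and `point_labels` after calling `set_image` on the patch; loading the SAM checkpoint is omitted here.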

Together, the datasets, evaluation metrics, and experimental configuration described above provide the basis for our study of weakly supervised semantic segmentation in remote sensing imagery, and are intended to support practical engineering applications and further work in the field.