Understanding Drone-Captured Datasets in Object Detection: Insights from VisDrone2019 and TinyPerson

The Unique Characteristics of Datasets

In computer vision, the choice of dataset strongly influences how well an object detection algorithm performs. Conventional datasets tend to be skewed toward larger objects, which dominate the imagery. Drone-captured images show the opposite pattern: small and very small objects make up most of the targets, while large objects are rare. This shift in object size distribution makes drone-captured images well suited to our experiments on small object detection. For validation, we used two well-established public datasets: VisDrone2019 and TinyPerson.
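To make the notion of an object size distribution concrete, the sketch below bins bounding boxes into small, medium, and large using the COCO area convention (below 32 × 32 pixels, below 96 × 96 pixels, and everything larger). The function names and the sample boxes are hypothetical and chosen only for illustration; they are not taken from either dataset.

```python
from collections import Counter

# COCO convention: area thresholds in square pixels.
SMALL_MAX_AREA = 32 ** 2
MEDIUM_MAX_AREA = 96 ** 2
BUCKETS = ("small", "medium", "large")


def size_bucket(width, height):
    """Return 'small', 'medium', or 'large' for a box of the given pixel size."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def size_distribution(boxes):
    """Fraction of boxes per bucket; boxes is a list of (width, height) pairs."""
    counts = Counter(size_bucket(w, h) for w, h in boxes)
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in BUCKETS}
    return {name: counts.get(name, 0) / total for name in BUCKETS}


# Hypothetical box sizes, made up for illustration only.
example_boxes = [(12, 20), (8, 8), (40, 50), (150, 120)]
print(size_distribution(example_boxes))
```

Running the same function over the annotation files of a conventional dataset and a drone dataset would give a direct view of the skew described above.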

VisDrone2019: A Comprehensive Dataset

The VisDrone2019 dataset contains 10,209 high-resolution images (2000 × 1500), split into training (6,471), validation (548), and test (3,190) sets. It covers ten target categories, including pedestrians, cars, and bicycles. The high resolution and dense distribution of targets make VisDrone2019 particularly valuable for scenarios in which small objects are prevalent.

TinyPerson: Focus on the Minute

The TinyPerson dataset is a dedicated collection of 1,610 images, with 794 allocated for training and 816 for testing. It contains 547,800 human targets, with sizes ranging from 2 to 20 pixels. The dataset includes two types of targets: “sea person” (people captured in marine environments) and “earth person” (people on land). Because our objective is object detection, we treat both categories as a single class, “people.” TinyPerson is a demanding testbed for small object detection, especially in complex environments with heavy occlusion.
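Collapsing the two TinyPerson categories into one class is a simple label remapping. The sketch below assumes a hypothetical annotation format (a list of dictionaries with `class_id` and `bbox` keys) and hypothetical class ids; the actual TinyPerson annotation files may be organized differently.

```python
# Hypothetical target id for the single merged "people" class.
MERGED_CLASS_ID = 0


def merge_person_classes(annotations):
    """Return copies of the annotations with every class id set to the merged id.

    Bounding boxes and any other fields are left untouched.
    """
    return [{**ann, "class_id": MERGED_CLASS_ID} for ann in annotations]


# Made-up annotations: one target per original category.
sample = [
    {"class_id": 0, "bbox": [10, 12, 6, 14]},
    {"class_id": 1, "bbox": [200, 40, 9, 18]},
]
merged = merge_person_classes(sample)
print(merged)
```

The function returns new dictionaries rather than modifying the input, so the original two-class labels remain available if they are needed later.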

Implementation Details: Building the Framework

Experimental Setup

The experiments were run on Windows with Python 3.9 and PyTorch 2.4.0+cu121. Training and inference used an NVIDIA GeForce RTX 4060 Ti GPU.

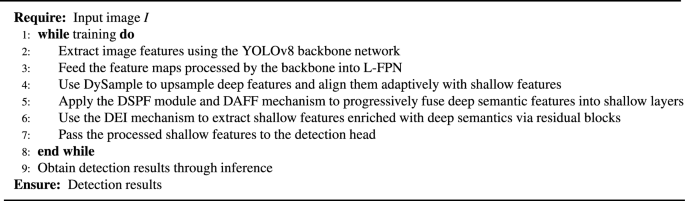

We chose YOLOv8n as the baseline model because of its low complexity and small parameter count, which suit edge devices that require real-time performance. Its architecture also provides high-resolution shallow feature maps, which are crucial for detecting small objects. Adding the P2 layer to YOLOv8n gives YOLOv8n+P2, which serves as our reference baseline.
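A short calculation shows why a P2 layer matters for small targets. The sketch below assumes the strides conventionally associated with pyramid levels P2 to P5 (4, 8, 16, 32) and a hypothetical 640-pixel input; neither value is stated in the text above.

```python
# Strides conventionally associated with pyramid levels P2..P5 (assumed).
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def grid_size(image_size, stride):
    """Side length of the feature map for a square input of image_size pixels."""
    return image_size // stride


def object_extent_in_cells(object_pixels, stride):
    """How many feature-map cells an object of object_pixels spans along one axis."""
    return object_pixels / stride


image_size = 640      # hypothetical input resolution
object_pixels = 10    # a target 10 pixels wide

for level, stride in STRIDES.items():
    cells = object_extent_in_cells(object_pixels, stride)
    print(f"{level}: {grid_size(image_size, stride)}x{grid_size(image_size, stride)} grid, "
          f"object spans {cells:.2f} cells")
```

Under these assumptions a 10-pixel target covers 2.5 cells at P2 but only 1.25 cells at P3, and less than one cell at the deeper levels, which is the motivation for adding the shallower layer.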

Training Protocols

For the VisDrone2019 dataset, we used the SGD optimizer with a cosine annealing learning rate schedule, starting at 0.01 and decaying to 0.0001 over 300 epochs. The batch size was set to 8, and data augmentation was applied to improve the model's robustness. For the TinyPerson dataset, training with image sizes capped at 1024 pixels required a smaller batch size of 2 to avoid running out of GPU memory.
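The learning rate schedule described above can be sketched with the standard cosine annealing formula. This is an illustration of the general technique using the stated start rate, end rate, and epoch count; the exact schedule produced by a given training framework (for example, with warmup) may differ.

```python
import math


def cosine_lr(epoch, total_epochs=300, lr_start=0.01, lr_end=0.0001):
    """Learning rate at a given epoch under standard cosine annealing."""
    progress = epoch / total_epochs
    return lr_end + 0.5 * (lr_start - lr_end) * (1 + math.cos(math.pi * progress))


for epoch in (0, 75, 150, 225, 300):
    print(f"epoch {epoch:3d}: lr = {cosine_lr(epoch):.6f}")
```

The rate falls slowly at first, fastest around the midpoint, and flattens again as it approaches the final value.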

Evaluation Metrics

To measure detection performance, we used precision, recall, the F1 score, and mean average precision (mAP) at different intersection over union (IoU) thresholds (mAP50 and mAP50-95). Together with the parameter counts and GFLOPs reported in the comparisons, these metrics allow a thorough assessment of both accuracy and efficiency.
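The building blocks of these metrics are straightforward to compute. The sketch below shows IoU for two axis-aligned boxes and precision, recall, and F1 from true positive, false positive, and false negative counts. The box format `(x1, y1, x2, y2)` and the function names are assumptions made for illustration; computing mAP additionally requires ranking detections by confidence and is not shown.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
print(precision_recall_f1(tp=8, fp=2, fn=2))
```

A detection counts as a true positive at mAP50 when its IoU with a ground-truth box is at least 0.5; mAP50-95 averages over thresholds from 0.5 to 0.95.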

Experimental Outcomes on VisDrone2019

Method Comparisons

The proposed BPD-YOLO model was compared against existing detectors. BPD-YOLO achieved notable improvements over other advanced models, including RetinaNet and Faster R-CNN. Specifically, it delivered a 9% gain in mAP50 over RetinaNet while using significantly fewer parameters. Similar trends appeared in comparisons with lightweight models such as C3TB-YOLOv5 and YOLOv7-Tiny, which indicates that BPD-YOLO balances computational efficiency and detection accuracy well.

Baseline Comparisons

Compared with the baseline YOLOv8n+P2, BPD-YOLO improved mAP50 and mAP50-95 by 2.8% and 1.4%, respectively, while computational cost dropped by 0.8 GFLOPs. Confusion matrices further confirmed these improvements, particularly in the accuracy of motor vehicle detection.

Insights from TinyPerson Dataset Experiments

The findings on the TinyPerson dataset paralleled those from VisDrone2019. BPD-YOLOn achieved a 1.1% improvement in mAP50 and a reduction of 1.43M parameters compared with the baseline YOLOv8n+P2. In this challenging small-target setting, BPD-YOLO again performed better, identifying densely packed objects that conventional models missed.

Ablation Studies: Unpacking the Mechanisms

Evaluating L-FPN

An ablation study assessed the effectiveness of the L-FPN architecture. L-FPN consistently outperformed traditional FPN configurations across several backbone architectures, which indicates that its benefit does not depend on a particular backbone.

Testing Various Network Architectures

Further experiments paired L-FPN with various network architectures and compared it against alternatives such as AFPN and UNet++. The results show that L-FPN integrates with different models and improves small object detection without increasing computational demands.

Further Ablations: Enhancing Detection Capabilities

Investigation of Upsampling Methods

Experiments with several upsampling techniques showed that DySample outperforms traditional methods: incorporating it improved detection accuracy while keeping computational cost low.

Exploring Dilation Rates

We also examined how the dilation rates of the convolutional layers affect performance. The configuration (1, 2, 3) gave the highest accuracy, which underlines the value of tuning this setting for the detection task.
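The effect of a dilation rate on a single convolution can be worked out directly. The sketch below computes the effective kernel size and the padding that preserves spatial size at stride 1, assuming a 3 × 3 kernel (the kernel size is an assumption; it is not stated above). How the three rates are arranged within the network, in parallel branches or in sequence, is also not specified here, so only the per-layer quantities are shown.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def same_padding(kernel_size, dilation):
    """Padding that keeps the output size equal to the input at stride 1 (odd kernels)."""
    return dilation * (kernel_size - 1) // 2


kernel_size = 3  # assumed kernel size
for dilation in (1, 2, 3):
    print(f"dilation {dilation}: effective kernel "
          f"{effective_kernel_size(kernel_size, dilation)}, "
          f"padding {same_padding(kernel_size, dilation)}")
```

With a 3 × 3 kernel, rates 1, 2, and 3 cover 3, 5, and 7 pixels per axis with the same nine weights, so the layer sees more context without adding parameters.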

Depthwise Separable Convolutions: A Performance Enhancer

Finally, we assessed the impact of depthwise separable convolutions, which gave a notable efficiency gain without a significant sacrifice in accuracy. This technique made the model lighter still, which matters for small object detection on resource-constrained devices.
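The efficiency gain from depthwise separable convolutions follows from a parameter count. The sketch below compares a standard convolution with a depthwise convolution followed by a 1 × 1 pointwise convolution, ignoring bias terms. The channel counts and kernel size are hypothetical values chosen for illustration, not figures from the model.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a k x k depthwise conv plus a 1 x 1 pointwise conv (bias ignored)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


c_in, c_out, k = 64, 128, 3  # hypothetical layer shape
standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
print(standard, separable, separable / standard)
```

The ratio equals 1/c_out + 1/k², so for a 3 × 3 kernel and a wide layer the separable version needs roughly one ninth of the weights.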

In summary, experiments on the drone-captured datasets VisDrone2019 and TinyPerson show that the proposed BPD-YOLO architecture improves small object detection while balancing detection accuracy and computational efficiency, and they provide a basis for further work in this field.