Optimizer Analysis: Adam vs. Adamax

The choice of optimization algorithm strongly influences how well a model trains. This article compares two widely used optimizers, Adam and Adamax, using the accuracy, loss, and validation curves recorded throughout training.

Adam Optimizer Analysis

Epoch-wise Performance

Table 3 lists the epoch-wise accuracy and loss for the Adam optimizer. Between Epoch 5 and Epoch 10, accuracy rose from 0.705 to 0.816, an improvement of 0.111, while loss fell from 0.739 to 0.470, a reduction of 0.269. The trend continued into Epoch 15, with validation accuracy rising from 0.797 to 0.891, consistent with effective optimization.

From Epoch 15 to Epoch 20, accuracy rose from 0.901 to 0.940, an improvement of 0.039, and loss decreased from 0.267 to 0.164. After Epoch 20, the metrics fluctuated slightly, indicating that the model had entered a stabilization phase.
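The changes quoted above are simple differences between epochs in Table 3. As an arithmetic check, the short sketch below recomputes them from the values given in the text; the dictionary layout and the helper name `change` are illustrative assumptions, not the format of the original table.

```python
# Adam values quoted in the text (Table 3); keys are epoch numbers.
train_acc = {5: 0.705, 10: 0.816, 15: 0.901, 20: 0.940}
train_loss = {5: 0.739, 10: 0.470, 15: 0.267, 20: 0.164}

def change(metric, start, end):
    """Difference between two epochs, rounded to the table's three decimals."""
    return round(metric[end] - metric[start], 3)

acc_gain_5_10 = change(train_acc, 5, 10)
loss_drop_5_10 = -change(train_loss, 5, 10)    # negate so a drop is positive
acc_gain_15_20 = change(train_acc, 15, 20)
loss_drop_15_20 = -change(train_loss, 15, 20)

print(acc_gain_5_10, loss_drop_5_10, acc_gain_15_20, loss_drop_15_20)
```

The last value, the loss reduction between Epoch 15 and Epoch 20, is not stated in the text but follows directly from the two loss values it quotes.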

Visual Representation

Figure 4 shows how accuracy and loss evolved over the 30 training epochs. Between Epoch 25 and Epoch 30, accuracy rose modestly from 0.939 to 0.970 as training settled, while loss decreased from 0.171 to 0.081.

The confusion matrix in Figure 4(c) breaks the model's predictions down by class, showing which classes are predicted correctly and where misclassifications occur.

Adamax Optimizer Analysis

Epoch-wise Performance

The Adamax optimizer showed different training dynamics, as Table 4 illustrates. Accuracy increased steadily from 0.613 at Epoch 5 to 0.929 at Epoch 30, and validation accuracy followed the same trend, rising from 0.623 to 0.919. The small gap between training and validation accuracy at Epoch 30 suggests that the model generalizes well to unseen data.

Training loss likewise declined, from 0.984 to 0.210 by Epoch 30, and the validation loss followed a similar downward trend.
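The generalization argument rests on how closely the training and validation curves track each other. Using only the Adamax values quoted above, the sketch below computes the overall gains and the final training-validation gap; the tuple layout is an illustrative assumption, with the first entry being the first value quoted in the text.

```python
# Adamax values quoted in the text (Table 4): (first quoted value, Epoch 30).
train_acc = (0.613, 0.929)
val_acc = (0.623, 0.919)
train_loss = (0.984, 0.210)

total_gain = round(train_acc[1] - train_acc[0], 3)
val_gain = round(val_acc[1] - val_acc[0], 3)
# Training minus validation accuracy at Epoch 30; a small value signals
# little overfitting.
final_gap = round(train_acc[1] - val_acc[1], 3)
loss_drop = round(train_loss[0] - train_loss[1], 3)

print(f"training gain {total_gain}, validation gain {val_gain}, "
      f"final gap {final_gap}, loss drop {loss_drop}")
```

A final gap of about 0.01 is small next to an overall accuracy gain of about 0.3, which is what supports reading the curves as evidence of good generalization.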

Visual Representation

In Figure 5, the accuracy curve improves continuously while the validation loss decreases steadily over the epochs. The confusion matrix in Figure 5(c) shows an overall classification accuracy of 93.8%.
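The overall accuracy read from a confusion matrix is the sum of its diagonal (correct predictions) divided by the total number of samples. The sketch below builds a four-class confusion matrix from label arrays with NumPy and computes that ratio; the helper names and the labels are hypothetical and do not come from Figure 5(c).

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm

def overall_accuracy(cm):
    """Correct predictions lie on the diagonal."""
    return np.trace(cm) / cm.sum()

# Hypothetical labels for classes 0-3; not the paper's data.
y_true = np.array([0, 0, 1, 1, 2, 2, 3, 3, 3, 0])
y_pred = np.array([0, 1, 1, 1, 2, 2, 3, 3, 2, 0])

cm = confusion_matrix(y_true, y_pred, num_classes=4)
print(cm)
print(f"accuracy = {overall_accuracy(cm):.3f}")
```

With these ten hypothetical samples, eight lie on the diagonal, so the accuracy is 0.800.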

Comparison of Optimizers

Table 5 compares the per-class performance of both optimizers. With Adam, Disease Class 3 reached a precision of 0.97 and an F1 score of 0.99, and the model's overall accuracy was 0.96. With Adamax, Disease Class 0 reached a precision of 0.96, and the overall accuracy was 0.91.

Although Adam outperformed Adamax in precision across all classes, Adamax achieved a higher recall for Disease Class 0, meaning it correctly identified a larger share of the true Class 0 cases. Overall, however, Adam achieved the higher accuracy (0.96 versus 0.91).
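Per-class precision divides a class's correct predictions by all predictions of that class (a column of the confusion matrix), recall divides them by all true members of the class (a row), and F1 is their harmonic mean, 2PR/(P + R), so it always lies between precision and recall. The hypothetical matrix below, which is not Table 5's data, shows how precision and recall can diverge for a class when the model assigns that label too liberally; the helper name `per_class_metrics` is illustrative.

```python
import numpy as np

def per_class_metrics(cm):
    """Precision, recall and F1 per class; rows = true, columns = predicted."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: how many samples truly belong to it
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom,
                   out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1

# Hypothetical 4-class confusion matrix (50 true samples per class).
cm = np.array([
    [48,  2,  0,  0],
    [ 6, 40,  4,  0],
    [ 2,  3, 45,  0],
    [ 0,  0,  1, 49],
])

precision, recall, f1 = per_class_metrics(cm)
for k in range(len(cm)):
    print(f"class {k}: precision {precision[k]:.3f}, "
          f"recall {recall[k]:.3f}, F1 {f1[k]:.3f}")
```

Here class 0 is predicted 56 times but only 48 of those predictions are correct, so its precision is about 0.857 while its recall is 0.96.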

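One plausible explanation lies in how the two optimizers normalize their updates. Adam divides a bias-corrected moving average of the gradients by the square root of a moving average of squared gradients, so each parameter's step size adapts to its recent gradient magnitude. Adamax replaces the squared-gradient average with an exponentially weighted infinity norm, a slowly decaying running maximum of absolute gradients; because a single large gradient can dominate this maximum, Adamax may take smaller steps for many iterations afterwards. On this task, Adam converged faster (0.705 versus 0.613 accuracy at Epoch 5) and to a higher final accuracy. Adam's bias-correction terms also compensate for the zero initialization of its moment estimates, keeping early updates well scaled and stabilizing the first stages of training.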
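To make the difference concrete, the sketch below implements single update steps of both optimizers in NumPy, following the update rules given by Kingma and Ba in the paper that introduced Adam and Adamax, and runs them on a toy quadratic loss. It is not the authors' training code: the function names, the `state` dictionary, the toy loss, and the learning rate of 0.05 are illustrative assumptions, and the `eps` term in Adamax is a numerical safeguard of the kind library implementations add, not part of the paper's update rule.

```python
import numpy as np

def adam_step(theta, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; `state` holds the moment estimates and step count."""
    state["t"] += 1
    t = state["t"]
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad       # average of gradients
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2  # average of squared gradients
    m_hat = state["m"] / (1 - beta1 ** t)  # bias correction for zero-initialized moments
    v_hat = state["v"] / (1 - beta2 ** t)
    return theta - lr * m_hat / (np.sqrt(v_hat) + eps)

def adamax_step(theta, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adamax update: the squared-gradient average becomes a decaying max."""
    state["t"] += 1
    t = state["t"]
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["u"] = np.maximum(beta2 * state["u"], np.abs(grad))  # infinity-norm estimate
    # Only the first moment needs bias correction; eps avoids division by zero.
    return theta - (lr / (1 - beta1 ** t)) * state["m"] / (state["u"] + eps)

def minimize(step_fn, state, lr, steps=500):
    """Minimize the toy loss f(theta) = 0.5 * ||theta||^2, whose gradient is theta."""
    theta = np.array([1.0, -2.0])
    for _ in range(steps):
        theta = step_fn(theta, theta.copy(), state, lr=lr)
    return theta

adam_theta = minimize(adam_step, {"t": 0, "m": np.zeros(2), "v": np.zeros(2)}, lr=0.05)
adamax_theta = minimize(adamax_step, {"t": 0, "m": np.zeros(2), "u": np.zeros(2)}, lr=0.05)
print("Adam:  ", adam_theta)
print("Adamax:", adamax_theta)
```

On the first iteration both optimizers move each coordinate by roughly `lr` in the direction opposite its gradient, because the bias-corrected first moment equals the gradient and the denominator equals its magnitude; they differ afterwards in how they remember past gradient magnitudes. Kingma and Ba suggest a default step size of 0.002 for Adamax; the sketch uses the same learning rate for both only to keep the comparison simple.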

Comparative Performance Against Other Models

The Adam-optimized CNN was also compared with established transfer learning models such as ResNet50 and YOLOv8. Table 6 summarizes this comparison, in which the Adam-optimized CNN achieved an accuracy of 0.96.

Performance Metrics Comparison

In this comparison, YOLOv8 came closest to the proposed model, with a precision of 0.938 and the highest F1-score, 0.939. ResNet50 performed adequately, reaching an accuracy of 0.93, but trailed both the Adam-optimized CNN and YOLOv8 in F1-score and accuracy.

Visualization of Results

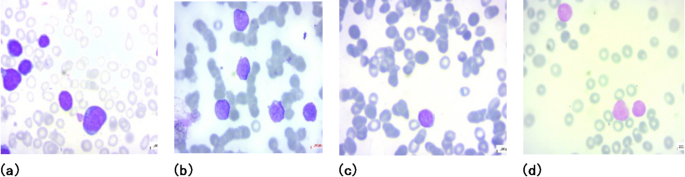

Figure 7 shows sample outputs of the proposed model, which assigns each sample to one of four disease classes: Benign, Early, Pre, and Pro. Distinguishing these classes can support diagnosis and help tailor treatment protocols.

State-of-the-Art Techniques

Table 7 compares a range of existing techniques for identifying Acute Lymphoblastic Leukemia (ALL). The proposed Adam-optimized CNN achieved a competitive accuracy of 96.00%. Several models reported higher accuracies, such as a ResNet50-based approach at 99.38%, but many of these were developed on smaller, different datasets, so their results are not directly comparable and may not carry over to real-world scenarios.

These results suggest that the Adam-optimized CNN offers a reliable framework for classifying ALL and is well suited to this kind of complex medical diagnostic task.

Taken together, the epoch-wise metrics, training curves, and model comparisons show that optimizer choice has a measurable effect on performance: on this task, Adam reached an overall accuracy of 0.96, compared with 0.91 for Adamax. Careful optimizer selection can therefore yield meaningful gains in machine learning applications.