Rethinking Machine Learning in High-Stakes Decisions

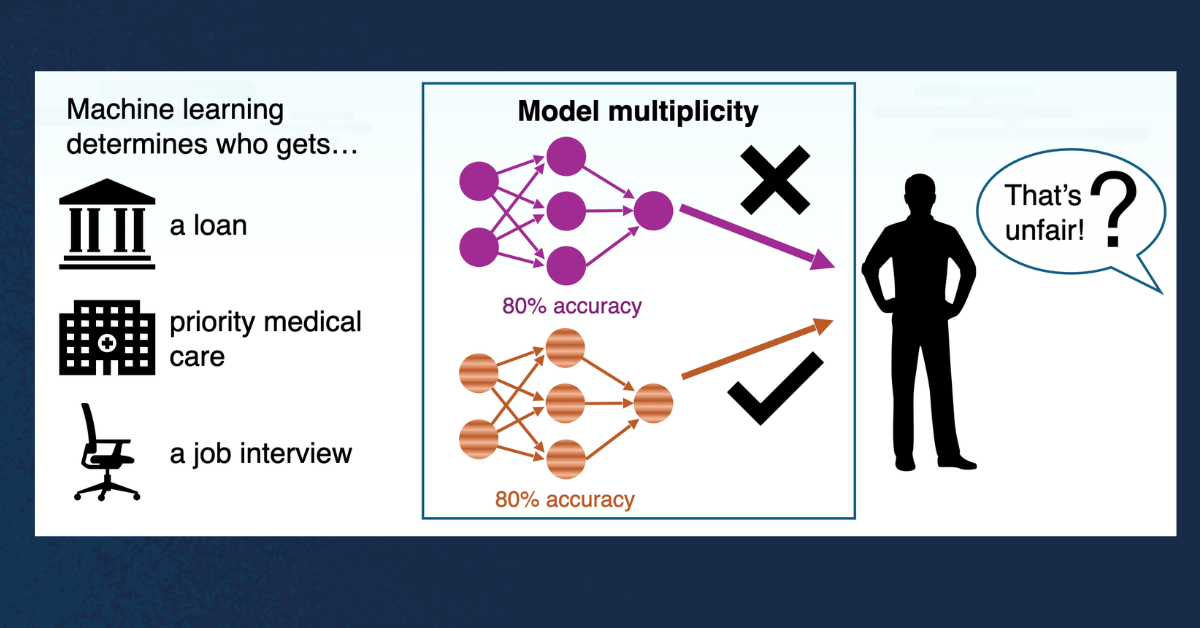

Machine learning (ML) is now embedded in high-stakes decision-making across many domains. From hiring to loan approvals, algorithms increasingly steer choices that shape people’s lives. But are we relying too heavily on a single ML model, especially when equally accurate models can reach different outcomes for the same person?

The Challenge with Single-Model Decision Making

Researchers from the University of California San Diego and the University of Wisconsin–Madison are challenging the common practice of using one model to make such pivotal decisions. Their work centers on one question: how do people react when "equally good" ML models arrive at different conclusions? The study draws on human-computer interaction research and examines the implications of model multiplicity, the situation in which several models perform equally well overall yet disagree on individual cases.

Groundbreaking Research Led by Loris D’Antoni

Associate Professor Loris D’Antoni of UC San Diego’s Jacobs School of Engineering leads the research. Recently, at the 2025 Conference on Human Factors in Computing Systems (CHI), D’Antoni presented findings from a paper titled "Perceptions of the Fairness Impacts of Multiplicity in Machine Learning." The research, which began during his time at the University of Wisconsin, explores how inconsistent yet accurate models affect stakeholders’ perceptions of fairness.

Exploring Objectivity and Fairness

D’Antoni and his team started from the common observation that different models can produce different outcomes for the same input. For instance, one well-performing model may reject a job application while another, equally good, approves it. This variability raises the question of whether, and how, objective decisions can be made when model outputs conflict. “ML researchers posit that current practices pose a fairness risk,” D’Antoni stated, explaining the motivation for the study.
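To make the idea concrete, here is a minimal Python sketch of two models that are equally accurate overall yet disagree on individual applicants. Everything in it is an assumption made for illustration: the eight applicants, their labels, and the two threshold rules `model_a` and `model_b` are invented and do not come from the study.

```python
# Toy illustration of model multiplicity. The applicants, labels, and both
# decision rules below are invented for this sketch; they are not from the study.

# Each applicant: (years_experience, test_score, truly_qualified)
applicants = [
    (5, 80, 1),
    (1, 50, 0),
    (4, 60, 1),
    (2, 75, 1),
    (6, 90, 1),
    (0, 40, 0),
    (3, 65, 0),
    (2, 85, 0),
]


def model_a(years_experience, test_score):
    """Hypothetical model A: approve applicants with at least 3 years of experience."""
    return 1 if years_experience >= 3 else 0


def model_b(years_experience, test_score):
    """Hypothetical model B: approve applicants with a test score of at least 70."""
    return 1 if test_score >= 70 else 0


def accuracy(model, data):
    """Fraction of applicants whose label the model predicts correctly."""
    correct = sum(1 for years, score, label in data if model(years, score) == label)
    return correct / len(data)


# Applicants on whom the two models give opposite decisions.
disagreements = [
    (years, score)
    for years, score, _ in applicants
    if model_a(years, score) != model_b(years, score)
]

print("accuracy A:", accuracy(model_a, applicants))
print("accuracy B:", accuracy(model_b, applicants))
print("applicants with conflicting decisions:", disagreements)
```

On this toy data both rules are right for six of the eight applicants, yet they give opposite answers for four of them. Which model happens to be deployed therefore decides the outcome for half of the applicants, even though neither model is measurably better.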

Stakeholder Insights: A Shift in Perspective

As part of their study, the researchers engaged with lay stakeholders, ordinary people who are directly affected by these automated decisions. They examined how these stakeholders think decisions should be made when equally accurate models give conflicting predictions.

The results were revealing. Many participants objected to sticking with a single model when multiple equally good models disagree. They also showed a strong aversion to randomizing decisions in such cases. These findings point to a significant gap between standard ML practice and the views of the people who experience the consequences firsthand.

Implications for Future Model Development

First author Anna Meyer, a PhD student under D’Antoni’s mentorship, pointed out the contrast: "We find these results interesting because these preferences contrast with standard practice in ML development and philosophy research on fair practices." This gap between stakeholder preferences and current practice could inform future model development and policy.

Recommendations for a Fairer Approach

The research team offers several recommendations based on these findings. They advocate exploring a broader range of models rather than committing to a single one. They also stress the need for human decision-making to mediate disagreements among models, especially in high-stakes domains such as finance or employment.
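One way to picture the second recommendation is a decision rule that automates only the cases where every model agrees and hands the rest to a person. The Python sketch below is an assumption-laden illustration, not the researchers’ method: the function `decide`, the two threshold models, and the `"human_review"` outcome are all hypothetical names introduced here.

```python
from typing import Callable, Sequence, Tuple

# An applicant is a (years_experience, test_score) pair; a model maps it to
# 1 (approve) or 0 (reject). All names and thresholds here are hypothetical.
Applicant = Tuple[int, int]
Model = Callable[[Applicant], int]


def experience_model(applicant: Applicant) -> int:
    """Hypothetical model: approve with at least 3 years of experience."""
    return 1 if applicant[0] >= 3 else 0


def score_model(applicant: Applicant) -> int:
    """Hypothetical model: approve with a test score of at least 70."""
    return 1 if applicant[1] >= 70 else 0


def decide(models: Sequence[Model], applicant: Applicant) -> str:
    """Automate only unanimous decisions; send any disagreement to a person."""
    votes = {model(applicant) for model in models}
    if votes == {1}:
        return "approve"
    if votes == {0}:
        return "reject"
    return "human_review"


models = [experience_model, score_model]
for applicant in [(5, 80), (1, 50), (4, 60)]:
    print(applicant, "->", decide(models, applicant))
```

The sketch deliberately does not break disagreements at random, in line with the aversion to randomization that participants expressed. How a human reviewer should then weigh the conflicting outputs is outside what this example covers.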

The Bigger Picture

Alongside D’Antoni, the research team includes Aws Albarghouthi, an associate professor at the University of Wisconsin, and Yea-Seul Kim from Apple. Their collaboration underscores the value of interdisciplinary research in addressing complex problems posed by technology.

In summary, this research highlights a growing concern in machine learning: how the existence of multiple equally good models affects perceptions of fairness and objectivity in decision-making. As ML continues to evolve, these insights could help shape more equitable systems, improve transparency, and build trust between technology and the people it serves.