Exploring Patient Insights in Gastric Cancer Research: A Detailed Study

Patient Overview

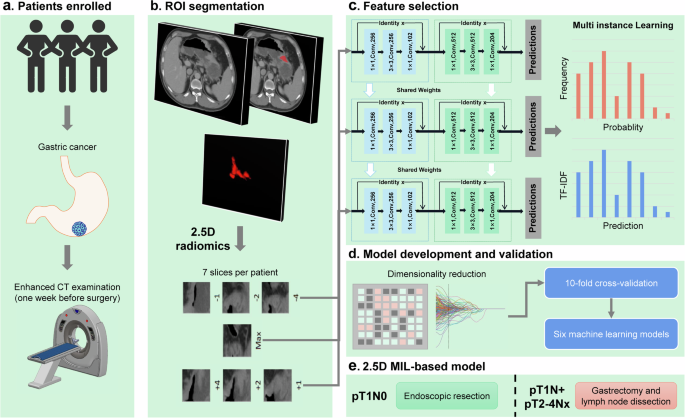

This study investigated patients at two medical centers in China: Zhejiang Cancer Hospital and Zhejiang Hospital of Traditional Chinese Medicine. It focused on individuals who underwent radical gastrectomy for gastric adenocarcinoma or signet ring cell carcinoma within specific time frames between 2006 and 2019. Explicit inclusion and exclusion criteria were defined to ensure consistent, high-quality data.

Inclusion Criteria

To be part of this study, participants needed to meet the following criteria:

- A pathological diagnosis of either gastric adenocarcinoma or signet ring cell carcinoma.

- Completion of radical gastrectomy with R0 resection and D2 lymphadenectomy.

- An abdominal CT within one week prior to surgery.

- Comprehensive clinicopathological and follow-up data available until May 2024.

- High-quality CT images in which lesions were clearly visible and free of artifacts that could hinder analysis.

Exclusion Criteria

Conversely, patients were excluded based on certain factors:

- Incomplete clinicopathological data.

- Coexistence of other malignant tumors.

- Poor gastric distention that complicated tumor segmentation.

- Significant artifacts on CT images that compromised lymph node observation.

These criteria were intended to ensure that the data were reliable enough to support analyses of treatment outcomes and tumor characteristics.
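As an illustration only, the inclusion and exclusion criteria can be expressed as a filter over patient records. The `PatientRecord` fields and all function names below are hypothetical and do not reflect the study's actual data schema; "within one week" is assumed to mean 0 to 7 days, and the image-quality criterion is folded into a single artifact flag for brevity.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical record layout; field names are illustrative, not from the study.
@dataclass
class PatientRecord:
    histology: str
    r0_resection: bool
    d2_lymphadenectomy: bool
    days_ct_before_surgery: int
    complete_clinicopathological_data: bool
    complete_followup: bool
    other_malignancy: bool
    poor_gastric_distention: bool
    severe_ct_artifacts: bool

ELIGIBLE_HISTOLOGY = {"adenocarcinoma", "signet ring cell carcinoma"}

def meets_inclusion(p: PatientRecord) -> bool:
    """Eligible histology, R0 resection with D2 dissection, CT within 7 days, complete data."""
    return (
        p.histology in ELIGIBLE_HISTOLOGY
        and p.r0_resection
        and p.d2_lymphadenectomy
        and 0 <= p.days_ct_before_surgery <= 7
        and p.complete_clinicopathological_data
        and p.complete_followup
    )

def meets_exclusion(p: PatientRecord) -> bool:
    """Any single exclusion condition removes the patient."""
    return (
        not p.complete_clinicopathological_data
        or p.other_malignancy
        or p.poor_gastric_distention
        or p.severe_ct_artifacts
    )

def select_cohort(patients: List[PatientRecord]) -> List[PatientRecord]:
    """Keep patients who meet every inclusion criterion and no exclusion criterion."""
    return [p for p in patients if meets_inclusion(p) and not meets_exclusion(p)]
```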

Ethical Considerations

The study was multicenter and retrospective: it drew on existing patient data rather than enrolling new participants. Informed consent was obtained at both hospitals, and the research was approved by each hospital's ethics committee in accordance with the Declaration of Helsinki. To protect patient privacy, identifying metadata were removed from the DICOM files, while the technical details needed for image analysis were retained.
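A minimal sketch of the metadata cleaning step, assuming the DICOM header has already been read into a plain dictionary keyed by DICOM attribute keyword (a library such as pydicom would normally handle reading and writing). The attribute list, the `deidentify` function, and the pseudonym format are hypothetical; a complete de-identification profile (DICOM PS3.15, Annex E) covers many more attributes.

```python
from typing import Any, Dict

# Hypothetical subset of identifying attributes, keyed by DICOM keyword.
IDENTIFYING_KEYWORDS = {
    "PatientName", "PatientID", "PatientBirthDate", "PatientAddress",
    "InstitutionName", "InstitutionAddress", "ReferringPhysicianName",
    "AccessionNumber",
}

def deidentify(header: Dict[str, Any], pseudonym: str) -> Dict[str, Any]:
    """Drop identifying attributes and store a study pseudonym as PatientID.

    Technical attributes such as PixelSpacing and SliceThickness are kept,
    because resampling and feature extraction depend on them.
    """
    cleaned = {k: v for k, v in header.items() if k not in IDENTIFYING_KEYWORDS}
    cleaned["PatientID"] = pseudonym
    return cleaned
```

Replacing `PatientID` with a pseudonym, rather than deleting it, keeps images linkable to the de-identified clinical and follow-up records.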

Tumor Segmentation

Accurate tumor segmentation is pivotal in imaging studies. In this study, three experienced radiologists used ITK-SNAP to delineate tumor boundaries on contrast-enhanced portal venous phase CT images. Careful segmentation was essential for capturing variations in tumor architecture and the tumor's relationships with adjacent structures.

The cases were divided among the radiologists, each segmentation was independently reviewed, and discrepancies were resolved through consensus discussion.
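The source does not say how discrepancies between readers were detected. One common way to quantify agreement between two segmentations is the Dice similarity coefficient; the sketch below is a swapped-in illustration, and the 0.8 threshold and the `needs_consensus` helper are hypothetical.

```python
import numpy as np

def dice(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks (1.0 means identical)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / total)

def needs_consensus(mask_a, mask_b, threshold: float = 0.8) -> bool:
    """Flag a case for consensus review when agreement falls below a hypothetical threshold."""
    return dice(mask_a, mask_b) < threshold
```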

Data Preprocessing

Before analysis, the data were preprocessed. To reduce the influence of extreme values, pixel intensities were clipped to a fixed range, improving consistency across the dataset. Because voxel spacing varied between volumes of interest, all volumes were resampled to a fixed resolution, allowing more accurate comparisons across images.
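The two preprocessing steps can be sketched as follows. The intensity range (-100 to 240 HU) and the 1 mm isotropic target spacing are hypothetical placeholders, since the source does not state the values used; `scipy.ndimage.zoom` is one standard way to resample an array by per-axis factors.

```python
import numpy as np
from scipy.ndimage import zoom

# Hypothetical settings; the study's actual range and target spacing are not given.
HU_RANGE = (-100.0, 240.0)
TARGET_SPACING = (1.0, 1.0, 1.0)  # (z, y, x) in mm

def clip_intensities(volume: np.ndarray, hu_range=HU_RANGE) -> np.ndarray:
    """Clamp voxel intensities so extreme values cannot dominate later statistics."""
    return np.clip(volume, hu_range[0], hu_range[1])

def resample(volume: np.ndarray, spacing, target=TARGET_SPACING, order: int = 1) -> np.ndarray:
    """Resample to a fixed voxel spacing; use order=1 for images and order=0 for masks."""
    factors = [s / t for s, t in zip(spacing, target)]
    return zoom(volume, factors, order=order)
```

Nearest-neighbor interpolation (`order=0`) keeps segmentation masks binary, while linear interpolation is a reasonable default for intensities.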

2.5D Deep Learning Radiomics

The study used an approach termed 2.5D deep learning radiomics. Conventional 2D deep learning models typically analyze a single slice; the 2.5D method instead uses multiple adjacent slices, capturing contextual information around the tumor. Because each patient contributes several slices, the approach also enlarges the training data, which is intended to improve predictive accuracy and support more refined analyses.

Data Generation

Generating the 2.5D dataset began with identifying regions of interest (ROIs) in the raw 3D images. The slice with the largest tumor cross-section was extracted together with several adjacent slices, yielding multiple images per patient. Including peri-tumoral tissue in these images added surrounding context to the assessment.
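A minimal sketch of this slice extraction, assuming a 3D volume and a binary tumor mask with the slice axis first. The function name, the number of adjacent slices (two on each side), and the 10-pixel peri-tumoral margin are hypothetical choices, not the study's settings.

```python
import numpy as np

def extract_25d_slices(volume, mask, n_adjacent=2, margin=10):
    """Crop the largest-area tumor slice plus n_adjacent slices on each side.

    Axis 0 is assumed to be the slice (z) axis. The in-plane crop is the tumor
    bounding box enlarged by `margin` pixels to keep peri-tumoral context.
    """
    areas = mask.reshape(mask.shape[0], -1).sum(axis=1)  # tumor area per slice
    z_center = int(np.argmax(areas))
    z_lo = max(z_center - n_adjacent, 0)
    z_hi = min(z_center + n_adjacent, volume.shape[0] - 1)

    ys, xs = np.nonzero(mask.any(axis=0))  # tumor footprint over all slices
    y0, y1 = max(ys.min() - margin, 0), min(ys.max() + margin + 1, volume.shape[1])
    x0, x1 = max(xs.min() - margin, 0), min(xs.max() + margin + 1, volume.shape[2])
    return [volume[z, y0:y1, x0:x1] for z in range(z_lo, z_hi + 1)]
```

Each returned crop becomes one 2D training image, so a patient contributes `2 * n_adjacent + 1` images when the tumor is not near the edge of the scan.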

Model Training

Models were trained on the 2.5D data using transfer learning. Several pre-trained deep learning networks, including DenseNet121 and ResNet50, were evaluated, with input images standardized by Z-score normalization. Real-time data augmentation increased the diversity of training samples, and the learning rate was adjusted during training to optimize model performance.
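The normalization, augmentation, and learning-rate pieces can be sketched independently of any deep learning framework. The source does not specify the augmentations or the learning-rate strategy, so the random horizontal flip and the cosine-annealing schedule below, along with their default values, are assumptions for illustration.

```python
import math
import numpy as np

def zscore(image, eps=1e-8):
    """Standardize an image to zero mean and unit variance."""
    image = np.asarray(image, dtype=np.float64)
    return (image - image.mean()) / (image.std() + eps)

def random_flip(image, rng):
    """On-the-fly augmentation (assumed): horizontal flip with probability 0.5."""
    return image[:, ::-1] if rng.random() < 0.5 else image

def cosine_lr(step, total_steps, base_lr=1e-3, min_lr=1e-6):
    """Cosine-annealed learning rate (assumed schedule), decaying from base_lr to min_lr."""
    progress = min(step / total_steps, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

Because augmentation is applied each time a sample is drawn, the network rarely sees exactly the same image twice, which helps when the number of patients is limited.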

Multi-Instance Learning

Multi-instance learning (MIL) was a key element of this research. In MIL, the multiple slices from a single patient are treated as individual instances belonging to that patient, providing a richer feature set for prediction. Slice-level predictions are then merged into a patient-level result, with the aim of capturing information relevant to patient outcomes.
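The simplest way to merge instance-level predictions into a bag-level score is pooling. The sketch below shows mean and max pooling of slice probabilities grouped by patient; the function names are hypothetical, and the study's own fusion may instead rely on the aggregation features described next.

```python
from collections import defaultdict
import numpy as np

def aggregate_bag(slice_probs, method="mean"):
    """Pool slice-level probabilities (instances) into one patient-level score (bag)."""
    p = np.asarray(slice_probs, dtype=float)
    if method == "mean":
        return float(p.mean())
    if method == "max":
        return float(p.max())
    raise ValueError(f"unknown pooling method: {method}")

def patient_scores(records, method="mean"):
    """records: iterable of (patient_id, slice_probability) pairs."""
    bags = defaultdict(list)
    for pid, prob in records:
        bags[pid].append(prob)
    return {pid: aggregate_bag(probs, method) for pid, probs in bags.items()}
```

Max pooling reflects the classic MIL assumption that one strongly positive instance makes the bag positive, while mean pooling is less sensitive to a single noisy slice.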

Feature Aggregation Techniques

Feature aggregation strategies, including histogram feature aggregation and Bag of Words (BoW), were used to combine slice-level predictions into patient-level feature representations. These methods take into account both how often features occur and how informative they are, improving the accuracy of subsequent analyses.
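A sketch of both aggregation ideas, under stated assumptions: histogram aggregation is applied to slice-level probabilities with five equal-width bins, and BoW assigns each slice feature vector to its nearest entry in a codebook (in practice the codebook would typically be learned, for example by k-means clustering). TF-IDF weighting is one standard way to account for both frequency and informativeness. All names and bin counts are hypothetical.

```python
import numpy as np

def histogram_features(slice_probs, bins=5, value_range=(0.0, 1.0)):
    """Histogram aggregation: fraction of a patient's slices falling in each probability bin."""
    counts, _ = np.histogram(slice_probs, bins=bins, range=value_range)
    return counts / max(len(slice_probs), 1)

def bow_features(slice_features, codebook):
    """Bag of Words: map each slice feature vector to its nearest codeword, then count."""
    dists = ((slice_features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    words = dists.argmin(axis=1)
    return np.bincount(words, minlength=len(codebook)) / len(words)

def tfidf(tf_matrix):
    """Reweight term frequencies (patients x codewords) so codewords shared by every patient count less."""
    n_docs = tf_matrix.shape[0]
    df = (tf_matrix > 0).sum(axis=0)
    idf = np.log((1 + n_docs) / (1 + df)) + 1.0
    return tf_matrix * idf
```

Both representations have a fixed length regardless of how many slices a patient contributes, which is what allows conventional classifiers to be applied downstream.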

2.5D Deep Learning Signature

From the MIL-derived features, significant features were selected using statistical techniques including LASSO regression and t-tests. The selected features were then fed into machine learning models, such as logistic regression and random forest, to build predictive models for clinical use.
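A minimal sketch of a two-stage selection pipeline using SciPy and scikit-learn: a t-test filter followed by cross-validated LASSO, then logistic regression on the surviving features. The ordering of the stages, the p < 0.05 threshold, and the synthetic data are assumptions for illustration, not the study's exact procedure or results.

```python
import numpy as np
from scipy.stats import ttest_ind
from sklearn.linear_model import LassoCV, LogisticRegression
from sklearn.preprocessing import StandardScaler

def select_features(X, y, p_threshold=0.05):
    """Two-stage selection: univariate t-test filter, then LASSO on the survivors."""
    _, p = ttest_ind(X[y == 1], X[y == 0], axis=0)
    keep = np.flatnonzero(p < p_threshold)
    if keep.size == 0:
        return keep
    X_std = StandardScaler().fit_transform(X[:, keep])
    lasso = LassoCV(cv=5).fit(X_std, y)
    return keep[lasso.coef_ != 0]  # indices of features with non-zero LASSO weights

# Synthetic demo data (not study data): only features 0 and 1 carry signal.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] + 0.5 * rng.normal(size=200) > 0).astype(int)

selected = select_features(X, y)
clf = LogisticRegression(max_iter=1000).fit(X[:, selected], y)
```

A random forest (`sklearn.ensemble.RandomForestClassifier`) could be swapped in for the final model without changing the selection step.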

Traditional Radiomics and Clinical Models

In addition to the deep learning approach, traditional radiomic features were extracted and grouped into geometry, intensity, and texture categories. Extraction methods aligned with established imaging standards provided a comprehensive set of descriptors. The relationships between these radiomic features and clinical data were then examined to assess their combined relevance to patient prognosis.
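To make the intensity and geometry categories concrete, here is a sketch of a few first-order intensity features and a simple volume measure computed inside a tumor mask. The function names and the 32-bin entropy histogram are hypothetical; texture features (for example, gray-level co-occurrence statistics) are omitted and would normally come from a dedicated library such as PyRadiomics.

```python
import numpy as np

def first_order_features(volume, mask, bins=32):
    """Intensity (first-order) features over voxels inside the tumor mask."""
    vals = volume[mask.astype(bool)].astype(float)
    mean, std = vals.mean(), vals.std()
    centered = vals - mean
    skewness = (centered ** 3).mean() / std ** 3 if std > 0 else 0.0
    kurtosis = (centered ** 4).mean() / std ** 4 if std > 0 else 0.0  # non-excess kurtosis
    counts, _ = np.histogram(vals, bins=bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-(p * np.log2(p)).sum())
    return {"mean": float(mean), "std": float(std), "skewness": float(skewness),
            "kurtosis": float(kurtosis), "entropy": entropy}

def tumor_volume_mm3(mask, spacing):
    """Simple geometry feature: voxel count times the volume of one voxel."""
    return float(mask.astype(bool).sum() * np.prod(spacing))
```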

Model Comparison

The 2.5D deep learning model was compared against traditional radiomics models and clinical models using receiver operating characteristic (ROC) curves and multivariate logistic regression. These comparisons highlighted the strengths of the 2.5D approach and provided insight into its clinical utility.
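ROC-based comparison usually reduces to the area under the curve (AUC). The sketch below computes AUC through its rank interpretation, the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, and ranks candidate models by it. The function names and the example scores are hypothetical.

```python
import numpy as np

def roc_auc(y_true, scores):
    """AUC as P(random positive outscores random negative); ties count as 0.5."""
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    diff = s[y][:, None] - s[~y][None, :]  # all positive-negative score differences
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)

def compare_models(y_true, model_scores):
    """Rank candidate models (e.g. clinical, radiomics, 2.5D) by AUC, best first."""
    aucs = {name: roc_auc(y_true, s) for name, s in model_scores.items()}
    return sorted(aucs.items(), key=lambda kv: kv[1], reverse=True)
```

For example, labels `[0, 0, 1, 1]` with scores `[0.1, 0.4, 0.35, 0.8]` give an AUC of 0.75, because three of the four positive-negative pairs are ordered correctly.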

Visualization with Grad-CAM

To interpret the 2.5D deep learning model's decisions, Gradient-weighted Class Activation Mapping (Grad-CAM) was applied. Grad-CAM highlights the image regions that contributed most to the model's predictions, making those predictions easier to interpret in a clinical setting.
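The core Grad-CAM computation is short once the feature maps of a chosen convolutional layer and the gradients of the target class score with respect to them are available (in practice these are captured with framework hooks, which are not shown). Each channel is weighted by its spatially averaged gradient, the weighted maps are summed, and a ReLU keeps only positive evidence. Upsampling the map to the input resolution is also omitted from this sketch.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one image.

    activations, gradients: arrays of shape (K, H, W) from the chosen
    convolutional layer; gradients are of the target class score.
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum over K channels -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positively contributing regions
    return cam / cam.max() if cam.max() > 0 else cam  # scale to [0, 1] for display
```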

Statistical Analysis

Patient characteristics and outcomes were analyzed statistically, with chi-square tests for categorical variables and independent-samples t-tests for continuous variables used to identify significant differences.
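Both tests are available in SciPy. The helper names below are hypothetical, and any tables or measurements passed to them in practice would come from the cohort data; nothing here reproduces the study's results.

```python
import numpy as np
from scipy.stats import chi2_contingency, ttest_ind

def categorical_p(table):
    """Chi-square test of independence on a contingency table (rows: groups, cols: categories)."""
    chi2, p, dof, expected = chi2_contingency(np.asarray(table))
    return float(p)

def continuous_p(group_a, group_b):
    """Independent-samples t-test on a continuous variable (e.g. age) between two groups."""
    return float(ttest_ind(group_a, group_b).pvalue)
```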

In summary, this study combines careful patient selection, advanced imaging techniques, and deep learning modeling to investigate gastric cancer. Each component, from patient selection criteria to the details of model training, contributes to a deeper understanding of this critical area of medical research.