The Experimental Process for Enhancing the HRNet Model in Apple Leaf Disease Segmentation

Introduction to the Research Context

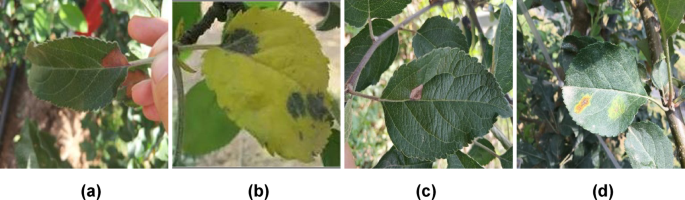

In the realm of agricultural technology, the significance of accurate plant disease detection cannot be overstated. Effective segmentation of symptoms on leaves is paramount to managing and treating plant diseases. The experimental process described herein focuses on refining the High-Resolution Network (HRNet) to improve its ability to segment apple leaf diseases, particularly four prominent types: Alternaria Blotch, Brown Spot, Grey Spot, and Rust.

Pretraining and Hyperparameter Tuning

One of the foundational aspects of this experiment involved leveraging pretrained backbone network parameters to enhance the model’s generalization ability and convergence speed. The input image size was configured to be 480 × 480 pixels, with training conducted over 100 epochs. A meticulous approach was taken to select the optimal combination of hyperparameters.

Selection of the Adam Optimizer

All models were trained with the Adam optimizer, starting from an initial learning rate of 0.001. The learning rate was adjusted dynamically: it was reduced by 50% whenever the loss showed minimal variation. This training regime provided the basis for effective feature learning.
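
The halving rule described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' training code: the class name `HalveOnPlateau`, the `patience` of 3 epochs, and the `min_delta` threshold are assumptions, since the text only states that the rate is cut by 50% when the loss shows minimal variation.

```python
class HalveOnPlateau:
    """Halve the learning rate when the loss stops improving.

    `patience` and `min_delta` are hypothetical values; the source only
    says the rate is cut by 50% when the loss shows minimal variation.
    """

    def __init__(self, lr=0.001, factor=0.5, patience=3, min_delta=1e-4):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.stale_epochs = 0

    def step(self, loss):
        """Record one epoch's loss and return the (possibly reduced) rate."""
        if self.best - loss > self.min_delta:
            # The loss improved by more than min_delta: reset the counter.
            self.best = loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr *= self.factor
                self.stale_epochs = 0
        return self.lr


scheduler = HalveOnPlateau()
losses = [0.90, 0.70, 0.60, 0.60, 0.60, 0.60, 0.55]  # made-up loss curve
history = [scheduler.step(loss) for loss in losses]
```

If training were done in PyTorch, the built-in `torch.optim.lr_scheduler.ReduceLROnPlateau` with `factor=0.5` would play the same role; whether the authors used it is not stated.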

Hyperparameter Impact on Model Performance

A key part of this experiment was exploring how hyperparameters affect segmentation performance, particularly for the HRNet model. According to Table 5, the choice of hyperparameters influenced the model's segmentation results. Even so, HRNet remained stable across the tested settings, with only minimal variation in the evaluation metrics.

The best segmentation performance for the apple leaf disease task was achieved with a learning rate of 0.0001, the Adam optimizer, and a batch size of 8. This configuration was used as the basis for the subsequent evaluations of model performance.

Comparative Analysis of HRNet Versions

To shed light on the enhancements of the HRNet model, a comparison was made between the original HRNet and the Improved HRNet, which incorporates advancements such as the NAM attention mechanism. Figure 7 illustrates the differential outcomes in terms of Intersection over Union (IoU) and Pixel Accuracy (PA) across the four disease types.
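
For readers unfamiliar with the two metrics, the sketch below computes them for a single class from a predicted mask and a ground-truth mask. It assumes the standard definitions, IoU = TP / (TP + FP + FN) and per-class PA = TP / (TP + FN). The source does not spell out its formulas, and the function name and toy masks are illustrative only.

```python
def iou_and_pa(pred, truth, cls):
    """Return (IoU, PA) for one class from two equally sized label masks."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == cls and t == cls:
                tp += 1          # correctly predicted pixel of this class
            elif p == cls:
                fp += 1          # predicted as this class, but it is not
            elif t == cls:
                fn += 1          # pixel of this class that was missed
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    pa = tp / (tp + fn) if (tp + fn) else 0.0
    return iou, pa


# Toy 3x4 masks: 0 = background, 1 = lesion (labels are illustrative).
truth = [[0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
pred = [[0, 1, 1, 1],
        [0, 1, 0, 0],
        [0, 0, 0, 0]]

iou, pa = iou_and_pa(pred, truth, cls=1)
```

Averaging these per-class values over all classes gives mIoU and mPA.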

Findings on Improved HRNet Performance

The Improved HRNet outperformed the original most clearly on Alternaria Blotch, where IoU rose by 11.54 percentage points and PA by 7.98 percentage points. IoU also rose by 9.14 percentage points for Grey Spot. The gains were smaller for Rust and Brown Spot, at 2.71 and 1.5 percentage points respectively. These results suggest that the NAM mechanism emphasizes the local features of disease lesions, which improves segmentation accuracy.

Backbone Network Comparison

In-depth evaluations were conducted on various backbone network widths, including HRNet_w18, HRNet_w32, and HRNet_w48, as depicted in Figures 8(a) and 8(b). These experiments aimed to discern the ideal backbone configuration for apple leaf disease segmentation.

Performance Insights from Different Backbone Configurations

HRNet_w32 was the most effective configuration, achieving a mean IoU (mIoU) of 82.21%, which is 2.07 percentage points above HRNet_w18 and 0.07 percentage points above HRNet_w48. Its pixel accuracy reached 89.59%. Although HRNet_w48 achieved the highest precision at 91.92%, it showed signs of overfitting, so HRNet_w32 offers the better balance between performance and computational complexity.
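
The mIoU values of the other two widths are not quoted directly, but they follow from the reported gaps. The short calculation below derives them. The variable names are illustrative, and the results are implied by the figures above rather than taken from the original tables.

```python
# Figures quoted in the text for HRNet_w32.
miou_w32 = 82.21
gap_over_w18 = 2.07   # percentage points
gap_over_w48 = 0.07   # percentage points

# Implied mIoU of the other two widths, rounded to two decimals.
miou_w18 = round(miou_w32 - gap_over_w18, 2)
miou_w48 = round(miou_w32 - gap_over_w48, 2)
```

This gives roughly 80.14% for HRNet_w18 and 82.14% for HRNet_w48, so the step from w32 to w48 changes mIoU far less than the step from w18 to w32.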

Attention Mechanisms and Their Impact

To further enhance model performance in segmenting apple leaf diseases, attention mechanisms were evaluated, specifically CBAM, SENet, and NAM. Figures 9(a) and 9(b) present the training outcomes associated with these attention mechanisms.

Comparative Performance of Attention Modules

The results highlighted that the introduction of the NAM module yielded a marked increase in mIoU, achieving 88.33%. This surpasses both CBAM, which produced an improvement of 2.54%, and SENet, with an enhancement of 0.4%. These findings underscore NAM’s superior ability to capture essential disease features while mitigating background noise interference, ultimately refining segmentation performance.

Ablation Studies: Module Impact Evaluation

To evaluate the contribution of each component within the model, a series of ablation experiments was conducted. These investigated the effects of varying the backbone network, integrating the attention mechanism, and using different loss functions, such as Focal Loss and Dice Loss.

Results of the Ablation Experiments

Table 8 shows that adopting HRNet_w32 led to significant increases in both mIoU and mPA. Adding the NAM attention mechanism brought further improvements, emphasizing the efficacy of multi-scale feature fusion. Focal Loss addressed class imbalance effectively, while Dice Loss improved accuracy, especially for smaller targets. Combining these components produced a substantial improvement in segmentation performance.
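
To make the role of the two losses concrete, here is a minimal binary sketch of each in plain Python. It assumes the commonly used formulations: focal loss as -alpha_t (1 - p_t)^gamma log(p_t), and soft Dice loss as one minus the overlap ratio. The defaults `gamma=2.0` and `alpha=0.25` are the usual textbook values, not settings reported in the source, and the multi-class versions used in the study may differ.

```python
import math


def focal_loss(probs, targets, gamma=2.0, alpha=0.25):
    """Mean binary focal loss over flat lists of probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, targets):
        p_t = p if y == 1 else 1.0 - p          # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha
        # (1 - p_t) ** gamma shrinks the loss of pixels that are already easy.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, 1e-12))
    return total / len(probs)


def dice_loss(probs, targets, eps=1e-6):
    """Soft Dice loss: 1 minus the overlap ratio of prediction and mask."""
    intersection = sum(p * y for p, y in zip(probs, targets))
    return 1.0 - (2.0 * intersection + eps) / (sum(probs) + sum(targets) + eps)


# One lesion pixel among five background pixels (made-up values).
targets = [1, 0, 0, 0, 0, 0]
confident = [0.9, 0.1, 0.1, 0.1, 0.1, 0.1]
uncertain = [0.3, 0.1, 0.1, 0.1, 0.1, 0.1]
```

In this toy case the Dice loss rises from about 0.25 to about 0.67 when only the single lesion pixel becomes uncertain. That illustrates why an overlap-based loss is sensitive to small targets, which plain per-pixel averaging would largely ignore.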

Benchmarking Against Other Segmentation Models

To further assess the Improved HRNet, it was compared with established segmentation models: DeepLabV3+, U-Net, and PSPNet. Figure 10 shows the comparative results.

Performance Showdown among Segmentation Models

The Improved HRNet outperformed all competitors, achieving an mIoU of 88.91% and an mPA of 94.13%. In contrast, DeepLabV3+ lagged with an mIoU of 79.20%. U-Net and PSPNet were more competitive, but they still fell short of the Improved HRNet, especially under challenging conditions such as class imbalance.

Visualization of Segmentation Results

A visual comparison of segmentation results across different models, as demonstrated in Figure 11, further elucidated performance discrepancies. Each model exhibited unique strengths and weaknesses, particularly when diagnosing diseases with similar morphological characteristics.

Challenges in Disease Segmentation

The improved HRNet exhibited a notable capacity for accurate differentiation among disease symptoms without confusion. This capability is essential for addressing the typical challenges associated with inter-class similarities and complex edge topologies, enhancing the robustness and reliability of disease detection.

Assessing Disease Severity Levels

To quantify disease impact accurately, grading parameters were defined in accordance with local standards. Disease severity was assessed from pixel statistics computed in Python, offering a clear framework for classification.
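
A pixel-statistics grading step of this kind might look like the sketch below. It assumes severity is based on the share of leaf area covered by lesions. The label values, function names, and grade cut-offs in `GRADE_UPPER_BOUNDS` are hypothetical placeholders, because the thresholds of the local standard are not quoted in this text.

```python
from bisect import bisect_left

# Hypothetical upper bounds (lesion area as % of leaf area) for grades 1..5;
# the actual cut-offs come from the local standard, which is not quoted here.
GRADE_UPPER_BOUNDS = [10.0, 25.0, 40.0, 65.0, 100.0]


def lesion_ratio(mask, leaf_label=1, lesion_label=2):
    """Percentage of leaf pixels (healthy + diseased) labelled as lesion."""
    flat = [value for row in mask for value in row]
    lesion = flat.count(lesion_label)
    leaf = flat.count(leaf_label) + lesion
    return 100.0 * lesion / leaf if leaf else 0.0


def severity_grade(ratio):
    """Grade 0 means healthy; otherwise the first bound that covers the ratio."""
    if ratio <= 0:
        return 0
    return bisect_left(GRADE_UPPER_BOUNDS, ratio) + 1


# Toy segmentation mask: 0 = background, 1 = healthy leaf, 2 = lesion.
mask = [[0, 1, 1, 1],
        [1, 1, 2, 1],
        [1, 2, 2, 0]]
ratio = lesion_ratio(mask)
grade = severity_grade(ratio)
```

Here 3 of the 10 leaf pixels are lesion, so the ratio is 30% and the placeholder thresholds assign grade 3.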

Grading Mechanisms Under Different Scenarios

The study incorporated various scenarios, including single leaf, multiple separated leaves, and overlapping leaves, employing the DRL-Watershed algorithm to ensure accuracy in grading. This approach allowed for a nuanced understanding of disease severity as reflected in Tables 9 and 10.

Closing Remarks on Prediction Performance

The confusion matrices depicted in Figure 15 provide further insight into the grading accuracy of the improved HRNet model and the DRL-Watershed algorithm. While the HRNet demonstrated commendable accuracy for lower severity levels, challenges remained in accurately predicting higher severities, particularly in complex scenarios involving multiple leaves.
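
For reference, a confusion matrix over severity grades can be built as in the sketch below, with per-grade recall read off its rows. The grade lists are made-up values used only to exercise the functions. They are not results from the study, and the function names are illustrative.

```python
def confusion_matrix(true_grades, predicted_grades, num_grades):
    """Rows are true grades, columns are predicted grades."""
    matrix = [[0] * num_grades for _ in range(num_grades)]
    for t, p in zip(true_grades, predicted_grades):
        matrix[t][p] += 1
    return matrix


def per_grade_recall(matrix):
    """Fraction of each true grade that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(matrix)]


# Made-up grades for eight leaves, purely to exercise the functions.
true_grades = [0, 0, 1, 1, 2, 2, 3, 3]
predicted = [0, 0, 1, 1, 2, 1, 2, 3]
matrix = confusion_matrix(true_grades, predicted, num_grades=4)
recall = per_grade_recall(matrix)
```

Off-diagonal counts in the rows for higher grades are where the kind of under-prediction described above would show up.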

These detailed findings from the experimental process not only illuminate the advancements made within the HRNet framework for apple leaf disease segmentation but also highlight the intricate methodologies employed to ensure robust, accurate, and reliable assessments in agricultural applications.