Addressing Temporal Dependency and Mapping Accuracy in Sensor Fusion Semantic Segmentation

Introduction

In sensor fusion semantic segmentation, two problems remain critical: the temporal dependency between consecutive frames, and the "many-to-one" mapping problem, in which several LiDAR points project onto the same image pixel. These issues reduce the precision of depth value mapping and the accuracy of data accumulated across frames. This article introduces a method designed to mitigate both problems, improve mapping accuracy, and integrate data from different modalities robustly.

Network Overview

The proposed approach is a cross-modal sensor fusion method that merges perception data from RGB images and LiDAR point clouds. The network consists of three main components:

- Projection and Multi-scale Convolution: Projects point cloud data into the camera's coordinate system and extracts features at multiple scales.

- Temporal Alignment Module (TA): Synchronizes previous and current frames to address dynamic changes in the scene.

- Cross-modal Fusion Network: Integrates camera and LiDAR features, employing a residual fusion module to enhance data fusion efficacy.

How the Network Works

First, the mixed projection technique transforms point cloud data into the camera's coordinate space. The temporal alignment module then aligns the current frame with the previous frame, so that changes between frames can be used for semantic segmentation. Finally, a cross-modal fusion network extracts and combines features from the RGB image and LiDAR modalities.

Temporal Alignment Module (TA)

Central to our approach is the Temporal Alignment Module (TA), which handles discrepancies between sequential frames. It extracts features from both frames with the SIFT (Scale-Invariant Feature Transform) algorithm, matches them, and uses the Random Sample Consensus (RANSAC) algorithm to estimate a homography matrix from the matches while rejecting outlier correspondences. The homography maps the current frame into the coordinate system of the previous frame, so that both frames share the same spatial reference.
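The coarse homography estimation can be illustrated with a minimal, dependency-free sketch. It assumes SIFT keypoints have already been extracted and matched (in practice this is often done with OpenCV's `cv2.SIFT_create` and a descriptor matcher), and it wraps a basic RANSAC loop around a 4-point direct linear transform with the bottom-right entry of the homography fixed to 1. The function names, the pixel threshold, and the iteration count are illustrative assumptions, not the authors' implementation.

```python
import math
import random


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting.

    Returns None when the system is (numerically) singular, e.g. for degenerate samples.
    """
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_4(src, dst):
    """Direct linear transform from 4 correspondences, with H[2][2] fixed to 1."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    if h is None:
        return None
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_h(h, p):
    """Map point p = (x, y) through homography h, including the perspective division."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        return (math.inf, math.inf)
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def ransac_homography(src, dst, threshold=3.0, iterations=500, seed=0):
    """Keep the 4-point hypothesis with the most matches within `threshold` pixels."""
    rng = random.Random(seed)
    indices = list(range(len(src)))
    best_h, best_inliers = None, []
    for _ in range(iterations):
        sample = rng.sample(indices, 4)
        h = homography_from_4([src[i] for i in sample], [dst[i] for i in sample])
        if h is None:
            continue  # degenerate (e.g. collinear) sample
        inliers = [i for i in indices
                   if math.dist(apply_h(h, src[i]), dst[i]) < threshold]
        if len(inliers) > len(best_inliers):
            best_h, best_inliers = h, inliers
    return best_h, best_inliers
```

A production pipeline would normally refine the model on all inliers and normalize coordinates for numerical stability; OpenCV's `cv2.findHomography(src, dst, cv2.RANSAC, threshold)` does the RANSAC search and inlier refinement in one call.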

Improving Robustness in Alignment

Low-confidence matches are a significant problem, particularly in dynamic environments, where moving objects produce correspondences that do not follow the camera's motion. To improve the reliability of the TA module, we use several strategies:

- Assign a confidence score to each matched feature based on its consistency across consecutive frames.

- Weight each match by its confidence, so that reliable features influence the homography estimate more than unreliable ones.

- Minimize the residual alignment error iteratively, progressively down-weighting low-confidence features to improve the accuracy of the final alignment.
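The confidence weighting and iterative down-weighting can be sketched as iteratively reweighted least squares (IRLS). This is a minimal sketch under stated assumptions: each match arrives with a confidence score in [0, 1], the homography is fitted by weighted linear least squares with H[2][2] fixed to 1, and after each fit the weights are rescaled by a Cauchy-style factor of the reprojection error. The Cauchy weighting, `sigma`, and the function names are assumptions chosen for illustration; the source does not specify the optimizer.

```python
import math


def solve_linear(a, b):
    """Gaussian elimination with partial pivoting; None if the system is singular."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def weighted_homography(src, dst, weights):
    """Weighted linear least squares for H (H[2][2] = 1) via the normal equations."""
    ata = [[0.0] * 8 for _ in range(8)]
    atb = [0.0] * 8
    for (x, y), (u, v), w in zip(src, dst, weights):
        rows = (([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y], u),
                ([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y], v))
        for row, rhs in rows:
            for i in range(8):
                atb[i] += w * row[i] * rhs
                for j in range(8):
                    ata[i][j] += w * row[i] * row[j]
    h = solve_linear(ata, atb)
    return None if h is None else [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_h(h, p):
    """Map point p = (x, y) through homography h."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        return (math.inf, math.inf)
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def confidence_weighted_fit(src, dst, confidence, sigma=2.0, iterations=10):
    """IRLS: weight = confidence / (1 + (reprojection_error / sigma) ** 2)."""
    weights = list(confidence)  # first pass uses the confidence scores alone
    h = None
    for _ in range(iterations):
        candidate = weighted_homography(src, dst, weights)
        if candidate is None:
            break  # singular system: keep the last valid estimate
        h = candidate
        residuals = [math.dist(apply_h(h, s), d) for s, d in zip(src, dst)]
        # Matches with large residuals are progressively down-weighted.
        weights = [c / (1.0 + (r / sigma) ** 2) for c, r in zip(confidence, residuals)]
    return h, weights
```

Unlike hard RANSAC rejection, this soft weighting never discards a match outright; a match with a large residual simply contributes very little to the next fit.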

The final step of the TA generates a residual image by computing the difference between the aligned current frame and the previous frame; this residual image captures the temporal changes in the scene.
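A minimal sketch of the residual-image step follows, assuming single-channel images stored as nested lists, a homography that maps current-frame pixel coordinates to previous-frame coordinates (as described for the TA), and nearest-neighbour sampling. The current frame is backward-warped onto the previous frame's pixel grid, and pixels with no source pixel are assigned a residual of 0; that fill choice and the function names are assumptions for illustration.

```python
def invert_3x3(m):
    """Inverse of a 3x3 matrix via the adjugate; raises for singular input."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("homography is singular")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[value / det for value in row] for row in adj]


def warp_to_previous(current, h_cur_to_prev):
    """Backward-warp `current` onto the previous frame's pixel grid (nearest neighbour).

    Returns the warped image and a validity mask (False where no source pixel exists).
    """
    rows, cols = len(current), len(current[0])
    h_inv = invert_3x3(h_cur_to_prev)  # previous-frame pixel -> current-frame pixel
    warped = [[0.0] * cols for _ in range(rows)]
    valid = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            w = h_inv[2][0] * x + h_inv[2][1] * y + h_inv[2][2]
            if abs(w) < 1e-12:
                continue
            sx = round((h_inv[0][0] * x + h_inv[0][1] * y + h_inv[0][2]) / w)
            sy = round((h_inv[1][0] * x + h_inv[1][1] * y + h_inv[1][2]) / w)
            if 0 <= sx < cols and 0 <= sy < rows:
                warped[y][x] = current[sy][sx]
                valid[y][x] = True
    return warped, valid


def residual_image(current, previous, h_cur_to_prev):
    """Aligned current frame minus previous frame; 0 where the warp has no source."""
    warped, valid = warp_to_previous(current, h_cur_to_prev)
    return [[(wv - pv) if ok else 0.0 for wv, pv, ok in zip(wr, pr, vr)]
            for wr, pr, vr in zip(warped, previous, valid)]
```

For example, if the camera shifts so that every pixel moves one column to the right, the homography is a translation of -1 in x, and after warping, only pixels that changed for reasons other than camera motion (such as a moving object) have a non-zero residual.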

Projection and Multi-scale Convolution Modules (PMC)

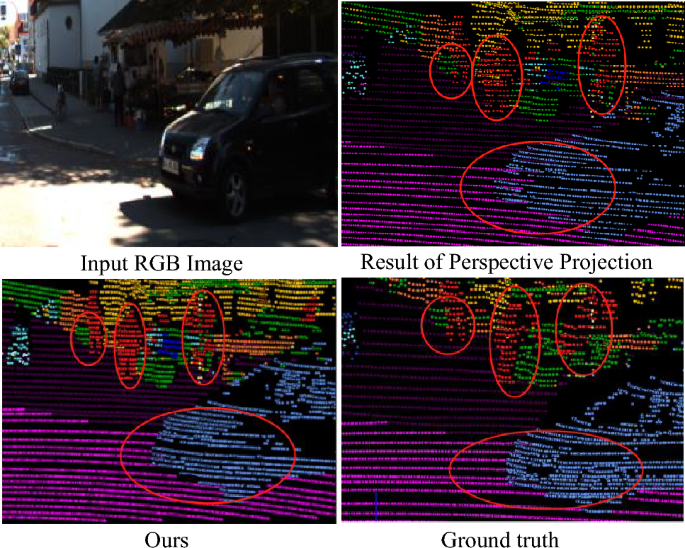

Conventional point cloud projection methods rely on plain spherical projection, which loses a significant amount of data because multiple points can fall onto the same pixel. Our projection mechanism combines range projection with multi-scale convolution and adds a many-to-one correction mechanism that improves mapping accuracy by reducing pixel loss:

- Depth Calculation: Each point's Euclidean distance from the sensor, r = √(x² + y² + z²), is calculated and used as its depth value.

- Angle Calculation: The horizontal (yaw) and vertical (pitch) angles of each point determine its column and row in the 2D image coordinate system.

- Multi-scale Convolution: The projected image is processed with multi-scale convolutions, which preserve high-frequency details while integrating broader contextual information.
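The depth and angle calculations can be sketched with the widely used range-image formulation: yaw is mapped linearly to the image column and pitch to the row within the sensor's vertical field of view. The image size and the +3°/-25° field of view below are illustrative sensor assumptions, not values given in this article; points outside the field of view are clamped to the border rows.

```python
import math


def spherical_project(points, width=1024, height=64, fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project (x, y, z) LiDAR points to (row, col, depth) range-image coordinates.

    width, height and the vertical field of view are illustrative sensor assumptions.
    """
    fov_down = math.radians(fov_down_deg)
    fov = math.radians(fov_up_deg) - fov_down  # total vertical field of view
    projected = []
    for x, y, z in points:
        depth = math.sqrt(x * x + y * y + z * z)  # Euclidean range from the sensor
        if depth == 0.0:
            continue  # a point at the sensor origin has no defined direction
        yaw = -math.atan2(y, x)  # horizontal angle in [-pi, pi]
        pitch = math.asin(z / depth)  # vertical angle
        u = 0.5 * (yaw / math.pi + 1.0) * width  # yaw -> column
        v = (1.0 - (pitch - fov_down) / fov) * height  # pitch -> row (row 0 = fov_up)
        col = min(width - 1, max(0, math.floor(u)))
        row = min(height - 1, max(0, math.floor(v)))
        projected.append((row, col, depth))
    return projected
```

A point straight ahead at 10 m, `(10.0, 0.0, 0.0)`, lands in the middle column (512) with depth 10.0.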

To resolve the "many-to-one" mapping issue, a prioritization mechanism ensures each pixel retains only the most representative point cloud information, significantly improving segmentation outcomes, especially in sparse scenarios.
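The article does not state which point counts as "most representative". A common choice, assumed in this sketch, is the point closest to the sensor, since it occludes the points behind it. The input is a list of already-projected `(row, col, depth, point_index)` tuples; the function name and the `-1` empty marker are illustrative assumptions.

```python
def build_range_image(projected, width, height, empty=-1.0):
    """Resolve many-to-one collisions by keeping the nearest point per pixel.

    projected: iterable of (row, col, depth, point_index) tuples.
    Returns a depth image and an index image (-1 marks pixels with no point).
    """
    depth_img = [[empty] * width for _ in range(height)]
    index_img = [[-1] * width for _ in range(height)]
    for row, col, depth, idx in projected:
        # Overwrite only if the pixel is empty or the new point is closer.
        if index_img[row][col] == -1 or depth < depth_img[row][col]:
            depth_img[row][col] = depth
            index_img[row][col] = idx
    return depth_img, index_img
```

The index image records which point won each pixel, so per-pixel predictions can be mapped back to the point cloud; points that lost a collision need a separate rule to receive a label.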

The Sensor Fusion Module

Combining image and point cloud data presents inherent challenges due to their differing modalities. Our cross-modal sensor fusion network, which comprises separate LiDAR and camera branches, addresses this complexity.

Architecture and Fusion Mechanism

The architecture is designed to exploit the strengths of both modalities. The outputs of the two branches are aggregated by a residual-based fusion module, which enriches the semantic content of the fused features.

- Detail-rich image features are injected into the LiDAR branch through a fusion module that combines the two feature sets.

- A convolutional layer then reduces the channel count of the combined features and refines them before the final output.

Because cameras are sensitive to environmental conditions such as lighting and weather, this design uses LiDAR features to compensate for potential inaccuracies in the RGB data, which makes the resulting semantic segmentation model more reliable.
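One possible shape of the residual fusion step is sketched below under explicit assumptions: features are per-pixel vectors in nested lists, the image and LiDAR features are concatenated along the channel axis, a 1x1 convolution (a per-pixel linear map) reduces the channels back to the LiDAR channel count, and a ReLU output is added to the LiDAR features as a residual. These layer choices and names are hypothetical; in a deep learning framework the 1x1 convolution would be a learned layer, such as a `torch.nn.Conv2d` with `kernel_size=1` in PyTorch.

```python
def conv1x1(pixels, weight, bias):
    """1x1 convolution: the same linear map applied to every pixel's feature vector."""
    return [[sum(w * v for w, v in zip(w_row, px)) + b for w_row, b in zip(weight, bias)]
            for px in pixels]


def residual_fusion(lidar, image, weight, bias):
    """Fuse image features into the LiDAR branch with a residual connection.

    lidar: per-pixel LiDAR features (C_l values each); image: per-pixel image features (C_i).
    weight: C_l rows of length C_l + C_i, so the 1x1 conv reduces the concatenated
    channels back to C_l before they are added to the LiDAR features.
    """
    concatenated = [l_px + i_px for l_px, i_px in zip(lidar, image)]  # channel concat
    reduced = conv1x1(concatenated, weight, bias)
    activated = [[max(0.0, v) for v in px] for px in reduced]  # ReLU
    return [[l + a for l, a in zip(l_px, a_px)] for l_px, a_px in zip(lidar, activated)]
```

Because the image-derived term is added on top of the LiDAR features, the output falls back to the LiDAR features wherever that term is zero, which matches the goal of letting LiDAR stabilize unreliable RGB input.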

Detailed Alignment Steps

The alignment process is divided into two stages:

Step 1: Coarse Alignment

- Extract and match features from the current and previous frames.

- Estimate an initial homography matrix with RANSAC.

- Apply this matrix to coarsely align the depth image.

Step 2: Fine Alignment

- Feed the coarsely aligned depth image and the current image into a fine alignment network.

- The network produces transformation flows and confidence masks that improve pixel-level alignment.

- Iteratively filter out poorly aligned points and re-estimate the homography matrix.
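The article does not specify how the flows and confidence masks are applied, so the following is only one hypothetical interpretation. Each pixel of the coarsely aligned image is resampled at its flow offset (nearest neighbour), blended with the coarse value according to its confidence, and pixels below a confidence threshold `tau` are left unchanged and excluded from the list of reliable pixels that a subsequent homography re-estimation could use. The blending rule, `tau`, and all names are assumptions.

```python
def apply_flow_with_confidence(coarse, flow, confidence, tau=0.5):
    """Refine a coarsely aligned image with a per-pixel flow and confidence mask.

    coarse: 2D list of values; flow: 2D list of (dx, dy); confidence: 2D list in [0, 1].
    Returns the refined image and the (x, y) pixels considered reliably aligned.
    """
    rows, cols = len(coarse), len(coarse[0])
    refined = [row[:] for row in coarse]
    reliable = []
    for y in range(rows):
        for x in range(cols):
            c = confidence[y][x]
            if c < tau:
                continue  # poorly aligned: keep the coarse value, drop from re-estimation
            dx, dy = flow[y][x]
            sx, sy = round(x + dx), round(y + dy)
            if 0 <= sx < cols and 0 <= sy < rows:
                # Confidence-weighted blend of the flow-warped sample and the coarse value.
                refined[y][x] = c * coarse[sy][sx] + (1.0 - c) * coarse[y][x]
                reliable.append((x, y))
    return refined, reliable
```

The `reliable` pixels, paired with their flow-displaced positions, could serve as the filtered correspondences for the next homography estimate, closing the iterative loop described in Step 2.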

Conclusion

This approach addresses temporal dependency and mapping accuracy in sensor fusion semantic segmentation in order to capture and interpret dynamic scenes more accurately. By integrating multiple levels of processing, from feature extraction and alignment to residual cross-modal fusion, the network aims to improve performance in environments where real-time perception is critical.