Comprehensive Evaluation of the ESA Framework Across Diverse Graph Learning Tasks

In the evolving landscape of graph neural networks (GNNs), the ESA (Edge-Set Attention) framework marks a significant step forward. We evaluated ESA on 70 different tasks spanning various domains, including molecular property prediction, vision graphs, and social networks. The evaluation covers representation learning on graphs in detail: node-level tasks on both homophilous and heterophilous graphs, modeling of long-range dependencies, shortest paths, and intricate 3D atomic systems.

Performance Comparison Against Baselines

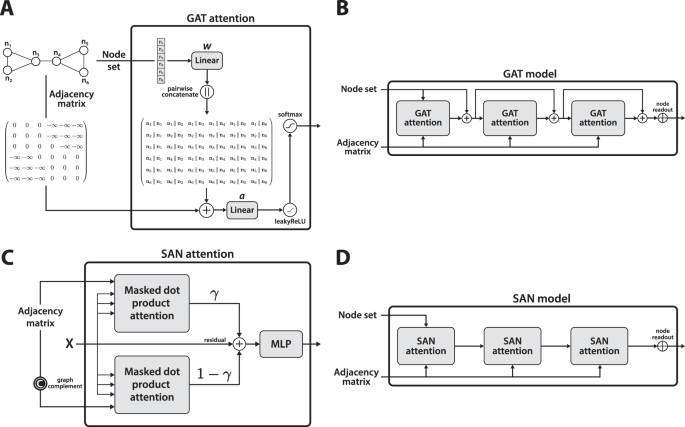

To contextualize our findings, we measured ESA’s performance against six established GNN baselines: Graph Convolutional Networks (GCN), Graph Attention Networks (GAT), GATv2, Principal Neighbourhood Aggregation (PNA), Graph Isomorphism Network (GIN), and DropGIN, alongside three graph transformer baselines: Graphormer, TokenGT, and GraphGPS. Hyperparameter tuning details and the rationale behind the selected evaluation metrics are given in the supplementary material.

The findings summarized here show ESA’s strengths and weaknesses across molecular learning, mixed graph-level tasks, and node-level tasks, and also address how its time and memory requirements scale.

Molecular Learning

One of the most impactful applications of graph learning has been in molecular prediction, which we examined through an extensive evaluation framework. This covered benchmarks derived from quantum mechanics, molecular docking studies, and further explorations of learning within 3D atomic systems.

QM9 Dataset Insights

Our analysis of the QM9 dataset, which comprises 19 targets, showed ESA as the top performer on 15 properties; the exceptions included the frontier orbital energies, where PNA led slightly. The comparison also revealed notable variance among graph transformers, which were competitive on some tasks and lagged on others.

DOCKSTRING Drug Discovery Dataset

On the DOCKSTRING dataset, which holds 260,155 molecules and docking targets of varying difficulty, ESA led on four out of five targets. Performance varied between docking challenges, with PNA slightly exceeding ESA at medium difficulty, but the results reaffirmed the significant correlation between molecular geometries and predictive performance.

MoleculeNet and NCI Benchmarks

Our exploration of regression and classification tasks from MoleculeNet and the National Cancer Institute (NCI) presented a mixed picture. While ESA generally excelled, certain datasets, specifically those with fewer compounds, presented challenges for graph transformers, hinting at the difficulty of predictive modeling when training data is scarce.

PCQM4Mv2 Benchmarks

The large-scale PCQM4Mv2 dataset, comprising over 3 million training molecules, tests how well DFT-calculated HOMO-LUMO energy gaps can be predicted from 2D molecular graphs. Here, ESA achieved exceptional performance, and it did so without the positional or structural encodings that benefited other specialized models.

ZINC Dataset Utilization

The ZINC dataset, commonly used for generative modeling, added another layer of analysis. Measured against graph transformer baselines, ESA’s performance highlighted the delicate balance needed between edge-specific augmentations and structural encodings, an area ripe for further research.

Long-Range Dependencies in Peptide Tasks

The long-range graph benchmarks, especially those on peptide properties, showed traditional GNNs outperforming attention-based methods. With refinement, ESA produced competitive results, which encourages further work on longer-range prediction, an area of pressing relevance in biological applications.

Mixed Graph-Level Tasks

On mixed graph-level benchmarks across diverse domains, from computer vision and bioinformatics to social graphs, ESA showed strong results. In many instances it equaled or surpassed state-of-the-art models, especially on datasets like MNIST and CIFAR10, without relying on structural encodings.

Node-Level Benchmarking

Node-level tasks posed a distinct challenge due to the design of ESA, which initially did not incorporate a PMA (Pooling by Multi-Head Attention) module. Early prototypes integrating edge-to-node pooling showed promise, but we ultimately adopted the simpler node-set attention (NSA) model. This adjustment yielded robust results across various homophilous and heterophilous tasks.
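The node-set attention idea can be illustrated as masked self-attention over nodes. The sketch below is a minimal illustration, not the authors’ implementation: it assumes that each node attends only to the nodes its adjacency row allows (here, itself and its neighbours), uses a single head, and omits learned query, key, and value projections. The function name `masked_self_attention` and the toy graph are hypothetical.

```python
import math


def masked_self_attention(features, adjacency):
    """Single-head self-attention over nodes.

    Node i may only attend to nodes j where adjacency[i][j] is truthy.
    Queries, keys, and values are the raw features (no learned weights).
    """
    n = len(features)
    dim = len(features[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for i in range(n):
        allowed = [j for j in range(n) if adjacency[i][j]]
        if not allowed:
            # Fully masked row: pass the node's features through unchanged.
            outputs.append(list(features[i]))
            continue
        # Scaled dot-product scores against the allowed nodes only.
        scores = [
            scale * sum(a * b for a, b in zip(features[i], features[j]))
            for j in allowed
        ]
        # Numerically stable softmax over the unmasked scores.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the allowed nodes' features.
        outputs.append(
            [sum(w * features[j][d] for w, j in zip(weights, allowed))
             for d in range(dim)]
        )
    return outputs


# Toy path graph 0 - 1 - 2 with self-loops (hypothetical example data).
toy_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
toy_adjacency = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]
toy_output = masked_self_attention(toy_features, toy_adjacency)
```

Because node 0 is masked from node 2, its output is a convex combination of the features of nodes 0 and 1 only. A practical model would add learned projections, multiple heads, and batched tensor operations.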

Comparative Performance and Results

Compared with other cutting-edge models, including tuned GNNs and transformer architectures, ESA with NSA performed strongly, most notably on challenging datasets like Cora, which demonstrates its adaptability and efficiency.

Exploring Layer Configurations and Enhancements

An extensive exploration of ESA’s layer configurations revealed that the way layers are interleaved can significantly affect performance, which points to a need for careful architectural design choices.

Time and Memory Scaling Analysis

We also compared ESA’s time and memory usage with that of the nine baselines. GCN and GIN remained the fastest, but ESA offered a reasonable balance, which suggests it is viable for large-scale, real-world applications.
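A comparison of this kind needs a harness that records wall-clock time and peak memory for each model. The sketch below shows one way to do that with the Python standard library. It is an assumption about methodology, not the benchmark setup used here: `tracemalloc` only traces Python-level allocations, so GPU workloads would need the framework’s own memory counters. The helper `profile_call` and the two stand-in workloads are hypothetical.

```python
import time
import tracemalloc


def profile_call(fn, *args, repeats=3):
    """Return (best wall-clock seconds, peak traced bytes) for fn(*args)."""
    # Time without tracing, since tracemalloc slows execution down.
    best_seconds = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best_seconds = min(best_seconds, time.perf_counter() - start)

    # Measure peak Python-level memory in one separate traced run.
    tracemalloc.start()
    fn(*args)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return best_seconds, peak_bytes


def dense_pairwise(n):
    # Stand-in for all-pairs attention: builds an n x n score table.
    return [[i * j for j in range(n)] for i in range(n)]


def sparse_neighbours(n):
    # Stand-in for message passing on a ring graph: two neighbours per node.
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


if __name__ == "__main__":
    for name, workload in [("dense", dense_pairwise), ("sparse", sparse_neighbours)]:
        seconds, peak = profile_call(workload, 200)
        print(f"{name}: {seconds:.6f} s, peak {peak} bytes")
```

Taking the best of several timed runs reduces noise from other processes; reporting the mean and standard deviation instead is an equally reasonable choice.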

Explainability in Graph Neural Networks

Finally, explainability, a key concern in AI, proved to be an area where ESA excelled, specifically through its attention mechanisms. Analysis of the learned attention scores yielded intriguing insights into interpretability, particularly for molecular predictions. Our empirical investigations validated the model’s intuitive understanding of molecular interactions, underscoring the practical implications of ESA in diverse contexts.
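The text does not say how the attention scores were analyzed. One common way to summarize them, shown here purely as an illustrative assumption, is the Gini coefficient of an attention distribution: 0 means attention is spread evenly, and values near 1 mean it is concentrated on a few elements. The function name `gini` is hypothetical.

```python
def gini(weights):
    """Gini coefficient of non-negative weights (0 = uniform)."""
    values = sorted(weights)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Standard rank-weighted formula on the ascending-sorted values.
    ranked = sum((rank + 1) * value for rank, value in enumerate(values))
    return 2.0 * ranked / (n * total) - (n + 1) / n


uniform_attention = [0.25, 0.25, 0.25, 0.25]
peaked_attention = [0.0, 0.0, 0.0, 1.0]
print(gini(uniform_attention))  # 0.0
print(gini(peaked_attention))   # 0.75
```

With n elements the maximum value is (n - 1) / n, so coefficients are only directly comparable between distributions of the same length unless they are rescaled.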

Understanding these layers of complexity within ESA not only affirms its credible performance but also lays the groundwork for future innovations in graph-based learning methodologies. The focus remains on leveraging this foundational architecture to explore novel applications across diverse fields, continuing to bridge the gap between theoretical frameworks and practical implementations.