Evaluating Natural Language Processing Tools for Classifying CTE Reports

Natural Language Processing (NLP) is increasingly used in medicine to extract information from free text automatically. In our evaluation, we compared two categories of NLP tools for classifying Computed Tomography Enterography (CTE) reports: Rationale Extraction (RE) and Language Modeling (LM). The two serve different purposes: RE emphasizes interpretability, while LM prioritizes classification performance.

Rationale Extraction (RE) to Classify CTE Reports

Rationale Extraction centers on identifying specific segments of text, termed rationales, that provide sufficient evidence to classify a document. In medical reports, this means highlighting the findings that contribute most to a diagnosis. Rationales therefore serve as explanations for a classifier's predictions and make its decisions easier to interpret.

The Architecture of Rationale Extraction

Our rationale extraction architecture (Fig. 4) comprises two main components: an extractor and a classifier. The extractor selects the segments of the original CTE report that are most relevant to the diagnosis. The classifier then receives only this rationale and predicts whether the report is “CTE-positive for Crohn’s Disease (CD)” or “CTE-negative for CD.” Because the classifier never sees text outside the rationale, the rationale is by construction the evidence behind each prediction; this property is known as “faithfulness.”
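To make the faithfulness argument concrete, here is a minimal Python sketch of the extract-then-classify flow. It is not our trained model: the token scores stand in for a neural extractor's output, and the keyword-weight `classify` function is a hypothetical stand-in for the neural classifier. What it illustrates is structural: `classify` receives only the selected tokens, so text outside the rationale cannot affect the prediction.

```python
from typing import Dict, List, Tuple


def extract_rationale(tokens: List[str], scores: List[float], threshold: float = 0.5) -> List[str]:
    """Extractor step: keep only tokens whose selection score exceeds the threshold."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    return [tok for tok, s in zip(tokens, scores) if s > threshold]


def classify(rationale: List[str], weights: Dict[str, float], bias: float = 0.0) -> str:
    """Classifier step (hypothetical keyword weights): sees ONLY the rationale tokens."""
    score = bias + sum(weights.get(tok, 0.0) for tok in rationale)
    return "CTE-positive for CD" if score > 0 else "CTE-negative for CD"


def predict(tokens: List[str], scores: List[float], weights: Dict[str, float],
            threshold: float = 0.5) -> Tuple[List[str], str]:
    """Extract, then classify; the returned rationale is exactly what the classifier saw."""
    rationale = extract_rationale(tokens, scores, threshold)
    return rationale, classify(rationale, weights)


if __name__ == "__main__":
    # Made-up report, extractor scores, and classifier weights, for illustration only.
    report = "wall thickening of the terminal ileum with mural hyperenhancement".split()
    scores = [0.9, 0.9, 0.2, 0.1, 0.8, 0.7, 0.1, 0.9, 0.9]
    weights = {"thickening": 1.0, "hyperenhancement": 1.5, "normal": -2.0}
    rationale, label = predict(report, scores, weights)
    print(rationale, label)
```

Swapping an unselected token for any other word leaves the label unchanged, which is the practical meaning of faithfulness in this design.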

Neural Network Implementations of Rationale Extraction

For our RE model, we used two neural network architectures: bidirectional Long Short-Term Memory networks (Bi-LSTM) and Convolutional Neural Networks (CNN). Each architecture is used to implement both the extractor and the classifier. The models are trained with instance-level supervision: only the report-level labels, “CTE-positive for CD” or “CTE-negative for CD,” guide learning, and no token-level rationale annotations are required.

Input tokens are represented with GloVe (Global Vectors for Word Representation) embeddings, which map each word to a dense vector that captures semantic similarity. The extractor then estimates, for each token, the probability that it belongs to the rationale, and a binary mask selects the most discriminative tokens.
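The embedding-to-mask step can be sketched in plain Python. The sketch assumes GloVe's plain-text file format (a word followed by its vector values on each line) and a hypothetical logistic scorer with weights `w` and bias `b`. Here each token's probability depends only on its own embedding; in the actual Bi-LSTM or CNN extractor it would also depend on the surrounding context. The toy three-dimensional vectors are made up (published GloVe vectors are 50 to 300 dimensions).

```python
import math
from typing import Dict, Iterable, List


def load_glove(lines: Iterable[str]) -> Dict[str, List[float]]:
    """Parse GloVe's plain-text format: each line is a word followed by its vector values."""
    table: Dict[str, List[float]] = {}
    for line in lines:
        parts = line.strip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        table[parts[0]] = [float(x) for x in parts[1:]]
    return table


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def selection_probs(tokens: List[str], table: Dict[str, List[float]],
                    w: List[float], b: float = 0.0) -> List[float]:
    """P(token in rationale) = sigmoid(w . embedding + b); unknown words map to a zero vector."""
    zero = [0.0] * len(w)
    return [sigmoid(sum(wi * xi for wi, xi in zip(w, table.get(t.lower(), zero))) + b)
            for t in tokens]


def binary_mask(probs: List[float], threshold: float = 0.5) -> List[int]:
    """Deterministic threshold for illustration; training often samples z_t ~ Bernoulli(p_t)."""
    return [1 if p >= threshold else 0 for p in probs]


if __name__ == "__main__":
    # Hypothetical toy vectors and scorer parameters.
    glove = load_glove(["stricture 0.9 0.1 0.0", "the 0.0 0.0 0.1", "ileum 0.6 0.2 0.1"])
    tokens = "No stricture in the ileum".split()
    probs = selection_probs(tokens, glove, w=[2.0, 1.0, -1.0], b=-0.5)
    print(binary_mask(probs))
```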

Loss Function and Training Process

The loss function guiding the RE training incorporates three main terms: predictive loss, selection loss, and contiguity loss.

- Predictive Loss: Penalizes classification errors made from the rationale, pushing the extractor toward more discriminative rationales and improving classification accuracy.

- Selection Loss: Penalizes the number of selected tokens, keeping rationales concise and reducing noise in the classifier's input.

- Contiguity Loss: Encourages rationales to consist of contiguous segments of text, which are easier for humans to read.

Training on this combined loss updates the extractor and the classifier jointly.
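The three terms can be written out for a single report. The sketch below assumes a common formulation from the rationale-extraction literature: binary cross-entropy for the predictive term, the count of selected tokens for the selection term, and the count of on/off transitions in the mask for the contiguity term. The weights `lam_sel` and `lam_cont` are hypothetical values, not the ones used in this study.

```python
import math
from typing import Sequence


def predictive_loss(p_positive: float, label: int, eps: float = 1e-12) -> float:
    """Binary cross-entropy of the classifier's prediction made from the rationale alone."""
    p = min(max(p_positive, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def selection_loss(mask: Sequence[int]) -> float:
    """Number of selected tokens: longer rationales cost more."""
    return float(sum(mask))


def contiguity_loss(mask: Sequence[int]) -> float:
    """Number of on/off switches between neighboring tokens: fragmented rationales cost more."""
    return float(sum(abs(mask[t] - mask[t - 1]) for t in range(1, len(mask))))


def rationale_loss(p_positive: float, label: int, mask: Sequence[int],
                   lam_sel: float = 0.01, lam_cont: float = 0.02) -> float:
    """Total = predictive + lam_sel * selection + lam_cont * contiguity (weights hypothetical)."""
    return (predictive_loss(p_positive, label)
            + lam_sel * selection_loss(mask)
            + lam_cont * contiguity_loss(mask))


if __name__ == "__main__":
    contiguous = [0, 1, 1, 1, 0, 0]   # 3 tokens selected, 2 transitions
    fragmented = [0, 1, 0, 1, 0, 1]   # 3 tokens selected, 5 transitions
    print(rationale_loss(0.9, 1, contiguous), rationale_loss(0.9, 1, fragmented))
```

Both masks select three tokens, but the fragmented one pays a larger contiguity penalty. Because the mask is discrete, real training cannot backpropagate through it directly; RE models typically rely on a sampling-based gradient estimator or a continuous relaxation, whereas this sketch only evaluates the loss for a fixed mask.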

Insights from Rationales

Beyond their primary function, the rationales generated by our RE model also reveal strong word- and phrase-level indicators for classification. Analyzing the extracted rationales lets us identify these "strong indicators," which help explain how the classifier reaches its decisions.

Using a systematic decomposition approach, we extracted keywords and phrases from the rationales and turned them into rules that drive predictions based on their presence. For instance, a keyword that consistently appears in rationales from “CTE-positive for CD” reports becomes a rule: a report containing it is predicted CTE-positive for CD. The resulting rule-based classifier, built from these strong indicators, adds a layer of insight that complements the neural network approaches.
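One way to turn rationales into such rules is sketched below. It assumes a simple frequency-and-precision criterion: a phrase becomes a strong indicator if it occurs in enough positive rationales and rarely in negative ones. The thresholds, the phrases, and the toy rationales are hypothetical and are not the indicators derived in this study.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def ngrams(tokens: List[str], n_max: int = 2) -> Set[str]:
    """All distinct 1- to n_max-word phrases in a token list."""
    grams: Set[str] = set()
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            grams.add(" ".join(tokens[i:i + n]))
    return grams


def strong_indicators(rationales: Iterable[Tuple[List[str], int]],
                      min_count: int = 2, min_precision: float = 0.9) -> Set[str]:
    """Phrases found in >= min_count positive rationales and, among all rationales
    containing them, >= min_precision positive. Thresholds are hypothetical."""
    pos: Counter = Counter()
    total: Counter = Counter()
    for tokens, label in rationales:
        for g in ngrams([t.lower() for t in tokens]):
            total[g] += 1
            if label == 1:
                pos[g] += 1
    return {g for g, c in pos.items() if c >= min_count and c / total[g] >= min_precision}


def rule_classify(report: str, indicators: Set[str]) -> int:
    """1 = "CTE-positive for CD" if any indicator phrase occurs in the report, else 0."""
    return int(bool(ngrams(report.lower().split()) & indicators))


if __name__ == "__main__":
    # Made-up (rationale tokens, label) pairs; 1 = positive, 0 = negative.
    data = [
        ("active inflammation terminal ileum".split(), 1),
        ("active inflammation with stricture".split(), 1),
        ("no active inflammation".split(), 0),
        ("ileal stricture".split(), 1),
        ("stricture present".split(), 1),
        ("normal small bowel".split(), 0),
    ]
    indicators = strong_indicators(data)
    print(indicators, rule_classify("Long segment stricture of the ileum", indicators))
```

In this toy data, "active inflammation" is rejected because it also appears in the negative rationale "no active inflammation". Conversely, a bare keyword rule like this would still label "no stricture" as positive, so negation is a known weak point of simple presence-based rules.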

Language Modeling for Classification of CTE Reports

In addition to RE models, we assessed three Language Models (LMs) based on the BERT architecture, aimed at improving classification accuracy on CTE reports. The models are:

- DistilBERT: A distilled version of BERT that retains most of BERT's performance while being smaller and faster.

- BioClinicalBERT: A BERT variant trained on biomedical and clinical text, designed to better represent the language of clinical notes.

- IBDBERT: Our custom Language Model, adapted to the language of Inflammatory Bowel Disease (IBD).

The Mechanics of BERT and Domain-Specific Adaptations

BERT uses a Transformer encoder to build context-dependent representations of each word. It is pre-trained with masked language modeling (MLM): some input tokens are hidden, and the model learns to predict them from the surrounding context on both sides. This pre-training gives BERT general language knowledge that can then be adapted to specialized tasks such as document classification.
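The MLM objective can be illustrated with the corruption scheme described in the original BERT paper: about 15% of token positions become prediction targets; of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The sketch below is a simplified, word-level version (real BERT operates on WordPiece subword IDs, and Hugging Face's `DataCollatorForLanguageModeling` implements the same idea on tensors). Choosing each position independently with probability 0.15 is one common way to realize the 15% rate.

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK = "[MASK]"


def mlm_mask(tokens: Sequence[str], vocab: Sequence[str], rng: random.Random,
             select_prob: float = 0.15) -> Tuple[List[str], List[Optional[str]]]:
    """BERT-style corruption for masked language modeling.

    Each position is chosen as a target with probability select_prob. A chosen token
    becomes [MASK] 80% of the time, a random vocabulary token 10% of the time, and is
    left unchanged 10% of the time. labels[i] holds the original token at target
    positions and None elsewhere (those positions are ignored by the loss).
    """
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= select_prob:
            continue
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise keep the original token; the model must still predict it
    return inputs, labels


if __name__ == "__main__":
    sentence = "the terminal ileum shows mural hyperenhancement".split()
    print(mlm_mask(sentence, vocab=sentence, rng=random.Random(0), select_prob=0.5))
```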

For IBDBERT, we further pre-trained the original BERT model on a corpus of IBD-related text, including textbooks and professional guidelines. This domain-specific pre-training helps the model capture the vocabulary and nuances of IBD-related medical language.
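Continued pre-training of this kind usually reuses the MLM objective, but the domain corpus first has to be turned into fixed-length training inputs. A common approach, used for example in Hugging Face's language-modeling example scripts, is to concatenate tokenized documents and cut the stream into equal-length blocks. The sketch below assumes that approach; the block size, separator ID, and token IDs are hypothetical, and BERT-base accepts at most 512 positions per input.

```python
from typing import Iterable, List


def group_into_blocks(tokenized_docs: Iterable[List[int]], block_size: int,
                      sep_id: int) -> List[List[int]]:
    """Concatenate tokenized documents (each followed by sep_id) and cut the stream
    into consecutive blocks of block_size token IDs; the final partial block is dropped."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    stream: List[int] = []
    for ids in tokenized_docs:
        stream.extend(ids)
        stream.append(sep_id)
    usable = (len(stream) // block_size) * block_size
    return [stream[i:i + block_size] for i in range(0, usable, block_size)]


if __name__ == "__main__":
    # Hypothetical token IDs standing in for tokenized textbook and guideline passages.
    docs = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
    print(group_into_blocks(docs, block_size=4, sep_id=0))
```

Packing avoids wasting positions on padding when documents are short, at the cost of some blocks spanning a document boundary.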

Fine-Tuning BERT Models for Specific Tasks

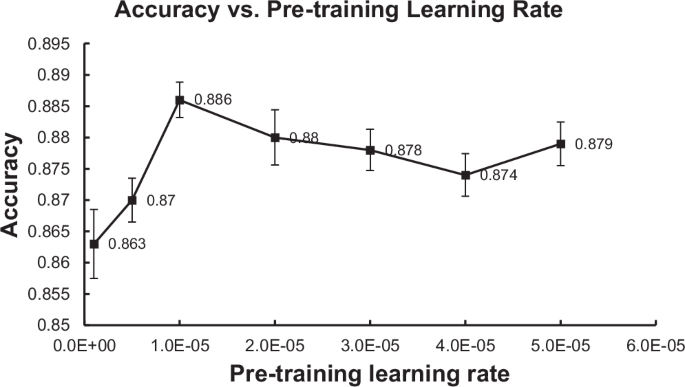

After pre-training, a second stage typically fine-tunes the model on a labeled dataset for the target task. We fine-tuned each BERT model on labeled CTE reports, selecting parameters and hyperparameters for high classification performance.
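Since the specific hyperparameters are not listed here, the sketch below uses hypothetical values within the ranges suggested in the original BERT paper for fine-tuning (learning rate 2e-5 to 5e-5, batch size 16 or 32, 2 to 4 epochs). It also shows a linear warmup-then-decay learning-rate schedule, the same shape produced by Hugging Face's `get_linear_schedule_with_warmup`; whether our runs used this schedule is not stated, so treat it as an assumption.

```python
import math

# Hypothetical fine-tuning settings (not the values used in this study).
CONFIG = {
    "learning_rate": 2e-5,
    "batch_size": 16,
    "epochs": 3,
    "warmup_ratio": 0.1,
    "max_length": 512,
}


def total_training_steps(n_train: int, batch_size: int, epochs: int) -> int:
    """Optimizer steps when each epoch covers all n_train examples once."""
    return math.ceil(n_train / batch_size) * epochs


def linear_warmup_decay(step: int, total_steps: int, warmup_steps: int, base_lr: float) -> float:
    """Learning rate rises linearly from 0 to base_lr over warmup_steps,
    then decays linearly back to 0 at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


if __name__ == "__main__":
    # n_train is a made-up training-set size for illustration.
    total = total_training_steps(n_train=1000, batch_size=CONFIG["batch_size"], epochs=CONFIG["epochs"])
    warmup = int(CONFIG["warmup_ratio"] * total)
    for s in (0, warmup, total // 2, total):
        print(s, linear_warmup_decay(s, total, warmup, CONFIG["learning_rate"]))
```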

Performance Benchmarking Against Recent LLMs

To further validate IBDBERT, we compared its performance against recent general-purpose LLMs: Meta LLaMA and DeepSeek. These models were used in their pre-trained state, without fine-tuning, and evaluated on classifying CTE reports; to compensate, we used prompt engineering to improve their outputs.
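Without fine-tuning, the prompt and the parsing of free-text answers carry the classification. The sketch below shows one hypothetical zero-shot or few-shot prompt template and a parser that maps responses to the two labels. The wording, the POSITIVE/NEGATIVE answer format, and treating ambiguous answers as unparseable are assumptions, not the prompts used in our experiments; sending the prompt to LLaMA or DeepSeek is omitted because it depends on the serving setup.

```python
import re
from typing import List, Optional, Sequence, Tuple

LABELS = {"POSITIVE": "CTE-positive for CD", "NEGATIVE": "CTE-negative for CD"}


def build_prompt(report: str, examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Zero-shot (no examples) or few-shot prompt asking for a one-word answer.
    examples holds (report_text, "POSITIVE" | "NEGATIVE") pairs."""
    parts: List[str] = [
        "You classify CTE reports for Crohn's disease (CD).",
        "Answer with exactly one word: POSITIVE if the report is CTE-positive for CD, otherwise NEGATIVE.",
        "",
    ]
    for text, answer in examples:
        parts += [f"Report: {text}", f"Answer: {answer}", ""]
    parts += [f"Report: {report}", "Answer:"]
    return "\n".join(parts)


def parse_label(response: str) -> Optional[str]:
    """Map a free-text model response to a label; None if absent or ambiguous."""
    found = {m.upper() for m in re.findall(r"\b(positive|negative)\b", response, flags=re.IGNORECASE)}
    if len(found) != 1:
        return None
    return LABELS[found.pop()]


if __name__ == "__main__":
    print(build_prompt("Terminal ileal stricture.", [("Normal study.", "NEGATIVE")]))
    print(parse_label("Answer: POSITIVE"))
```

Counting unparseable responses separately, rather than silently mapping them to one class, keeps the LLM comparison honest about how often the model failed to follow the format.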

Statistical Analysis and Model Evaluation

We evaluated model performance using accuracy, precision, recall, and F1-score. The statistical analysis also included Pearson's chi-squared test for categorical demographic variables.
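For reference, the metrics and the chi-squared test named above can be computed from first principles. The sketch assumes binary labels with 1 = "CTE-positive for CD" and uses a made-up 2 x 2 table; it is a plain-Python illustration, not our evaluation code.

```python
import math
from typing import Dict, List, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 with 1 (CTE-positive for CD) as the positive class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "f1": f1}


def pearson_chi2(table: List[List[int]]) -> float:
    """Pearson's chi-squared statistic (no continuity correction) for an r x c count table."""
    rows = [sum(r) for r in table]
    cols = [sum(c) for c in zip(*table)]
    n = sum(rows)
    if n == 0:
        raise ValueError("table is empty")
    stat = 0.0
    for i, r in enumerate(table):
        for j, observed in enumerate(r):
            expected = rows[i] * cols[j] / n
            if expected == 0:
                raise ValueError("a row or column total is zero")
            stat += (observed - expected) ** 2 / expected
    return stat


def chi2_p_value_1df(stat: float) -> float:
    """Upper-tail p-value for 1 degree of freedom (a 2 x 2 table): erfc(sqrt(stat / 2))."""
    return math.erfc(math.sqrt(stat / 2.0))


if __name__ == "__main__":
    print(binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 1, 0, 1, 0]))
    stat = pearson_chi2([[10, 20], [20, 10]])  # hypothetical demographic counts
    print(stat, chi2_p_value_1df(stat))
```

When cross-checking with `scipy.stats.chi2_contingency`, pass `correction=False`: by default it applies Yates' continuity correction to 2 x 2 tables, which gives a smaller statistic than the uncorrected Pearson value computed here.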

We used PyTorch and Hugging Face's Transformers library on multiple GPUs for both training and inference. This infrastructure supported efficient processing and consistent performance comparisons across our NLP methods.

Conclusion

This evaluation of RE and LM methods demonstrates what NLP can achieve in classifying CTE reports and lays the groundwork for improving both interpretability and performance in medical text analysis. Each approach, with its own strengths, contributes to a richer understanding of the diagnostic narratives found in complex medical reports.