System Framework for Predictive Maintenance in Industrial Manufacturing

Introduction

In industrial manufacturing, predictive maintenance (PdM) uses sensor data and learned models to anticipate equipment failures and to schedule maintenance before breakdowns occur. This article presents a framework for comparing deep learning models in PdM applications, with a focus on making effective use of sensor data. The framework comprises four components: data acquisition and preprocessing, feature extraction and selection, model training and evaluation, and model deployment and integration.

Proposed System Framework

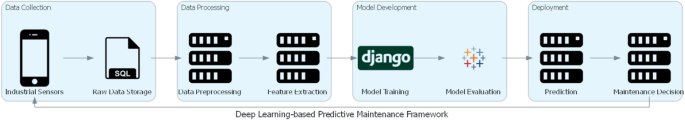

Figure 1 shows the proposed framework for deep learning-based PdM. It consists of four interconnected components that together support accurate and reliable prediction of equipment failures:

- Data Acquisition and Preprocessing

- Feature Extraction and Selection

- Model Training and Evaluation

- Model Deployment and Integration

Together, these components provide a streamlined process for developing, validating, and deploying PdM solutions in industrial settings.

Data Acquisition and Preprocessing

Data Acquisition

The foundation of any predictive maintenance system rests on robust data acquisition methods. Various sensors are employed across industrial machinery to glean insights into performance and condition. Common types of sensor data include:

- Vibration Sensors: Such as accelerometers that reveal mechanical health through vibration patterns, indicating issues like misalignment or wear.

- Temperature Sensors: Devices that monitor heat levels, flagging potential overheating or insufficient lubrication by recording thermal conditions.

- Pressure Sensors: Employed in hydraulic systems to detect abnormalities that may suggest leaks or wear.

- Acoustic Emission Sensors: These detect high-frequency sound waves that hint at internal structural defects.

- Electrical Sensors: These monitor electrical parameters, identifying potential faults in motors or control systems.

In addition, process sensors record manufacturing variables such as production rate, which provide context for interpreting condition monitoring data. The sensors are typically connected to data collection systems such as programmable logic controllers (PLCs), which digitize the gathered data and store it in centralized databases or cloud platforms for further analysis.

Data Preprocessing

The raw sensor data is seldom perfect; it may be riddled with noise, missing values, and outliers that can skew model performance. Thus, thorough data preprocessing is crucial:

- Data Cleaning: This entails managing missing values through methods like mean or median imputation, along with outlier detection and remediation.

- Data Normalization: Techniques like min-max scaling and standardization ensure all sensor data is on a common scale, which promotes faster convergence during model training.

- Feature Extraction: This step seeks to distill meaningful features from the data, with time-domain statistics and frequency-domain analyses employed to capture crucial signal characteristics.

- Feature Selection: It aims to identify the most relevant features for the PdM tasks using correlation analysis or more sophisticated methods.

- Data Splitting: Finally, preprocessed data is divided into training, validation, and test sets to facilitate effective model training and evaluation.
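The preprocessing steps listed above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not a prescribed pipeline: the function names (`impute_median`, `chronological_split`, `fit_minmax`, `apply_minmax`, `window_features`), the 70/15/15 split, and the three example features are all hypothetical choices made for this example. The split is chronological and the scaling statistics are learned from the training rows only, which is one common way to keep information from later data out of training.

```python
import numpy as np

def impute_median(x):
    """Replace NaNs in each column with that column's median."""
    x = np.asarray(x, dtype=float).copy()
    medians = np.nanmedian(x, axis=0)
    rows, cols = np.where(np.isnan(x))
    x[rows, cols] = medians[cols]
    return x

def chronological_split(x, train=0.7, val=0.15):
    """Split rows in time order into training, validation, and test sets."""
    n = len(x)
    i, j = int(n * train), int(n * (train + val))
    return x[:i], x[i:j], x[j:]

def fit_minmax(x_train):
    """Learn per-column minimum and range from the training set only."""
    lo = x_train.min(axis=0)
    span = x_train.max(axis=0) - lo
    span[span == 0] = 1.0  # constant columns: avoid division by zero
    return lo, span

def apply_minmax(x, lo, span):
    """Scale with previously fitted statistics (training data maps to [0, 1])."""
    return (x - lo) / span

def window_features(signal, sample_rate):
    """Example time- and frequency-domain features for one signal window."""
    signal = np.asarray(signal, dtype=float)
    rms = np.sqrt(np.mean(signal ** 2))              # time domain
    peak_to_peak = signal.max() - signal.min()       # time domain
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    dominant = freqs[np.argmax(spectrum)]            # frequency domain
    return np.array([rms, peak_to_peak, dominant])

# Usage on synthetic readings (values are made up for illustration).
readings = np.array([[1.0, 10.0], [np.nan, 20.0], [3.0, np.nan], [5.0, 40.0]])
clean = impute_median(readings)
train_set, val_set, test_set = chronological_split(clean)
lo, span = fit_minmax(train_set)
train_scaled = apply_minmax(train_set, lo, span)
```

Validation and test rows would be scaled with the same `lo` and `span`, so their values may fall slightly outside [0, 1].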

Deep Learning Model Construction

With the processed data at hand, focus shifts to constructing deep learning models tailored for PdM tasks. Key architectures include Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks.

Convolutional Neural Networks (CNNs)

CNNs are well suited to grid-structured data such as time series and images, learning hierarchical features indicative of equipment health. They operate through multiple layers:

- Convolutional Layers: Apply filters to extract localized features from the input data.

- Pooling Layers: Reduce data dimensionality and provide translation invariance.

- Fully Connected Layers: Aggregate the learned features to perform final predictions.

In the context of PdM, sensor signals can be fed to a CNN either as raw 1D sequences or as 2D representations such as spectrograms, allowing the network to exploit the temporal and frequency information vital for condition monitoring.
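To make the three layer types concrete, the following is a minimal sketch of a forward pass through one convolutional layer, one pooling layer, and one fully connected layer on a 1D signal, written in plain NumPy. It assumes a single-channel input, a ReLU activation, and a sigmoid output read as a fault probability; the function names and the `params` dictionary layout are hypothetical, and the weights are random rather than trained.

```python
import numpy as np

def conv1d(x, kernels, bias):
    """Valid 1D cross-correlation, the operation deep learning libraries call convolution.

    x: (length,), kernels: (n_filters, k), bias: (n_filters,)
    returns: (n_filters, length - k + 1)
    """
    k = kernels.shape[1]
    windows = np.lib.stride_tricks.sliding_window_view(x, k)  # (length - k + 1, k)
    return kernels @ windows.T + bias[:, None]

def relu(z):
    return np.maximum(z, 0.0)

def max_pool1d(feature_map, size):
    """Non-overlapping max pooling along the time axis (trailing remainder dropped)."""
    n_filters, length = feature_map.shape
    usable = (length // size) * size
    return feature_map[:, :usable].reshape(n_filters, -1, size).max(axis=2)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cnn_forward(x, params):
    """Convolution -> ReLU -> pooling -> fully connected layer -> probability."""
    h = relu(conv1d(x, params["kernels"], params["conv_bias"]))
    h = max_pool1d(h, params["pool"])
    logit = params["dense_w"] @ h.ravel() + params["dense_b"]
    return sigmoid(logit)

# Usage with random, untrained weights on a 64-sample window.
rng = np.random.default_rng(0)
params = {
    "kernels": rng.normal(scale=0.1, size=(4, 5)),  # 4 filters of width 5
    "conv_bias": np.zeros(4),
    "pool": 4,
    "dense_w": rng.normal(scale=0.1, size=4 * 15),  # (64 - 5 + 1) // 4 = 15 steps per filter
    "dense_b": 0.0,
}
fault_probability = cnn_forward(rng.normal(size=64), params)
```

A 2D variant would apply the same idea to a spectrogram, with filters that slide over both time and frequency.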

Long Short-Term Memory (LSTM) Networks

LSTM networks are a common choice for sequential data. Their cells are designed to capture long-term dependencies in sensor data:

- Memory Cell: Maintains data across time steps, allowing the network to recall information from earlier data points.

- Gates: Control information flow—regulating new inputs, information retention, and outputs.

LSTM networks can process sensor data as time-series inputs, learning dependencies that span multiple time steps, which is fundamental in accurately predicting equipment failure.
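The interaction between the memory cell and the gates can be sketched as a single LSTM time step in NumPy. This follows the standard LSTM formulation with input, forget, and output gates; the function names, the stacked weight layout (`W`, `U`, `b`), and the gate ordering are assumptions made for this example, and the weights are random rather than trained.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4 * hidden, n_inputs), U: (4 * hidden, hidden), b: (4 * hidden,)
    Rows are stacked in the order: input gate, forget gate, candidate, output gate.
    """
    hidden = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[:hidden])                  # input gate: how much new information to write
    f = sigmoid(z[hidden:2 * hidden])        # forget gate: how much old memory to keep
    g = np.tanh(z[2 * hidden:3 * hidden])    # candidate values for the memory cell
    o = sigmoid(z[3 * hidden:])              # output gate: how much memory to expose
    c = f * c_prev + i * g                   # updated memory cell
    h = o * np.tanh(c)                       # updated hidden state
    return h, c

def lstm_encode(sequence, W, U, b):
    """Run the cell over a (time_steps, n_inputs) sequence and return the last hidden state."""
    hidden = U.shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x_t in sequence:
        h, c = lstm_step(x_t, h, c, W, U, b)
    return h

# Usage: 20 time steps of 2 sensor channels, 3 hidden units, untrained weights.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(12, 2))
U = rng.normal(scale=0.5, size=(12, 3))
b = np.zeros(12)
summary = lstm_encode(rng.normal(size=(20, 2)), W, U, b)
```

In a PdM model, `summary` would typically feed a final layer that outputs a fault probability or a remaining-useful-life estimate.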

Model Training and Optimization

The journey from model construction to deployment necessitates rigorous optimization. This involves:

- Initializing Parameters: Network weights start from random values or from pre-trained weights.

- Training Methodology: Preprocessed data feeds into the model, enabling forward and backward passes, with the objective of minimizing a specific loss function related to the task.

- Optimizing with Algorithms: Techniques like Stochastic Gradient Descent or Adam fine-tune network parameters throughout the training process.

- Regularization Techniques: Dropout and early stopping combat overfitting, while learning rate schedules help stabilize convergence.

Training continues until a convergence criterion signals readiness for evaluation, where the model’s predictive power is rigorously assessed on unseen data.
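The training procedure described above can be sketched as a mini-batch gradient descent loop with early stopping. To keep the example short and self-contained, a logistic regression model stands in for the deep network; that substitution, the function names, and every hyperparameter value (`lr`, `batch`, `patience`, and so on) are assumptions for illustration only. A deep learning framework would compute the gradients by backpropagation instead of the closed form used here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(w, b, x, y):
    """Binary cross-entropy, the loss minimized during training."""
    p = np.clip(sigmoid(x @ w + b), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

def train(x_tr, y_tr, x_val, y_val, lr=0.1, batch=32,
          max_epochs=200, patience=10, seed=0):
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.01, size=x_tr.shape[1])  # random initialization
    b = 0.0
    best_val, best_w, best_b = np.inf, w.copy(), b
    stale = 0
    for epoch in range(max_epochs):
        order = rng.permutation(len(x_tr))           # reshuffle every epoch
        for start in range(0, len(order), batch):
            idx = order[start:start + batch]
            err = sigmoid(x_tr[idx] @ w + b) - y_tr[idx]  # forward pass and loss gradient
            w -= lr * x_tr[idx].T @ err / len(idx)        # SGD parameter update
            b -= lr * err.mean()
        val = log_loss(w, b, x_val, y_val)
        if val < best_val - 1e-6:                    # validation loss improved
            best_val, best_w, best_b, stale = val, w.copy(), b, 0
        else:
            stale += 1
            if stale >= patience:                    # early stopping
                break
    return best_w, best_b, best_val

# Usage on synthetic, linearly separable data (made up for illustration).
rng = np.random.default_rng(1)
x = rng.normal(size=(400, 2))
y = (x[:, 0] + x[:, 1] > 0).astype(float)
w, b, val_loss = train(x[:300], y[:300], x[300:], y[300:])
```

The loop returns the parameters with the lowest validation loss, not the final ones, which is what makes early stopping act as a guard against overfitting.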

Model Evaluation Metrics

Assessing the performance of trained models is paramount for ensuring reliability in real-world applications. Key metrics employed in PdM evaluations include:

- Accuracy: This overall correctness ratio provides a snapshot of the model’s performance.

- Precision: The proportion of true positive predictions out of all predicted positives, aiming to minimize false positives.

- Recall: The model’s ability to capture actual fault scenarios, focusing on reducing false negatives.

- F1-Score: A balanced measure that combines precision and recall, proving especially useful in imbalanced datasets.

Each metric provides a different lens on model performance, and a comprehensive evaluation employs multiple measures to ensure a robust understanding of predictive capabilities.
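The four metrics can be computed directly from the confusion-matrix counts. The sketch below assumes binary labels where 1 marks a fault and 0 marks normal operation; the function name `classification_metrics` and the example labels are hypothetical. Returning 0.0 when a denominator is zero is one common convention, not the only one.

```python
import numpy as np

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = fault)."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = int(np.sum(y_true & y_pred))     # faults correctly flagged
    tn = int(np.sum(~y_true & ~y_pred))   # normal operation correctly passed
    fp = int(np.sum(~y_true & y_pred))    # false alarms
    fn = int(np.sum(y_true & ~y_pred))    # missed faults
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Usage: 2 faults among 10 samples (an imbalanced toy example).
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_pred = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
always_normal = [0] * 10
model_scores = classification_metrics(y_true, model_pred)
baseline_scores = classification_metrics(y_true, always_normal)
```

On this toy data both predictors reach the same accuracy of 0.8, yet the predictor that never flags a fault has a recall and F1-score of 0, which illustrates why accuracy alone can mislead on imbalanced PdM datasets.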

Conclusion

This detailed exploration of the system framework for predictive maintenance underscores the significance of deep learning in transforming industrial manufacturing systems. By establishing structured methodologies for data acquisition, processing, modeling, and evaluation, industries are better equipped to predict failures and optimize their maintenance strategies effectively.