Automated News Digest: Leveraging Deep Learning and NLP for Text Summarization

Understanding Text Summarization in NLP

Text summarization in Natural Language Processing (NLP) is the automatic generation of a concise summary from a longer body of text. It lets readers absorb information efficiently at a time when far more content is published each day than anyone can read in full. By condensing articles to their key points, summarization gives users quicker access to important content without sifting through lengthy text.

Key Components of Deep Learning Models for Summarization

Several core components determine the effectiveness of a text summarization model. First, the training dataset is crucial: models trained on high-quality, domain-specific data yield better summaries. Second, the architecture and its pre-training matter; T5, BART, and PEGASUS are all encoder-decoder Transformers, but each is pre-trained with a different objective. Finally, evaluation metrics such as ROUGE and BLEU make it possible to assess model performance quantitatively.

For instance, Google and Facebook took distinct approaches. Google’s T5 casts every NLP task, summarization included, as a text-to-text problem, so one model and one training setup can serve many tasks. Facebook’s BART pairs a bidirectional encoder with an auto-regressive decoder, so it reads the full input in context before generating the summary token by token.

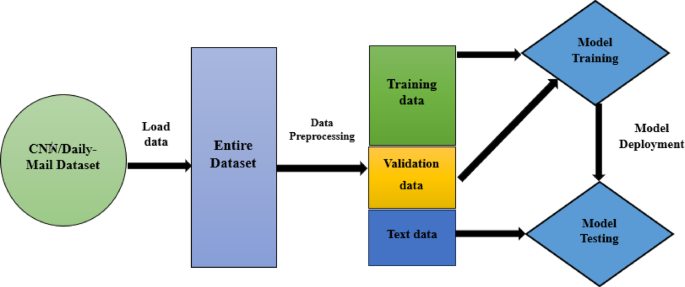

The Lifecycle of Text Summarization Deployment

Deploying a text summarization model follows a structured lifecycle:

- Dataset Preparation: Curate a relevant dataset that reflects the summary requirements.

- Model Selection: Choose an appropriate model based on the task’s complexity and performance metrics.

- Training: Feed the model with the training data, allowing it to learn summarization patterns.

- Evaluation: Utilize ROUGE and BLEU scores to assess the summarization quality.

- Fine-tuning: Adjust the model parameters based on evaluation results to enhance performance.

- Deployment: Integrate the model into applications, making summaries available for user access.

- Feedback Loop: Collect user feedback to continuously improve the model.

Each step is essential for producing high-quality outcomes, reflecting a thorough approach to building an effective summarization tool.
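As a rough illustration of the Dataset Preparation step, the sketch below cleans a list of (article, summary) pairs and splits it into training, validation, and test sets so that the later Evaluation step scores the model on text it has not seen. The function names, the 50-word minimum, and the 80/10/10 split are illustrative assumptions, not recommendations from any particular library.

```python
import random


def prepare_pairs(pairs, min_article_words=50):
    """Drop pairs with an empty summary, a too-short article, or a duplicate article."""
    seen = set()
    cleaned = []
    for article, summary in pairs:
        article, summary = article.strip(), summary.strip()
        if not summary or len(article.split()) < min_article_words:
            continue
        if article in seen:
            continue
        seen.add(article)
        cleaned.append((article, summary))
    return cleaned


def split_pairs(pairs, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle with a fixed seed and split into (train, validation, test)."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    n_test = int(len(shuffled) * test_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test
```

Fixing the seed keeps the split reproducible, which matters when evaluation scores from different training runs are compared.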

Practical Applications of Text Summarization

Fields such as news media, law, and academia rely heavily on text summarization to distill critical information swiftly. For example, a news aggregator might use T5-large to summarize daily articles, so that users receive the essential details without unnecessary text. Similarly, law firms can use summarization models to extract key points from extensive case files, streamlining their workflow.
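A minimal sketch of how such a news digest could be assembled is shown below. The `build_digest` function, the article dictionary keys (`title`, `body`), and the `first_sentence` stand-in are hypothetical names chosen for illustration. `make_t5_summarizer` assumes the Hugging Face `transformers` package and PyTorch are installed and that the `t5-large` checkpoint can be downloaded; it is not called here, so the rest of the block runs without them.

```python
from typing import Callable, Iterable


def build_digest(articles: Iterable[dict], summarize: Callable[[str], str]) -> str:
    """Return a plain-text digest with one summarized entry per article."""
    entries = []
    for article in articles:
        summary = summarize(article["body"]).strip()
        entries.append(f"{article['title']}\n{summary}")
    return "\n\n".join(entries)


def make_t5_summarizer(model_name: str = "t5-large", max_new_tokens: int = 60):
    """Wrap a T5 checkpoint as a str -> str summarizer (assumes transformers and torch)."""
    # Imported lazily so the rest of the sketch works without these packages.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    def summarize(text: str) -> str:
        # T5 selects the task through a text prefix on the input.
        inputs = tokenizer("summarize: " + text, return_tensors="pt",
                           truncation=True, max_length=512)
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, num_beams=4)
        return tokenizer.decode(output_ids[0], skip_special_tokens=True)

    return summarize


def first_sentence(text: str) -> str:
    """Trivial stand-in summarizer for trying the digest without a model."""
    return text.split(". ")[0].rstrip(".") + "."


articles = [
    {"title": "Budget approved", "body": "The council approved the budget. Debate lasted hours."},
    {"title": "Storm warning", "body": "A storm is expected tonight. Residents should stay indoors."},
]
digest = build_digest(articles, first_sentence)
```

Passing the summarizer in as a callable keeps the digest logic independent of the model, so `first_sentence` can be swapped for `make_t5_summarizer()` (or a BART or PEGASUS wrapper) without touching `build_digest`.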

Consider a fictional news site, "QuickNews," that implemented PEGASUS for summarizing breaking news. The model effectively provided clear, concise summaries that preserved key elements from the original articles, significantly boosting user engagement and reducing time spent searching for information.

Challenges and How to Avoid Them

Despite their potential, text summarization models face challenges. One common pitfall is over-simplification, where the model fails to encapsulate the core message of the text. For instance, shorter summaries might omit critical details, leading to user dissatisfaction. To mitigate this, training on diverse datasets and fine-tuning are essential.

Another challenge is the balance between precision and recall in summaries. A model with good precision might provide summaries that don’t cover all necessary aspects, while one focused solely on recall may yield verbose outputs. Utilizing mixed evaluation metrics can help strike this balance.
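The trade-off can be made concrete with a small word-overlap calculation. The sketch below is an illustration rather than a standard library routine: it scores a terse and a verbose candidate against one reference and combines precision and recall into an F-score. The example sentences and the `overlap_scores` name are invented for this purpose.

```python
from collections import Counter


def overlap_scores(candidate, reference, beta=1.0):
    """Word-level precision, recall, and F-beta of a candidate against a reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared words, counted at most as often as in either text
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    if precision + recall == 0:
        return precision, recall, 0.0
    f_beta = (1 + beta**2) * precision * recall / (beta**2 * precision + recall)
    return precision, recall, f_beta


reference = "council approves budget for new school and road repairs"
terse = "council approves budget"
verbose = ("after a long and heated meeting the council approves budget "
           "for new school and road repairs amid protests")

for name, text in [("terse", terse), ("verbose", verbose)]:
    p, r, f = overlap_scores(text, reference)
    print(f"{name}: precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

The terse candidate is fully precise but covers only a third of the reference, while the verbose one covers all of it at half the precision. A `beta` above 1 weights recall more heavily and a `beta` below 1 favors precision.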

Tools and Metrics for Evaluating Summarization

When assessing the performance of summarization models, metrics such as ROUGE and BLEU are integral. ROUGE, which stands for Recall-Oriented Understudy for Gisting Evaluation, measures how closely machine-generated summaries match human-written ones. Common ROUGE variants include:

- ROUGE-1: Assesses overlap of unigrams (single words) between generated and reference summaries.

- ROUGE-2: Evaluates bigram (pairs of words) overlap for deeper contextual insights.

- ROUGE-L: Focuses on the longest common subsequence, rewarding words that appear in the same order as in the reference even when they are not adjacent.
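To show what these three variants compute, here is a simplified pure-Python sketch. It assumes lowercased, whitespace-split tokens and a single reference; published ROUGE toolkits typically add options such as stemming, so their scores can differ from these. The function names are illustrative.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def f1(precision, recall):
    return 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)


def rouge_n(candidate, reference, n):
    """ROUGE-N precision, recall, and F1 from clipped n-gram overlap."""
    cand = Counter(ngrams(candidate.lower().split(), n))
    ref = Counter(ngrams(reference.lower().split(), n))
    overlap = sum((cand & ref).values())  # minimum count per shared n-gram
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    return precision, recall, f1(precision, recall)


def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    """ROUGE-L precision, recall, and F1 from the longest common subsequence."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(cand, ref)
    precision = lcs / max(len(cand), 1)
    recall = lcs / max(len(ref), 1)
    return precision, recall, f1(precision, recall)


reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"
print("ROUGE-1:", rouge_n(candidate, reference, 1))
print("ROUGE-2:", rouge_n(candidate, reference, 2))
print("ROUGE-L:", rouge_l(candidate, reference))
```

In this example, changing one word costs a single unigram but two of the five bigrams, which is why ROUGE-2 drops more sharply than ROUGE-1. ROUGE-L still finds the five-word subsequence "the cat on the mat".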

On the other hand, BLEU (Bilingual Evaluation Understudy) scores are primarily used in translation but are also useful in summarization. They gauge how accurately generated summaries match human references by analyzing n-gram precision, offering a different lens for evaluation.
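The n-gram precision at the heart of BLEU can be sketched as follows. This is a simplified single-reference, sentence-level version without smoothing, using whitespace tokenization and up to bigrams by default; these are assumptions made for brevity, and established implementations differ in these details. The function names are illustrative.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped precision: a candidate n-gram counts at most as often as it occurs in the reference."""
    cand = ngram_counts(candidate, n)
    ref = ngram_counts(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def bleu(candidate_text, reference_text, max_n=2):
    """Geometric mean of clipped n-gram precisions times a brevity penalty."""
    cand = candidate_text.lower().split()
    ref = reference_text.lower().split()
    precisions = [modified_precision(cand, ref, n) for n in range(1, max_n + 1)]
    if not cand or min(precisions) == 0:
        return 0.0  # no smoothing in this sketch
    log_mean = sum(math.log(p) for p in precisions) / max_n
    # Candidates shorter than the reference are penalized, since precision alone rewards brevity.
    brevity_penalty = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(log_mean)


reference = "the cat sat on the mat"
print(modified_precision("the the the the".split(), reference.split(), 1))
print(bleu("the cat lay on the mat", reference))
```

Clipping is what keeps a degenerate candidate such as "the the the the" from scoring perfect unigram precision, and the brevity penalty compensates for the fact that BLEU has no recall term.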

Variations in Summarization Models

Different models cater to specific summarization needs, each with trade-offs. For instance, T5-base (roughly 220 million parameters) is comparatively lightweight and effective for simpler tasks, while T5-large (roughly 770 million parameters) has more capacity for intricate language at a higher compute cost. Meanwhile, BART is particularly adept at generating coherent outputs because it pairs a bidirectional encoder with an auto-regressive decoder. Choosing the right model depends on requirements such as the depth of content understanding and the volume of text to process.

PEGASUS is pre-trained specifically for abstractive summarization, which can shorten the path to deployment, but it may require substantial computational resources. Weighing these trade-offs is essential for teams evaluating which model best suits their needs.

FAQs

What is the main difference between ROUGE and BLEU scores?

ROUGE primarily focuses on recall and overlaps between generated and reference summaries, while BLEU emphasizes precision and assesses n-gram matches across translations and summaries.

Can summarization models handle multilingual tasks?

Yes, but it depends on the model. The original T5 was pre-trained mostly on English text; multilingual variants such as mT5 are pre-trained on many languages and can be fine-tuned to summarize inputs in them.

How important is fine-tuning for model performance?

Fine-tuning is crucial; it allows models to adapt to specific datasets, significantly improving recall and precision in the summarization output.

Is there a risk of model bias in text summarization?

Yes, models can be biased based on the training data they are exposed to. Ensuring a diverse and representative dataset helps mitigate this issue.