“Automated News Digest: Leveraging Deep Learning and NLP for Text Summarization”

Text summarization has become a fundamental aspect of processing news and information efficiently. At its core, it relies on Natural Language Processing (NLP) techniques and deep learning models that can analyze large volumes of text and extract essential information. This capability is crucial in today’s fast-paced digital environment, where consumers seek quick and concise summaries of complex content.

Understanding Key Concepts in Text Summarization

Text summarization techniques can be broadly categorized into extractive and abstractive methods. Extractive summarization identifies and pulls key sentences directly from the text, while abstractive summarization generates new sentences, capturing the essence of the original content. For instance, an extractive summary of a news article might include verbatim quotations from important sections, whereas an abstractive summary would reformulate the key messages in new words, often enhancing clarity and coherence.
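To make the extractive side concrete, here is a minimal sketch of a frequency-based extractive summarizer. It is an illustration only: the stopword list, the naive sentence splitter, and the function names are assumptions made for this example, and production systems use far more capable sentence scoring.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "it", "that",
             "on", "for", "with", "as", "was", "by", "at", "its"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(text):
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9']+", text.lower())
            if w not in STOPWORDS]


def extractive_summary(text, num_sentences=2):
    """Return the highest-scoring sentences verbatim, in document order."""
    sentences = split_sentences(text)
    freqs = Counter(tokenize(text))

    def score(sentence):
        words = tokenize(sentence)
        # Average document frequency of the sentence's content words.
        return sum(freqs[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])
    return [sentences[i] for i in keep]
```

Every sentence in the output is copied unchanged from the input, which is the defining property of extractive summarization. An abstractive system would instead generate new wording, which requires a trained sequence-to-sequence model.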

Understanding these terms is vital because businesses rely on accurate summarization for report generation, data analysis, and even customer service. The ability to condense information effectively can significantly improve decision-making processes and enhance user experience.

Key Components of Text Summarization Models

Text summarization hinges on several critical components: Neural Networks, Pre-training, and Fine-tuning. Neural networks, particularly those based on the transformer architecture, have revolutionized how machines understand and generate text. Pre-training involves training models on large datasets to grasp general language structures, while fine-tuning adapts these models to specific tasks, such as summarizing news articles.

For example, the PEGASUS model is pre-trained with a gap-sentence generation objective: whole sentences are removed from a document, and the model learns to generate them from the remaining text. Because this task closely resembles summarization, it helps the model produce better summaries. Fine-tuning further refines how the model handles specific types of content, making it particularly suitable for domains like news summarization.
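PEGASUS's published pre-training objective is gap-sentence generation: important sentences are masked out of a document and the model is trained to produce them. The sketch below shows how one such training pair could be built. It is a simplification under stated assumptions: sentence importance is approximated by plain word overlap with the rest of the document rather than the ROUGE-based selection used in the original work, and `MASK_TOKEN` and all function names are placeholders chosen for this example.

```python
import re

MASK_TOKEN = "<mask_1>"  # placeholder sentinel chosen for this sketch


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def words(text):
    """Set of distinct lowercase word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def overlap_score(sentence, others):
    """Fraction of the sentence's distinct words found in the other sentences."""
    sent_words = words(sentence)
    rest_words = set().union(*(words(o) for o in others))
    return len(sent_words & rest_words) / len(sent_words) if sent_words else 0.0


def make_gap_sentence_example(document, num_gaps=1):
    """Mask the most 'central' sentences; return (masked source, target)."""
    sentences = split_sentences(document)
    scores = [overlap_score(s, sentences[:i] + sentences[i + 1:])
              for i, s in enumerate(sentences)]
    ranked = sorted(range(len(sentences)),
                    key=lambda i: scores[i], reverse=True)
    gap_ids = sorted(ranked[:num_gaps])
    source = " ".join(MASK_TOKEN if i in gap_ids else s
                      for i, s in enumerate(sentences))
    target = " ".join(sentences[i] for i in gap_ids)
    return source, target
```

The model sees `source` as input and is trained to emit `target`, so pre-training already rehearses the act of producing the key sentence of a document.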

The Lifecycle of a Summarization Model

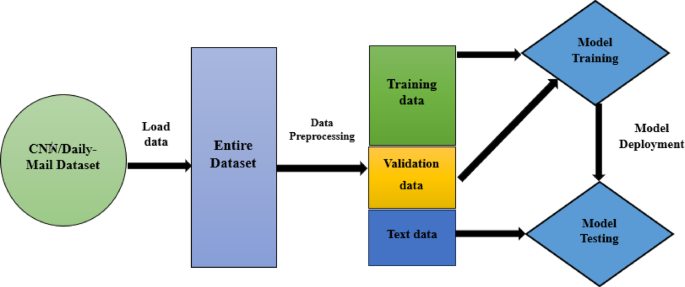

Developing a text summarization model follows a sequence of stages, each of which contributes to the effectiveness of the final model. This lifecycle typically includes the following steps:

- Data Collection: Gather large and diverse datasets that cover a range of topics.

- Pre-processing: Clean and format the data to make it suitable for model training, including removing irrelevant information or correcting errors.

- Model Selection: Choose a deep learning architecture appropriate for the task. For instance, T5 and BART are popular encoder-decoder choices with strong language understanding and generation abilities.

- Training: Apply pre-training on a substantial corpus followed by fine-tuning with a task-specific dataset, optimizing the model for summarization.

- Evaluation: Assess model performance using metrics such as ROUGE and BLEU, which measure the similarity between the generated summaries and human-authored versions.

- Deployment and Iteration: Deploy the model in real-world applications, gather user feedback, and make iterative improvements based on performance and accuracy.

Each stage of this lifecycle is essential for ensuring that the summarization model not only performs well in theory but also delivers value in practice.
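As an illustration of the pre-processing stage, the sketch below cleans raw article text before training. The specific rules (stripping HTML tags, collapsing whitespace, dropping very short items and exact duplicates) and the `min_words` threshold are assumptions for this example; a real pipeline would tailor them to its data source.

```python
import html
import re


def clean_article(raw):
    """Strip HTML tags, decode entities, and normalize whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)   # remove tags such as <p> or <br/>
    text = html.unescape(text)            # e.g. "&amp;" becomes "&"
    return re.sub(r"\s+", " ", text).strip()


def preprocess(raw_articles, min_words=5):
    """Clean each article, then drop too-short items and exact duplicates."""
    seen = set()
    cleaned = []
    for raw in raw_articles:
        text = clean_article(raw)
        if len(text.split()) < min_words:
            continue  # too short to be a useful training example
        key = text.lower()
        if key in seen:
            continue  # duplicate of an article already kept
        seen.add(key)
        cleaned.append(text)
    return cleaned
```

Deduplication matters here because news feeds often syndicate the same story many times, and repeated examples can skew both training and evaluation.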

Practical Example: Comparing Model Performance

To illustrate the effectiveness of deep learning models for summarization, consider results from a comparison of T5, BART, and PEGASUS, including their large variants.

In initial testing, PEGASUS-large achieved a ROUGE score of approximately 0.2493 and a BLEU score of 0.0317. BART scored lower, with ROUGE and BLEU scores around 0.1352 and 0.0182, respectively, indicating that PEGASUS-large aligned more closely with the human-written reference summaries in this test. This assessment highlights how models differ in their ability to produce coherent summaries and why some suit particular applications better than others.

Recognizing these variations allows organizations to make informed choices about which model to adopt based on their specific summarization needs. Understanding these differences ultimately optimizes resource allocation and improves output quality.

Common Pitfalls in Text Summarization

Several pitfalls can arise when building and deploying summarization models, often hurting performance. One common issue is overfitting, where a model fits its training data too closely and captures noise instead of meaningful patterns. This leads to poor generalization when the model encounters new text.

Techniques such as regularization and training on diverse datasets help prevent overfitting. Another pitfall is the model’s inability to capture essential context or nuances in the text. Using a more capable architecture, such as T5 with its encoder-decoder design, is one way to improve context understanding.
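The article does not name a specific regularization technique, so as one common example, the sketch below shows early stopping: training halts once validation loss stops improving, and the best checkpoint is kept. The function name, the `patience` and `min_delta` parameters, and the loss values in the usage note are all hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the epoch index whose checkpoint early stopping would keep.

    Training is considered stopped once `patience` consecutive epochs pass
    without the validation loss improving by more than `min_delta`.
    """
    if not val_losses:
        raise ValueError("val_losses must contain at least one value")
    best_epoch = 0
    best_loss = float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch
```

With made-up validation losses `[2.1, 1.7, 1.5, 1.6, 1.8]` and `patience=2`, the function returns epoch 2: validation loss rises after that point, which is the usual sign that the model has started to memorize training noise.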

Training on well-structured datasets relevant to the summarization task also mitigates the risk of missing critical insights.

Tools and Metrics for Evaluation

Evaluating summarization quality typically relies on established metrics such as ROUGE and BLEU. ROUGE measures the overlap between machine-generated and reference summaries by computing precision and recall over unigrams (ROUGE-1), bigrams (ROUGE-2), and the longest common subsequence (ROUGE-L). It indicates how closely a model’s output matches human-written summaries.
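The ROUGE calculation can be sketched in a few lines. This is a simplified version for illustration: it tokenizes on whitespace and skips the stemming and other normalization that standard ROUGE tooling applies, so its numbers will not match official implementations exactly. All function names are chosen for this example.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def prf(overlap, cand_total, ref_total):
    """Precision, recall, and F1 from an overlap count and two totals."""
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def rouge_n(candidate, reference, n=1):
    """ROUGE-N: clipped n-gram overlap between candidate and reference."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # & keeps the minimum count per n-gram
    return prf(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    """ROUGE-L: precision/recall based on the longest common subsequence."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    return prf(lcs_length(cand, ref), len(cand), len(ref))
```

For the candidate "the council approved the budget" and the reference "the city council approved the new budget", ROUGE-1 precision is 5/5 and recall is 5/7, while ROUGE-2 precision is 2/4 and recall is 2/6. The drop from unigrams to bigrams shows how ROUGE-2 penalizes differences in word order and phrasing.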

BLEU, by contrast, is precision-oriented: it measures how many n-grams in the generated text also appear in the human-written reference and applies a brevity penalty to outputs that are too short. It serves as a rough proxy for fluency and overall quality rather than a direct measure of either. Both metrics are widely used in academic research and in industry, which allows standardized evaluations that guide model improvements.
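A minimal sentence-level BLEU can be sketched as follows. It is a simplified illustration assuming a single reference, whitespace tokenization, and no smoothing; standard toolkits differ in those details, so scores will not match them exactly. The function names are chosen for this example.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Unsmoothed single-reference BLEU with uniform n-gram weights."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clipping: each n-gram is credited at most as often as in the reference.
        clipped = sum((cand_counts & ref_counts).values())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, any zero precision zeroes the score
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) > len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)
```

Because a single missing 4-gram match drives the unsmoothed score to zero, BLEU values for short summaries are often very small, which is worth keeping in mind when reading low absolute BLEU numbers.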

Variations and Alternatives in Summarization Models

Different models present various trade-offs in terms of performance, computational efficiency, and adaptability. For example, while T5-large excels at capturing complex relationships within the text, it requires more computational resources than lighter models like T5-base, which can still summarize effectively without extensive processing power.
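A quick back-of-the-envelope calculation shows the scale of that difference. The sketch below estimates the memory needed just to hold model weights, using the approximate published parameter counts for T5-base (about 220 million) and T5-large (about 770 million). It ignores activations, optimizer state, and framework overhead, so real usage is higher; the helper names are assumptions for this example.

```python
# Approximate parameter counts; treat these as rough published figures.
APPROX_PARAMS = {
    "t5-base": 220_000_000,
    "t5-large": 770_000_000,
}

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(model_name, dtype="fp32"):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return APPROX_PARAMS[model_name] * BYTES_PER_PARAM[dtype] / 1e9


def models_that_fit(budget_gb, dtype="fp32"):
    """Names of models whose weights fit within the given memory budget."""
    return [name for name in APPROX_PARAMS
            if weight_memory_gb(name, dtype) <= budget_gb]
```

At 32-bit precision this gives roughly 0.88 GB for T5-base and 3.08 GB for T5-large, so a 2 GB budget fits only the smaller model unless the weights are stored in half precision.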

Deciding which model to use often depends on the specific application context, such as the volume of text being processed, the required summarization depth, and available computational resources. Organizations must weigh these factors to achieve a well-balanced approach to text summarization.

FAQ

- What is the difference between extractive and abstractive summarization?

  Extractive summarization pulls sentences directly from the source, while abstractive summarization creates new sentences that convey the essential messages.

- How do ROUGE and BLEU scores compare?

  ROUGE reports recall and precision based on n-gram and longest-common-subsequence overlap, whereas BLEU assesses the precision of n-grams in the generated text against reference summaries.

- What is the importance of fine-tuning models?

  Fine-tuning allows models to adapt to specific tasks, ensuring they perform well for targeted applications, such as summarizing news articles.

- Why is context important in summarization?

  Understanding context helps models capture more nuanced meanings, improving the coherence and relevance of the generated summaries.