In-Depth Analysis of Skin-DeepNet: Experimental Setup, Methodology, and Performance Metrics

Introduction to Skin-DeepNet

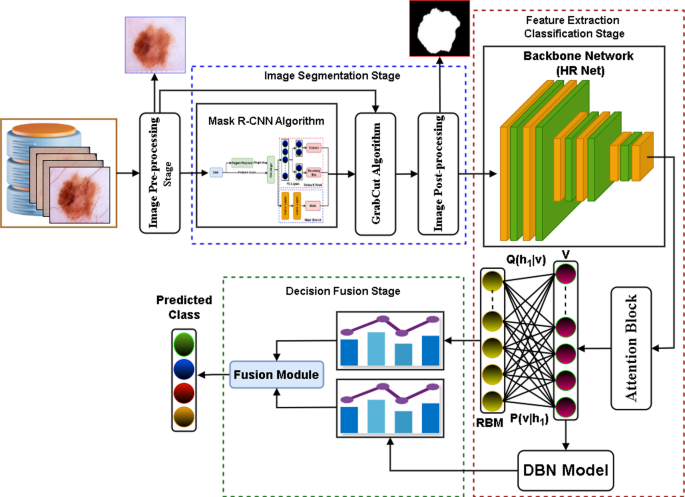

The fight against skin cancer calls for better diagnostic technology. Skin-DeepNet is a promising approach that applies deep learning to skin lesion classification. This article reviews its experimental setup, methodology, and performance metrics, and compares it with current state-of-the-art systems.

Implementation Details

Skin-DeepNet is implemented in Python and run in a Google Colab environment. The reported configuration is a 69K GPU, 16 GB of RAM, and a 64-bit Windows 10 operating system on an Intel Core i7 processor. TensorFlow is the deep learning framework.

To keep inputs consistent across the different deep neural network architectures, all images are pre-processed by resizing them to 224 × 224 pixels, the input size expected by many pre-trained models.

Dataset Splitting

For robust testing and validation protocols, the Skin-DeepNet system employs the ISIC 2019 Challenge dataset, dividing it into training (70% or 17,732 images), validation (15% or 3,799 images), and testing (15% or 3,799 images) subsets. This careful allocation strikes a balance between sufficient training data and representative subsets for validation and testing. A similar partitioning approach is applied to the HAM10000 dataset.
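A 70/15/15 partition of this kind can be sketched as below. This is a minimal illustration, not the authors' code: splitting per class (stratification), the fixed seed, and the toy label list are all assumptions, since the source states only the proportions.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_frac=0.70, val_frac=0.15, seed=42):
    """Return (train, val, test) index lists, split class by class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * train_frac)
        n_val = round(len(indices) * val_frac)
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Toy labels standing in for the real annotations (assumed class counts).
labels = ["NV"] * 700 + ["MEL"] * 200 + ["BCC"] * 100
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Splitting within each class keeps rare classes represented in all three subsets, which matters for a dataset as imbalanced as the one described here.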

Addressing Dataset Imbalance

Given the substantial imbalance in the number of images across various classes, the classification model may exhibit a bias toward the majority class. To combat this issue, data augmentation techniques—such as vertical and horizontal shifts, along with flips and rotations—are employed on the minority classes.
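The augmentation step might look roughly like the following NumPy sketch. Several details are assumptions made for illustration: rotations are restricted to multiples of 90 degrees, shifts wrap around the border, and minority classes are oversampled until they match the largest class. The source names the transformations but not their parameters or the balancing target.

```python
import numpy as np


def augment(image, rng, max_shift=0.1):
    """Randomly flip, rotate (multiples of 90 degrees), and shift an HxWxC image."""
    out = image
    if rng.random() < 0.5:
        out = np.flip(out, axis=1)  # horizontal flip
    if rng.random() < 0.5:
        out = np.flip(out, axis=0)  # vertical flip
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    h, w = out.shape[:2]
    dy = int(rng.integers(-int(max_shift * h), int(max_shift * h) + 1))
    dx = int(rng.integers(-int(max_shift * w), int(max_shift * w) + 1))
    # np.roll wraps pixels around the edge; a real pipeline would more likely pad.
    return np.roll(out, shift=(dy, dx), axis=(0, 1))


def oversample_minorities(images, labels, seed=0):
    """Add augmented copies until every class matches the largest class (assumed target)."""
    rng = np.random.default_rng(seed)
    counts = {c: labels.count(c) for c in sorted(set(labels))}
    target = max(counts.values())
    new_images, new_labels = list(images), list(labels)
    for cls, n in counts.items():
        pool = [img for img, lab in zip(images, labels) if lab == cls]
        for _ in range(target - n):
            source = pool[int(rng.integers(0, len(pool)))]
            new_images.append(augment(source, rng))
            new_labels.append(cls)
    return new_images, new_labels


rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
augmented = augment(image, rng)

# Toy dataset: 6 majority images and 2 minority images (assumed labels).
images = [rng.random((32, 32, 3)) for _ in range(8)]
labels = ["NV"] * 6 + ["MEL"] * 2
balanced_images, balanced_labels = oversample_minorities(images, labels)
```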

Training Methodology

All deep learning models are trained with the Adam optimizer, using an initial learning rate of 0.001, a batch size of 16, a weight decay of 0.0002, and a dropout rate of 0.5. To speed up convergence of the Deep Belief Network (DBN), its learning rate is raised to 0.01. Early stopping halts training when the validation error begins to rise, which limits overfitting.
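The early stopping rule can be illustrated with a small framework-independent sketch. The `EarlyStopping` class, the `patience` of three epochs, and the validation losses below are assumptions for illustration. The source says only that training halts when the validation error begins to rise. The hyper-parameter values in `TRAIN_CONFIG` are the ones quoted above.

```python
# Hyper-parameters quoted in the text; the dictionary itself is illustrative.
TRAIN_CONFIG = {
    "optimizer": "adam",
    "learning_rate": 0.001,
    "batch_size": 16,
    "weight_decay": 0.0002,
    "dropout": 0.5,
}


class EarlyStopping:
    """Stop once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch and return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.56]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        break
print("stopped at epoch", epoch, "best epoch", stopper.best_epoch)
```

In practice the weights from the best epoch would be restored after stopping. A patience of more than one epoch avoids halting on a single noisy validation reading.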

The DBN stacks three Restricted Boltzmann Machines (RBMs), each trained with the Contrastive Divergence (CD) learning algorithm for 300 epochs. The entire network is trained for a total of 500 epochs. The hyper-parameters finalized at this stage are the momentum, weight decay, and dropout rate.
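Greedy layer-wise training of stacked RBMs with one-step Contrastive Divergence (CD-1) can be sketched as follows. The layer sizes, the toy binary data, full-batch updates, and the short epoch count are assumptions chosen to keep the example small. The source specifies three RBMs, 300 epochs each, and a DBN learning rate of 0.01, but not the layer widths or the number of CD steps.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    def __init__(self, n_visible, n_hidden, rng):
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)
        self.b_h = np.zeros(n_hidden)
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def cd1_update(self, v0, lr=0.01):
        """One CD-1 step on a batch; returns the reconstruction error."""
        h0 = self.hidden_probs(v0)                       # positive phase
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        v1 = sigmoid(h0_sample @ self.W.T + self.b_v)    # reconstruction
        h1 = self.hidden_probs(v1)                       # negative phase
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_v += lr * (v0 - v1).mean(axis=0)
        self.b_h += lr * (h0 - h1).mean(axis=0)
        return float(np.mean((v0 - v1) ** 2))


def pretrain_stack(data, layer_sizes, epochs, rng):
    """Train RBMs one at a time; each layer's output feeds the next."""
    rbms, layer_input = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(layer_input.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_update(layer_input)
        rbms.append(rbm)
        layer_input = rbm.hidden_probs(layer_input)
    return rbms


rng = np.random.default_rng(0)
features = (rng.random((64, 20)) > 0.5).astype(float)  # toy binary inputs
rbms = pretrain_stack(features, layer_sizes=[16, 12, 8], epochs=5, rng=rng)
```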

Evaluation Metrics

Skin Lesion Segmentation

The primary aim of the image segmentation experiment within the Skin-DeepNet system is to accurately localize skin lesions in dermoscopic images. This goal necessitates an automated segmentation process to ensure precision in lesion localization, crucial for subsequent diagnostic processes.

Several metrics are implemented to evaluate the efficacy of the segmentation algorithm:

- Intersection over Union (IoU)
- Dice Coefficient (Dic)
- Jaccard Index (Jac)
- Accuracy Rate (AR)

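The Dice Coefficient and Jaccard Index both measure the overlap between the segmented lesion and the corresponding ground truth annotation. Dice is twice the size of the overlap divided by the sum of the two region sizes. The Jaccard Index is the overlap divided by the union, which under the standard definition is the same quantity as IoU. The two always rank results in the same order, but Dice gives more weight to the overlap and therefore reports the higher value for any imperfect match.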
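These overlap measures are straightforward to compute on binary masks. The sketch below uses the standard definitions. Treating the Accuracy Rate as plain pixel accuracy is an assumption, since the source does not define it, and the function name `segmentation_scores` is illustrative.

```python
import numpy as np


def segmentation_scores(pred, truth):
    """Compute IoU (Jaccard), Dice, and pixel accuracy for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    total = pred.sum() + truth.sum()
    return {
        # Two empty masks are treated as a perfect match.
        "iou": intersection / union if union else 1.0,
        "dice": 2 * intersection / total if total else 1.0,
        "accuracy": float((pred == truth).mean()),
    }


# Ground truth: a 2x2 lesion. Prediction: the same lesion plus one extra column.
truth = np.zeros((4, 4), dtype=int)
truth[1:3, 1:3] = 1
pred = np.zeros((4, 4), dtype=int)
pred[1:3, 1:4] = 1

scores = segmentation_scores(pred, truth)
print(scores)
```

Here the overlap is 4 pixels and the union is 6, so IoU is 4/6 while Dice is 8/10, which shows how Dice reports the higher of the two values for the same prediction.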

Lesions are first localized with the Mask R-CNN architecture, which produces promising results but occasionally includes background regions in its output masks. To mitigate this, the GrabCut algorithm refines the Mask R-CNN outputs, followed by morphological reconstruction to clean up the final mask.

Comparative Analysis

To validate the system’s effectiveness, segmentation performance is compared across several network backbones (ResNet34, ResNet50, DenseNet121, InceptionV3, and VGG19), each with and without the GrabCut algorithm. The experimental results show a clear improvement in segmentation accuracy when GrabCut is integrated.

Overall, the findings confirm GrabCut’s effectiveness: segmentation accuracy improves markedly on both the ISIC 2019 and HAM10000 datasets.

Skin Lesion Classification

For classification, Skin-DeepNet uses transfer learning: several pre-trained neural networks are fine-tuned so that their learned feature extractors can be reused for skin lesion images.

The backbones used to classify the dermoscopic image classes include HRNet, EfficientNetB0, and ResNet101, among others.

Results on Performance Metrics

The performance of these models is quantified across metrics such as accuracy, precision, recall, F1 score, and area under the curve (AUC).
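For reference, the threshold-based metrics can be computed from true and predicted labels as in the sketch below. Macro averaging across classes is an assumption, since the source does not say how per-class scores are aggregated, and AUC is left out because it needs predicted probabilities rather than hard labels. The label lists are made up.

```python
def classification_report(y_true, y_pred):
    """Return accuracy, macro (precision, recall, F1), and per-class scores."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class[c] = (precision, recall, f1)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    macro = tuple(
        sum(scores[i] for scores in per_class.values()) / len(classes)
        for i in range(3)
    )
    return accuracy, macro, per_class


# Made-up labels; the rare class SCC is never predicted correctly.
y_true = ["NV", "NV", "NV", "MEL", "MEL", "SCC"]
y_pred = ["NV", "NV", "MEL", "MEL", "MEL", "NV"]
accuracy, macro, per_class = classification_report(y_true, y_pred)
print(accuracy, macro)
```

The toy example also shows why accuracy alone can mislead on imbalanced data: overall accuracy is 4/6 even though recall for the rare class is zero.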

For instance, HRNet achieved an accuracy of 97.54% on the ISIC 2019 dataset, outperforming the other backbone models, and 98.34% on the HAM10000 dataset. Despite this overall success, further investigation shows that class imbalance still affects performance, particularly for rare categories such as Squamous Cell Carcinoma.

Advanced Fusion Approaches

To improve performance further, several fusion (ensemble) methods for combining the predictions of the different classifiers are compared. XGBoost outperforms the other fusion methods on both datasets.
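The source does not list the fusion methods that were compared, so the sketch below shows two common baselines, soft voting and majority voting, purely as illustrations. It also builds the stacked feature matrix that a learned meta-classifier would take as input. Using XGBoost in that role is an assumption about how it consumes the base predictions, and no XGBoost call is shown. All probabilities are made up.

```python
import numpy as np


def soft_vote(prob_list):
    """Average the class probabilities of all models, then take the argmax."""
    return np.mean(prob_list, axis=0).argmax(axis=1)


def majority_vote(prob_list):
    """Each model votes for its top class; the most frequent class wins."""
    votes = np.stack([p.argmax(axis=1) for p in prob_list], axis=1)
    n_classes = prob_list[0].shape[1]
    counts = np.apply_along_axis(np.bincount, 1, votes, minlength=n_classes)
    return counts.argmax(axis=1)


def stacked_features(prob_list):
    """Concatenate per-model probabilities as input for a meta-classifier."""
    return np.concatenate(prob_list, axis=1)


# Made-up outputs of three base classifiers for 2 samples and 3 classes.
model_a = np.array([[0.6, 0.3, 0.1], [0.1, 0.2, 0.7]])
model_b = np.array([[0.2, 0.5, 0.3], [0.2, 0.3, 0.5]])
model_c = np.array([[0.5, 0.4, 0.1], [0.4, 0.5, 0.1]])
probs = [model_a, model_b, model_c]

soft = soft_vote(probs)
hard = majority_vote(probs)
meta_input = stacked_features(probs)
```

A learned fusion model differs from the voting baselines in that it can weight each base classifier per class, at the cost of needing held-out predictions to train on.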

Class-Wise Performance Analysis

To expose disparities in performance across classes, a thorough class-wise evaluation is conducted. Recall varies by class: it is good for the more prevalent classes, while rarer lesion types that present similarly can be confused with one another.

Visualizations through normalized confusion matrices provide further insights into classification accuracy and remaining biases, directing attention to areas needing improvement, particularly in datasets where class imbalance is prevalent.
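A row-normalized confusion matrix of the kind described here can be computed as below. Each row is divided by the number of true samples in that class, so the diagonal holds the per-class recall. The integer class labels and the sample predictions are made up for illustration.

```python
import numpy as np


def normalized_confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predictions; each row sums to 1."""
    cm = np.zeros((n_classes, n_classes), dtype=float)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    row_sums = cm.sum(axis=1, keepdims=True)
    # Classes with no true samples keep an all-zero row.
    return np.divide(cm, row_sums, out=np.zeros_like(cm), where=row_sums > 0)


# Made-up labels for three classes; class 0 is the most frequent.
y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 2, 2, 1]

cm_norm = normalized_confusion_matrix(y_true, y_pred, n_classes=3)
recall_per_class = np.diag(cm_norm)
print(cm_norm)
```

Normalizing by row keeps the majority class from dominating the picture, which is why this view is useful when class imbalance is a concern.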

Comparative Performance with State-of-the-Art Systems

Finally, a comparison with other state-of-the-art systems shows that Skin-DeepNet surpasses several contemporary methods in classification accuracy and reliability on the ISIC 2019 and HAM10000 datasets. With a reported accuracy of 99.65% on ISIC 2019 and perfect scores on the reported metrics for HAM10000, Skin-DeepNet is a strong candidate for computer-aided diagnosis in dermatology.

Conclusion

This article highlights the comprehensive performance evaluation of the Skin-DeepNet system, illustrating its innovative methodologies and promising results in the realm of skin cancer diagnosis. Through meticulous experimental arrangements and cutting-edge algorithms, Skin-DeepNet stands poised to contribute significantly to the evolving landscape of medical imaging and diagnostics.