Automated Deep Learning Report Generator for Retinal OCT Images

Core Concept and Its Importance

Automated report generation for retinal Optical Coherence Tomography (OCT) images uses deep learning to draft diagnostic reports directly from the scans. This matters because timely, accurate detection of ocular disease is critical for patient outcomes. Manual reporting is time-consuming; automation can reduce ophthalmologists' workload and the risk of human error, which in turn supports better patient care.

Key Components of the System

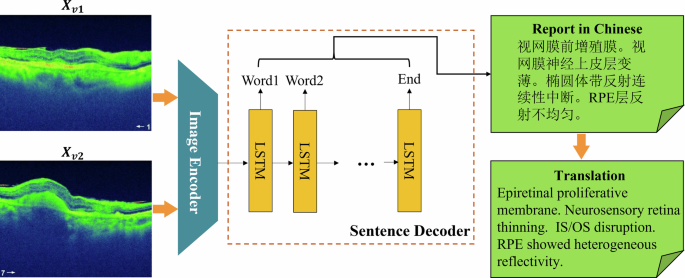

The architecture of an automated deep learning report generator has four main components:

- Image Encoder: Extracts relevant features from the OCT images.

- Feature Fusion Module: Combines features from multiple image perspectives, enhancing detail and context.

- Decoder: Utilizes the enhanced features to generate a coherent diagnostic report.

- Dataset: Consists of paired OCT images and corresponding expert-authored reports.

By understanding each of these components, we can appreciate the intricacies of generating high-quality medical documentation.
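As a rough illustration of the Dataset component, the sketch below pairs two image views with a report and builds a character-level vocabulary from the report text. Everything in it is an assumption made for illustration: the `PairedSample` class, its field names, the file names, the placeholder English reports, and the special tokens are hypothetical, and the source does not describe how the data is stored or tokenized.

```python
from dataclasses import dataclass
from typing import Tuple

# Special tokens (illustrative; not taken from the source).
PAD, BOS, EOS, UNK = "<pad>", "<bos>", "<eos>", "<unk>"


@dataclass(frozen=True)
class PairedSample:
    """One training example: a pair of OCT views plus the expert report."""
    image_paths: Tuple[str, str]  # hypothetical file paths for the two views
    report: str                   # expert-authored report text


def build_vocab(samples):
    """Map every character seen in the reports to an integer id."""
    vocab = {tok: i for i, tok in enumerate((PAD, BOS, EOS, UNK))}
    for sample in samples:
        for ch in sample.report:
            vocab.setdefault(ch, len(vocab))
    return vocab


def encode(report, vocab):
    """Turn a report into token ids, wrapped in BOS/EOS markers."""
    ids = [vocab.get(ch, vocab[UNK]) for ch in report]
    return [vocab[BOS], *ids, vocab[EOS]]


# Made-up placeholder data, only to exercise the functions.
samples = [
    PairedSample(("case01_h.png", "case01_v.png"), "macular edema"),
    PairedSample(("case02_h.png", "case02_v.png"), "normal macula"),
]
vocab = build_vocab(samples)
token_ids = encode(samples[0].report, vocab)
```

Character-level tokens are used here because they need no word segmentation, which makes them a common starting point for Chinese text; a word-level or subword tokenizer could be swapped in without changing the rest of the structure.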

Step-by-Step Process

Automated report generation can be broken down into four steps:

- Image Acquisition: Collect high-quality paired OCT images, ensuring they meet predefined criteria.

- Feature Extraction: Use an encoder network, such as DenseNet121, to derive features from the images.

- Feature Fusion: Implement a multi-scale feature fusion mechanism to enhance semantic understanding and coordinate attention across various layers of the neural network.

- Report Generation: Use a decoder based on Long Short-Term Memory (LSTM) networks to formulate a textual report that describes the observed abnormalities.

Each step feeds the next, so problems introduced early (for example, poor-quality scans) carry through to the final report.
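The feature extraction, fusion, and report generation steps can be sketched in PyTorch as follows. This is a minimal sketch under stated assumptions, not the published architecture: `TinyEncoder` is a small hypothetical stand-in for a backbone such as DenseNet121, the fusion step is plain concatenation followed by a linear layer rather than a multi-scale mechanism, and all class names, layer sizes, and input shapes are invented for illustration.

```python
import torch
import torch.nn as nn


class TinyEncoder(nn.Module):
    """Small stand-in for a CNN backbone such as DenseNet121."""

    def __init__(self, feat_dim=64):
        super().__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.Conv2d(16, feat_dim, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # global pooling -> (B, feat_dim, 1, 1)
        )

    def forward(self, x):
        return self.conv(x).flatten(1)  # (B, feat_dim)


class ReportGenerator(nn.Module):
    """Encoder -> fusion -> LSTM decoder (hypothetical simplified layout)."""

    def __init__(self, vocab_size, feat_dim=64, hidden_dim=128):
        super().__init__()
        self.encoder = TinyEncoder(feat_dim)             # shared by both views
        self.fuse = nn.Linear(2 * feat_dim, hidden_dim)  # simple concat fusion
        self.embed = nn.Embedding(vocab_size, hidden_dim)
        self.lstm = nn.LSTM(hidden_dim, hidden_dim, batch_first=True)
        self.out = nn.Linear(hidden_dim, vocab_size)

    def forward(self, view_a, view_b, tokens):
        # Feature extraction: encode each view of the image pair.
        feats = torch.cat([self.encoder(view_a), self.encoder(view_b)], dim=1)
        # Feature fusion: project the concatenated features to the LSTM size
        # and use the result as the decoder's initial hidden state.
        h0 = torch.tanh(self.fuse(feats)).unsqueeze(0)  # (1, B, hidden_dim)
        c0 = torch.zeros_like(h0)
        # Report generation with teacher forcing: score every next token.
        hidden, _ = self.lstm(self.embed(tokens), (h0, c0))
        return self.out(hidden)  # (B, T, vocab_size)


model = ReportGenerator(vocab_size=50)
view_a = torch.randn(2, 1, 64, 64)     # batch of 2 single-channel scans
view_b = torch.randn(2, 1, 64, 64)     # second view of the same eyes
tokens = torch.randint(0, 50, (2, 7))  # 7 report tokens per sample
logits = model(view_a, view_b, tokens)
```

Here `logits` holds one score per vocabulary entry for each of the 7 token positions. Initializing the LSTM state from the fused features is only one way to condition a decoder on images; attending over spatial feature maps at every step is a common alternative.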

Practical Example: The MORG Model

Using the MORG framework, researchers at the Joint Shantou International Eye Center developed a model that applies these principles. The model takes OCT image pairs from patients, extracts and fuses their features, and generates diagnostic reports in Chinese. To reflect both local abnormalities and global context, it adopts a multi-view feature learning strategy.

Common Pitfalls and How to Avoid Them

A few challenges frequently arise in the process:

- Overfitting: This occurs when the model learns noise in the training data rather than the underlying patterns. To mitigate this, use techniques like dropout and data augmentation.

- Data Quality: Poor image quality hampers feature extraction. To prevent this, establish rigorous data collection standards.

- Lack of Interpretability: Complex models may generate results that are difficult for clinicians to interpret. Creating auxiliary visualizations or utilizing attention maps can provide insights into model predictions.

By addressing these issues proactively, developers can enhance the reliability of their models.
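The data-quality point can be made concrete with a simple acceptance filter applied before training. This is only a sketch: the `ScanInfo` fields, the 0 to 10 signal-strength scale, and both thresholds are hypothetical placeholders, and real criteria would come from the acquisition device and clinical protocol.

```python
from dataclasses import dataclass


@dataclass
class ScanInfo:
    """Metadata for one OCT scan (all fields are hypothetical)."""
    scan_id: str
    signal_strength: float  # device-reported quality score, assumed 0-10
    width: int              # image width in pixels
    height: int             # image height in pixels


def passes_quality_gate(scan, min_signal=6.0, min_side=256):
    """Return True when a scan meets the (illustrative) acceptance criteria."""
    strong_enough = scan.signal_strength >= min_signal
    large_enough = min(scan.width, scan.height) >= min_side
    return strong_enough and large_enough


scans = [
    ScanInfo("s1", 8.5, 512, 496),
    ScanInfo("s2", 4.0, 512, 496),  # rejected: weak signal
    ScanInfo("s3", 9.0, 200, 200),  # rejected: too small
]
accepted = [s.scan_id for s in scans if passes_quality_gate(s)]
```

Keeping the criteria in one function makes it easy to log which scans were rejected and why, and to tighten the thresholds later without touching the training code.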

Tools and Frameworks in Use

Commonly used tools include:

- PyTorch: A deep learning framework often chosen for its dynamic computation graph, which simplifies debugging and experimentation.

- DenseNet121: A convolutional architecture whose dense connections between layers encourage feature reuse and mitigate vanishing gradients.

Each tool has limitations; for instance, training large models in PyTorch can require substantial GPU memory and compute.

Variations and Their Trade-offs

Variations in architecture, such as different neural network designs for the encoder or decoder, can impact performance:

- Convolutional Neural Networks (CNNs) vs. Transformers: CNNs are generally efficient for image processing and are often regarded as easier to interpret, while Transformers may capture broader context when enough training data is available. The choice between these architectures depends on available resources and specific project goals.

FAQ

What types of images are used in the reports?

The system uses retinal OCT images, specifically different cross-sectional views of the retina.

How does the model ensure accuracy in its reports?

By training on a comprehensive dataset combining OCT images with expert reports, the model learns to correlate specific image features with clinical terminology.
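The source does not state the training objective. A common choice for LSTM-based report decoders, assumed here purely for illustration, is teacher-forced cross-entropy. With $v$ denoting the fused image features, $y_1, \dots, y_T$ the tokens of the expert report, and $\theta$ the model parameters (all symbols introduced here, not taken from the source), training minimizes:

```latex
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(y_t \mid y_1, \dots, y_{t-1}, v\right)
```

Minimizing this loss pushes the model to assign high probability to each word of the expert report given the image features and the preceding words, which is how image features come to be associated with clinical terminology.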

What challenges exist with data collection?

Data quality can be an issue; stringent criteria must be set to exclude poor-quality scans.

Is the model applicable in different languages?

While the current implementation generates reports in Chinese, adaptations can be made for other languages with appropriate training data.