Overview of Inverse Rendering in 3D Multi-Object Scene Tracking

Recent work combines inverse rendering with generative object priors to infer and track 3D multi-object scenes. Accurate scene understanding is essential in safety-critical applications such as autonomous driving. We frame object tracking as a test-time inverse rendering problem: images are synthesized from the current scene estimate and compared with the observations. We then search for latent representations of all scene objects that explain the observed images over time, optimizing each object's pose, geometry, and appearance.

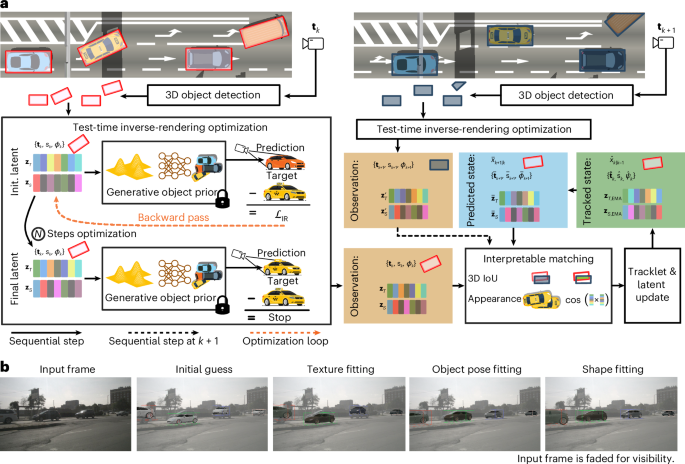

Structuring the Scene as a Scene Graph

At the core of this method is a scene graph that represents the complex multi-object scene. Its leaf nodes are individually generated 3D object models. The transformations along its edges map each object into world and camera coordinates, so gradients can be computed efficiently in both the object and the camera coordinate systems. Because the forward-rendering pipeline is differentiable, gradients of the image loss flow back to every object's parameters. This keeps the optimization efficient and its results interpretable.
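The scene graph can be sketched as a root that holds the world-to-camera transform, with one object-to-world edge per leaf. This minimal Python sketch assumes 4x4 homogeneous matrices, yaw-only rotation, and uniform scale. `ObjectNode`, `SceneGraph`, `affine`, and the latent-code fields are hypothetical names for illustration, not the method's code.

```python
import math
from dataclasses import dataclass, field

Matrix = list[list[float]]
Point = tuple[float, float, float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def affine(yaw: float, tx: float, ty: float, tz: float, scale: float = 1.0) -> Matrix:
    """Uniform scale, then rotation about the vertical y axis, then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [scale * c, 0.0, scale * s, tx],
        [0.0, scale, 0.0, ty],
        [-scale * s, 0.0, scale * c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(m: Matrix, p: Point) -> Point:
    """Apply a 4x4 transform to a 3D point in homogeneous coordinates."""
    v = (p[0], p[1], p[2], 1.0)
    x, y, z = (sum(m[i][k] * v[k] for k in range(4)) for i in range(3))
    return (x, y, z)


@dataclass
class ObjectNode:
    """Leaf node: one object instance with its pose and (hypothetical) latent codes."""
    name: str
    object_to_world: Matrix
    shape_code: list[float] = field(default_factory=list)
    texture_code: list[float] = field(default_factory=list)


@dataclass
class SceneGraph:
    """Root holds the camera; each leaf is reached through one object-to-world edge."""
    world_to_camera: Matrix
    objects: list[ObjectNode] = field(default_factory=list)

    def object_to_camera(self, node: ObjectNode) -> Matrix:
        # Composing the edges on the path from a leaf to the camera gives the
        # transform a renderer would apply to that object's geometry.
        return matmul(self.world_to_camera, node.object_to_world)


car = ObjectNode("car_0", affine(0.0, 2.0, 0.0, 10.0))
graph = SceneGraph(world_to_camera=affine(0.0, 0.0, 0.0, 1.0), objects=[car])
print(transform_point(graph.object_to_camera(car), (1.0, 0.0, 0.0)))  # (3.0, 0.0, 11.0)
```

Keeping poses on the edges and latent codes on the leaves means a change to one object's pose touches only that object's path to the camera.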

Tracking Objects Through Inverse Rendering

The tracking framework inverts the rendering of multi-object scenes. Individual object instances are rendered and compared against the observed images, and the algorithm minimizes the visual distance between the rendered 3D representations and those observations. The end-to-end tracking algorithm repeats this procedure at every observation so that each object is tracked accurately throughout the sequence.
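One way to write the per-frame objective, in notation introduced here only for illustration (a generic form, not the method's exact loss), is shown below. $I_t$ is the observed image at frame $t$ and $C_t$ the camera. $T_i$ is the affine pose of object $i$, and $\mathbf{z}^{S}_i$ and $\mathbf{z}^{T}_i$ are its shape and texture codes. $\mathcal{R}$ is the differentiable renderer, and $\mathcal{L}$ is an image distance, for example a per-pixel $L_1$ or $L_2$ difference (an assumption).

$$
\{\hat{T}_i, \hat{\mathbf{z}}^{S}_i, \hat{\mathbf{z}}^{T}_i\}_{i=1}^{N}
= \arg\min_{\{T_i, \mathbf{z}^{S}_i, \mathbf{z}^{T}_i\}_{i=1}^{N}}
\mathcal{L}\Big(I_t,\; \mathcal{R}\big(\{T_i, \mathbf{z}^{S}_i, \mathbf{z}^{T}_i\}_{i=1}^{N};\, C_t\big)\Big)
$$

Tracking over time amounts to solving this problem at every frame $t$ while keeping each object's identity consistent across frames.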

Object Generation through Generative Models

To represent a diverse set of object instances, we use an object-centric scene representation. Each object instance is treated as a sample from a learned distribution over all instances of its class. This generative model can produce a wide variety of instances, which lets the system represent complex scenes.

The representation uses two disentangled latent spaces, one for shape and one for texture, so that an object's geometry and appearance are encoded separately. This separation preserves detail in each object instance, which leads to more accurate renderings and, in turn, more accurate tracking.
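A minimal sketch of the two disentangled codes follows. It assumes that both codes have independent standard-normal priors, which is common for generative priors but is not stated here. `ObjectLatent`, the code dimensions, and the helper functions are hypothetical. The generator that decodes the codes into a textured 3D object is omitted.

```python
import random
from dataclasses import dataclass


@dataclass
class ObjectLatent:
    """One object instance as two separate codes (dimensions are hypothetical)."""
    shape: list[float]    # geometry code, z_S
    texture: list[float]  # appearance code, z_T


def sample_latent(shape_dim: int, texture_dim: int, rng: random.Random) -> ObjectLatent:
    # Assumption: independent standard-normal priors, so each code is drawn on its own.
    return ObjectLatent(
        shape=[rng.gauss(0.0, 1.0) for _ in range(shape_dim)],
        texture=[rng.gauss(0.0, 1.0) for _ in range(texture_dim)],
    )


def with_texture_of(target: ObjectLatent, donor: ObjectLatent) -> ObjectLatent:
    """Keep target's geometry and borrow donor's appearance."""
    return ObjectLatent(shape=list(target.shape), texture=list(donor.texture))


def prior_penalty(z: ObjectLatent) -> float:
    """Negative log of the standard-normal prior, up to an additive constant."""
    return 0.5 * (sum(v * v for v in z.shape) + sum(v * v for v in z.texture))


rng = random.Random(0)
sedan, truck = sample_latent(4, 3, rng), sample_latent(4, 3, rng)
repainted = with_texture_of(sedan, truck)  # sedan geometry, truck appearance
```

Because the codes are separate, appearance can change while geometry stays fixed. During inverse rendering, a term like `prior_penalty` is one possible regularizer for keeping optimized codes where the prior places most of its mass.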

Multi-Object Scene Rendering Techniques

Our method composes a multi-object scene with a differentiable scene graph built from affine transformations and object instances. The graph encodes the spatial relationships between objects and handles occlusions efficiently. Combined with the camera transformation and a differentiable rasterization-based rendering pipeline, it renders the full multi-object scene, which is what allows objects to be tracked robustly over time.
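Occlusion between objects can be illustrated with a plain per-pixel depth test over per-object renders. This is a simplified stand-in for the method's rasterizer. A hard z-test like this one is not differentiable with respect to which object wins a pixel, and differentiable rasterizers treat such boundaries differently. The function name and the grayscale, `math.inf`-for-empty conventions are assumptions for illustration.

```python
import math

Image = list[list[float]]


def composite(
    depths: list[Image], colors: list[Image], background: float = 0.0
) -> tuple[Image, list[list[int]]]:
    """Hard z-buffer over per-object renders.

    depths[i][y][x] is object i's depth at pixel (y, x), or math.inf where the
    object does not cover the pixel. Returns the composited grayscale image and,
    per pixel, the index of the visible object (-1 for background).
    """
    h, w = len(depths[0]), len(depths[0][0])
    image = [[background] * w for _ in range(h)]
    owner = [[-1] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nearest = math.inf
            for i, depth in enumerate(depths):
                # The closer surface occludes everything behind it.
                if depth[y][x] < nearest:
                    nearest = depth[y][x]
                    image[y][x] = colors[i][y][x]
                    owner[y][x] = i
    return image, owner


inf = math.inf
image, owner = composite(
    depths=[[[2.0, 2.0, inf]], [[inf, 1.0, 1.0]]],  # object 1 is in front where they overlap
    colors=[[[0.2, 0.2, 0.0]], [[0.9, 0.9, 0.9]]],
)
```

The `owner` map shows which object explains each pixel, which is the information needed to route an image loss back to the correct object.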

Inverse Rendering for Scene Understanding

We invert this differentiable rendering model through gradient-based optimization, aiming to reconstruct the set of object representations present in a given image. Gradient-based optimization only converges to a nearby minimum, so the object poses and embeddings must be initialized close to what is visible in the image. Complex scenes with many object instances make this harder, so we use additional optimization strategies to recover meaningful object representations.
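The idea of inverting a renderer by gradient descent can be shown on a deliberately tiny toy problem. The "renderer" here projects four keypoints of a known object through a pinhole camera, and the loss is the squared pixel distance to the observed keypoints rather than a photometric image loss. Gradients come from central finite differences instead of autodiff through a rasterizer, and only the object's translation is optimized. The focal length, image center, learning rate, and step count are hypothetical values. Starting from a nearby guess mirrors the need for a good initialization.

```python
Point3 = tuple[float, float, float]
Pixel = tuple[float, float]


def project(p: Point3, f: float = 100.0, cx: float = 50.0, cy: float = 50.0) -> Pixel:
    """Pinhole projection of a camera-space point (intrinsics are hypothetical)."""
    x, y, z = p
    return (f * x / z + cx, f * y / z + cy)


def render(points: list[Point3], t: list[float]) -> list[Pixel]:
    """Toy renderer: translate the object's keypoints by t and project them."""
    return [project((x + t[0], y + t[1], z + t[2])) for x, y, z in points]


def loss(points: list[Point3], t: list[float], observed: list[Pixel]) -> float:
    """Sum of squared pixel distances between rendered and observed keypoints."""
    return sum((u - uo) ** 2 + (v - vo) ** 2
               for (u, v), (uo, vo) in zip(render(points, t), observed))


def numerical_grad(points, t, observed, eps: float = 1e-5) -> list[float]:
    """Central finite differences, standing in for autodiff through a renderer."""
    g = []
    for k in range(3):
        tp, tm = list(t), list(t)
        tp[k] += eps
        tm[k] -= eps
        g.append((loss(points, tp, observed) - loss(points, tm, observed)) / (2 * eps))
    return g


def fit_translation(points, observed, t0, lr: float = 1e-3, steps: int = 3000) -> list[float]:
    """Plain gradient descent on the translation, starting from the guess t0."""
    t = list(t0)
    for _ in range(steps):
        g = numerical_grad(points, t, observed)
        t = [ti - lr * gi for ti, gi in zip(t, g)]
    return t


corners = [(1.0, 1.0, 0.0), (1.0, -1.0, 0.0), (-1.0, 1.0, 0.0), (-1.0, -1.0, 0.0)]
t_true = [0.5, -0.2, 10.0]
observed = render(corners, t_true)
t_hat = fit_translation(corners, observed, t0=[0.0, 0.0, 9.0])  # initial guess near the object
```

Depth converges far more slowly than the lateral offsets because the loss is much less sensitive to it. This is one reason real pipelines tune optimization schedules per parameter.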

3D Multi-Object Tracking Through Time

Our tracking method applies inverse rendering to follow objects across video frames. Each observation is initialized from 3D detections, and the optimization then refines each object's location, scale, and orientation. The approach does not depend on a particular dynamics model. We adopt a linear state-transition model that predicts object states efficiently, and a Kalman filter combines these predictions with the refined observations, pairing a classical tracking estimator with learned, rendering-based refinement.
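A linear state transition with a Kalman filter can be sketched for a single coordinate with a constant-velocity model. This is a simplification: the tracker's actual state covers 3D location, orientation, and scale. The noise settings `q` and `r`, the class name, and the white-noise-acceleration process model are assumptions for illustration, not values from the method.

```python
from dataclasses import dataclass, field


@dataclass
class ConstantVelocityKF:
    """Kalman filter for one coordinate with state [position, velocity].

    q and r are hypothetical noise settings.
    """
    x: list[float] = field(default_factory=lambda: [0.0, 0.0])  # state mean
    P: list[list[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])  # covariance
    q: float = 0.01  # white-noise acceleration intensity
    r: float = 0.25  # measurement noise variance

    def predict(self, dt: float) -> None:
        # Linear state transition F = [[1, dt], [0, 1]]: x <- F x.
        p, v = self.x
        self.x = [p + dt * v, v]
        # Covariance: P <- F P F^T + Q, with Q for white-noise acceleration.
        (a, b), (c, d) = self.P
        fp = [[a + dt * c, b + dt * d], [c, d]]  # F P
        q = self.q
        self.P = [
            [fp[0][0] + dt * fp[0][1] + q * dt**3 / 3, fp[0][1] + q * dt**2 / 2],
            [fp[1][0] + dt * fp[1][1] + q * dt**2 / 2, fp[1][1] + q * dt],
        ]

    def update(self, z: float) -> None:
        # Measurement model H = [1, 0]: only the position is observed.
        s = self.P[0][0] + self.r                  # innovation variance
        k = [self.P[0][0] / s, self.P[1][0] / s]   # Kalman gain
        innovation = z - self.x[0]
        self.x = [self.x[0] + k[0] * innovation, self.x[1] + k[1] * innovation]
        # Covariance: P <- (I - K H) P.
        p0 = self.P[0]
        self.P = [[self.P[i][j] - k[i] * p0[j] for j in range(2)] for i in range(2)]


kf = ConstantVelocityKF()
for frame in range(1, 31):
    kf.predict(dt=1.0)          # propagate the state to the next frame
    kf.update(float(frame))     # e.g. a refined position from inverse rendering
```

In a full tracker, the prediction step would also supply the initial pose for the next frame's inverse-rendering optimization.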

Implementation Details

The implementation details specify how the loss terms are composed, the optimization schedule, and the heuristics that guide the matching stage of multi-object tracking, as well as the specifics of the generative object model.
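The matching heuristics are not spelled out here. A common choice, shown below as an assumption rather than the method's actual rule, is greedy association: accept track/detection pairs in order of increasing 3D center distance and reject any pair beyond a gate. The function name and `max_dist` are hypothetical.

```python
import math

Position = tuple[float, float, float]


def greedy_match(
    tracks: list[Position], detections: list[Position], max_dist: float
) -> tuple[list[tuple[int, int]], list[int], list[int]]:
    """Greedy nearest-pair association with a distance gate.

    Returns (matches, unmatched track indices, unmatched detection indices).
    """
    # Collect every track/detection pair that passes the gate.
    candidates = []
    for ti, t in enumerate(tracks):
        for di, d in enumerate(detections):
            dist = math.dist(t, d)
            if dist <= max_dist:
                candidates.append((dist, ti, di))
    # Accept pairs from closest to farthest, using each track and detection at most once.
    candidates.sort()
    used_tracks, used_dets, matches = set(), set(), []
    for _, ti, di in candidates:
        if ti in used_tracks or di in used_dets:
            continue
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    unmatched_tracks = [i for i in range(len(tracks)) if i not in used_tracks]
    unmatched_dets = [j for j in range(len(detections)) if j not in used_dets]
    return sorted(matches), unmatched_tracks, unmatched_dets


predicted = [(0.0, 0.0, 10.0), (5.0, 0.0, 20.0)]                  # Kalman predictions
detected = [(0.3, 0.0, 10.2), (20.0, 0.0, 40.0), (5.5, 0.0, 19.5)]
matches, lost, new = greedy_match(predicted, detected, max_dist=2.0)
```

In a typical tracker, unmatched detections would start new tracks and unmatched tracks would be kept alive for a few frames before being dropped.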

Reporting and Further Research

This approach is a step toward scene understanding through inverse rendering. Further details and data are provided in the supplementary material, which covers the methodology and its implications for automated perception in 3D environments.

More broadly, the work contributes to building robust systems that understand complex visual environments, which matters in fields that demand careful scene comprehension, such as autonomous driving.