Model Structure of a Biologically Oriented Motion Processing System

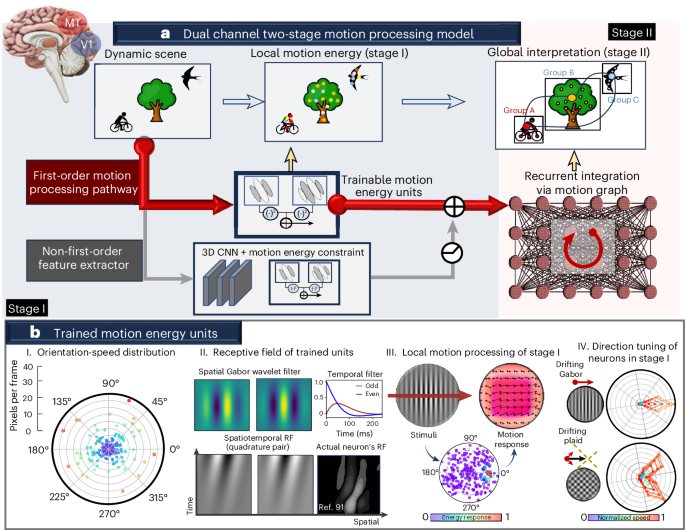

A novel two-stage model of biological motion processing is introduced here. Its first stage contains two parallel channels, reflecting the complexity of human visual perception. The model provides a foundation for understanding neural responses to motion, in particular by simulating the function of the human primary visual cortex (V1). The two stages, Stage I and Stage II, each play a distinct role in processing motion.

Stage I: Channeling First-Order and Higher-Order Features

First-Order Channel: Spatiotemporal Dynamics

The first channel in Stage I computes motion energy directly from luminance. Its input is a sequence of grayscale images, $S(p, t)$, giving the intensity at spatial position $p$ in time frame $t$. The goal is to reproduce the behavior of direction-selective neurons in the visual cortex.

To model these neurons, the channel uses 3D Gabor filters. For computational efficiency, each 3D filter is decomposed into a 2D spatial component and a 1D temporal component. The filter parameters are trainable: spatial and temporal frequency ($f_s$, $f_t$), orientation ($\theta$), and envelope shape ($\sigma$, $\gamma$). Learning these parameters lets the model adjust its spatiotemporal tuning.
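The separable decomposition can be illustrated with a minimal pure-Python sketch. It makes several assumptions. $\sigma$ is read as the envelope width and $\gamma$ as the spatial aspect ratio, following the common Gabor parameterization. A temporal envelope width `sigma_t` is added; it is hypothetical and not listed in the text. All function names are also hypothetical.

Multiplying a complex spatial Gabor by a complex temporal Gabor produces a filter whose carrier is tilted in space-time, which makes it direction-selective. Its squared magnitude is a phase-invariant motion energy, in the spirit of the Adelson and Bergen motion energy model. This is a standard construction, not necessarily the model's exact formulation. For simplicity, the filter is evaluated only at the centre of one patch, whereas the model would apply it at every position $p$.

```python
import cmath
import math


def spatial_gabor(size, f_s, theta, sigma, gamma):
    """Complex 2D Gabor: Gaussian envelope times a carrier at f_s cycles/pixel along theta."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the carrier varies along orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * cmath.exp(2j * math.pi * f_s * xr))
        kernel.append(row)
    return kernel


def temporal_gabor(length, f_t, sigma_t):
    """Complex 1D Gabor over frames; the minus sign makes the pair prefer motion along +theta."""
    half = length // 2
    return [math.exp(-t ** 2 / (2 * sigma_t ** 2)) * cmath.exp(-2j * math.pi * f_t * t)
            for t in range(-half, half + 1)]


def motion_energy(frames, g_s, g_t):
    """Energy of the separable filter g_s(x, y) * g_t(t) at the patch centre."""
    # Step 1: one 2D spatial dot product per frame.
    spatial = [sum(g_s[y][x] * frame[y][x]
                   for y in range(len(g_s)) for x in range(len(g_s[0])))
               for frame in frames]
    # Step 2: a 1D temporal dot product over the per-frame spatial responses.
    response = sum(w * r for w, r in zip(g_t, spatial))
    # |response|^2 = real^2 + imag^2 of the quadrature pair, so it ignores stimulus phase.
    return abs(response) ** 2


def drifting_grating(size, length, f_s, f_t, theta, direction):
    """Frames [t][y][x] of cos(2*pi*(f_s*x' - direction*f_t*t)); direction=+1 drifts along +theta."""
    half, half_t = size // 2, length // 2
    return [[[math.cos(2 * math.pi * (f_s * (x * math.cos(theta) + y * math.sin(theta))
                                      - direction * f_t * t))
              for x in range(-half, half + 1)]
             for y in range(-half, half + 1)]
            for t in range(-half_t, half_t + 1)]


f_s, f_t, theta = 0.1, 0.1, 0.0
g_s = spatial_gabor(21, f_s, theta, sigma=4.0, gamma=1.0)
g_t = temporal_gabor(15, f_t, sigma_t=3.0)
preferred = motion_energy(drifting_grating(21, 15, f_s, f_t, theta, +1), g_s, g_t)
null = motion_energy(drifting_grating(21, 15, f_s, f_t, theta, -1), g_s, g_t)
print(f"preferred / null energy ratio: {preferred / null:.0f}")
```

The separable form is where the efficiency comes from. Each frame is filtered once in 2D, and only a short 1D sum runs over time. A full 3D filter of the same size would need one multiply per space-time sample.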

Higher-Order Channel: Complex Feature Extraction

The second channel in Stage I takes a more flexible approach. It uses a standard 3D convolutional neural network (CNN) to extract higher-order, nonlinear features. This network consists of five 3D convolutional layers with residual connections and ReLU activations. It acts as a learned preprocessing step, transforming the input into features from which motion energy-related signals are extracted before they pass to Stage II.
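To make the layer structure concrete, here is a minimal pure-Python sketch of five stacked 3D convolutions with residual connections and ReLU. It simplifies heavily under stated assumptions:

- a single channel instead of RGB input and many feature maps;
- random, untrained 3x3x3 kernels with zero padding;
- the residual form `h + ReLU(conv(h))`, which is one common variant and not necessarily the model's.

The function names are hypothetical. A practical implementation would use a framework layer such as PyTorch's `torch.nn.Conv3d` with learned weights.

```python
import random


def conv3d_same(volume, kernel):
    """Single-channel 3D cross-correlation with zero padding; output has the input's shape."""
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    k = len(kernel) // 2
    out = [[[0.0] * W for _ in range(H)] for _ in range(T)]
    for t in range(T):
        for y in range(H):
            for x in range(W):
                acc = 0.0
                for dt in range(-k, k + 1):
                    for dy in range(-k, k + 1):
                        for dx in range(-k, k + 1):
                            tt, yy, xx = t + dt, y + dy, x + dx
                            if 0 <= tt < T and 0 <= yy < H and 0 <= xx < W:
                                acc += kernel[dt + k][dy + k][dx + k] * volume[tt][yy][xx]
                out[t][y][x] = acc
    return out


def relu(volume):
    """Elementwise max(0, v)."""
    return [[[max(0.0, v) for v in row] for row in frame] for frame in volume]


def add(a, b):
    """Elementwise sum of two equally shaped volumes (the residual connection)."""
    return [[[u + v for u, v in zip(ra, rb)] for ra, rb in zip(fa, fb)] for fa, fb in zip(a, b)]


def higher_order_channel(volume, kernels):
    """Apply one residual layer per kernel: h <- h + ReLU(conv(h))."""
    h = volume
    for kernel in kernels:
        h = add(h, relu(conv3d_same(h, kernel)))
    return h


random.seed(0)
T, H, W = 4, 6, 6  # frames, height, width of a toy clip
clip = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(T)]
kernels = [[[[random.gauss(0.0, 0.1) for _ in range(3)] for _ in range(3)] for _ in range(3)]
           for _ in range(5)]  # five layers, as in the text
features = higher_order_channel(clip, kernels)
```

With this residual form, an all-zero kernel turns its layer into the identity. Identity layers of this kind are part of why residual stacks are easy to train.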

Unlike the first-order channel, this channel takes RGB images as input, which makes it sensitive to color, an aspect highly relevant to human perception. The outputs of both channels are then combined into a single feature set for further analysis in Stage II.

Stage II: Global Motion Integration and Segregation

Stage II addresses a fundamental limitation of local motion detection: the aperture problem. A detector with a small receptive field that sees only a straight edge can measure only the motion component perpendicular to that edge, so it cannot recover the object's true (global) motion on its own. To overcome this, the model integrates local motion signals with a graph-based method that connects distant spatial locations.
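The aperture problem can be stated as a short worked equation. This is the standard geometric formulation, not something taken from the model itself. Let $\hat{\mathbf{n}}$ be the unit normal of an edge inside a receptive field and $\hat{\mathbf{t}}$ the unit vector along the edge. A local detector measures only the normal speed:

$$
v_\perp = \mathbf{v} \cdot \hat{\mathbf{n}}, \qquad \mathbf{v} = v_\perp \hat{\mathbf{n}} + s\,\hat{\mathbf{t}} \quad \text{for any } s \in \mathbb{R},
$$

so every velocity in this one-parameter family produces the same local signal. Two detectors that see differently oriented edges of the same rigid object give two constraints:

$$
\begin{pmatrix} \hat{\mathbf{n}}_1^{\top} \\ \hat{\mathbf{n}}_2^{\top} \end{pmatrix} \mathbf{v} = \begin{pmatrix} v_{\perp,1} \\ v_{\perp,2} \end{pmatrix}
$$

These determine $\mathbf{v}$ uniquely whenever $\hat{\mathbf{n}}_1$ and $\hat{\mathbf{n}}_2$ are not parallel. Consider an object moving with $\mathbf{v} = (1, 1)$:

- seen through a vertical edge ($\hat{\mathbf{n}}_1 = (1, 0)$), it gives $v_{\perp,1} = 1$;
- seen through a horizontal edge ($\hat{\mathbf{n}}_2 = (0, 1)$), it gives $v_{\perp,2} = 1$.

Either measurement alone is ambiguous, but together they recover $(1, 1)$. This is why integrating signals across locations helps. As described here, the model performs this integration through a learned graph rather than by solving the system explicitly.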

Motion Graph and Self-Attention Mechanisms

Central to this integration is the motion graph. Its nodes represent spatial locations, and each node carries the local motion energy vector computed at that location. Its edges encode relationships between locations and determine how information flows between them. Together, they capture the motion dynamics across the whole stimulus.

Using a mechanism akin to self-attention, the model measures the similarity between nodes with cosine similarity and uses it to weight the edges. Locations with similar motion are therefore strongly connected even when they are far apart. Because any node can connect to any other, this graph representation avoids the limited, local receptive fields of conventional convolutional layers.
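The similarity-weighted graph can be sketched in a few lines of pure Python. This is a minimal illustration under several assumptions not stated in the text:

- each node holds a 2D velocity-like vector standing in for its motion energy features;
- edge weights are a softmax over cosine similarities divided by a hypothetical `temperature`, as in self-attention;
- one propagation step replaces each node with the weighted average of all nodes.

Function names and the example vectors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0 if either is the zero vector)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def attention_adjacency(nodes, temperature=0.1):
    """Row-normalized adjacency: softmax over scaled cosine similarities, like attention weights."""
    adjacency = []
    for a in nodes:
        scores = [cosine_similarity(a, b) / temperature for b in nodes]
        peak = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        adjacency.append([w / total for w in weights])
    return adjacency


def propagate(nodes, adjacency):
    """One message-passing step: each node becomes a weighted average of all nodes."""
    dim = len(nodes[0])
    return [[sum(w * n[d] for w, n in zip(row, nodes)) for d in range(dim)]
            for row in adjacency]


# Hypothetical local vectors (vx, vy): two locations moving right, two moving up.
nodes = [[1.0, 0.1], [0.9, -0.1], [0.1, 1.0], [-0.1, 0.9]]
A = attention_adjacency(nodes)
updated = propagate(nodes, A)
```

The graph here is fully connected: node 0 receives weight from node 1 because their motion is similar, not because they are neighbors. The low temperature sharpens the softmax so that dissimilar motions barely interact.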

Recurrent Processing for Integration

Stage II also uses recurrent processing. A recurrent neural network (RNN) repeatedly updates the node features, simulating both the dynamics of motion integration and the feedback loops found in human neural processing. At each iteration, the adjacency matrix is recomputed from the graph's current feature embeddings, and information propagates across the graph along its edges.

Repeating these steps lets local motion signals gradually merge into a coherent global representation of motion over time.
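The recurrence can be illustrated with a simplified pure-Python loop. It assumes two things:

- a leaky update `h <- (1 - alpha) * h + alpha * A(h) h` stands in for the trained RNN, which this sketch does not reproduce;
- the adjacency is a softmax over cosine similarities with a hypothetical temperature.

The key point it shows is that `A` is rebuilt from the current embeddings on every iteration. Function names, `alpha`, and the example vectors are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (0 if either is the zero vector)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def adjacency_from(h, temperature):
    """Softmax over scaled cosine similarities; row i holds node i's edge weights."""
    rows = []
    for a in h:
        scores = [cosine(a, b) / temperature for b in h]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        rows.append([w / total for w in weights])
    return rows


def recurrent_integration(nodes, steps=10, alpha=0.5, temperature=0.1):
    """Leaky recurrent update with the adjacency recomputed at every step."""
    h = [list(n) for n in nodes]
    dim = len(h[0])
    for _ in range(steps):
        A = adjacency_from(h, temperature)  # edges come from the *current* embeddings
        messages = [[sum(w * node[d] for w, node in zip(row, h)) for d in range(dim)]
                    for row in A]
        h = [[(1 - alpha) * old + alpha * new for old, new in zip(hi, mi)]
             for hi, mi in zip(h, messages)]
    return h


# Hypothetical noisy local vectors: three locations on a rightward-moving surface,
# three on an upward-moving one.
nodes = [[1.0, 0.2], [0.8, -0.2], [1.1, 0.0], [0.2, 1.0], [-0.2, 0.8], [0.0, 1.1]]
final = recurrent_integration(nodes)
```

After a few iterations, the noisy vectors within each surface converge to a shared estimate (integration), while the two surfaces remain distinct (segregation). Recomputing the adjacency matters: as vectors within a group become more similar, their mutual edge weights grow, which speeds up their convergence.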

Dataset Construction

Training requires a diverse dataset covering a wide variety of natural and synthetic motion patterns. The dataset combines established benchmarks such as MPI-Sintel and KITTI with purpose-built datasets. These new datasets contain simple 2D patterns and different types of motion (such as drifting gratings), providing basic training stimuli that help the model reach stable performance.
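A drifting-grating stimulus with its ground-truth flow can be generated in pure Python. This is a minimal sketch of one such synthetic stimulus, not the authors' generator. The function name and parameters (`wavelength` in pixels, `speed` in pixels per frame, `theta` as drift direction in radians) are hypothetical, and image coordinates have $y$ pointing down.

```python
import math


def drifting_grating_sequence(height, width, frames, wavelength, speed, theta):
    """Sinusoidal grating drifting at `speed` px/frame along direction `theta`.

    Returns (seq, flow): seq[t][y][x] holds intensities in [0, 1], and flow = (u, v)
    is the velocity in px/frame, identical at every pixel.
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    seq = []
    for t in range(frames):
        frame = [[0.5 + 0.5 * math.cos(2 * math.pi * ((x * cos_t + y * sin_t) - speed * t)
                                       / wavelength)
                  for x in range(width)]
                 for y in range(height)]
        seq.append(frame)
    flow = (speed * cos_t, speed * sin_t)
    return seq, flow


frames, (u, v) = drifting_grating_sequence(32, 32, 10, wavelength=8.0, speed=1.0,
                                           theta=math.pi / 4)
```

Because a grating is a pure 1D pattern, its returned flow is the motion normal to the stripes. Any motion along the stripes is invisible, which is the aperture problem in its purest form. Such stimuli therefore probe the model's local motion sensors rather than global integration.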

Experimentation and Human Data Correlation

Human psychophysical measurements play a central role in evaluating the model. Using stimuli that contain both first-order and second-order motion, researchers compare the model's predictions with human perceptual data. This comparison tests how closely the model reproduces human motion perception, judged against what people actually perceive rather than against physical ground-truth motion.
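One simple way to score agreement between model and human responses is a Pearson correlation over perceived motion vectors. The sketch below is only one possible choice of metric, and the text does not say which metric is used. The function names are hypothetical, and the numbers are made up purely for illustration; they are not human data.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def flow_agreement(model_flow, human_flow):
    """Correlate two lists of (u, v) vectors by flattening their components."""
    a = [c for vec in model_flow for c in vec]
    b = [c for vec in human_flow for c in vec]
    return pearson(a, b)


# Made-up illustrative values: perceived (u, v) for four hypothetical stimuli.
human = [(1.0, 0.0), (0.0, 1.0), (-0.5, 0.5), (0.2, -0.8)]
model = [(0.9, 0.1), (0.1, 0.8), (-0.4, 0.6), (0.3, -0.7)]
print(round(flow_agreement(model, human), 3))
```

Because the reference is the human response rather than the physical velocity, a model that reproduces human misperceptions scores well on this metric even where it disagrees with the ground truth.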

The Computational Landscape

The model is implemented with modern deep learning frameworks such as PyTorch, which support efficient, scalable processing. The study has implications for future research on how artificial systems can emulate human cognition, particularly in dynamic, visually complex environments.

By addressing key problems in motion perception with computational methods inspired by biology, the framework opens avenues for further development and deeper insight into human vision, offering a rich field for exploration in both artificial intelligence and cognitive neuroscience.