Overview of Datasets and Experimental Setup for Optimized RetinaNet

This article describes our experiments with the Optimized RetinaNet (OptRetinaNet) and its performance on four datasets. We first give an overview of each dataset, then describe the experimental setup and the implementation details of RetinaNet and our proposed optimization algorithm. Finally, we analyze the results on each dataset, comparing OptRetinaNet against the baseline RetinaNet [23] and other contemporary object detection methods.

Dataset Overview

KITTI Dataset

The KITTI dataset is a standard benchmark in autonomous driving research, with 7,481 training images and 7,518 testing images. Each image is accompanied by camera calibration files that describe the camera geometry. The dataset covers the object classes most relevant to driving (cars, pedestrians, and cyclists), which allows object detection to be evaluated in complex urban environments. As illustrated in Figure 4, KITTI images contain diverse object categories under varying environmental conditions, which makes reliable detection challenging.

UFFD25 Dataset

The UFFD25 dataset is curated to assess face detection models under a wide range of challenging conditions. Comprising 6,424 images with 10,895 face annotations, it captures real-world variability such as lens artifacts, motion blur, and adverse weather effects. It also contains distractor images, including animal faces, which test how well face detectors distinguish relevant from irrelevant images. A sample from this dataset is presented in Figure 5, showing several of the obstacles encountered in face detection.

TomatoPlantFactoryDataset

The TomatoPlantFactoryDataset targets agricultural applications, providing 520 high-quality images with 9,112 tomato fruit instances for tomato plant detection. Compared with the other datasets, its images have higher resolution and more complex ambient lighting. As shown in Figure 6, the dataset includes challenges such as occlusion and background clutter that detection algorithms must overcome.

MS COCO 2017 Dataset

The MS COCO 2017 dataset is a benchmark not only for object detection but also for segmentation and image captioning. With approximately 118,000 training images and over 2.5 million annotated instances across 80 object categories, it presents a highly realistic challenge to models. The visual complexity of MS COCO is evident in Figure 7, which includes multi-object scenes with varying scales, orientations, and non-iconic viewpoints.

Summary Table of Datasets

Table 3 summarizes the datasets: the number of images, annotations, and object classes, along with the main challenges each one posed in our experiments.

Experimental Setup and Implementation Details

Computational Environment

Our experiments utilized a high-performance computing system equipped with an NVIDIA GeForce GTX 1080 Ti GPU, 32 GB of RAM, and an Intel Core i7 @ 3.40GHz CPU. The MMDetection framework served as the foundation for our implementation, ensuring compatibility and efficiency.

Implementation Parameters

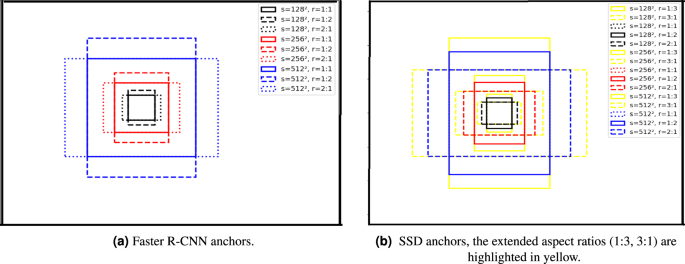

For our optimization algorithm, we set a population size of 30 and ran the algorithm for 100 generations. The scaling factor (F) was established at 0.5, and the crossover probability (Cr) was determined at 0.9. We maintained anchor configuration settings akin to the original RetinaNet, utilizing pre-trained weights from ImageNet for initialization. The training process employed mini-batch Stochastic Gradient Descent (SGD) with momentum and weight decay adjustments, complemented with various data augmentation techniques to bolster generalization.
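The parameters above (population size, generations, scaling factor F, crossover probability Cr) are those of a differential-evolution-style search. The following is a minimal sketch of the common DE/rand/1/bin variant using those values. It is an illustration under stated assumptions, not our exact implementation: the variant, the bound handling, and the `toy_objective` function are hypothetical stand-ins. In a real run, the objective would score a candidate anchor configuration, for example by negative validation AP.

```python
import random


def differential_evolution(objective, bounds, pop_size=30, generations=100,
                           F=0.5, Cr=0.9, seed=0):
    """Minimize `objective` over box constraints with DE/rand/1/bin.

    bounds: list of (low, high) pairs, one per dimension.
    Defaults mirror the values quoted in the text; the variant is an assumption.
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Initialize the population uniformly inside the bounds.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fitness = [objective(x) for x in pop]

    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, all different from i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated gene
            trial = []
            for j in range(dim):
                if rng.random() < Cr or j == j_rand:
                    # Mutation: base vector plus scaled difference vector.
                    v = pop[a][j] + F * (pop[b][j] - pop[c][j])
                    lo, hi = bounds[j]
                    v = min(max(v, lo), hi)  # clip to bounds (an assumption)
                else:
                    v = pop[i][j]
                trial.append(v)
            # Greedy selection: keep the trial only if it is no worse.
            f_trial = objective(trial)
            if f_trial <= fitness[i]:
                pop[i], fitness[i] = trial, f_trial

    best = min(range(pop_size), key=fitness.__getitem__)
    return pop[best], fitness[best]


def toy_objective(x):
    # Hypothetical stand-in for an expensive detector evaluation:
    # squared distance to an arbitrary target vector.
    target = [1.0, 1.25, 1.5]
    return sum((xi - ti) ** 2 for xi, ti in zip(x, target))


best_x, best_f = differential_evolution(toy_objective, [(0.5, 2.0)] * 3)
```

Because each objective evaluation in practice involves training or evaluating a detector, the population size and generation count largely determine the cost of the search.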

Evaluation Metrics

We used Average Precision (AP) as the primary evaluation metric for detection accuracy. The IoU threshold for each dataset was set according to that dataset’s established benchmark protocol, so that comparisons across models are consistent and fair.
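For readers less familiar with the metric, AP depends on deciding whether each predicted box matches a ground-truth box, and that decision uses Intersection over Union (IoU). The sketch below shows the standard IoU computation for axis-aligned boxes. The `(x1, y1, x2, y2)` box format and the `is_match` helper are assumptions made for illustration, and the threshold is left as a parameter because it differs per dataset.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, gt_box, threshold):
    """Hypothetical helper: a prediction matches if IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold
```

A stricter threshold demands tighter localization, so the same detector will report a lower AP under it.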

Results and Discussion

Results for KITTI Dataset

With optimized anchor parameters, OptRetinaNet outperformed RetinaNet on the KITTI dataset, achieving higher AP scores with both the ResNet-50 and ResNet-101 backbones. In particular, AP scores were markedly higher for cars, pedestrians, and cyclists, showing consistent improvements across categories. The notable AP gains with ResNet-101 underscore the advantage of deeper feature extraction. Figures 8, 9, and 10 visualize the gains in detection performance, convergence, and stability.

Results for UFFD25 Dataset

On the UFFD25 dataset, the optimized anchor structures allowed OptRetinaNet to capture diverse facial attributes more effectively. The gains over RetinaNet were substantial, particularly in challenging detection scenarios with occlusions and lighting variations. Figures 11, 12, and 13 show the improvements in detection accuracy and model stability, further illustrating OptRetinaNet’s effectiveness in demanding conditions.

Results for TomatoPlantFactoryDataset

On the TomatoPlantFactoryDataset, OptRetinaNet retained the original aspect ratios, and its optimized anchor scales improved detection accuracy significantly. Detailed results are provided in Table 6 and demonstrate the model’s strength in agricultural object detection tasks. Figure 14 shows visual comparisons of detections, and the subsequent figures show performance trends.
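To make the distinction between anchor scales and aspect ratios concrete, the sketch below derives anchor widths and heights from a base size, a set of scales, and a set of ratios. The default values follow the original RetinaNet design (scales 2^0, 2^(1/3), 2^(2/3) and ratios 1:2, 1:1, 2:1). The `hypothetical_scales` values are made up purely for illustration and are not the scales found in our experiments.

```python
import math


def anchor_shapes(base_size, scales, ratios):
    """Return (width, height) for every ratio/scale pair.

    Each anchor has area (base_size * scale) ** 2 and height / width == ratio.
    """
    shapes = []
    for ratio in ratios:
        for scale in scales:
            side = base_size * scale
            width = side / math.sqrt(ratio)
            height = side * math.sqrt(ratio)
            shapes.append((width, height))
    return shapes


# Defaults from the original RetinaNet design.
default_scales = [2 ** (i / 3) for i in range(3)]
ratios = [0.5, 1.0, 2.0]

# Made-up scales standing in for an optimizer's output (illustration only).
hypothetical_scales = [0.8, 1.1, 1.5]

default_anchors = anchor_shapes(32, default_scales, ratios)
adjusted_anchors = anchor_shapes(32, hypothetical_scales, ratios)
```

Changing only the scales resizes every anchor while leaving each height-to-width ratio untouched, which is the situation described above for this dataset.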

Results for MS COCO 2017 Dataset

Lastly, on the MS COCO 2017 dataset, OptRetinaNet showed robust performance gains over the baseline RetinaNet and other contemporary models. It achieved higher AP scores across the evaluation criteria, confirming its competitive edge even on a complex dataset. Figures 17, 18, and 19 present the performance trends and training dynamics, supporting the algorithm’s efficacy in diverse object detection scenarios.

Taken together, these findings show that optimized anchor parameters play a central role in improving detection performance across a range of scenarios and datasets.