Transformers Propel Breakthroughs in Genome Language Modeling

Understanding Genome Language Models

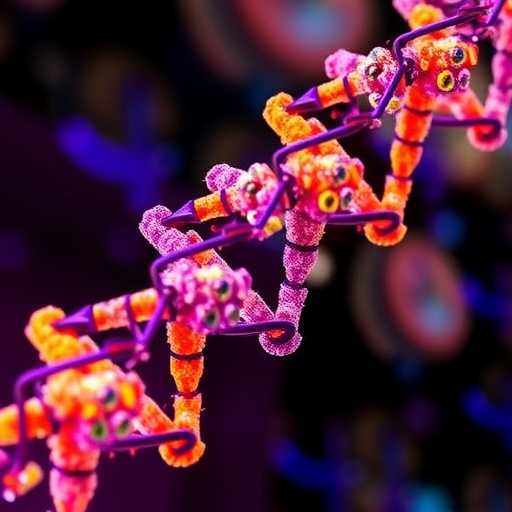

Genome language models (gLMs) apply transformer architectures to genomic sequences, borrowing techniques from natural language processing (NLP) to extract meaningful patterns from complex genetic data. Just as language models learn the structure of text from large corpora, gLMs learn statistical regularities of DNA from large collections of genome sequences, with the aim of helping researchers decode the biological information that genomes, including the human genome, contain.

The Importance of Transformer Architecture in Genomics

Transformers were originally designed for machine translation, and they handle sequential data well because their self-attention mechanism lets each position in a sequence draw on information from every other position, capturing long-range dependencies. When processing a sentence, for example, a transformer interprets each word in light of its surrounding context rather than in isolation. Similarly, a gLM reads a sequence of nucleotides (A, C, G, T) and can relate bases that lie far apart within its input window, potentially revealing relationships between distant genomic regions that might go unnoticed with traditional methods.
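To make the idea of attention concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention over a short DNA sequence. It assumes single-nucleotide tokens and uses random matrices in place of learned embeddings and projection weights; `VOCAB`, `tokenize`, and `self_attention` are illustrative names, not part of any gLM library. Real gLMs stack many such layers with multiple heads, and many use k-mer or other tokenizations.

```python
import numpy as np

# Assumption: one token per nucleotide (real gLMs may use k-mers or subwords).
VOCAB = {"A": 0, "C": 1, "G": 2, "T": 3}


def tokenize(seq: str) -> np.ndarray:
    """Convert a DNA string into an array of integer token ids."""
    return np.array([VOCAB[base] for base in seq.upper()])


def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray):
    """Single-head scaled dot-product self-attention.

    x has shape (seq_len, d_model). Returns (output, weights), where
    weights[i, j] is how strongly position i attends to position j.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Subtract each row's maximum for numerical stability before the softmax.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model = 8
# Random values stand in for parameters a trained gLM would have learned.
embedding = rng.normal(size=(len(VOCAB), d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

tokens = tokenize("ATGCGTACGTTAGC")
out, attn = self_attention(embedding[tokens], w_q, w_k, w_v)
print(out.shape, attn.shape)  # (14, 8) (14, 14)
```

Because `attn` holds one weight for every pair of positions, its size grows with the square of the sequence length, which is one reason gLM input windows are bounded.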

Key Components of gLMs

Several components define gLMs and their effective use in genomic research:

- Unsupervised Pretraining: Pretraining gLMs on large genomic databases, typically with a self-supervised objective, lets them learn patterns without exhaustive labeling. For instance, a model can learn by predicting nucleotides that have been hidden in sequences drawn from vast amounts of genomic data, so the sequence itself supplies the training signal.

- Zero- and Few-Shot Learning: These capabilities let gLMs make inferences with little or no labeled data, which is particularly useful in genomics, where annotated datasets can be scarce. For example, a gLM might estimate the likely effect of a genetic variant without being trained on labeled examples of variant effects.

- Computational Resources and Memory: gLMs are powerful, but they require substantial computational resources. This limits their practicality for some laboratories and motivates advances in hardware and model efficiency.
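The pretraining objective in the first item, predicting nucleotides that have been hidden from the model, can be sketched as a data-preparation step. This is a simplified, hypothetical version of BERT-style masking: it always substitutes a `[MASK]` token (BERT-style recipes sometimes substitute a random or unchanged token instead), and the 15% masking rate is an assumed default rather than a value taken from this article.

```python
import random

MASK = "[MASK]"


def mask_sequence(seq, mask_rate=0.15, seed=None):
    """Build one masked-language-modeling example from a DNA string.

    Returns (inputs, labels): inputs has a fraction of bases replaced by MASK;
    labels holds the original base at each masked position and None elsewhere
    (None meaning "no loss is computed at this position").
    """
    rng = random.Random(seed)
    tokens = list(seq.upper())
    n_mask = max(1, round(mask_rate * len(tokens)))
    positions = rng.sample(range(len(tokens)), n_mask)  # distinct positions
    inputs = tokens[:]
    labels = [None] * len(tokens)
    for i in positions:
        labels[i] = tokens[i]
        inputs[i] = MASK
    return inputs, labels


inputs, labels = mask_sequence("ACGTACGTACGTACGTACGT", seed=1)
print(sum(tok == MASK for tok in inputs))  # 3
```

A model would then be trained to predict `labels[i]` at each masked position, with the loss computed only where a label is present.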

Applying gLMs: A Step-by-Step Process

Implementing gLMs in a research context typically follows several key steps:

- Data Acquisition: Researchers gather genomic sequences from public databases such as GenBank or Ensembl.

- Preprocessing: The raw sequences are cleaned and normalized (for example, by standardizing letter case and handling ambiguous bases), then split and tokenized into the format the model expects as input.

- Model Training: gLMs are pretrained on large sequence datasets, learning statistical patterns in genetic sequences.

- Fine-Tuning: After pretraining, models are often fine-tuned on specific tasks, such as predicting how gene expression relates to environmental factors.

- Evaluation: Researchers test the models on held-out data with known outcomes to assess performance and accuracy.
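The acquisition and preprocessing steps can be illustrated with a small, standard-library-only sketch that parses FASTA text (a plain-text format in which sequences from databases such as GenBank and Ensembl can be downloaded), normalizes it, cuts it into fixed-length windows, and splits each window into k-mers. The window size, stride, choice of k, and the policy of discarding windows that contain `N` are illustrative assumptions; published gLMs differ in these choices, and several use single-nucleotide or learned subword tokens instead of fixed k-mers.

```python
import re


def read_fasta(text):
    """Parse FASTA-formatted text into a {record_id: sequence} dict."""
    records, current_id, chunks = {}, None, []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            # A header starts a new record; store the previous one first.
            if current_id is not None:
                records[current_id] = "".join(chunks)
            header = line[1:].strip()
            current_id = header.split()[0] if header else ""
            chunks = []
        else:
            chunks.append(line)
    if current_id is not None:
        records[current_id] = "".join(chunks)
    return records


def clean(seq):
    """Uppercase (lowercase often marks soft-masked repeats) and map non-ACGT symbols to N."""
    return re.sub(r"[^ACGT]", "N", seq.upper())


def windows(seq, size, stride):
    """Cut a sequence into fixed-length windows, skipping any window that contains N."""
    out = []
    for start in range(0, len(seq) - size + 1, stride):
        win = seq[start:start + size]
        if "N" not in win:
            out.append(win)
    return out


def kmer_tokenize(seq, k=3):
    """Split a sequence into non-overlapping k-mers; a leftover tail shorter than k is dropped."""
    return [seq[i:i + k] for i in range(0, len(seq) - k + 1, k)]


# Hypothetical two-line record containing lowercase bases and ambiguity codes (R, N).
fasta = ">chr_demo example record\nacgtacgtRNacgt\nACGTACGTACGT\n"
for record_id, raw in read_fasta(fasta).items():
    for win in windows(clean(raw), size=6, stride=6):
        print(record_id, kmer_tokenize(win, k=3))
```

The window containing the ambiguous bases is skipped rather than passed to the model; an alternative design keeps such windows and gives `N` its own token.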

Case Study: Disease Prediction

A compelling application of gLMs is predicting genetic predisposition to disease. For example, a research team used a gLM to analyze single nucleotide polymorphisms (SNPs), which are variations at a single position in the DNA sequence; some SNPs are associated with diseases such as diabetes. Using the model, the team could identify which SNP patterns correlated most strongly with disease manifestations, potentially supporting earlier diagnosis or personalized treatment strategies.
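The article does not say how that team scored SNPs. One widely used zero-shot approach with masked gLMs, shown here as an assumption rather than as the team's method, is to hide the variant position, read the model's predicted probability for each base, and compare the alternative allele with the reference as a log-likelihood ratio. In this sketch the model is replaced by hard-coded, made-up probability tables; `snp_llr` and the SNP identifiers are hypothetical.

```python
import math


def snp_llr(base_probs, ref, alt):
    """Log-likelihood ratio log P(alt) - log P(ref) at a masked variant position.

    base_probs is the model's predicted distribution over A/C/G/T at that
    position; here it is a plain dict, whereas in practice it would come from
    a gLM run on the sequence with the variant position masked.
    """
    return math.log(base_probs[alt]) - math.log(base_probs[ref])


# Made-up model outputs for two hypothetical SNPs: (probabilities, ref, alt).
predictions = {
    "snp_1": ({"A": 0.70, "C": 0.10, "G": 0.15, "T": 0.05}, "A", "T"),
    "snp_2": ({"A": 0.30, "C": 0.25, "G": 0.25, "T": 0.20}, "A", "G"),
}
for snp_id, (probs, ref, alt) in predictions.items():
    # More negative = the model finds the alternative allele less plausible.
    print(snp_id, round(snp_llr(probs, ref, alt), 3))
```

A strongly negative score means the model considers the alternative allele much less plausible than the reference in that context; linking such scores to disease would still require comparison with clinical or population data.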

Common Pitfalls and How to Avoid Them

While gLMs are powerful, challenges persist:

- Overfitting: A model overfits when it performs well on training data but poorly on unseen data. To mitigate this, researchers can apply dropout or regularization (such as weight decay) during training and monitor performance on a held-out validation set.

- Lack of Interpretability: The complexity of transformer models can obscure how they reach their predictions. Attribution techniques such as SHAP (SHapley Additive exPlanations) can help by estimating how much each input feature, such as each nucleotide position, contributes to a given prediction.

- Resource Allocation: Given their high computational demands, securing adequate compute is crucial. Researchers can turn to cloud computing or make their models more efficient, for example by reducing model size or input length.
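The scale of these demands can be estimated with simple arithmetic. In standard self-attention, each head in each layer computes a score for every pair of positions, so the memory for those score matrices grows with the square of the input length. The sketch below counts only those matrices for a naive implementation; the 12-layer, 8-head configuration and 16-bit storage are hypothetical, and optimized attention kernels that avoid materializing the full matrix can need far less.

```python
def attention_matrix_bytes(seq_len, n_heads, n_layers, batch_size=1, bytes_per_value=2):
    """Rough memory for the full attention-score matrices of one forward pass.

    Counts only the seq_len x seq_len matrices (one per head, per layer, per
    example) in a naive implementation; parameters, other activations and
    optimizer state are ignored. bytes_per_value=2 corresponds to 16-bit floats.
    """
    return batch_size * n_layers * n_heads * seq_len * seq_len * bytes_per_value


# Hypothetical model size: 12 layers with 8 heads each.
for tokens in (1_000, 10_000, 100_000):
    gib = attention_matrix_bytes(tokens, n_heads=8, n_layers=12) / 2**30
    print(f"{tokens:>7} tokens: {gib:,.1f} GiB")
```

Growing the input from 1,000 to 100,000 tokens multiplies this term by 10,000, which is why shorter inputs, smaller models, and memory-efficient attention are common efficiency levers.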

Tools and Metrics for gLM Development and Evaluation

Several tools and frameworks are commonly used in gLM research:

- TensorFlow and PyTorch: These libraries offer mature ecosystems for developing and training deep learning models, including gLMs.

- Metrics such as Accuracy and F1-Score: These metrics are widely used to evaluate performance on classification tasks. The F1-score is the harmonic mean of precision and recall, so a high F1-score indicates that a model balances the two. This matters when, for example, predicting phenotypic outcomes from genetic data, where the outcome of interest is often rare and accuracy alone can be misleading.
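To show how these metrics relate, here is a small dependency-free implementation for binary labels (for example, 1 = phenotype present). The function name and toy labels are illustrative; in practice, scikit-learn's `sklearn.metrics` module provides equivalent functions (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`).

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Imbalanced toy labels: only 2 of 10 samples have the phenotype.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0] * 10
print(classification_metrics(y_true, always_negative))
# {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```

The example shows why F1 matters for imbalanced outcomes: a model that never predicts the phenotype still reaches 80% accuracy, but its F1-score is zero.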

Variations and Trade-offs in gLM Approaches

Different methodologies exist for implementing gLMs, each with its pros and cons. For instance, some researchers may opt for hybrid models that incorporate both transformer and graph neural network elements. While this approach may enhance the model’s ability to capture complex relationships among genes, it also increases computational demand and complexity.

Frequently Asked Questions

What are the main benefits of using gLMs in genomics?

gLMs enhance the analysis of genomic data by identifying patterns within vast datasets without extensive labeling, accelerating research in areas like disease prediction and drug discovery.

Are gLMs interpretable?

Currently, gLMs can be challenging to interpret. Ongoing research aims to develop metrics and techniques to make these models more transparent, allowing researchers to understand how predictions are made.
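One simple, model-agnostic way to probe what a sequence model relies on is in silico mutagenesis (ISM): change each base to every alternative, rescore the sequence, and record how much the prediction moves. ISM is a different technique from the SHAP attributions mentioned earlier, chosen here because it needs nothing but a function that scores a sequence. The scoring function below is a hypothetical stand-in that counts a `TATA` motif; with a real gLM, `score_fn` would wrap the model's prediction.

```python
BASES = "ACGT"


def in_silico_mutagenesis(seq, score_fn):
    """Return {(position, alt_base): score change} for every single-base substitution.

    score_fn maps a DNA string to a scalar prediction; any callable works here.
    """
    ref_score = score_fn(seq)
    effects = {}
    for i, ref in enumerate(seq):
        for alt in BASES:
            if alt == ref:
                continue
            mutant = seq[:i] + alt + seq[i + 1:]
            effects[(i, alt)] = score_fn(mutant) - ref_score
    return effects


def toy_score(seq):
    """Hypothetical stand-in model: counts occurrences of the motif 'TATA'."""
    return sum(seq[i:i + 4] == "TATA" for i in range(len(seq) - 3))


effects = in_silico_mutagenesis("GCTATAGC", toy_score)
# Positions where some substitution changes the score are those the "model" relies on.
important = sorted({pos for (pos, _), delta in effects.items() if delta != 0})
print(important)  # [2, 3, 4, 5]
```

Positions with large score changes are the ones a prediction depends on; plotted along the sequence, these changes form a per-base importance map. The cost is one model evaluation per substitution (three per position), which can be substantial for long sequences.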

How can researchers prevent overfitting in gLMs?

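Dropout, for example, randomly deactivates a fraction of a network's units at each training step, so the model cannot rely too heavily on any small set of units to fit the training data. Weight decay and early stopping based on validation performance are other common safeguards.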
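The dropout mechanism can be sketched in a few lines of NumPy. This follows the common "inverted dropout" formulation, in which surviving activations are rescaled during training so that nothing needs to change at inference time. The function name and the 10% rate are illustrative; frameworks provide built-in layers (for example, `torch.nn.Dropout` in PyTorch) that should be preferred in practice.

```python
import numpy as np


def dropout(activations, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of activations during training.

    Surviving values are scaled by 1 / (1 - rate) so the expected activation is
    unchanged; at inference time (training=False) the input is returned as-is.
    """
    if not training or rate == 0.0:
        return activations
    keep = rng.random(activations.shape) >= rate  # True with probability 1 - rate
    return activations * keep / (1.0 - rate)


rng = np.random.default_rng(0)
h = np.ones((4, 1000))
h_train = dropout(h, rate=0.1, training=True, rng=rng)
print((h_train == 0).mean())  # close to 0.1
print(h_train.mean())         # close to 1.0
print(np.array_equal(dropout(h, 0.1, training=False, rng=rng), h))  # True
```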

What is the future of gLMs in healthcare?

The integration of gLMs in healthcare could significantly impact personalized medicine by predicting patient responses to treatments based on genetic data, leading to more tailored healthcare solutions.