Exploring the LLM-Prop Framework: Shaping Crystal Property Prediction

Introduction to LLM-Prop

The LLM-Prop framework predicts the physical and electronic properties of crystalline materials from text, using natural language processing. At its core is a fine-tuned encoder taken from a compact version of the T5 model. The encoder reads text descriptions of crystal structures and turns them into representations from which material properties are predicted.

The Core Architecture

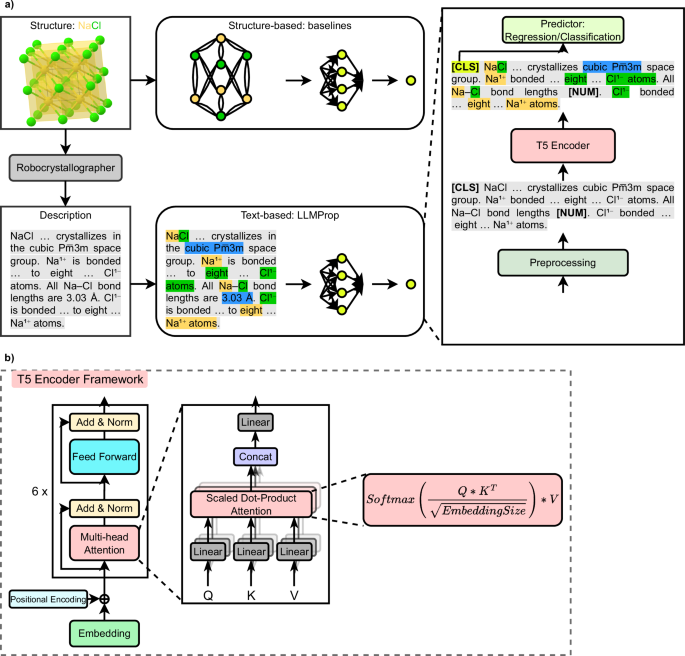

As illustrated in Figure 1a, LLM-Prop takes a different route from traditional Graph Neural Networks (GNNs). Instead of relying solely on structural data, it draws on the information contained in textual descriptions of the crystal. To do so, it adapts T5, a transformer-based language model designed for a wide range of text tasks, to the job of representing crystal structures.

T5, the Text-to-Text Transfer Transformer, casts every language task as a text-in, text-out problem, so the same model can handle both generative and predictive tasks. Its design grew out of extensive comparisons of pre-training techniques and datasets across many tasks. LLM-Prop draws on the resulting pretrained representations for crystal property prediction.

Selecting the T5 Encoder

While the full T5 architecture is an encoder-decoder, LLM-Prop keeps only the encoder and uses it for prediction. Dropping the decoder improves efficiency and avoids the extra complexity and memory the decoder would add for regression tasks. With the encoder alone, LLM-Prop keeps its parameter count manageable while processing long crystal descriptions.

Text Preprocessing Techniques in LLM-Prop

Preprocessing Strategy Overview

LLM-Prop relies on a series of preprocessing steps applied to the input text. Stopwords are removed, and numerical values are replaced with special tokens: bond lengths with [NUM] and bond angles with [ANG]. These substitutions shorten the input and preserve the surrounding context while sidestepping the difficulty language models have with numerical values.
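The two preprocessing steps can be sketched in a few lines of Python. This is a minimal illustration, not the framework's actual code: the stopword list, the regular expressions, and the assumption that lengths are written with "Å" and angles with "°" or "degrees" are all hypothetical, and the mapping of lengths to [NUM] and angles to [ANG] follows the order in which the text names them.

```python
import re

# Hypothetical stopword list for illustration only.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "to", "and", "with"}

# Assumed surface forms: angles like "45°" or "45 degrees", lengths like "2.13 Å".
ANGLE_RE = re.compile(r"\d+(?:\.\d+)?\s*(?:°|degrees)")
LENGTH_RE = re.compile(r"\d+(?:\.\d+)?\s*Å")


def preprocess(description: str) -> str:
    """Replace angles and bond lengths with special tokens, then drop stopwords."""
    text = ANGLE_RE.sub("[ANG]", description)
    text = LENGTH_RE.sub("[NUM]", text)
    kept = [tok for tok in text.split() if tok.lower() not in STOPWORDS]
    return " ".join(kept)


example = "The Mg-O bond length is 2.13 Å and the tilt angle is 45°."
print(preprocess(example))
```

Substituting before removing stopwords keeps each number together with its unit, so the unit decides which token is used.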

Analyzing the Impact of Tokenization

A key observation during the development of LLM-Prop was that numerical values written out as ordinary text are hard for language models to learn from. Replacing bond lengths and angles with designated tokens sidesteps this problem. Although the substitution discards the actual values, it improves performance by letting the model take in broader context from the text.

Input Tokenization and Sequence Length

A [CLS] token is prepended to the input, giving the model a single position that captures the overall context and can be pooled for prediction. LLM-Prop processes up to 888 tokens per input, a limit chosen with the memory of modern GPUs in mind, since predictive performance is closely tied to input length.
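As a rough sketch of this input construction, the snippet below prepends [CLS] to an already tokenized description and truncates the result. The helper name `build_input` is hypothetical, and whether the 888-token limit counts the [CLS] token itself is an assumption made here for simplicity.

```python
from typing import List

MAX_TOKENS = 888  # input length limit stated in the text


def build_input(tokens: List[str], max_tokens: int = MAX_TOKENS) -> List[str]:
    """Prepend [CLS] and truncate so the total length is at most max_tokens.

    Assumption: the limit includes the [CLS] token.
    """
    return (["[CLS]"] + tokens)[:max_tokens]


short = build_input(["Mg", "bonded", "O"])
long = build_input(["tok"] * 1000)
print(short, len(long))
```

Truncating after prepending guarantees that [CLS] survives even for descriptions longer than the limit.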

Data Sources and Collection

The data for LLM-Prop comes from the Materials Project database, from which pairs of crystal structures and text descriptions were collected. The initial dataset comprised over 145,000 crystal structures, which were then refined and split into training, validation, and test sets. Each crystal is labeled with properties including band gap, formation energy, and crystal volume, which serve as the targets for evaluating LLM-Prop's predictions.
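A three-way split of this kind might look like the sketch below. The 80/10/10 fractions, the fixed seed, and the function name `split_dataset` are illustrative assumptions; the actual split sizes are not given here.

```python
import random


def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle deterministically and cut into train / validation / test lists.

    The fractions are placeholders, not the ratios used for LLM-Prop.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


train, val, test = split_dataset(range(1000))
print(len(train), len(val), len(test))
```

Using a seeded `random.Random` instance keeps the split reproducible without touching the global random state.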

Insights from Data Distribution

Figure 2 shows the distribution of tokens in the crystal text descriptions; frequent tokens include O²⁻ and Mg. The distribution gives a picture of the vocabulary used in crystal descriptions and of which terms LLM-Prop may draw on in its predictions.
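A token distribution like this can be computed with a simple frequency count. The sketch below assumes whitespace tokenization and uses two made-up descriptions purely as toy input; neither reflects the real dataset or tokenizer.

```python
from collections import Counter


def token_frequencies(descriptions):
    """Count whitespace-separated tokens across a collection of descriptions."""
    counts = Counter()
    for text in descriptions:
        counts.update(text.split())
    return counts


# Toy input, invented for illustration.
toy = ["Mg bonded to O", "O atoms in a cubic cell"]
counts = token_frequencies(toy)
print(counts.most_common(3))
```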

Predictive Performance Evaluation

LLM-Prop Versus GNNs

In comparisons against GNNs, LLM-Prop does particularly well on volume prediction, where it outperforms the best GNN baseline by a substantial margin. The result suggests that text descriptions carry information about crystal properties that structure-only approaches can miss.

Benchmarking Against MatBERT

Compared with MatBERT, a BERT model pretrained on materials science literature, LLM-Prop performs better across multiple properties despite having fewer parameters. The comparison shows how the choice of language model and the way it is adapted affect crystal property prediction.

Ablation Studies: Dissecting Model Performance

A series of ablation studies examined the contribution of each design choice in LLM-Prop, including the preprocessing steps of stopword removal and token substitution. Notably, scaling the labels for regression tasks was a significant contributor to predictive accuracy.
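To make label scaling concrete, here is a minimal sketch using z-score normalization, one common choice. That LLM-Prop uses this particular scheme is an assumption, and the function names are hypothetical. The statistics are fit on training labels only, and predictions are mapped back to the original units afterwards.

```python
from statistics import mean, pstdev


def fit_scaler(train_labels):
    """Return (mean, std) of the training labels; std falls back to 1.0 if zero."""
    mu = mean(train_labels)
    sigma = pstdev(train_labels)
    if sigma == 0:
        sigma = 1.0
    return mu, sigma


def scale(y, mu, sigma):
    """Map a label into normalized space for training."""
    return (y - mu) / sigma


def unscale(z, mu, sigma):
    """Map a model output back to the original units."""
    return z * sigma + mu


mu, sigma = fit_scaler([1.0, 2.0, 3.0])
print(scale(3.0, mu, sigma), unscale(scale(2.5, mu, sigma), mu, sigma))
```

Scaling puts targets with very different ranges, such as band gap and volume, on a comparable footing for the regression loss.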

Exploring Preprocessing Techniques

Looking at the preprocessing steps more closely, removing stopwords and replacing numerical values with tokens were associated with better performance on regression tasks such as band gap prediction. Careful data preparation therefore plays a major role in model performance.

Conclusion and Future Directions

LLM-Prop invites further work on text-based crystal property prediction and points toward more refined methods. As more data becomes available and crystal structures become better understood, the range of applications for LLM-Prop and similar frameworks should grow. Machine learning in materials science is well placed to adopt these approaches, both for predicting crystal properties and for understanding crystalline materials more broadly.