The Challenges of Creating the Gold Standard EL Dataset

Establishing a gold standard Entity Linking (EL) dataset is riddled with complexities, many of which stem from the absence of a precise definition of the task itself. Initially, many studies followed Wikipedia's editing policy, which recommends linking only to pages likely to interest readers. This criterion is subjective, however, and it led to inconsistencies among annotators, highlighting the need for a more rigorous framework.

The Need for Precise Definitions

Creating a formal definition of EL is a formidable challenge. The inherent ambiguity of natural language makes a universally accepted definition nearly impossible. Attempts to make annotators consistent have therefore focused on comprehensive annotation guidelines, which must address several critical considerations:

Technical Aspects of Annotations

- Overlap of Annotations: One of the first decisions in creating the guidelines is whether annotations may overlap. For instance, in the phrase "Gdańsk University of Technology," an annotator could link the entire phrase to the university's Wikipedia page while also linking just "Gdańsk" to the article about the city. Allowing overlaps can enrich the dataset, but it also complicates the annotation process.

- Fragment Continuity: Another technical consideration is whether mentions must be continuous text fragments or whether gaps are acceptable. In the sentence "Castor and his twin-brother Pollux helped the Romans at the Battle of Regillus," could "Castor and Pollux" be linked as one mention that skips "his twin-brother"? This decision affects how flexibly the text can be annotated.

- Character vs Word-Level Mentions: Annotation guidelines also need to determine whether mentions are limited to whole words or whether character-level mentions, which can start or end in the middle of a word, are permissible. This choice determines how fine-grained the links can be and therefore affects the overall precision of the dataset.
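To make these three decisions concrete, the sketch below represents a mention as one or more character spans and checks a set of mentions against the strictest combination discussed here (non-overlapping, continuous, word-level). It is a minimal illustration, not the guidelines' actual tooling: the `Mention` class, the `violations` function, and the use of `\w+` runs as the definition of a "word" are all assumptions.

```python
import re
from dataclasses import dataclass
from itertools import combinations

Span = tuple[int, int]  # (start, end) character offsets, end exclusive


@dataclass(frozen=True)
class Mention:
    fragments: tuple[Span, ...]  # more than one fragment = discontinuous mention
    target: str                  # title of the linked article

    @property
    def extent(self) -> Span:
        # Smallest single span covering all fragments of the mention.
        return (min(s for s, _ in self.fragments), max(e for _, e in self.fragments))


def span(text: str, phrase: str) -> Span:
    """Offsets of the first occurrence of phrase in text."""
    start = text.index(phrase)
    return (start, start + len(phrase))


def violations(text: str, mentions: list[Mention]) -> list[str]:
    """Report mentions that break the non-overlapping / continuous / word-level rules."""
    # Assumption: a "word" is a maximal run of \w characters (Unicode-aware in Python 3).
    words = [m.span() for m in re.finditer(r"\w+", text)]
    starts = {s for s, _ in words}
    ends = {e for _, e in words}
    problems = []
    for m in mentions:
        surface = " ... ".join(text[s:e] for s, e in m.fragments)
        if len(m.fragments) > 1:
            problems.append(f"discontinuous: {surface}")
        if any(s not in starts or e not in ends for s, e in m.fragments):
            problems.append(f"not word-level: {surface}")
    # Compare every pair of mentions by their overall extent.
    for a, b in combinations(mentions, 2):
        (a_start, a_end), (b_start, b_end) = a.extent, b.extent
        if a_start < b_end and b_start < a_end:
            problems.append(f"overlap: {a.target} / {b.target}")
    return problems


t1 = "Gdańsk University of Technology is in Gdańsk."
print(violations(t1, [
    Mention((span(t1, "Gdańsk University of Technology"),), "Gdańsk University of Technology"),
    Mention((span(t1, "Gdańsk"),), "Gdańsk"),
]))
# ['overlap: Gdańsk University of Technology / Gdańsk']

t2 = "Castor and his twin-brother Pollux helped the Romans at the Battle of Regillus"
print(violations(t2, [Mention((span(t2, "Castor"), span(t2, "Pollux")), "Castor and Pollux")]))
# ['discontinuous: Castor ... Pollux']
```

Relaxing a rule (for example, permitting overlaps) amounts to dropping the corresponding check, which is one way to see how the guideline choices translate directly into validation logic.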

Types of Entities to Link

Determining the types of entities to be linked is equally crucial. This decision generally follows one of two approaches: KG-dependent or KG-independent.

- KG-dependent Approach: In this paradigm, a mention is, by definition, a text fragment that refers to an existing entity in a Knowledge Graph (KG). NIL links therefore cannot occur: anything that cannot be linked to the KG is simply not a mention.

- KG-independent Approach: Here, mention detection and entity disambiguation are treated as separate tasks. Mentions are often detected with external tools, such as Named Entity Recognition (NER) models, before it is determined whether they can be linked to the KG. This approach therefore requires robust NIL detection for mentions that have no corresponding KG entity.
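The difference between the two approaches can be sketched in a few lines of Python. The toy alias table, the capitalization heuristic standing in for a real NER model, and the function names are all hypothetical; the point is only that the KG-independent pipeline must be able to return NIL, while in the KG-dependent one every mention comes from the KG by construction.

```python
import re

NIL = "NIL"

# Toy knowledge graph: surface alias -> entity title (hypothetical data).
KG_ALIASES = {
    "Gdańsk": "Gdańsk",
    "Pollux": "Castor and Pollux",
}


def detect_mentions_ner(text: str) -> list[str]:
    """Stand-in for an NER model: runs of capitalized words (a crude heuristic)."""
    return re.findall(r"[A-Z]\w*(?:\s+[A-Z]\w*)*", text)


def link_kg_independent(text: str) -> list[tuple[str, str]]:
    """Detect mentions first, then disambiguate; mentions absent from the KG get NIL."""
    return [(m, KG_ALIASES.get(m, NIL)) for m in detect_mentions_ner(text)]


def link_kg_dependent(text: str) -> list[tuple[str, str]]:
    """A mention is, by definition, a KG alias found in the text, so NIL never occurs."""
    return [(alias, entity) for alias, entity in KG_ALIASES.items() if alias in text]


text = "Jan Kowalski studied in Gdańsk."
print(link_kg_independent(text))  # [('Jan Kowalski', 'NIL'), ('Gdańsk', 'Gdańsk')]
print(link_kg_dependent(text))    # [('Gdańsk', 'Gdańsk')]
```

The hypothetical person "Jan Kowalski" is detected as a mention only in the KG-independent pipeline, where it must be labeled NIL; the KG-dependent pipeline never sees it at all.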

Common Concepts vs Named Entities

A vital question arises during the annotation process: should common concepts be included alongside named entities? While common concepts can enhance the richness of a dataset, their vagueness often leads to inconsistencies among annotators. In contrast, named entities, though more concrete, also present challenges, particularly in borderline cases.

Philosopher Saul Kripke's concept of the rigid designator offers some guidance here. A rigid designator refers to the same object in every possible world in which that object exists: a proper name such as "Richard Nixon" is rigid, whereas a description such as "the US president in 1970" is not. This principle can help decide which expressions count as named entities, but a clear classification scheme is still needed in practice.

Annotation Guidelines Framework

Effective annotation guidelines must encompass several key elements:

- Technical Details: Guidelines should stipulate that detected mentions are non-overlapping, continuous, and limited to the word level.

- NER Class Definitions: A robust NER class scheme, such as a modified version of the OntoNotes 5.0 schema, provides a foundation for classifying mentions consistently.

- KG-independence: The annotation process follows a KG-independent model in which mentions are identified first and linked afterwards.

- Link Precision: Every mention must link to a proper Wikipedia article; links to redirects, disambiguation pages, or sections of articles are not allowed.

- Specificity Matters: When a more specific article is available, annotators should link to it rather than to a more general one, since specificity improves the dataset's overall quality.

- Metonymic Awareness: Annotators should recognize metonymy and link accordingly. For example, when "Kyiv" is used to refer to the Ukrainian government, it should be linked to the article about the government rather than to the article about the city.
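Part of the link-precision rule can be checked automatically. The sketch below assumes that each candidate target comes with two flags, whether the page is a redirect and whether it is a disambiguation page; the `PAGES` table is toy data standing in for a Wikipedia dump or API lookup, and `link_errors` is a hypothetical helper. Specificity and metonymy still require human judgment and are not covered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PageInfo:
    is_redirect: bool = False
    is_disambiguation: bool = False


# Toy metadata with illustrative flags; in practice this would come from
# a Wikipedia dump or an API lookup.
PAGES = {
    "Kyiv": PageInfo(),
    "Kiev": PageInfo(is_redirect=True),
    "Mercury": PageInfo(is_disambiguation=True),
    "Government of Ukraine": PageInfo(),
}


def link_errors(target: str, pages: dict[str, PageInfo] = PAGES) -> list[str]:
    """Return the link-precision rules that a target title violates (empty if valid)."""
    errors = []
    if "#" in target:  # "Article#Section" points to part of an article
        errors.append("links to a section")
        target = target.split("#", 1)[0]
    info = pages.get(target)
    if info is None:
        errors.append("no such article")
    elif info.is_redirect:
        errors.append("redirect")
    elif info.is_disambiguation:
        errors.append("disambiguation page")
    return errors


print(link_errors("Kyiv"))          # []
print(link_errors("Kiev"))          # ['redirect']
print(link_errors("Kyiv#History"))  # ['links to a section']
```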

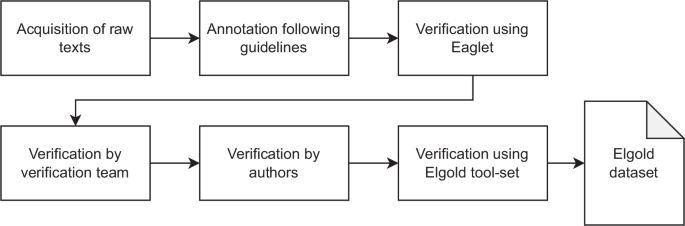

Stages of Dataset Creation

The process of creating a gold standard EL dataset involves multiple stages, both human-driven and automated. Each stage plays a critical role in ensuring quality and consistency.

Raw Text Acquisition

The initial step involves gathering raw texts that will serve as the data foundation. Key considerations include:

- Relevance: Collecting texts that reflect recent trends.

- Diversity: Ensuring a variety of domains and writing styles.

- Length Variation: Including texts of differing lengths to enrich the dataset.
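One simple way to pursue diversity and length variation at the same time is stratified sampling over (domain, length bucket) pairs. This is an illustrative technique, not necessarily how the texts for this dataset were selected; the word-count thresholds, the `(domain, text)` input format, and the function names are assumptions.

```python
import random
from collections import defaultdict


def length_bucket(text: str) -> str:
    """Assign a text to a length bucket by word count (thresholds are arbitrary)."""
    n = len(text.split())
    if n < 200:
        return "short"
    if n < 1000:
        return "medium"
    return "long"


def stratified_sample(docs, per_stratum: int, seed: int = 0):
    """docs: iterable of (domain, text) pairs.

    Draws up to per_stratum texts from each (domain, length bucket) stratum
    and returns (domain, bucket, text) triples ordered by stratum.
    """
    strata = defaultdict(list)
    for domain, text in docs:
        strata[(domain, length_bucket(text))].append(text)
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    sample = []
    for domain, bucket in sorted(strata):
        texts = strata[(domain, bucket)]
        for text in rng.sample(texts, min(per_stratum, len(texts))):
            sample.append((domain, bucket, text))
    return sample
```

Capping each stratum keeps an abundant source (for example, short news items) from dominating the collection.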

Annotation Phase

In this step, annotators read through the texts, identify named entities, and link them to the corresponding Wikipedia pages. A custom annotation tool, "ligilo," supports this process by integrating NER with the Wikipedia API.
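How ligilo communicates with Wikipedia is not described here, so the following is only a hypothetical sketch of the kind of lookup an annotation tool can make through the public MediaWiki Action API: `redirects=1` asks the API to follow redirects, and `prop=pageprops` with `ppprop=disambiguation` exposes disambiguation pages. The helper names and the abridged sample responses are illustrative, and no network request is made.

```python
from urllib.parse import urlencode


def query_url(title: str, wiki: str = "en") -> str:
    """Build a MediaWiki API query that follows redirects and reports disambiguation pages."""
    params = {
        "action": "query",
        "titles": title,
        "redirects": 1,
        "prop": "pageprops",
        "ppprop": "disambiguation",
        "format": "json",
        "formatversion": 2,
    }
    return f"https://{wiki}.wikipedia.org/w/api.php?{urlencode(params)}"


def resolve(response: dict) -> dict:
    """Summarize a formatversion=2 response for a single title."""
    query = response["query"]
    page = query["pages"][0]
    return {
        "title": page["title"],                      # title after following redirects
        "was_redirect": bool(query.get("redirects")),
        "missing": bool(page.get("missing", False)),
        "disambiguation": "disambiguation" in page.get("pageprops", {}),
    }


# Abridged, illustrative response for the title "Kiev".
sample = {"query": {"redirects": [{"from": "Kiev", "to": "Kyiv"}],
                    "pages": [{"ns": 0, "title": "Kyiv"}]}}
print(query_url("Kiev"))
print(resolve(sample))
```

A tool built this way could warn the annotator before a redirect or disambiguation page is ever saved as a link target.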

Multi-Stage Verification

Because human annotation is error-prone, a multi-stage verification process that combines automated tools with manual review is vital for maintaining dataset integrity. The Eaglet tool, which detects eight types of potential annotation errors, speeds up automated error detection.

Subsequently, a dedicated group of editors reviews the annotations, correcting common issues and providing an intersubjective check on annotation quality.
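Manual review is easier to steer when disagreements are measured. One common option, swapped in here for illustration rather than taken from the described process, is exact-match F1 between two annotators, computed once on mention spans alone and once on (span, target) pairs, so that mention-detection disagreements can be told apart from linking disagreements. The `(start, end, target)` triple format and the function names are assumptions.

```python
def pairwise_f1(a: set, b: set) -> float:
    """Symmetric exact-match F1 between two sets: 2|A ∩ B| / (|A| + |B|)."""
    if not a and not b:
        return 1.0  # two empty annotations agree trivially
    return 2 * len(a & b) / (len(a) + len(b))


def agreement(ann_a: set, ann_b: set) -> dict:
    """ann_a, ann_b: sets of (start, end, target) triples from two annotators."""
    spans_a = {(s, e) for s, e, _ in ann_a}
    spans_b = {(s, e) for s, e, _ in ann_b}
    return {
        "mention_f1": pairwise_f1(spans_a, spans_b),  # same mentions found?
        "link_f1": pairwise_f1(ann_a, ann_b),         # same mentions and same targets?
    }


a = {(0, 6, "Gdańsk"), (38, 44, "Gdańsk")}
b = {(0, 6, "Gdańsk"), (38, 44, "Gdańsk Voivodeship")}
print(agreement(a, b))  # {'mention_f1': 1.0, 'link_f1': 0.5}
```

Here both annotators found the same mentions but disagreed on one target, which points the editors at a linking question rather than a mention-detection one.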

Final Adjustments and Toolset Application

A dedicated toolset is employed to carry out final verification actions, including:

- Correcting annotation discrepancies.

- Normalizing target entities.

- Ensuring that links are valid and current.
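Normalizing targets and keeping links current can be sketched as two steps: bringing titles into a canonical form, then following redirects until a real article is reached. The sketch assumes MediaWiki's default title rules (underscores and spaces are equivalent, the first letter is capitalized) and a hypothetical `redirects` mapping taken from some snapshot of Wikipedia; it is not the toolset's actual code.

```python
import re


def normalize_title(title: str) -> str:
    """Canonical form: underscores as spaces, collapsed whitespace, uppercase first letter."""
    title = re.sub(r"[\s_]+", " ", title).strip()
    return title[:1].upper() + title[1:]


def current_target(title: str, redirects: dict[str, str], max_hops: int = 10) -> str:
    """Follow a redirect snapshot from title until a non-redirect title is reached."""
    title = normalize_title(title)
    seen = {title}
    while title in redirects:
        title = normalize_title(redirects[title])
        # Guard against redirect loops and unexpectedly long chains.
        if title in seen or len(seen) > max_hops:
            raise ValueError(f"redirect loop or chain too long at {title!r}")
        seen.add(title)
    return title


print(normalize_title("gdańsk_University  of_Technology"))  # Gdańsk University of Technology
print(current_target("kiev", {"Kiev": "Kyiv"}))             # Kyiv
```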

Ultimately, creating a gold standard EL dataset requires meticulous planning, robust guidelines, and extensive validation efforts to overcome the inherent challenges of natural language ambiguity and the intricacies of human cognition.