Assessing LLMs in Health Communication: A Study Overview

Introduction to the Study

Large language models (LLMs) such as OpenAI’s ChatGPT, Google Gemini, and Mistral AI’s Le Chat are increasingly used as sources of health information. A recent study evaluated whether these models generate health risk information that complies with evidence-based health communication standards. By systematically varying the prompts, the researchers examined how accurate and reliable the information was when users asked about sensitive screening topics such as mammography and prostate-specific antigen (PSA) testing.

Study Design and Methodology

Content Analysis Framework

The study was conducted between June and July 2024 and followed the STROBE and TRIPOD-LLM reporting guidelines. The researchers focused on three prominent LLMs: OpenAI’s ChatGPT (gpt-3.5-turbo), Google Gemini (1.5-Flash), and Mistral AI Le Chat (mistral-large-2402). These models were selected because they were accessible in Germany without requiring personal data. They were used to explore how effectively LLMs provide evidence-based information in response to health-related queries.

Following a pre-registered content analysis design (AsPredicted, Registration No. 180732), the researchers used a systematic prompting strategy comprising 18 prompts on mammography and PSA testing. The prompts varied across three levels of contextual informedness:

- Highly Informed Prompts: These employed technical keywords related to the specific medical procedure.

- Moderately Informed Prompts: These utilized more generic terms, making them slightly less technical.

- Low-Informed Prompts: These omitted essential terminology altogether.
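The three-level design can be pictured as a grid that crosses each health topic with each level of informedness. The sketch below builds such a grid. The wording in `TOPICS` and `TEMPLATE` is invented for illustration, and `build_prompt_grid` is a hypothetical helper. The sketch produces one prompt per cell, while the study used 18 prompts, so it does not reproduce the study's actual prompt set.

```python
from itertools import product

# Hypothetical wording for each topic at each informedness level.
# These phrasings are illustrative only and are NOT the study's prompts.
TOPICS = {
    "mammography": {
        "high": "mammography screening for breast cancer",  # technical keywords
        "moderate": "an X-ray of the breasts",               # generic terms
        "low": "a check-up that women my age are offered",   # key terms omitted
    },
    "psa": {
        "high": "a PSA test for prostate cancer screening",
        "moderate": "a blood test for prostate problems",
        "low": "a check-up that men my age are offered",
    },
}
LEVELS = ("high", "moderate", "low")
TEMPLATE = "Should I have {subject}? What are the benefits and risks?"


def build_prompt_grid(topics, levels, template):
    """Return one (topic, level, prompt) triple per topic x level cell."""
    return [
        (topic, level, template.format(subject=topics[topic][level]))
        for topic, level in product(topics, levels)
    ]


if __name__ == "__main__":
    for topic, level, prompt in build_prompt_grid(TOPICS, LEVELS, TEMPLATE):
        print(f"{topic:<12} {level:<9} {prompt}")
```

Holding the template fixed and changing only the subject phrase keeps the informedness level as the single manipulated variable within each topic.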

The Health Topics: Mammography and PSA Testing

Mammography for breast cancer (BC) and PSA testing for prostate cancer (PC) were chosen as focal points because both cancers are common and both screenings carry substantial public health significance. Each test involves complex trade-offs between benefits and harms. Both have also fueled ongoing public debate about overdiagnosis and false-positive results. This made them well suited as case studies for evaluating whether LLM-generated responses align with established health communication guidelines.

Scoring and Analytical Framework

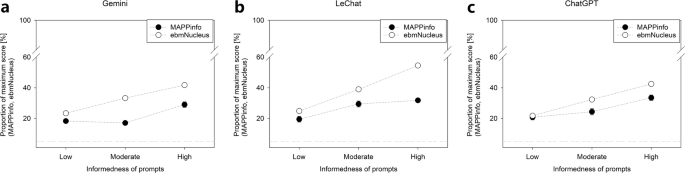

Two independent researchers, blinded to each other’s assessments, evaluated the responses using two validated scoring instruments: MAPPinfo and ebmNucleus. Each model’s responses were labeled and scored according to the quality of the information they presented. MAPPinfo provides a broad assessment of information quality, while ebmNucleus focuses on the core components needed for informed health decisions.

Scoring placed particular emphasis on the evidence base underlying each response. Criteria concerning patient-relevant information were rated on a scale from zero to three points.
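The following minimal sketch shows how per-criterion ratings on a 0 to 3 scale might be combined into a summary score. It assumes that ratings are summed and expressed as a percentage of the maximum possible score. The criterion names and this aggregation rule are hypothetical; they are not the official MAPPinfo or ebmNucleus scoring procedures.

```python
MAX_ITEM_SCORE = 3  # each criterion is rated from 0 to 3 points


def summarize_scores(item_scores):
    """Sum per-criterion ratings and express the total as a percentage of the maximum.

    `item_scores` maps a (hypothetical) criterion name to an integer rating 0..3.
    """
    if not item_scores:
        raise ValueError("no criteria to score")
    for item, score in item_scores.items():
        if not 0 <= score <= MAX_ITEM_SCORE:
            raise ValueError(f"{item}: rating {score} is outside 0..{MAX_ITEM_SCORE}")
    total = sum(item_scores.values())
    maximum = MAX_ITEM_SCORE * len(item_scores)
    return total, 100.0 * total / maximum


# Illustrative ratings for a single response; not data from the study.
example = {
    "benefits_quantified": 3,
    "harms_quantified": 1,
    "option_of_no_screening": 2,
    "evidence_sources_named": 0,
}
print(summarize_scores(example))  # (6, 50.0)
```

Reporting the percentage alongside the raw total makes responses comparable even when instruments have different numbers of criteria.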

Inter-Rater Reliability and Assessment Challenges

Achieving consistent inter-rater reliability proved challenging. The initial Cohen’s kappa coefficients were κ = 0.41 for MAPPinfo and κ = 0.38 for ebmNucleus. By commonly used benchmarks, these indicate only moderate and fair agreement, respectively. To resolve the disagreements, a third rater, also blinded to the previous assessments, was brought in to mediate, yielding much higher agreement (κ = 0.94).
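Cohen's kappa compares the observed agreement `p_o` with the agreement expected by chance `p_e`, using `kappa = (p_o - p_e) / (1 - p_e)`. The sketch below computes the unweighted statistic in plain Python. The ratings are toy values, not study data. The source does not state whether a weighted variant was used.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters who scored the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement p_o: share of items on which both raters gave the same score.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: sum over categories of the product of each rater's
    # marginal frequencies (missing categories count as 0 in a Counter).
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Toy 0-3 ratings from two raters (illustrative values, not study data).
rater_1 = [0, 1, 2, 3, 2, 1, 0, 3]
rater_2 = [0, 1, 2, 2, 2, 1, 1, 3]
print(round(cohens_kappa(rater_1, rater_2), 3))  # 0.667
```

In this toy case the raters agree on 6 of 8 items (`p_o = 0.75`) and chance agreement is 0.25, giving a kappa of about 0.67. Ordinal 0 to 3 ratings are often analyzed with a weighted kappa instead. scikit-learn's `sklearn.metrics.cohen_kappa_score` supports this through its `weights` parameter (`"linear"` or `"quadratic"`).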

Findings and Interpretations from Study 1

The results showed substantial variability in output quality across the LLMs, depending on the type and depth of the prompts. Descriptive statistics and analyses of variance indicated that responses to more highly informed prompts scored better on evidence-based criteria. These findings suggest that well-informed prompting is needed to obtain useful, evidence-based LLM output on health questions.
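The analyses of variance mentioned above compare mean scores across prompt conditions. The sketch below illustrates the underlying computation: a one-way ANOVA F statistic for hypothetical quality scores grouped by informedness level. It is not a reproduction of the study's analysis, whose exact model is not described here.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over `groups`."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = mean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variance")
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical quality scores grouped by prompt informedness (not study data).
high, moderate, low = [8, 9, 10], [6, 7, 8], [4, 5, 6]
print(one_way_anova_f([high, moderate, low]))  # (12.0, 2, 6)
```

A large F means the group means differ more than the within-group scatter would predict. To obtain a p-value as well, `scipy.stats.f_oneway(high, moderate, low)` runs the same test.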

Study 2: A Lay Perspective on Prompting

In a follow-up analysis (Study 2), the researchers assessed how well laypeople could use LLMs to obtain evidence-based information. A separate sample of 300 participants queried the LLMs using either standard or enhanced prompting instructions, so that the researchers could evaluate how these variations affected response quality.

The enhanced instructions encouraged participants to think critically about how they gathered information. They prompted participants to consider the options, advantages, risks, and consistency of potential outcomes (the OARS rule). The aim was to help participants formulate better-informed queries and thereby obtain higher-quality information from the LLMs.
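The source describes OARS as instructions given to participants, not as an automated rewrite of their prompts. Purely to illustrate what an OARS-guided query might contain, the sketch below appends one question per OARS element to a user's query. The question wording and the `enhance_prompt` helper are hypothetical and are not the study's materials.

```python
# Hypothetical question wording for each OARS element, paraphrased from the
# description above; these are NOT the instructions used in the study.
OARS_QUESTIONS = (
    "Options: What options do I have, including not being tested?",
    "Advantages: What are the advantages of each option?",
    "Risks: What are the risks of each option?",
    "Consistency: How consistent are the possible outcomes?",
)


def enhance_prompt(base_query, questions=OARS_QUESTIONS):
    """Return the user's query followed by one bullet per OARS question."""
    lines = [base_query.strip(), "Please address each of these points:"]
    lines.extend(f"- {q}" for q in questions)
    return "\n".join(lines)


if __name__ == "__main__":
    print(enhance_prompt("Should I get a PSA test?"))
```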

Methodological Rigor and Engagement

Participants were randomly assigned to the prompting conditions, and comprehensive demographic data were collected. By recruiting participants with diverse profiles, the researchers aimed for a representative sample, strengthening the relevance of the findings.
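The source does not detail the randomization procedure. One common approach, shown here as an assumption, is to shuffle participant IDs with a seeded generator. The shuffled IDs are then dealt into the two prompting conditions so that group sizes stay balanced. `assign_conditions` is a hypothetical helper. With 300 participants it yields 150 per condition, but the study's actual group sizes are not reported here.

```python
import random


def assign_conditions(participant_ids, conditions=("standard", "enhanced"), seed=None):
    """Randomly allocate participants to conditions with balanced group sizes.

    Shuffles the IDs with an (optionally seeded) generator, then deals them out
    round-robin so group sizes differ by at most one.
    """
    ids = list(participant_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    allocation = assign_conditions(range(1, 301), seed=2024)
    n_enhanced = sum(c == "enhanced" for c in allocation.values())
    print(len(allocation), n_enhanced)  # 300 150
```

Fixing the seed makes the allocation reproducible for auditing. Dealing round-robin after the shuffle keeps the arms equal in size without affecting which participant lands where.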

Scoring and Analysis in Study 2

The analytical procedure mirrored that of Study 1: raters independently assessed the generated responses using similar scoring instruments. Inter-rater reliability was higher than in Study 1. This indicates that the scoring criteria were applied more clearly and consistently, which strengthens confidence in the overall assessment.

Exploring the Effects of Prompting Variations

Across both studies, the researchers examined how variations in prompting influenced the quality of LLM-generated information. Better-informed prompts significantly improved the adherence of responses to evidence-based criteria. This points to strategies for improving user interactions and the usefulness of LLMs in health communication.

Summary of Implications

Both studies highlight the potential of LLMs to generate health information. They also show how strongly the quality of that information depends on user engagement and prompting strategies. Researchers and health communicators can use these insights to refine how AI tools support informed medical decisions.

Taken together, the studies offer practical insights at the intersection of artificial intelligence and health information delivery that can guide the future development of health communication strategies.