Exploring Deep Learning Approaches to Classifying Maize Leaf Diseases

Experimental Setup

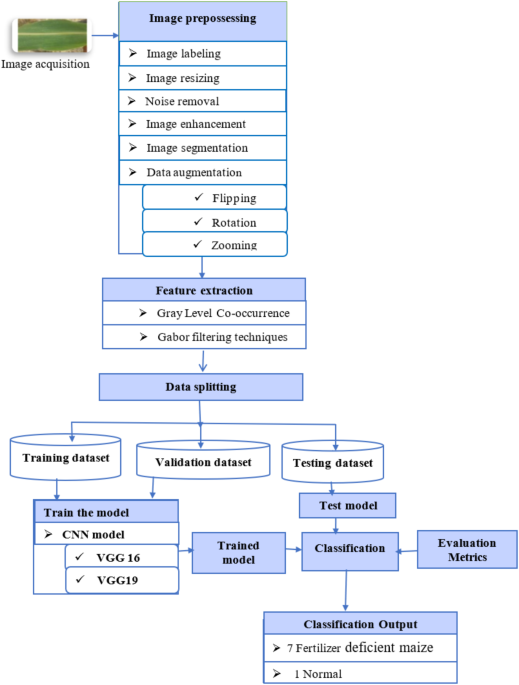

The experiments were implemented in Python, using libraries for image processing, numerical computation, and data visualization. OpenCV handled image manipulation and NumPy handled numerical tasks. The deep learning models were built in Keras with TensorFlow as the backend.

The experiments ran on Google Colaboratory, a free cloud-based Jupyter notebook environment, which allowed the models to be trained on GPUs rather than on local hardware. The dataset was divided into training and testing sets, with 80% for training and 20% for testing. Additional splits of 70%/30%, 60%/40%, and 90%/10% were also examined to gauge their effect on model performance.
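The splitting step can be sketched as below. This is a minimal illustration, not the authors' code: the function name `split_dataset`, the fixed seed, the placeholder file names, and the dataset size of 1,000 are all assumptions, and the sketch does not stratify by class, which the source does not describe either way.

```python
import random


def split_dataset(items, train_fraction, seed=42):
    """Shuffle a copy of `items` and split it into (train, test) lists."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the real image paths.
image_paths = [f"leaf_{i:04d}.jpg" for i in range(1000)]

# The four train/test ratios mentioned in the text.
for train_fraction in (0.8, 0.7, 0.6, 0.9):
    train, test = split_dataset(image_paths, train_fraction)
    print(f"{train_fraction:.0%} train: {len(train)} train images, {len(test)} test images")
```

Reusing the same seed for every ratio means the comparison between splits is not confounded by a different shuffle each time.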

Deep Learning Models

Two deep learning architectures were selected: VGG16 and VGG19. Both are well-established convolutional neural networks (CNNs) for image classification, and their comparatively simple, uniform structure makes them a common choice for transfer learning. The aim was to establish the baseline performance of these classic models on maize leaf disease classification before moving on to more complex alternatives.

VGG16 has 16 weight layers (13 convolutional and 3 fully connected). The model was trained on images resized to 224×224 and 127×127 pixels, with different training, validation, and testing ratios. Hyperparameters including learning rate and batch size were also varied to refine performance.

These hyperparameters were tuned carefully because they strongly affect the final result. Tuning aimed to minimize the loss and maximize the evaluation metrics. The models were trained with the Adaptive Moment Estimation (Adam) optimizer, a gradient-based method that adapts the step size for each parameter. Learning rates of 0.001, 0.01, and 0.1 were evaluated, and 0.001 proved the most effective.
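For reference, the Adam update can be written as follows. This is the standard formulation of the optimizer, not a detail taken from the experiments. Here $g_t$ is the gradient of the loss with respect to the parameters $\theta$ at step $t$, and $\alpha$ is the learning rate (0.001 in the best run). The decay rates $\beta_1$, $\beta_2$ and the constant $\epsilon$ are not reported in the source, so assuming library defaults is exactly that, an assumption.

```latex
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^{2} \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^{t}}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^{t}} \\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
```

Because the step is normalized by $\sqrt{\hat{v}_t}$, the learning rate $\alpha$ roughly sets the size of each parameter update. That is one plausible reason a rate as large as 0.1 can be too coarse for a deep network.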

Categorical Cross-Entropy (CCE) was chosen as the loss function, with a softmax activation in the output layer to handle multiclass classification. Different numbers of epochs and batch sizes were also tried; 60 epochs and a batch size of 32 gave the best results. Dropout was used as regularization to limit overfitting during training.
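To make the loss concrete, here is a small worked sketch of softmax followed by categorical cross-entropy for a single image. The four-class setup and the raw scores are hypothetical, since the source does not list the number of classes, and the helper names are illustrative rather than taken from Keras.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot_target, probabilities, eps=1e-12):
    """CCE for one sample: minus the log-probability of the true class."""
    return -sum(
        t * math.log(max(p, eps))  # eps guards against log(0)
        for t, p in zip(one_hot_target, probabilities)
    )


# Hypothetical raw scores for one image over four assumed classes.
logits = [2.0, 0.5, -1.0, 0.1]
target = [1, 0, 0, 0]  # one-hot label: the first class is correct

probs = softmax(logits)
loss = categorical_cross_entropy(target, probs)
print("probabilities:", [round(p, 3) for p in probs])
print("loss:", round(loss, 3))
```

The loss shrinks toward zero as the probability assigned to the correct class approaches 1. A model that guesses uniformly over four classes has a loss of ln 4, about 1.386.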

Experiment Results

Experiment by Changing Image Size

Performance varied with image size. In general, upscaling from a low resolution cost more performance than downscaling from a higher resolution. Training the VGG16 model on 224×224 images achieved 99% training accuracy and 95% validation accuracy. The accompanying training and validation curves illustrate the difference between the two settings.

By contrast, the 127×127 image size gave a training accuracy of 95% and a validation accuracy of 90%. The 224×224 input therefore produced the better result on both training and validation accuracy.
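One way to see how much input size changes what the classifier head receives is to compute the spatial size of the final VGG feature map. The sketch below assumes the standard VGG16 convolutional base, in which convolutions preserve spatial size and five 2×2 max-pooling layers each halve it, rounding down. The function name is illustrative, and whether the authors used exactly this layout is not stated in the source.

```python
def vgg_feature_map_side(input_side, num_pool_layers=5):
    """Side length of the feature map after the VGG pooling stages."""
    side = input_side
    for _ in range(num_pool_layers):
        side //= 2  # 2x2 max pooling with stride 2 halves the side, rounding down
    return side


for input_side in (224, 127):
    out = vgg_feature_map_side(input_side)
    values = out * out * 512  # the last VGG block has 512 channels
    print(f"{input_side}x{input_side} input -> {out}x{out}x512 feature map ({values} values)")
```

Under these assumptions a 224×224 input yields a 7×7×512 map (25,088 values), while a 127×127 input yields only 3×3×512 (4,608 values). The smaller input therefore leaves the classifier with far less spatial detail.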

Experiment by Changing Train and Test Datasets Ratio

The model was evaluated across several training/testing ratios. The 80%/20% split gave the most robust performance, which underlines the importance of choosing the dataset partition carefully. Metrics varied noticeably across the other ratios tested (60%/40%, 70%/30%, and 90%/10%), suggesting that the model benefits from a split that leaves enough data for both training and evaluation.

Experiment by Changing Batch Size

Batch size is an important hyperparameter in deep learning. Larger batches speed up computation, but they can hurt generalization. Of the two batch sizes tested, 16 and 32, the batch size of 32 performed better.
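The computational side of this trade-off is simple arithmetic: doubling the batch size halves the number of weight updates per epoch. The sketch below uses a hypothetical training set of 800 images, because the source does not report the dataset size, and an illustrative function name.

```python
import math


def updates_per_run(num_train_images, batch_size, epochs):
    """Return (steps per epoch, total weight updates) for a training run."""
    # The last batch of an epoch may be smaller, hence the ceiling.
    steps_per_epoch = math.ceil(num_train_images / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


NUM_TRAIN_IMAGES = 800  # hypothetical; not reported in the source
EPOCHS = 60             # the epoch count reported as best

for batch_size in (16, 32):
    steps, total = updates_per_run(NUM_TRAIN_IMAGES, batch_size, EPOCHS)
    print(f"batch size {batch_size}: {steps} steps per epoch, {total} updates in total")
```

With these assumed numbers, a batch size of 16 gives 50 steps per epoch and 3,000 updates over 60 epochs, while a batch size of 32 gives 25 steps and 1,500 updates.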

Experiment by Changing Learning Rate

The learning rate experiments showed that lower rates gave better accuracy, with 0.001 performing best. Careful tuning of this parameter is therefore essential to model performance.

Results Comparison with VGG19

In a direct comparison, VGG16 outperformed VGG19. VGG19 achieved 97% training accuracy and 92% testing accuracy, while VGG16 achieved 99% training accuracy and 95% testing accuracy. These results suggest that, on smaller datasets, the shallower of the two architectures can be the stronger choice.
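To put the difference in depth into numbers, the sketch below counts the convolutional layers and their trainable parameters for the two standard VGG layouts. The channel lists follow the published VGG16 and VGG19 configurations (3×3 kernels, RGB input). The function and variable names are illustrative, and the count covers only the convolutional base, not any classifier head the authors may have used.

```python
# Output channels per convolution; "M" marks a 2x2 max-pooling layer.
VGG_CONFIGS = {
    "VGG16": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"],
    "VGG19": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
              512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}


def conv_base_stats(config, in_channels=3, kernel=3):
    """Return (number of conv layers, trainable parameters) for a VGG layout."""
    conv_layers = 0
    params = 0
    channels = in_channels
    for item in config:
        if item == "M":
            continue  # pooling layers have no trainable parameters
        # weights (kernel * kernel * in * out) plus one bias per output channel
        params += kernel * kernel * channels * item + item
        channels = item
        conv_layers += 1
    return conv_layers, params


for name, config in VGG_CONFIGS.items():
    layers, params = conv_base_stats(config)
    print(f"{name}: {layers} conv layers, {params:,} conv parameters")
```

This gives 13 convolutional layers and 14,714,688 parameters for VGG16, against 16 layers and 20,024,384 parameters for VGG19. The deeper network thus has roughly a third more convolutional parameters to fit from the same data.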

Results Discussion

Throughout the experiments, hyperparameters were adjusted systematically to refine model performance, covering image sizes, dataset splits, batch sizes, and learning rates. The best configuration was consistently a 224×224 image size, an 80%/20% training/testing split, a batch size of 32, and a learning rate of 0.001.
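The reported configuration could be assembled in Keras roughly as follows. This is a sketch under stated assumptions, not the authors' implementation. The number of classes (4), the 256-unit dense layer, and the dropout rate of 0.5 are hypothetical values the source does not give. The base is built with `weights=None` because the source does not say whether pretrained weights were loaded.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_vgg16_classifier(num_classes, image_size=224,
                           dropout_rate=0.5, learning_rate=0.001):
    """VGG16 convolutional base with a small softmax classification head."""
    base = keras.applications.VGG16(
        include_top=False,   # drop the original 1000-class ImageNet head
        weights=None,        # assumption: random initialization
        input_shape=(image_size, image_size, 3),
    )
    x = layers.Flatten()(base.output)
    x = layers.Dense(256, activation="relu")(x)   # hypothetical head size
    x = layers.Dropout(dropout_rate)(x)           # dropout against overfitting
    outputs = layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs=base.input, outputs=outputs)
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


if __name__ == "__main__":
    model = build_vgg16_classifier(num_classes=4)  # 4 classes is an assumption
    model.summary()
    # Training would then use the reported settings, for example:
    # model.fit(train_images, train_labels, epochs=60, batch_size=32,
    #           validation_data=(val_images, val_labels))
```

Passing `image_size=127` to the same function gives the smaller-input variant, so the two image-size experiments differ by a single argument.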

Compared with existing methods such as decision tree and Random Forest classifiers, the proposed model performed markedly better, achieving 95% accuracy in detecting and classifying maize leaf fertility deficiencies.

The comparative analysis suggests that VGG16's shallower design was less prone to overfitting than the deeper VGG19, allowing it to capture useful features from a smaller dataset. This makes it well suited to practical agricultural settings.

Going forward, applying transfer learning to the VGG models, together with data augmentation and more comprehensive hyperparameter tuning, could further improve prediction accuracy. Adapting the architectures to other crops and treatments opens a path to practical tools for agriculture, particularly in regions such as Ethiopia that face challenges in maize production.