Advancements in Autonomous Driving: Introducing YOLO-FLC

In the rapidly evolving field of autonomous driving, object occlusion and small-object detection have long been difficult problems for computer vision systems, and solving them is crucial for the safe and efficient operation of self-driving vehicles. A newly proposed network model, YOLO-FLC (YOLO with Feature Diffusion and Lightweight Shared Channel Attention), addresses these problems by building on the YOLO11 model.

Core Innovations of YOLO-FLC

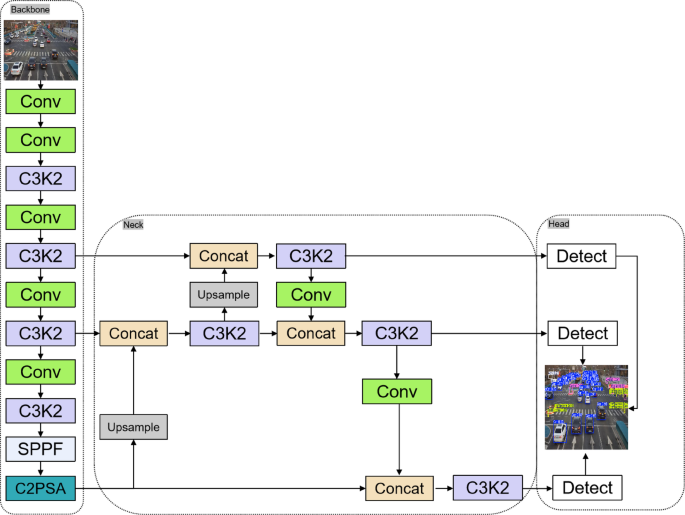

YOLO-FLC introduces several key innovations designed to improve detection performance in complex driving scenarios:

- Channel Transfer Attention (CTA) Mechanism:

At the heart of YOLO-FLC is the Channel Transfer Attention mechanism, implemented through a new network module, C3CTA, which is built on Channel Transposed Attention. This mechanism lets the model adaptively select important channel information and transfer it across multi-level feature maps, which is particularly useful when targets overlap or occlude one another. The C3CTA module therefore improves the model's ability to distinguish between adjacent objects and, in turn, its overall detection performance.

- Diffusion Focusing Pyramid Network (DFPN):

In the "Neck" of the network, YOLO-FLC incorporates a Diffusion Focusing Pyramid Network, which enriches the contextual information in features at every scale by combining a feature focusing module with a diffusion mechanism. The focusing module emphasizes important local information, while the diffusion mechanism spreads global context across scales. Together they ensure that feature maps at all scales carry comprehensive contextual information, which is particularly advantageous for small-object detection.

- Lightweight Shared Convolutional Detection Head (LSCD):

YOLO-FLC also replaces the original detection head with a Lightweight Shared Convolutional Detection Head. This change is intended to improve recognition accuracy while reducing computational cost. A scale layer adjusts the shared head's outputs for each feature level, helping it localize objects of different sizes more accurately and strengthening multi-scale detection.

The C3CTA Module: A Deep Dive

The C3CTA module rethinks how features are extracted. Conventional blocks such as YOLO11's C3k2 module rely on variable convolution kernels and channel splitting; they are efficient but can struggle in complex scenes with overlapping targets. The CTA mechanism refines this approach by automatically weighting each feature channel according to its relevance to the current detection task.
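To make "automatically weighting feature channels" concrete, the sketch below uses squeeze-and-excitation-style gating as a stand-in: each channel is summarized by its mean, a gate in (0, 1) is computed from those summaries, and the channel is rescaled by its gate. This is not the published CTA mechanism. The single linear layer, the function name `channel_gate`, and the weight values are all hypothetical, chosen only to show the idea in plain Python.

```python
import math


def channel_gate(features, weights, bias):
    """SE-style channel reweighting (illustrative stand-in, not the actual CTA).

    features: list of C channels, each a flat list of H*W activations.
    weights:  hypothetical C x C gate matrix; bias: hypothetical length-C list.
    Returns (reweighted_channels, gates), with every gate in (0, 1).
    """
    # Squeeze: summarize each channel by its global average activation.
    squeezed = [sum(ch) / len(ch) for ch in features]

    # Excite: one linear layer plus a sigmoid gives one gate per channel.
    # (Real SE blocks use two layers with a channel reduction; simplified here.)
    gates = []
    for row, b in zip(weights, bias):
        z = sum(w * s for w, s in zip(row, squeezed)) + b
        gates.append(1.0 / (1.0 + math.exp(-z)))

    # Scale: multiply every activation in a channel by that channel's gate.
    reweighted = [[g * x for x in ch] for g, ch in zip(gates, features)]
    return reweighted, gates


# Usage: a strongly activated channel receives a larger gate than an idle one.
out, gates = channel_gate([[1.0, 3.0], [0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

With these toy values the first channel (mean 2.0) gets a gate of about 0.88, while the silent second channel gets 0.5.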

The mechanism processes features through two parallel branches:

- Spatial Frequency Attention (SFA): This branch captures both local and global dependencies by transforming input features into a frequency representation. Because it preserves crucial spatial information, the SFA branch amplifies relevant features while filtering out noise.

- Channel Transposed Attention: This branch models the relationships between channels, refining cross-channel features to improve detection accuracy, especially in the cluttered scenes common in autonomous driving.
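The two parallel branches can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not the published C3CTA: the frequency branch applies a fixed low-pass mask in place of learned frequency attention; the channel branch uses the features themselves as queries, keys, and values instead of learned projections, and computes a C x C attention map across channels (the "transposed" attention idea also used in Restormer); and summing both branches with a residual connection is an assumed fusion rule. All function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along one axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def channel_transposed_attention(x, temperature=1.0):
    """Attention across channels: the map is (C, C), not (H*W, H*W).

    x: array of shape (C, H, W). Q = K = V = the features themselves here;
    a real module would use learned projections and a learned temperature.
    """
    c, h, w = x.shape
    tokens = x.reshape(c, h * w)  # each channel becomes one token of length H*W
    # L2-normalize each channel so dot products act like cosine similarity.
    q = tokens / (np.linalg.norm(tokens, axis=1, keepdims=True) + 1e-6)
    attn = softmax(q @ q.T / temperature, axis=-1)  # shape (C, C), rows sum to 1
    return (attn @ tokens).reshape(c, h, w)


def spatial_frequency_filter(x, keep_ratio=0.25):
    """Frequency-domain branch: keep low spatial frequencies, drop the rest.

    The fixed low-pass mask stands in for a learned frequency attention.
    """
    c, h, w = x.shape
    spec = np.fft.fft2(x, axes=(-2, -1))
    fy = np.abs(np.fft.fftfreq(h))[:, None]  # cycles per pixel along H
    fx = np.abs(np.fft.fftfreq(w))[None, :]  # cycles per pixel along W
    mask = (fy <= keep_ratio) & (fx <= keep_ratio)  # broadcasts over channels
    return np.real(np.fft.ifft2(spec * mask, axes=(-2, -1)))


def dual_branch(x):
    # Run both branches in parallel and fuse by summation plus a residual (assumed).
    return x + spatial_frequency_filter(x) + channel_transposed_attention(x)
```

The low-pass mask removes fine, pixel-level oscillations (a crude form of noise suppression), while the channel branch mixes each channel with the channels most similar to it.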

Diffusion Focusing Pyramid Network

The architectural changes extend beyond individual modules. The Diffusion Focusing Pyramid Network (DFPN) is structured to process multi-scale features effectively: its Focus Features (FoFe) module captures fine-grained context from inputs at different resolutions.
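One way to picture "focus, then diffuse" is the NumPy sketch below, which operates on three pyramid levels (called P3, P4, and P5 here, at 32x32, 16x16, and 8x8). It is a hypothetical simplification, not the DFPN's actual layer layout: focusing is modeled as resizing every level to the middle resolution and averaging, and diffusion as resizing that focused map back to each level and adding it residually. Average pooling, nearest-neighbour upsampling, and plain averaging are all assumed choices; a real network would use learned convolutions.

```python
import numpy as np


def avg_pool2(x):
    """2x2 average pooling on a (C, H, W) array with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def upsample2(x):
    """2x nearest-neighbour upsampling on a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def focus_and_diffuse(p3, p4, p5):
    """Hypothetical focus-then-diffuse step over three pyramid levels.

    p3: (C, 2H, 2W), p4: (C, H, W), p5: (C, H/2, W/2).
    Focus: bring every level to P4's resolution and average them.
    Diffuse: send the focused map back to every level and add it residually,
    so each scale receives context gathered from all three.
    """
    focused = (avg_pool2(p3) + p4 + upsample2(p5)) / 3.0
    return (p3 + upsample2(focused),
            p4 + focused,
            p5 + avg_pool2(focused))


# Usage: context that exists only in P4 ends up in P3 and P5 as well.
c = 4
n3, n4, n5 = focus_and_diffuse(np.zeros((c, 32, 32)),
                               np.full((c, 16, 16), 3.0),
                               np.zeros((c, 8, 8)))
```

In this toy run P3 and P5 start empty, yet after one step both contain the context contributed by P4, which is the behaviour the diffusion mechanism is meant to provide.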

The FoFe module combines small-kernel convolutions with depthwise separable convolutions to capture both local detail and broader context. This balance lets YOLO-FLC maintain detection performance across scales, which matters because driving scenes contain targets of very different sizes.
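The cost argument behind depthwise separable convolutions can be checked with simple arithmetic. The sketch below counts convolution weights (biases omitted) for a standard convolution and for a depthwise convolution followed by a 1x1 pointwise convolution. The 256-channel width and the kernel sizes are illustrative assumptions; the source does not give FoFe's actual configuration.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise k x k conv followed by a 1x1 pointwise conv (bias omitted)."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1x1 convolution mixes information across channels
    return depthwise + pointwise


for k in (3, 5):
    print(k, standard_conv_params(256, 256, k), depthwise_separable_params(256, 256, k))
```

With 256 channels and a 3x3 kernel, the standard convolution needs 589,824 weights versus 67,840 for the depthwise separable version. Growing the kernel to 5x5 raises the standard count to 1,638,400 but the separable count only to 71,936, which is why depthwise convolutions make wider context comparatively cheap.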

LSCD: Redefining Detection Heads

The Lightweight Shared Convolutional Detection Head (LSCD) is designed for efficiency. By combining a hierarchical shared-convolution structure with multi-scale feature fusion, it reduces computational cost without sacrificing detection accuracy.

- Multi-Scale Feature Fusion:

The LSCD fuses features from different resolutions, combining information at different granularities. This fusion captures information about objects across a wide range of scales, improving performance on both small and large targets.

- Shared Convolutional Layers:

A defining feature of the LSCD is its shared convolutional architecture, which reduces parameter count and computational load. Whereas conventional designs use independent convolutional layers for each detection head, the LSCD lets multiple heads share convolution weights, streamlining processing while preserving detection accuracy.
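The savings from weight sharing, and the role of a per-level scale layer, can be sketched in plain Python. `head_params` counts the weights of a single k x k stem convolution applied to several feature levels, with and without sharing. `shared_head` applies one weight vector to every level and then a learnable per-level multiplier, in the spirit of the per-level scale used by shared heads such as FCOS. The stem shape, channel count, function names, and weight values are hypothetical and do not describe the actual LSCD layers.

```python
def head_params(c, k, levels, shared):
    """Conv weights in a detection-head stem applied to `levels` feature maps.

    Each stem is one k x k conv with c input and c output channels (bias omitted).
    Independent heads need one stem per level; a shared head reuses a single stem.
    """
    per_stem = k * k * c * c
    return per_stem if shared else per_stem * levels


def shared_head(level_features, shared_weight, scales):
    """Apply one shared 1x1, single-output head to every level, then a per-level scale.

    level_features: list of levels; each level is a list of length-C feature vectors.
    shared_weight:  length-C weight vector reused by every level.
    scales:         one learnable multiplier per level.
    """
    outputs = []
    for feats, s in zip(level_features, scales):
        raw = [sum(w * f for w, f in zip(shared_weight, vec)) for vec in feats]
        outputs.append([s * r for r in raw])
    return outputs


# Usage: identical features and identical shared weights, but different level scales.
print(shared_head([[[1.0, 2.0]], [[1.0, 2.0]]], [0.5, 0.25], [1.0, 4.0]))
```

With 128 channels, a 3x3 stem, and three levels, independent stems need 442,368 weights while a shared stem needs 147,456, a threefold reduction. Because the shared weights cannot specialize per level, the scale multipliers are what let each level's outputs differ in magnitude.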

Conclusion: A Step Towards Robust Autonomous Systems

YOLO-FLC is a meaningful step toward reliable object detection in autonomous driving. Its C3CTA module, DFPN neck, and LSCD head directly target the challenges of occlusion and small-object detection. These enhancements lay groundwork for future research in this critical field and for safer, more efficient autonomous systems.