Covariance Properties of Spatio-Temporal Receptive Fields

Understanding Spatio-Temporal Transformations

In computer vision and neuroscience, analyzing dynamic input such as video sequences requires working with spatio-temporal images. These carry both spatial and temporal information, so they describe how moving objects evolve over time. Here we examine the covariance properties of such images, and of the receptive fields that process them, under geometric image transformations such as translations and scalings.

Geometric Image Transformations

The dynamic relationship between two video sequences \( f' \) and \( f \) can be expressed mathematically through transformations of their spatial and temporal coordinates. Specifically, consider the transformations:

- Spatial transformation:

\[
x' = A \cdot (x + u \cdot t)
\]

- Temporal transformation:

\[
t' = S_t \cdot t
\]

Where:

- \( A \) is the matrix of a spatial affine (linear) transformation.

- \( u \) is a velocity vector: it multiplies \( t \), so it adds a displacement that grows with time and models relative motion.

- \( S_t \) is the scaling factor in the temporal domain.

Together, these transformations describe how spatial deformations, relative motion, and changes in the speed of events alter a video sequence.

Spatio-Temporal Scale-Space Representations

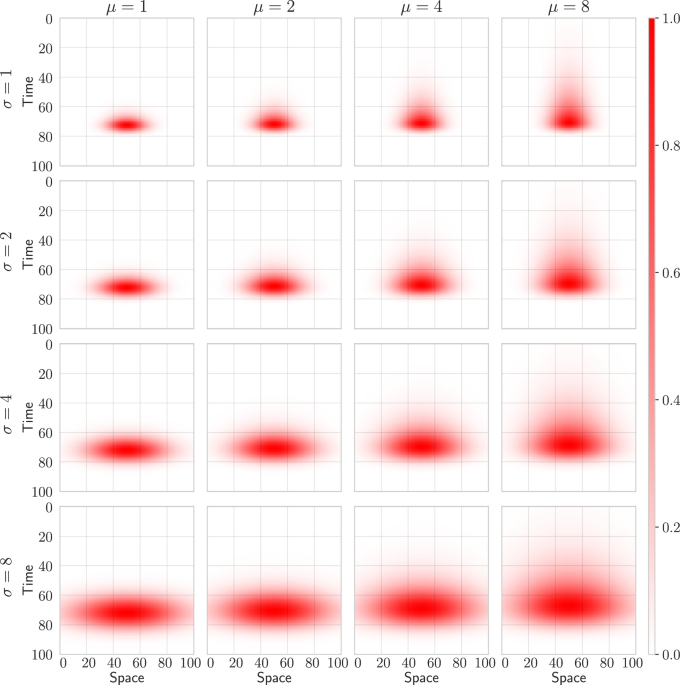

To analyze the video sequences, we introduce spatio-temporal scale-space representations \( L' \) and \( L \) of \( f' \) and \( f \). These representations are obtained by convolving each sequence with a smoothing kernel, which exposes image structure at a chosen spatial and temporal scale.

An example of such a representation is given by the convolution of \( f \) and \( f' \) with a kernel \( T(x, t;\, \Sigma, \tau, v) \):

\[
T(x, t;\, \Sigma, \tau, v) = g(x - vt;\, \Sigma)\, h(t;\, \tau)
\]

In this equation:

- \( g(x;\, \Sigma) \) is a spatial Gaussian kernel whose covariance matrix \( \Sigma \) determines its size, elongation, and orientation.

- \( h(t;\, \tau) \) is a scale-covariant temporal window function that controls the smoothing over time at temporal scale \( \tau \).

- \( v \) is the image velocity to which the receptive field is tuned: the spatial kernel is centered at \( x = vt \), so it follows structures moving with velocity \( v \).
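The kernel definition above can be sketched numerically. The following is a minimal NumPy illustration, not a reference implementation. The function names are hypothetical, and the choice of temporal window is an assumption: the text only requires \( h \) to be scale-covariant, so the sketch uses a one-sided exponential with time constant \( \sqrt{\tau} \), treating \( \tau \) as the temporal variance.

```python
import numpy as np

def spatial_gaussian(x, Sigma):
    """2-D zero-mean Gaussian g(x; Sigma) with covariance matrix Sigma."""
    x = np.asarray(x, dtype=float)
    Sigma = np.asarray(Sigma, dtype=float)
    # Quadratic form x^T Sigma^{-1} x, evaluated for every point in x.
    quad = np.einsum("...i,ij,...j->...", x, np.linalg.inv(Sigma), x)
    norm = 2.0 * np.pi * np.sqrt(np.linalg.det(Sigma))
    return np.exp(-0.5 * quad) / norm

def temporal_window(t, tau):
    """ASSUMPTION: one-sided exponential window with time constant sqrt(tau)."""
    t = np.asarray(t, dtype=float)
    mu = np.sqrt(tau)
    # Zero for t < 0, so the window only looks at the past (causal).
    return np.where(t >= 0.0, np.exp(-np.maximum(t, 0.0) / mu) / mu, 0.0)

def st_kernel(x, t, Sigma, tau, v):
    """T(x, t; Sigma, tau, v) = g(x - v t; Sigma) * h(t; tau)."""
    x = np.asarray(x, dtype=float)
    v = np.asarray(v, dtype=float)
    t = np.asarray(t, dtype=float)
    shifted = x - v * t[..., None]  # spatial kernel follows the line x = v t
    return spatial_gaussian(shifted, Sigma) * temporal_window(t, tau)

# Illustrative parameter values (hypothetical).
Sigma = np.array([[2.0, 0.5], [0.5, 1.0]])
tau = 0.25
v = np.array([1.0, -0.5])

on_track = st_kernel(v * 0.3, 0.3, Sigma, tau, v)           # at x = v t
off_track = st_kernel(v * 0.3 + [0.5, 0.0], 0.3, Sigma, tau, v)
before_onset = st_kernel([0.0, 0.0], -0.1, Sigma, tau, v)   # t < 0
```

At a fixed time the kernel is largest on the trajectory \( x = vt \), and it vanishes for negative \( t \) because the assumed temporal window is one-sided.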

Relationships Under Transformations

A crucial aspect of spatio-temporal representations is how they relate to each other under transformations. Covariance means that when the input is transformed, the representation transforms in a matching way: the representation of the transformed sequence equals the representation of the original one, provided the receptive field parameters are transformed accordingly.

This can be mathematically described as:

\[
L'(x', t';\, \Sigma', \tau', v') = L(x, t;\, \Sigma, \tau, v)
\]

where the parameters of the receptive fields in the two domains are related as follows:

- Covariance matrices:

\[
\Sigma' = A \cdot \Sigma \cdot A^T
\]

- Temporal scales:

\[
\tau' = S_t^2 \cdot \tau
\]

- Velocities:

\[
v' = \frac{A \cdot v + u}{S_t}
\]

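Because the transformed parameters again describe a kernel of the same family, transformations can be cascaded: a second transformation maps \( (\Sigma', \tau', v') \) to yet another member of the family, so the form of the representation is preserved.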
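The parameter relations above translate directly into code. The sketch below applies the three formulas exactly as stated in the text and then checks the cascading behavior for \( \Sigma \) and \( \tau \). The function name `transform_parameters` and all numerical values are hypothetical, chosen only for illustration.

```python
import numpy as np

def transform_parameters(Sigma, tau, v, A, u, S_t):
    """Map receptive field parameters (Sigma, tau, v) to (Sigma', tau', v').

    Uses the relations stated in the text:
        Sigma' = A Sigma A^T,  tau' = S_t^2 tau,  v' = (A v + u) / S_t
    """
    A = np.asarray(A, dtype=float)
    Sigma_p = A @ np.asarray(Sigma, dtype=float) @ A.T
    tau_p = S_t**2 * tau
    v_p = (A @ np.asarray(v, dtype=float) + np.asarray(u, dtype=float)) / S_t
    return Sigma_p, tau_p, v_p

# Illustrative values (hypothetical).
Sigma = np.eye(2)
tau = 1.0
v = np.array([1.0, 0.0])

A1, u1, S1 = np.array([[2.0, 0.0], [0.0, 1.0]]), np.array([0.5, -1.0]), 2.0
A2, u2, S2 = np.array([[1.0, 0.5], [0.0, 1.0]]), np.array([0.0, 0.25]), 0.5

first = transform_parameters(Sigma, tau, v, A1, u1, S1)
second = transform_parameters(*first, A2, u2, S2)

# Cascading: for Sigma and tau, two successive transformations act like a
# single one with matrix A2 @ A1 and temporal factor S2 * S1.
A21 = A2 @ A1
Sigma_combined = A21 @ Sigma @ A21.T
tau_combined = (S2 * S1) ** 2 * tau
```

In this sketch, `second[0]` agrees with `Sigma_combined` and `second[1]` with `tau_combined`, which is the cascading property for the spatial covariance matrix and the temporal scale.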

Commutative Properties of Transformations

This can be summarized in a commutative diagram: transforming the video and then computing its scale-space representation gives the same result as computing the representation first and then transforming it, provided the receptive field parameters are matched. This insight is pivotal, as it lets researchers and engineers use one standard approach when analyzing temporal dynamics and spatial properties.

Covariance in Image Transformations

Lindeberg and Gårding's research established a relationship between the scale-space representations of two images that are related by a linear (affine) transformation. The equations governing this relationship describe how the model parameters must adapt under the transformation, which is the core of the notion of covariance in image transformations.

Practical Applications

These analytical tools and principles can be harnessed in various applications:

- Object Tracking: Enhancing algorithms that identify and follow objects across frames.

- Motion Analysis: Enabling a better understanding of movement dynamics in videos, crucial for fields like robotics.

- Neural Encoding: Providing insights into how neurons process visual inputs over time.

Temporal Scale Covariance in Models

Several models, particularly leaky integrator models, exhibit temporal scale covariance. This means that when the input is sped up or slowed down by a factor \( S_t \), the model produces the correspondingly rescaled response, provided its time constant is scaled by the same factor.

A leaky integrator corresponds to a one-sided temporal kernel with exponential decay. It smooths the signal over time while using only present and past input, which preserves causality, a vital property in real-time processing.
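Temporal scale covariance of a leaky integrator can be checked with a few lines of plain Python. This is a minimal sketch under stated assumptions: the integrator is discretized with forward Euler, the time constant is called `mu` (for an exponential kernel the temporal variance is \( \mu^2 \), so scaling \( \mu \) by \( S_t \) matches \( \tau' = S_t^2 \cdot \tau \)), and the input signal and all numbers are hypothetical.

```python
import math

def leaky_integrator(signal, dt, mu):
    """Forward-Euler leaky integrator: dL/dt = (f - L) / mu, with L(0) = 0."""
    state = 0.0
    out = []
    for f in signal:
        state += (dt / mu) * (f - state)
        out.append(state)
    return out

# One set of samples read on two time axes:
# f at times t_n = n * dt, and f' at times t'_n = S_t * t_n.
dt, mu, S_t = 1e-3, 0.05, 3.0
samples = [math.sin(2.0 * math.pi * 5.0 * n * dt) for n in range(1000)]

L = leaky_integrator(samples, dt, mu)                     # original sequence
L_matched = leaky_integrator(samples, S_t * dt, S_t * mu)  # mu scaled by S_t
L_fixed = leaky_integrator(samples, S_t * dt, mu)          # mu left unchanged

matched_gap = max(abs(a - b) for a, b in zip(L, L_matched))
unmatched_gap = max(abs(a - b) for a, b in zip(L, L_fixed))
```

With the time constant scaled along with the time axis, the two responses coincide sample by sample up to floating-point error. With the time constant left unchanged, they differ noticeably, which is what a non-covariant model would do.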

Expanding Upon Neuron Models

Leaky integrate-and-fire (LIF) models extend this discussion to spiking neurons, the biologically inspired counterparts of the units in artificial neural networks. These models offer a framework for simulating neuronal dynamics and can operate over multiple temporal scales.

Experimental setups, including those using event-based datasets, have supported these properties and indicate that they hold up when simulating real-world phenomena.
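For readers unfamiliar with LIF neurons, the following plain-Python sketch shows the basic mechanism: a leaky integrator whose state is reset whenever it crosses a threshold. It is an illustration under assumptions, not the model used in any particular study. The function name, the forward-Euler update, the reset rule, and all parameter values are hypothetical.

```python
def simulate_lif(current, dt=1e-3, tau_mem=0.02, v_th=1.0, v_reset=0.0):
    """Forward-Euler leaky integrate-and-fire neuron.

    Between spikes the membrane potential follows dv/dt = (I - v) / tau_mem.
    When v reaches v_th the neuron emits a spike and v is set to v_reset.
    Returns one boolean per time step (True where a spike occurred).
    """
    v = 0.0
    spikes = []
    for inp in current:
        v += (dt / tau_mem) * (inp - v)
        if v >= v_th:
            spikes.append(True)
            v = v_reset
        else:
            spikes.append(False)
    return spikes

# Constant input above threshold drives regular spiking;
# constant input below threshold never reaches v_th.
strong = simulate_lif([1.5] * 200)
weak = simulate_lif([0.5] * 200)

# Same samples on a time axis stretched by S_t, with tau_mem scaled to match.
S_t = 2.0
stretched = simulate_lif([1.5] * 200, dt=S_t * 1e-3, tau_mem=S_t * 0.02)
```

In this sketch the stretched simulation produces the same spike pattern step for step, because only the ratio `dt / tau_mem` enters the update. That is the discrete counterpart of scaling the membrane time constant together with the temporal axis.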

Dataset and Model Training

The datasets used in these analyses consist of simulated, sparse events resembling dynamic scenes. Such datasets allow for comprehensive testing of the covariance properties. The training processes involve structured methodologies, ensuring robustness through the adaptation of convolutional blocks, tailored to respond to the diverse dynamics observed in the spatial and temporal domains.

Exploring Model Architectures

Different model architectures, from spatio-temporal receptive fields expanded across parallel channels to those utilizing advanced activation functions, enhance the capacity to process and analyze visual data effectively.

By employing backpropagation and sampling techniques for parameter initialization, the models ensure stability and efficiency, allowing them to maintain accuracy across transformations.

Taken together, the theoretical and practical aspects of covariance in spatio-temporal receptive fields deepen our understanding of visual perception and action, and they extend what technology can simulate of these dynamics. From computer vision to neuroscience, these principles mirror the organization of our visual systems and point toward advances in artificial intelligence and cognitive modeling.