Hybrid Deep Learning Model for Brain Tumor Classification: A Comprehensive Analysis

In recent years, hybrid deep learning (DL) models have gained significant traction in medicine, particularly for classifying brain tumors from MRI images. This approach combines the strengths of several neural network architectures to improve diagnostic accuracy, reduce misclassification, and enhance the interpretability of model predictions.

Model Performance Overview

The proposed hybrid DL model was assessed on three fronts: its training history, its classification accuracy on a designated test set, and the interpretability of its predictions via Grad-CAM visualizations. This multi-faceted evaluation not only showcases the model’s effectiveness but also underscores its potential utility in clinical settings. Comparative analyses against previous models indicated that the proposed system performs better at brain tumor classification (BTC).

Training Progress

Tracking the model’s training progress is crucial for understanding its capabilities. The model was trained for 20 epochs on 5,688 images and validated on 632 images, a 90/10 split of 6,320 images in total. Loss and accuracy were monitored at each epoch to evaluate how well the model learned.

Loss and Accuracy Trends

The training and validation loss plots show a rapid decrease in loss. Training loss starts at 0.8 and drops below 0.1 by the 15th epoch, indicating that the model fits the training data effectively. Validation loss begins at 0.4 and, after some fluctuations, steadies at 0.1. Because validation loss tracks training loss instead of diverging from it, the model appears to be capturing meaningful patterns in the data and not overfitting.

Training accuracy surged from approximately 65% to nearly 100% within the first ten epochs, while validation accuracy stabilized around 96%. This close alignment between training and validation accuracy (a gap of fewer than 5 percentage points) suggests robust generalization.
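The train/validation comparison described above can be sketched as a small check on per-epoch accuracy histories. This is a minimal illustration: the function name `generalization_gap` is hypothetical, and the history values are placeholders chosen to resemble the reported trend, not the study's logged values.

```python
def generalization_gap(train_acc, val_acc):
    """Per-epoch gap (train minus validation) in percentage points."""
    if len(train_acc) != len(val_acc):
        raise ValueError("histories must cover the same epochs")
    return [round((t - v) * 100, 2) for t, v in zip(train_acc, val_acc)]


# Illustrative placeholder histories (as fractions), NOT the study's logs.
train_acc = [0.65, 0.90, 0.99]
val_acc = [0.80, 0.93, 0.96]

gaps = generalization_gap(train_acc, val_acc)
final_gap = gaps[-1]

# A small positive final gap is the pattern the text describes.
print(gaps, final_gap < 5.0)
```

A gap that keeps widening over later epochs, with validation accuracy flat or falling, would instead point toward overfitting.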

Classification Performance

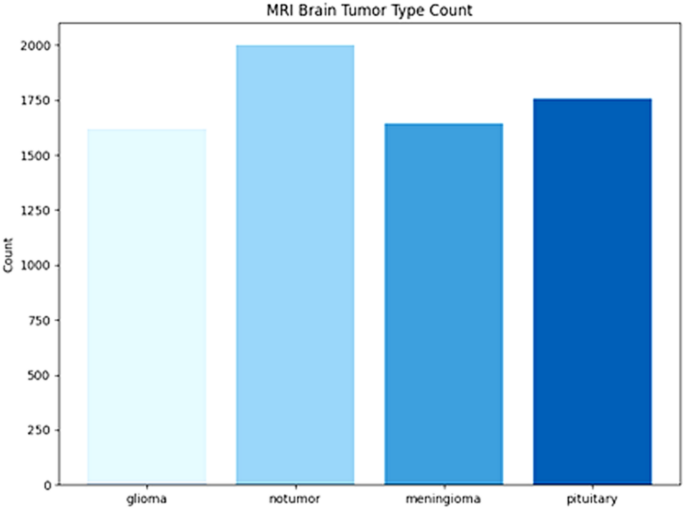

To evaluate performance on unseen data, the model was tested on a separate dataset consisting of 703 images across four categories: No Tumor, Glioma, Meningioma, and Pituitary Tumor. The classification report comprised detailed metrics including precision, recall, and F1-scores.

Metrics Explained

- Precision measures the proportion of positive predictions that are correct.
- Recall measures the proportion of actual positive instances that the model identifies.
- F1-Score is the harmonic mean of precision and recall, which is especially useful when classes are imbalanced.
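The three metrics above can all be derived from a confusion matrix. The sketch below is a minimal, dependency-free illustration: `per_class_metrics` is a hypothetical helper, and the matrix contains made-up counts, not the study's results.

```python
def per_class_metrics(cm):
    """Precision, recall, and F1 per class from a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum: TP + FP
        actual_k = sum(cm[k])                          # row sum: TP + FN
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics


# Toy 3-class matrix with made-up counts (not the study's data).
cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]

results = per_class_metrics(cm)
for k, (p, r, f1) in enumerate(results):
    print(f"class {k}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

A class with precision and recall both equal to 1.000, like class 2 here, has no false positives and no false negatives.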

The model achieved an average accuracy of 99%. Precision and recall for the No Tumor category both reached 1.000, meaning that no scan was wrongly predicted as No Tumor and no No Tumor scan was missed. Recall was less uniform elsewhere, with some discrepancies attributed to imaging artifacts that mimic tumor features.

Confusion Matrix and Detailed Insights

The confusion matrix offers a granular view of the model’s predictive performance. A strongly populated main diagonal indicates proficient classification, while the few off-diagonal entries mark occasional misclassifications, notably between gliomas and pituitary tumors, which can look similar on MRI scans.
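As a rough illustration of how such a matrix is built and read, the sketch below tallies true and predicted labels and then lists the off-diagonal cells by size. The four class names come from the text; the label lists are made-up toy data, and the helper names are hypothetical.

```python
CLASSES = ["No Tumor", "Glioma", "Meningioma", "Pituitary Tumor"]


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i][j] = number of samples with true class i predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def top_confusions(cm):
    """Non-zero off-diagonal cells as (count, true, predicted), largest first."""
    n = len(cm)
    pairs = [
        (cm[i][j], i, j)
        for i in range(n)
        for j in range(n)
        if i != j and cm[i][j] > 0
    ]
    return sorted(pairs, reverse=True)


# Toy labels (class indices into CLASSES), NOT the study's predictions.
y_true = [0, 0, 1, 1, 1, 2, 2, 3, 3, 3]
y_pred = [0, 0, 1, 3, 3, 2, 2, 3, 3, 1]

cm = confusion_matrix(y_true, y_pred, len(CLASSES))
confusions = top_confusions(cm)
for count, t, p in confusions:
    print(f"{CLASSES[t]} predicted as {CLASSES[p]}: {count}")
```

Sorting the off-diagonal cells makes the dominant confusion pair visible at a glance, which is how a pattern such as glioma versus pituitary tumor would surface.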

Confusion matrices from the training, validation, and test sets maintained a consistent pattern, confirming the model’s learning effectiveness and suggesting that it effectively navigated the complexities of different tumor morphologies.

Receiver Operating Characteristic (ROC) Curves

ROC curves further supported the model’s efficacy, with a reported Area Under the Curve (AUC) of 1.00 for every category. An AUC of 1.00 means that, for each class, the model’s scores rank positive cases above negative cases essentially perfectly. It describes separability across all decision thresholds and does not by itself guarantee error-free predictions at a single operating point, which is consistent with the 99% accuracy and the occasional misclassifications noted above.
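One way to read a per-class AUC is as a ranking statistic: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The sketch below computes one-vs-rest AUC that way on toy probabilities. The helper names and data are assumptions for illustration only; the pairwise loop is quadratic and meant for small examples, not as the evaluation code used in the study.

```python
def binary_auc(labels, scores):
    """AUC as P(score of random positive > score of random negative).

    Ties count as one half (Mann-Whitney U formulation).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(y_true, probs, n_classes):
    """Per-class AUC, treating each class in turn as the positive class."""
    return [
        binary_auc([1 if y == k else 0 for y in y_true], [row[k] for row in probs])
        for k in range(n_classes)
    ]


# Toy two-class example (made-up probabilities, not the study's outputs).
y_true = [1, 1, 0, 0]
probs = [[0.10, 0.90], [0.55, 0.45], [0.70, 0.30], [0.80, 0.20]]

aucs = one_vs_rest_auc(y_true, probs, n_classes=2)
y_pred = [max(range(2), key=lambda k: row[k]) for row in probs]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(aucs, accuracy)
```

In this toy case both AUC values are 1.0 while argmax accuracy is 0.75, because the second sample is ranked correctly yet falls on the wrong side of the decision boundary.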

Grad-CAM Visualizations

Grad-CAM visualizations serve a dual purpose: they help verify that predictions rest on sensible image evidence, and they improve interpretability, a key requirement in medical AI applications. These overlays indicate the regions of MRI scans that contributed most to model predictions, which can strengthen clinician trust. Areas highlighted in red and yellow corresponded with tumor boundaries, suggesting that the model focuses on clinically relevant features.
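The core Grad-CAM computation is compact: average the gradients of the class score over each feature map to get one weight per channel, take the weighted sum of the feature maps, and apply a ReLU. The sketch below shows only that arithmetic on tiny hand-written arrays. In practice the activations and gradients would come from the last convolutional layer of the trained network via a framework's automatic differentiation; the layer choice, the helper name `grad_cam`, and the toy values are assumptions, not details from the study.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients.

    activations, gradients: lists of K maps, each an H x W nested list.
    gradients[k][i][j] is d(class score) / d(activations[k][i][j]).
    Returns an H x W map scaled to [0, 1].
    """
    k_maps = len(activations)
    h, w = len(activations[0]), len(activations[0][0])

    # Channel weights: global average of each gradient map.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]

    # Weighted sum over channels, then ReLU to keep positive evidence only.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j] for k in range(k_maps)))
            for j in range(w)
        ]
        for i in range(h)
    ]

    # Normalize so the strongest location equals 1.0.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy input: 2 channels of size 2 x 2 (made-up values).
acts = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
grads = [
    [[1.0, 1.0], [1.0, 1.0]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 argues against it
]

heatmap = grad_cam(acts, grads)
print(heatmap)
```

The resulting map has the spatial resolution of the feature maps, so it is normally upsampled to the image size before being overlaid as the red and yellow regions described above.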

Robustness Against Class Imbalance

Despite class imbalance in the dataset, the hybrid model performed reliably across all four diagnostic categories. This robustness was supported by careful data augmentation, which is also intended to improve generalizability in real-world scenarios.
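The text does not list the specific augmentations used, so the following is only a generic sketch of the idea: produce label-preserving variants of each image so that under-represented classes contribute more varied training examples. The transforms here (horizontal flips and 90-degree rotations) and all function names are placeholder assumptions, not the study's pipeline.

```python
import random


def hflip(img):
    """Mirror a 2-D image (nested list of pixel values) left to right."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate a 2-D image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img, rng):
    """Apply a random flip and a random number of quarter turns.

    Placeholder transforms; the study's actual augmentations are not specified.
    """
    out = [row[:] for row in img]  # work on a copy
    if rng.random() < 0.5:
        out = hflip(out)
    for _ in range(rng.randrange(4)):
        out = rot90(out)
    return out


# Toy 2 x 2 "image" with made-up pixel values.
image = [[1, 2], [3, 4]]
rng = random.Random(0)  # seeded for reproducibility
variants = [augment(image, rng) for _ in range(4)]
for v in variants:
    print(v)
```

Each variant rearranges the same pixels, so the class label stays valid while the network sees the anatomy in a different orientation.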

Discussion on Model Strengths and Limitations

While the model achieved commendable metrics, several limitations warrant attention. The training dataset was sourced from a single location, which limits external validation and generalizability. Even with high AUC scores, misclassification remains possible in complex cases and must be taken into account. The interpretability provided by Grad-CAM is valuable, yet its spatial localization needs to improve before it can support precise anatomical delineation in surgical planning.

Future enhancements may also explore transformer-based architectures or lightweight alternatives for scalability in resource-constrained environments.

This comprehensive evaluation highlights the promising potential of hybrid DL models in medical imaging, particularly in brain tumor classification. While limitations exist, the contributions of the proposed model offer a solid foundation for future research and clinical applications.