Data Presentation in Solar Power Generation Forecasting

Overview of the Dataset

In this analysis, we work with a dataset of 4,200 historical records of solar power generation. Each record contains 20 meteorological and astronomical input features and one target output: the generated power, measured in kilowatts (kW). The inputs span the main drivers of solar energy production and include:

- Temperature

- Humidity

- Pressure

- Precipitation

- Cloud cover at various altitudes

- Solar radiation

- Wind speed and direction at different heights

- Solar angle of incidence

- Solar zenith and azimuth angles

These features provide a rich context for analyzing and predicting solar power generation dynamics.

Correlation Matrix Analysis

To explore the relationships between the input features and the generated power, we computed a correlation matrix, shown as a heatmap in Figure 2. The heatmap displays both positive and negative linear correlations among all variables. Shortwave radiation and zenith angle show the strongest correlations with output power. Humidity and azimuth show moderate correlations, which makes them relevant secondary predictors of solar energy production.

The matrix also reveals strong inter-correlations among the wind variables at different heights, indicating redundancy among these features. This redundancy creates an opportunity for feature reduction: removing redundant or low-impact inputs can make the modeling process more efficient without discarding much information.
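A minimal NumPy sketch of this analysis is shown below. It assumes the inputs sit in a 2-D array with one column per feature and the generated power in a separate vector. It ranks features by absolute correlation with the output and flags strongly inter-correlated input pairs as redundancy candidates. The feature names, the synthetic data, and the 0.9 redundancy threshold are hypothetical choices for illustration, not values from the study.

```python
import numpy as np

def correlation_analysis(X, y, names, redundancy_threshold=0.9):
    """Rank features by |Pearson r| with the target and flag highly inter-correlated pairs."""
    # Append the target as the last column so one call yields the full matrix (the heatmap data)
    corr = np.corrcoef(np.column_stack([X, y]), rowvar=False)
    target_corr = corr[:-1, -1]
    # Features ordered by absolute linear correlation with the output
    ranking = sorted(zip(names, target_corr), key=lambda p: abs(p[1]), reverse=True)
    # Input pairs whose mutual correlation exceeds the threshold are candidates for removal
    redundant = [
        (names[i], names[j], corr[i, j])
        for i in range(len(names))
        for j in range(i + 1, len(names))
        if abs(corr[i, j]) > redundancy_threshold
    ]
    return corr, ranking, redundant

# Hypothetical synthetic data: two nearly collinear wind columns and one dominant driver
rng = np.random.default_rng(0)
n = 1000
radiation = rng.uniform(0, 1000, n)
wind_10m = rng.normal(5, 2, n)
wind_80m = 1.3 * wind_10m + rng.normal(0, 0.1, n)
humidity = rng.uniform(20, 100, n)
X = np.column_stack([radiation, wind_10m, wind_80m, humidity])
power = 0.004 * radiation - 0.005 * humidity + rng.normal(0, 0.1, n)
names = ["shortwave_radiation", "wind_speed_10m", "wind_speed_80m", "humidity"]

corr, ranking, redundant = correlation_analysis(X, power, names)
print(ranking[0][0])  # shortwave_radiation
print(redundant)
```

In this toy data only the two wind columns exceed the threshold, which mirrors the kind of redundancy described above.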

Feature Selection Using Lasso Regression

Beyond this correlation assessment, we used Lasso regression for feature selection, taking the magnitude of its coefficients as a measure of each independent variable's importance for predicting the target output. As shown in Figure 3, shortwave radiation emerged as the most important feature for forecasting solar power generation. Mean sea level pressure, wind speed at 80 m, and wind direction at 80 m were also identified as influential variables.

Conversely, the angle of incidence and azimuth received very small coefficients, indicating that they contribute little to the predictive model under Lasso’s sparsity constraint. Azimuth therefore has a moderate pairwise correlation with output but a small Lasso coefficient. This is consistent with its information being largely captured by other inputs. These results inform subsequent model design: removing or reducing such low-impact features can shorten training and may improve model generalization.
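The coefficient-based selection can be sketched with a small coordinate-descent Lasso solver in NumPy. This is an illustrative implementation, not the study's code. It assumes the scikit-learn-style objective (1/(2n))‖y − Xw‖² + α‖w‖₁ with standardized inputs. Standardizing first matters because Lasso coefficients are comparable across features only when the features share a common scale. The value of α, the synthetic data, and the 1e-6 selection threshold are hypothetical.

```python
import numpy as np

def soft_threshold(rho, alpha):
    # Shrink towards zero by alpha; values inside [-alpha, alpha] become exactly 0
    return np.sign(rho) * max(abs(rho) - alpha, 0.0)

def lasso_coordinate_descent(X, y, alpha, max_iter=1000, tol=1e-8):
    """Minimize (1/(2n))||y - Xw||^2 + alpha*||w||_1 on standardized X and centered y."""
    n, p = X.shape
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    yc = y - y.mean()
    w = np.zeros(p)
    residual = yc.copy()  # invariant: residual == yc - Xs @ w
    for _ in range(max_iter):
        max_change = 0.0
        for j in range(p):
            # Correlation of feature j with the partial residual that excludes feature j
            rho = Xs[:, j] @ (residual + Xs[:, j] * w[j]) / n
            # Standardized columns satisfy (1/n)||x_j||^2 = 1, so no extra division is needed
            new_wj = soft_threshold(rho, alpha)
            residual -= Xs[:, j] * (new_wj - w[j])
            max_change = max(max_change, abs(new_wj - w[j]))
            w[j] = new_wj
        if max_change < tol:
            break
    return w

# Hypothetical data: only the first two of five features drive the target
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 5))
y = 3.0 * X[:, 0] + 1.5 * X[:, 1] + rng.normal(0, 0.1, 500)
w = lasso_coordinate_descent(X, y, alpha=0.1)
selected = np.flatnonzero(np.abs(w) > 1e-6)
print(selected)  # [0 1]
```

The irrelevant features end up with coefficients of exactly zero because soft-thresholding clips them. This is the sparsity property that makes Lasso usable for feature selection.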

Deep Learning Algorithms for Prediction

To improve predictive performance, we compared several deep learning (DL) models for estimating solar PV power production. The approach combines data pre-processing and exploratory analysis with a range of DL techniques, as described in the following subsections.

Data Preparation

The workflow begins by loading the pre-processed dataset, which combines historical solar generation data with the correlated meteorological variables. The dataset is partitioned into training, validation, and test sets in a 70:20:10 ratio, so that models are scored on data held out from training. Input features are standardized to a common scale. The data are then restructured into time sequences that preserve temporal dependencies.
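The preparation steps above can be sketched as follows. The sketch makes three assumptions that the text does not specify. The split is chronological (no shuffling). The scaler is fitted on the training portion only, so that validation and test statistics do not leak into training. The window length is a hypothetical 24 time steps. Synthetic arrays stand in for the real 4,200 × 20 feature matrix and the power target.

```python
import numpy as np

def split_70_20_10(array):
    """Split along time without shuffling; integer arithmetic avoids float rounding."""
    n = len(array)
    n_train = n * 7 // 10
    n_val = n * 2 // 10
    return array[:n_train], array[n_train:n_train + n_val], array[n_train + n_val:]

def fit_standard_scaler(train):
    """Per-column mean and standard deviation computed on the training split only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # guard against constant columns
    return mean, std

def make_sequences(features, target, window):
    """Sample i holds the `window` steps before t = i + window; its label is target[t]."""
    X = np.stack([features[t - window:t] for t in range(window, len(features))])
    y = target[window:]
    return X, y

# Synthetic stand-in for the 4,200 x 20 feature matrix and the power target (kW)
rng = np.random.default_rng(42)
features = rng.normal(size=(4200, 20))
power_kw = rng.uniform(0, 50, size=4200)

f_train, f_val, f_test = split_70_20_10(features)
p_train, p_val, p_test = split_70_20_10(power_kw)
mean, std = fit_standard_scaler(f_train)
f_train, f_val, f_test = [(f - mean) / std for f in (f_train, f_val, f_test)]

X_train, y_train = make_sequences(f_train, p_train, window=24)
print(X_train.shape, y_train.shape)  # (2916, 24, 20) (2916,)
```

The same `make_sequences` call would be applied to the validation and test splits. The resulting 3-D arrays have the (samples, time steps, features) layout expected by Keras recurrent and convolutional layers.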

Exploratory Data Analysis and Feature Engineering

Before model training, we conducted an exploratory data analysis (EDA). The correlation matrix both revealed relationships among features and guided feature selection, and variable names were recoded for clarity. Lasso regularization was used to quantify feature importance and reduce dimensionality, and visualizations of the results supported the interpretation of model decisions.

Model Building

A diverse set of deep learning architectures was evaluated for predicting solar power generation. The configurations included:

- Autoencoders: Dense feedforward networks that learn compact latent representations of the input features.

- Recurrent Neural Networks (RNNs): Including SimpleRNN, GRU, and LSTM variants, which capture temporal dependencies.

- Convolutional Neural Networks (CNNs): One-dimensional convolutional layers that extract local temporal patterns.

- Temporal Convolutional Networks (TCNs): Stacks of dilated causal convolutions whose receptive field grows with depth, capturing longer-range temporal dependencies.

- Transformer models: Self-attention-based models that capture complex relationships within input sequences.

All models were implemented with the Keras API on the TensorFlow 2.16 backend.
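To make the TCN idea concrete, the NumPy sketch below implements a single-channel dilated causal convolution and stacks four such layers with dilations 1, 2, 4, and 8. The kernel size, dilations, and ReLU activation are illustrative assumptions, not the study's configuration. In Keras, the corresponding building block is a `Conv1D` layer with `padding="causal"` and a `dilation_rate` argument. A stack with kernel size k and dilations d_1, ..., d_L has a receptive field of 1 + (k − 1)·Σ d_l time steps. When the dilations double from layer to layer, this field grows exponentially with depth.

```python
import numpy as np

def causal_dilated_conv1d(x, kernel, dilation):
    """y[t] = sum_i kernel[i] * x[t - i*dilation]; positions before the start count as zero."""
    pad = (len(kernel) - 1) * dilation
    x_padded = np.concatenate([np.zeros(pad), x])  # left padding only, so no future leakage
    y = np.zeros(len(x))
    for t in range(len(x)):
        for i, w in enumerate(kernel):
            y[t] += w * x_padded[t + pad - i * dilation]
    return y

def tcn_stack(x, kernels, dilations):
    """Apply dilated causal convolutions in sequence, each followed by a ReLU."""
    h = np.asarray(x, dtype=float)
    for kernel, dilation in zip(kernels, dilations):
        h = np.maximum(causal_dilated_conv1d(h, kernel, dilation), 0.0)
    return h

def receptive_field(kernel_size, dilations):
    return 1 + (kernel_size - 1) * sum(dilations)

dilations = [1, 2, 4, 8]
kernels = [np.ones(2)] * len(dilations)

# An impulse at t = 10 reaches exactly receptive_field(2, dilations) outputs, all at t >= 10
impulse = np.zeros(40)
impulse[10] = 1.0
out = tcn_stack(impulse, kernels, dilations)
print(receptive_field(2, dilations))  # 16
print(np.flatnonzero(out))  # [10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25]

# Causality check: changing inputs from t = 30 onward leaves earlier outputs unchanged
rng = np.random.default_rng(0)
x = rng.normal(size=50)
x_future_changed = x.copy()
x_future_changed[30:] += 5.0
random_kernels = [rng.normal(size=2) for _ in dilations]
same_past = np.allclose(tcn_stack(x, random_kernels, dilations)[:30],
                        tcn_stack(x_future_changed, random_kernels, dilations)[:30])
print(same_past)  # True
```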

Training and Evaluation

Models were compiled with the Adam optimizer and trained with callbacks for early stopping and learning-rate reduction. After training, we ran inference on the training, validation, and test sets and transformed the predictions back to their original scale (kW).
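The interaction of the two callbacks can be illustrated with a simplified pure-Python simulation over a sequence of validation losses. In Keras these behaviors come from `keras.callbacks.EarlyStopping` and `keras.callbacks.ReduceLROnPlateau`. The sketch omits their `min_delta` and `cooldown` options, and the patience values, reduction factor, and loss curve are hypothetical. The last helper shows the inverse scaling step, assuming the target was standardized with a training-set mean and standard deviation.

```python
def simulate_callbacks(val_losses, lr=1e-3, stop_patience=5, lr_patience=2,
                       factor=0.5, min_lr=1e-6):
    """Return (last_epoch_run, best_epoch, learning_rate_per_epoch)."""
    best_loss, best_epoch = float("inf"), -1
    wait_stop = wait_lr = 0
    lr_per_epoch = []
    for epoch, loss in enumerate(val_losses):
        lr_per_epoch.append(lr)  # learning rate used during this epoch
        if loss < best_loss:
            # Improvement: remember the best epoch (whose weights would be restored)
            best_loss, best_epoch = loss, epoch
            wait_stop = wait_lr = 0
            continue
        wait_stop += 1
        wait_lr += 1
        if wait_lr >= lr_patience:
            # Plateau: shrink the learning rate for the following epochs
            lr = max(lr * factor, min_lr)
            wait_lr = 0
        if wait_stop >= stop_patience:
            break  # early stopping
    return epoch, best_epoch, lr_per_epoch

def inverse_standardize(pred_scaled, mean, std):
    """Map standardized predictions back to the original units (kW)."""
    return pred_scaled * std + mean

val_losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.73, 0.74, 0.75, 0.6]
last, best, lrs = simulate_callbacks(val_losses)
print(last, best)  # 7 2
print(lrs)
print(inverse_standardize(0.5, mean=20.0, std=8.0))  # 24.0
```

Training stops at epoch 7, before the late improvement at epoch 8 is seen. This is the usual trade-off that the patience setting controls.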

Evaluation metrics, including RMSE, MAE, MAPE, and R², were computed for the training, validation, and test sets. Loss curves and scatter plots provided further insight into model behavior.
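The four metrics can be computed with a few NumPy lines, assuming predictions have already been mapped back to kilowatts. Solar output is zero at night, and MAPE is undefined for those records. The sketch therefore excludes zero observations from the MAPE average only. This is a hypothetical choice, since the text does not state how zeros were handled.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    rmse = np.sqrt(np.mean(err ** 2))
    mae = np.mean(np.abs(err))
    nonzero = y_true != 0  # MAPE is undefined where the observed power is zero
    mape = 100.0 * np.mean(np.abs(err[nonzero] / y_true[nonzero]))
    r2 = 1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    return {"RMSE": rmse, "MAE": mae, "MAPE": mape, "R2": r2}

# Toy example with one night-time (zero) observation and a single 1 kW error
metrics = regression_metrics([0.0, 1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 2.0, 3.0, 5.0])
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
# RMSE: 0.4472
# MAE: 0.2000
# MAPE: 6.2500
# R2: 0.9000
```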

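Monte Carlo (MC) dropout was used to quantify predictive uncertainty on the test set. Dropout remains active at inference time, and the model is run repeatedly on the same inputs. The spread of the resulting predictions then indicates how much confidence each forecast deserves.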
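The sketch below illustrates MC dropout on a tiny two-layer network with random, untrained weights. All sizes, the dropout rate, and the number of passes are hypothetical. A fresh dropout mask is sampled on every forward pass, and the mean and standard deviation across passes serve as the prediction and its uncertainty. With a Keras model, the same effect is typically obtained by calling the model with `training=True` so that its `Dropout` layers stay active.

```python
import numpy as np

def mc_dropout_predict(x, W1, b1, W2, b2, p=0.2, n_passes=100, seed=0):
    """Run n_passes stochastic forward passes with dropout on the hidden layer."""
    rng = np.random.default_rng(seed)
    preds = []
    for _ in range(n_passes):
        hidden = np.maximum(x @ W1 + b1, 0.0)  # ReLU hidden layer
        keep = rng.random(hidden.shape) >= p  # each unit kept with probability 1 - p
        hidden = hidden * keep / (1.0 - p)  # inverted-dropout rescaling
        preds.append(hidden @ W2 + b2)
    preds = np.stack(preds)  # shape: (n_passes, n_samples, 1)
    return preds.mean(axis=0), preds.std(axis=0)

rng = np.random.default_rng(1)
x = rng.normal(size=(5, 3))
W1, b1 = rng.normal(size=(3, 16)), np.zeros(16)
W2, b2 = rng.normal(size=(16, 1)), np.zeros(1)

mean, std = mc_dropout_predict(x, W1, b1, W2, b2, p=0.2)
print(mean.shape, std.shape)  # (5, 1) (5, 1)

# Without dropout every pass is identical, so the estimated uncertainty collapses to zero
_, std_no_dropout = mc_dropout_predict(x, W1, b1, W2, b2, p=0.0)
print(np.allclose(std_no_dropout, 0.0))  # True
```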

Result Collection and Methodology Overview

All evaluation metrics were saved as CSV files for reproducibility and later analysis. The residuals were further examined with the Shapiro–Wilk and Jarque–Bera tests, which assess normality, and the Ljung–Box test, which checks for remaining autocorrelation, to verify the statistical soundness of the results.
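Two of these diagnostics have simple closed forms and are sketched below in NumPy. Shapiro–Wilk relies on tabulated coefficients and is better taken from a library such as `scipy.stats.shapiro`. SciPy also provides `scipy.stats.jarque_bera`, and statsmodels provides `acorr_ljungbox`.

- The Jarque–Bera statistic JB = (n/6)(S² + (K − 3)²/4) is compared with a χ² distribution with 2 degrees of freedom.
- The Ljung–Box statistic Q = n(n + 2) Σ_{k=1}^{h} ρ̂_k² / (n − k) is compared with a χ² distribution with h degrees of freedom.

To stay dependency-free, the sketch restricts h to even values, for which the χ² survival function has a closed form. The lag count and the test inputs are hypothetical.

```python
import math
import numpy as np

def chi2_sf_even_df(x, df):
    """P(X > x) for a chi-square variable with an even number of degrees of freedom."""
    if df % 2 != 0:
        raise ValueError("df must be even for this closed form")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total

def jarque_bera(residuals):
    r = np.asarray(residuals, dtype=float)
    n = r.size
    centered = r - r.mean()
    m2 = np.mean(centered ** 2)
    skew = np.mean(centered ** 3) / m2 ** 1.5
    kurt = np.mean(centered ** 4) / m2 ** 2
    jb = n / 6.0 * (skew ** 2 + (kurt - 3.0) ** 2 / 4.0)
    return jb, chi2_sf_even_df(jb, 2)

def ljung_box(residuals, lags=10):
    r = np.asarray(residuals, dtype=float)
    n = r.size
    centered = r - r.mean()
    denom = np.sum(centered ** 2)
    q = 0.0
    for k in range(1, lags + 1):
        rho_k = np.sum(centered[k:] * centered[:-k]) / denom  # lag-k sample autocorrelation
        q += rho_k ** 2 / (n - k)
    q *= n * (n + 2)
    return q, chi2_sf_even_df(q, lags)

# Symmetric two-point residuals: skewness 0, kurtosis 1, so JB = (600/6) * (4/4) = 100
jb, jb_p = jarque_bera(np.tile([-1.0, 1.0], 300))
print(jb)  # 100.0

# A smooth sinusoidal residual pattern is strongly autocorrelated, so Ljung-Box rejects whiteness
q, q_p = ljung_box(np.sin(np.linspace(0, 20, 500)), lags=10)
print(q_p < 1e-6)  # True
```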

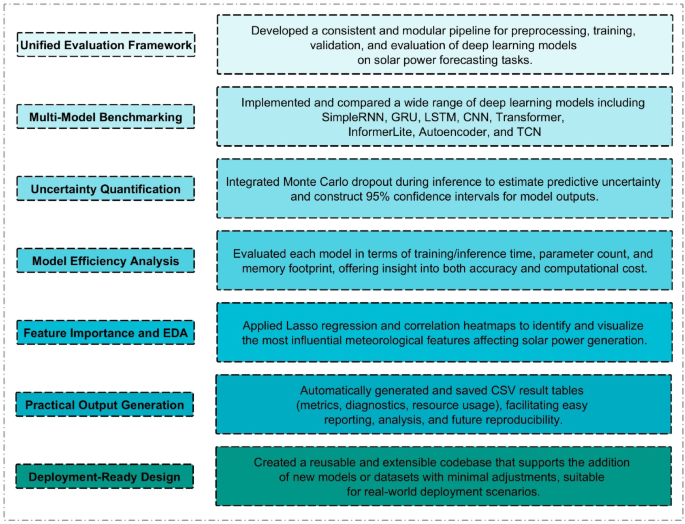

Figure 4 summarizes the complete methodology, from raw data ingestion to final evaluation.

Experimental Setup and Configuration Parameters

The experimental design prioritized uniformity and repeatability. The pre-processed solar power generation dataset was split and standardized in the same way for every model. Each architecture was trained with the Adam optimizer from the same fixed initial learning rate and evaluated with the same set of performance metrics.

Evaluation Metrics

To characterize the forecasting ability of the deep learning models fully, we combined several measures covering accuracy, reliability, and statistical consistency. The error metrics (RMSE, MAE, and MAPE) quantify predictive precision, while R² quantifies the proportion of variance in the observed output explained by the predictions.
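For reference, the four metrics named above take their conventional forms (assuming the standard definitions). Here $y_i$ is the observed power, $\hat{y}_i$ the prediction, $\bar{y}$ the mean of the observations, and $n$ the number of samples:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2},
\qquad
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|
$$

$$
\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|,
\qquad
R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}
$$

Lower RMSE, MAE, and MAPE indicate higher accuracy. An $R^2$ closer to 1 indicates that more of the variance in the observed output is explained by the model.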

Taken together, this structured approach, spanning data presentation, feature selection, deep learning modeling, and statistical evaluation, provides a consistent basis for comparing solar power forecasting models and for guiding future implementations.