Study Design: A Deep Learning Approach to Estimating Fetal Weight

In recent years, advances in deep learning and medical imaging have opened new avenues for prenatal care. One such approach used a deep learning model to estimate fetal weight in a retrospective, multi-center cohort study covering 17 hospitals in Denmark from 2008 to 2018. The study aimed to combine routinely acquired ultrasound images with large-scale clinical data to improve fetal assessment.

Data Collection

The study drew birth weights from the Danish Fetal Medicine Database and ultrasound images from four central servers. The Danish Patient Safety Authority waived the requirement for patient consent (Record No. 3-3013-2915/1), and the Danish Data Protection Agency approved the study (Protocol No. P-2019-310).

Utilizing the Hadlock Formula

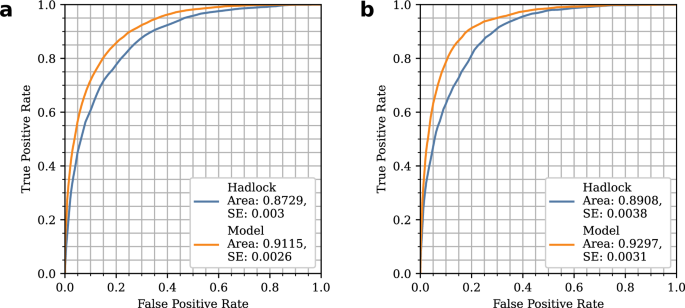

For its fetal weight analysis, the study used the Hadlock formula, a well-known equation in obstetrics that draws on biometric measurements typically gathered during routine ultrasound scans, including Abdominal Circumference (AC), Head Circumference (HC), and Femur Length (FL). A key innovation was the use of Optical Character Recognition (OCR) to extract these measurements from the images automatically.
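As a rough illustration of this step, the sketch below assumes the commonly cited three-parameter Hadlock variant based on HC, AC, and FL (inputs in centimetres, output in grams); the text does not say which Hadlock variant the study used. The OCR text format and the `parse_measurements` helper are hypothetical, since on-screen labels differ between ultrasound vendors.

```python
import re


# Assumed variant: three-parameter Hadlock formula using HC, AC and FL.
# Inputs are in centimetres; the result is in grams.
def hadlock_efw(hc_cm: float, ac_cm: float, fl_cm: float) -> float:
    log10_efw = (
        1.326
        - 0.00326 * ac_cm * fl_cm
        + 0.0107 * hc_cm
        + 0.0438 * ac_cm
        + 0.158 * fl_cm
    )
    return 10 ** log10_efw


# Hypothetical OCR text format: "<code> <value> <unit>", e.g. "AC 301.5 mm".
MEASUREMENT_RE = re.compile(r"\b(HC|AC|FL)\s+(\d+(?:\.\d+)?)\s*(mm|cm)\b")


def parse_measurements(ocr_text: str) -> dict:
    """Extract HC, AC and FL from OCR text and convert them to centimetres."""
    values = {}
    for code, number, unit in MEASUREMENT_RE.findall(ocr_text):
        value = float(number)
        values[code] = value / 10 if unit == "mm" else value
    return values


m = parse_measurements("HC 300.0 mm  AC 30.0 cm  FL 60 mm")
print(round(hadlock_efw(m["HC"], m["AC"], m["FL"])))  # prints 2100
```

Keeping the parser separate from the formula means the same Hadlock function can be fed either OCR-extracted or manually entered measurements.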

Further details on the methodology and findings are given in Supplementary Material G.

The Dataset: Composition and Features

The ultrasound images contain markings that clinicians added during the scans, such as caliper placements and measurement codes, which indicate the anatomical structures being measured. Clinicians followed international guidelines for obtaining standard ultrasound planes, which promotes consistency across images.

Tesseract-based OCR was used to classify each image as head, abdomen, femur, or "other". The classified images were then grouped by patient identification number and examination date, and sets lacking an image from any of the required anatomical classes were excluded, so every retained set contains all three standard planes.
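The classification and grouping steps could look roughly like the following sketch. It assumes OCR (for example Tesseract) has already produced one text string per image and that the class can be inferred from measurement codes such as `HC`, `AC`, and `FL`; the `CODE_TO_CLASS` mapping and the `UltrasoundImage` record are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

REQUIRED_CLASSES = {"head", "abdomen", "femur"}

# Hypothetical mapping from on-screen measurement codes to anatomical classes.
CODE_TO_CLASS = {"HC": "head", "BPD": "head", "AC": "abdomen", "FL": "femur"}


@dataclass
class UltrasoundImage:
    patient_id: str
    exam_date: str
    ocr_text: str  # text read from the image by an OCR engine


def classify(ocr_text: str) -> str:
    """Assign an image to head/abdomen/femur, or 'other' if no known code is found."""
    for token in ocr_text.upper().split():
        if token in CODE_TO_CLASS:
            return CODE_TO_CLASS[token]
    return "other"


def build_examinations(images):
    """Group images by (patient, date) and keep only sets covering all classes."""
    groups = defaultdict(lambda: defaultdict(list))
    for img in images:
        label = classify(img.ocr_text)
        if label != "other":
            groups[(img.patient_id, img.exam_date)][label].append(img)
    return {
        key: dict(by_class)
        for key, by_class in groups.items()
        if REQUIRED_CLASSES.issubset(by_class)
    }
```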

Defining the Population

The study included only singleton pregnancies and divided the data into training, validation, and test sets (85%, 5%, and 10%, respectively), with no patient appearing in more than one set. To increase the amount of training data, the training set also included second-trimester images, which made up 27% of its images. Different combinations of the available head, abdomen, and femur images provided additional training samples, while the test set was restricted to third-trimester images with a gestational age above 28 weeks.
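A minimal sketch of a patient-level split follows, assuming the 85/5/10 fractions are applied to unique patient IDs (the text does not say whether they apply to patients, examinations, or images). `select_test_exams` applies the above-28-week test restriction, and `training_triplets` shows one way to enumerate combinations of head, abdomen, and femur images within an examination; all function names and dictionary fields are hypothetical.

```python
import random
from itertools import product


def split_patients(patient_ids, fractions=(0.85, 0.05, 0.10), seed=0):
    """Shuffle unique patient IDs and cut them into train/val/test sets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    train = set(ids[:n_train])
    val = set(ids[n_train:n_train + n_val])
    held_out = set(ids[n_train + n_val:])  # the remaining ~10%
    return train, val, held_out


def select_test_exams(exams, test_ids, min_ga_weeks=28):
    """Keep test-patient examinations with gestational age above the cut-off."""
    return [e for e in exams if e["patient_id"] in test_ids and e["ga_weeks"] > min_ga_weeks]


def training_triplets(head_imgs, abdomen_imgs, femur_imgs):
    """All (head, abdomen, femur) combinations from one examination's images."""
    return list(product(head_imgs, abdomen_imgs, femur_imgs))


train_ids, val_ids, held_out_ids = split_patients([f"p{i}" for i in range(1000)])
```

Splitting on patient IDs before any image-level processing is what guarantees that no patient's images leak across sets.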

The Deep Learning Model: Structure and Function

At the core of the study is a deep learning model based on the RegNetX-400MF architecture and organized into two components. The first component processes the ultrasound images and outputs anatomical measurements together with an embedding vector that captures additional information about the input images. It consists of three subnetworks, one for each standard anatomical plane (head, abdomen, femur).

The second component consists of fully connected layers that take the predicted measurements and embedding vectors as input and output the Estimated Fetal Weight (EFW). Feeding both the measurements and the embeddings into these layers lets the model use image information beyond the standard biometric measurements. The model also incorporates pixel spacing, obtained from the DICOM files exported by the ultrasound machines, to account for differences in image scale between scans.
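The two-component design can be sketched in PyTorch as below. This is an illustration under assumptions, not the authors' code: each branch predicts one measurement plus an embedding, and the pixel spacing of each plane is concatenated into the fully connected head (the text does not specify where pixel spacing enters the network). The embedding size, hidden width, and the hypothetical `tiny_backbone` stand-in are arbitrary; in the study's setup a RegNetX-400MF would take the backbone's place, for example torchvision's `regnet_x_400mf` with its final `fc` layer replaced by `nn.Identity()` and, as one option, grayscale input repeated to three channels to match ImageNet weights.

```python
import torch
from torch import nn

PLANES = ("head", "abdomen", "femur")


def tiny_backbone() -> nn.Module:
    # Hypothetical stand-in for RegNetX-400MF: grayscale image -> 8-dim features.
    return nn.Sequential(
        nn.Conv2d(1, 8, kernel_size=3, stride=2, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
    )


class PlaneBranch(nn.Module):
    """Subnetwork for one standard plane: image -> (measurement, embedding)."""

    def __init__(self, backbone: nn.Module, feat_dim: int, emb_dim: int):
        super().__init__()
        self.backbone = backbone
        self.measure = nn.Linear(feat_dim, 1)
        self.embed = nn.Linear(feat_dim, emb_dim)

    def forward(self, x: torch.Tensor):
        feats = self.backbone(x)
        return self.measure(feats).squeeze(-1), self.embed(feats)


class FetalWeightNet(nn.Module):
    def __init__(self, make_backbone, feat_dim: int, emb_dim: int = 32, hidden: int = 64):
        super().__init__()
        self.branches = nn.ModuleDict(
            {p: PlaneBranch(make_backbone(), feat_dim, emb_dim) for p in PLANES}
        )
        # Head input per plane: 1 measurement + embedding + 1 pixel spacing (assumed).
        in_dim = len(PLANES) * (1 + emb_dim + 1)
        self.head = nn.Sequential(
            nn.Linear(in_dim, hidden), nn.ReLU(), nn.Linear(hidden, 1)
        )

    def forward(self, images: dict, pixel_spacing: torch.Tensor):
        # images[plane]: (B, 1, 224, 224); pixel_spacing: (B, 3) in mm per pixel.
        outputs = [self.branches[p](images[p]) for p in PLANES]
        measurements = torch.stack([m for m, _ in outputs], dim=1)  # (B, 3)
        embeddings = torch.cat([e for _, e in outputs], dim=1)  # (B, 3 * emb_dim)
        features = torch.cat([measurements, embeddings, pixel_spacing], dim=1)
        efw = self.head(features).squeeze(-1)  # (B,)
        return efw, measurements


model = FetalWeightNet(tiny_backbone, feat_dim=8)
batch = {p: torch.randn(2, 1, 224, 224) for p in PLANES}
efw, measurements = model(batch, torch.full((2, 3), 0.1))
```

Returning the per-plane measurements alongside the EFW keeps them available for inspection or for a separate measurement loss, should one be wanted.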

Training the Model: Techniques and Parameters

The model was trained with the AdamW optimizer using a learning rate of 1e-4, a weight decay of 1e-6, and a batch size of 8. The RegNetX backbones were initialized with parameters pre-trained on ImageNet, which substantially reduced training time.
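The optimizer settings can be made concrete with a small NumPy sketch of the AdamW update rule (decoupled weight decay), fitted here to a toy linear-regression problem with mini-batches of 8. In PyTorch the study's configuration would correspond to `torch.optim.AdamW(model.parameters(), lr=1e-4, weight_decay=1e-6)`; the betas and epsilon below are PyTorch's defaults and are assumed, and the toy uses a larger learning rate so it converges within a few epochs.

```python
import numpy as np


class AdamW:
    """Minimal AdamW with decoupled weight decay (NumPy sketch)."""

    def __init__(self, lr=1e-4, weight_decay=1e-6, betas=(0.9, 0.999), eps=1e-8):
        self.lr, self.weight_decay, self.eps = lr, weight_decay, eps
        self.beta1, self.beta2 = betas
        self.m = None  # first-moment (mean) estimate of the gradient
        self.v = None  # second-moment (uncentred variance) estimate
        self.t = 0

    def step(self, params: np.ndarray, grads: np.ndarray) -> np.ndarray:
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        # Weight decay shrinks the weights directly instead of being added to the gradient.
        params = params * (1 - self.lr * self.weight_decay)
        self.m = self.beta1 * self.m + (1 - self.beta1) * grads
        self.v = self.beta2 * self.v + (1 - self.beta2) * grads**2
        m_hat = self.m / (1 - self.beta1**self.t)  # bias correction
        v_hat = self.v / (1 - self.beta2**self.t)
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Toy problem: recover linear weights using mini-batches of 8.
rng = np.random.default_rng(0)
X = rng.normal(size=(256, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w

w = np.zeros(3)
opt = AdamW(lr=1e-2, weight_decay=1e-6)  # lr raised from 1e-4 so the toy converges quickly
for epoch in range(50):
    for start in range(0, len(X), 8):
        xb, yb = X[start:start + 8], y[start:start + 8]
        grad = 2 * xb.T @ (xb @ w - yb) / len(xb)  # gradient of the mean squared error
        w = opt.step(w, grad)
```

Decoupling the decay from the adaptive gradient scaling is what distinguishes AdamW from Adam with L2 regularization: with a weight decay of 1e-6, each step shrinks the weights by a factor of (1 - lr × 1e-6) regardless of the gradient's magnitude.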

Images were pre-processed by center cropping, resizing to 224×224 pixels, and conversion to grayscale. Data augmentation (random rotation, shear, translation, and brightness and contrast adjustments) increased the variability of the training data and improved the model's robustness.
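The pre-processing and augmentation steps could be sketched with NumPy as follows. Several details are assumptions not stated in the text: the grayscale weights (ITU-R BT.601), nearest-neighbour resizing, zero-filling of pixels shifted in from outside the frame, and the augmentation ranges. The sketch also rescales the pixel spacing after resizing, because shrinking a crop to 224×224 pixels changes the physical size each pixel represents.

```python
import numpy as np

TARGET = 224


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    # Assumed luma weights (ITU-R BT.601); the source does not specify them.
    return rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])


def center_crop_square(img: np.ndarray) -> np.ndarray:
    h, w = img.shape[:2]
    side = min(h, w)
    top, left = (h - side) // 2, (w - side) // 2
    return img[top:top + side, left:left + side]


def resize_nearest(img: np.ndarray, size: int = TARGET) -> np.ndarray:
    # Nearest-neighbour resize of a square image (interpolation method assumed).
    idx = (np.arange(size) * img.shape[0] / size).astype(int)
    return img[np.ix_(idx, idx)]


def preprocess(rgb: np.ndarray, pixel_spacing_mm: float):
    """Grayscale, crop and resize; rescale the pixel spacing to match."""
    gray = center_crop_square(to_grayscale(rgb))
    scale = gray.shape[0] / TARGET  # original pixels per resized pixel
    return resize_nearest(gray), pixel_spacing_mm * scale


def augment(img, rng, max_rot_deg=10.0, max_shear=0.1, max_shift=10.0, max_gain=0.1):
    """Random rotation, shear, translation, brightness and contrast (ranges assumed)."""
    n = img.shape[0]
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    shear = rng.uniform(-max_shear, max_shear)
    shift = rng.uniform(-max_shift, max_shift, size=2)
    rotation = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    A = rotation @ np.array([[1.0, shear], [0.0, 1.0]])
    # Map each output pixel back to its source pixel (transform about the image centre).
    c = (n - 1) / 2
    rows, cols = np.mgrid[0:n, 0:n]
    coords = np.stack([rows.ravel() - c - shift[0], cols.ravel() - c - shift[1]])
    src = np.rint(np.linalg.inv(A) @ coords + c).astype(int)
    valid = ((src >= 0) & (src < n)).all(axis=0)
    out = np.zeros(n * n)  # pixels mapped from outside the image stay at 0
    out[valid] = img[src[0, valid], src[1, valid]]
    out = out.reshape(n, n)
    contrast = 1 + rng.uniform(-max_gain, max_gain)
    brightness = rng.uniform(-max_gain, max_gain) * np.ptp(img)
    return (out - out.mean()) * contrast + out.mean() + brightness


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(480, 640, 3))
image, spacing = preprocess(frame, pixel_spacing_mm=0.2)
augmented = augment(image, rng)
```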

Incorporating Uncertainty Estimation

The study also estimated the uncertainty of each prediction. Using test-time augmentation, the model produced multiple predictions per image from randomly augmented copies, and the standard deviation of these predictions served as the uncertainty estimate. This gave a quantitative measure of confidence for each prediction and showed how the predicted uncertainty correlated with the prediction error.
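Test-time augmentation for uncertainty can be sketched as repeated prediction on randomly augmented copies of an input. The `predict` and `augment` callables, the number of samples, and the use of Pearson correlation between the standard deviation and the absolute error are assumptions; the text does not say which correlation measure was reported.

```python
import numpy as np


def tta_predict(predict, image, augment, n_samples=16, seed=0):
    """Predict on randomly augmented copies; return (mean, standard deviation)."""
    rng = np.random.default_rng(seed)
    preds = np.array([predict(augment(image, rng)) for _ in range(n_samples)])
    return preds.mean(), preds.std(ddof=1)


def uncertainty_error_correlation(stds, errors):
    """Pearson correlation between predicted uncertainty and absolute error."""
    return np.corrcoef(stds, np.abs(errors))[0, 1]


# Toy usage with hypothetical stand-ins for the model and the augmentation.
def predict(img):
    return float(img.sum())


def jitter(img, rng):
    return img + rng.normal(0.0, 1.0, img.shape)


mean, std = tta_predict(predict, np.ones((4, 4)), jitter)
```

Over a test set, one would collect `std` per prediction alongside the error against the true birth weight and pass both arrays to `uncertainty_error_correlation`; a positive value means that higher predicted uncertainty tends to accompany larger errors.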

Analyzing Pixel-Level Information

To better understand the model's behavior, saliency heatmaps were generated for a subset of the test data: 1800 images showing various anatomical regions. Experienced fetal medicine clinicians reviewed the heatmaps and annotated the most salient anatomical features, revealing which structures the model relied on for its predictions.
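The text does not name the saliency method. As one model-agnostic example, the sketch below computes an occlusion-sensitivity map: it masks square patches of the image and records how much the prediction changes. Gradient-based saliency is a common alternative; the patch size, stride, fill value, and the toy `predict` function are arbitrary, hypothetical choices.

```python
import numpy as np


def occlusion_saliency(predict, image, patch=16, stride=8, fill=0.0):
    """Heatmap of how much the prediction changes when each patch is masked."""
    h, w = image.shape
    baseline = predict(image)
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            delta = abs(baseline - predict(occluded))
            heat[top:top + patch, left:left + patch] += delta
            counts[top:top + patch, left:left + patch] += 1
    # Average over all patches that covered each pixel.
    return heat / np.maximum(counts, 1)


# Hypothetical model that only "looks at" one region of the image.
def predict(img):
    return float(img[16:32, 40:56].sum())


image = np.ones((64, 64))
heat = occlusion_saliency(predict, image)
```

Because it treats the model as a black box, occlusion works with any predictor, at the cost of one forward pass per patch position.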

Taken together, this research shows how integrating ultrasound imaging with deep learning could transform fetal assessment, ultimately contributing to improved prenatal care and outcomes.