An In-Depth Exploration of Cohort Characteristics in Cerebral-Cardiac Syndrome Research

Examining the cohort characteristics of a Cerebral-Cardiac Syndrome (CCS) study can shed light on the condition's risk factors and on how well machine learning models predict it. In this piece, we look at the cohort's demographic and clinical profile, model performance, calibration, decision thresholds, and feature importance.

Cohort Demographics and Characteristics

Of the 571 records initially screened, 511 met the study's eligibility criteria. Of these, 178 patients (34.8%) developed CCS. A baseline comparison between the CCS and non-CCS groups revealed significant differences in several characteristics:

- Age: The mean age of the CCS group was 68.8 years, compared with 66.8 years in the non-CCS group (p = 0.011).

- NIHSS (National Institutes of Health Stroke Scale): The CCS group had a median NIHSS score of 11, indicating more severe neurological deficits, compared with a median of 9 in the non-CCS group (p < 0.001).

- D-dimer Levels: D-dimer, a fibrin degradation product that reflects clot formation and breakdown, was significantly higher in the CCS group (median 1.17) than in the non-CCS group (median 0.79; p < 0.001).

- Additional Factors: The CCS group also had higher levels of CRP (C-reactive protein, an inflammatory marker) and HbA1c (a marker of long-term blood glucose control), and severe carotid stenosis was more common (9.0% vs. 2.4%, p = 0.006).

These differences suggest that both demographic and clinical factors should be considered when assessing CCS risk.

Model Development and Cross-Validation Insights

Five machine learning models were trained and evaluated with 5-fold cross-validation:

- SVM (Support Vector Machine): Achieved the highest mean area under the receiver operating characteristic curve (ROC AUC, hereafter AUC) of 0.799, but the lowest accuracy (0.662).

- Random Forest: Combined a solid AUC of 0.794 with the best accuracy (0.819).

- XGBoost: Performed well in balancing discrimination and accuracy, with an AUC of 0.779 and accuracy of 0.811.

- Logistic Regression: Performed similarly to XGBoost, with an AUC of 0.785 and accuracy of 0.792.

- Deep Neural Network: Showed moderate performance with an AUC of 0.759 and accuracy of 0.755.
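The SVM result, with the highest AUC but the lowest accuracy, is less contradictory than it looks. AUC is threshold-free: it is the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. Accuracy instead depends on a fixed cut-off, assumed here to be 0.5. The pure-Python sketch below uses hypothetical toy scores (not study data) to show a model that ranks cases perfectly but never crosses 0.5; this is one plausible mechanism, not an explanation the study reports.

```python
from itertools import product

def roc_auc(labels, scores):
    """ROC AUC as the probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))

def accuracy(labels, scores, threshold=0.5):
    """Share of cases where the thresholded prediction (score >= threshold) matches the label."""
    hits = sum((s >= threshold) == (y == 1) for s, y in zip(scores, labels))
    return hits / len(labels)

# Hypothetical toy data: 3 positives, 7 negatives.
labels = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
# Perfect ranking, but every score sits below 0.5, so no case is predicted positive.
scores = [0.45, 0.40, 0.35, 0.30, 0.25, 0.20, 0.15, 0.10, 0.05, 0.02]

print(roc_auc(labels, scores))   # 1.0
print(accuracy(labels, scores))  # 0.7
```

Poorly scaled scores of this kind are one reason calibration and threshold choice matter as much as AUC.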

Notably, the standard deviations of AUC were all below 0.06, indicating that performance was stable across folds.

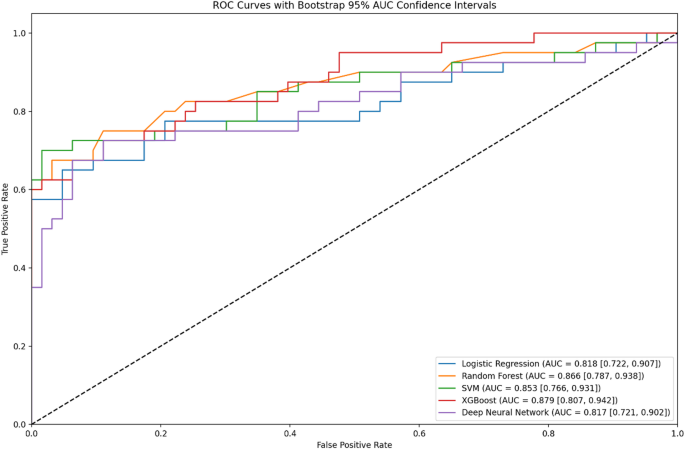
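The cross-validation procedure can be sketched as follows. This is a minimal pure-Python illustration, not the study's pipeline: `stratified_kfold` deals each class round-robin into five folds so every fold keeps roughly the 34.8% CCS rate, and `fit_direction` is a hypothetical one-feature stand-in for the real models. The cohort is synthetic, sized like the study (178 CCS, 333 non-CCS).

```python
import random
import statistics

def auc(labels, scores):
    """Rank-based ROC AUC: share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def stratified_kfold(labels, k=5, seed=0):
    """Split case indices into k folds that each keep roughly the overall class ratio."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):  # deal this class's cases round-robin across folds
            folds[j % k].append(i)
    return folds

def cross_validate(features, labels, fit, metric, k=5, seed=0):
    """Fit on k-1 folds, score the held-out fold, and return one metric value per fold."""
    results = []
    for test_idx in stratified_kfold(labels, k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(labels)) if i not in held_out]
        model = fit([features[i] for i in train_idx], [labels[i] for i in train_idx])
        scores = [model(features[i]) for i in test_idx]
        results.append(metric([labels[i] for i in test_idx], scores))
    return results

def fit_direction(train_x, train_y):
    """Hypothetical stand-in model: score = feature value, sign chosen from training class means."""
    pos_mean = statistics.mean(x for x, y in zip(train_x, train_y) if y == 1)
    neg_mean = statistics.mean(x for x, y in zip(train_x, train_y) if y == 0)
    sign = 1.0 if pos_mean >= neg_mean else -1.0
    return lambda x: sign * x

# Synthetic one-feature cohort sized like the study (178 CCS, 333 non-CCS); values are made up.
rng = random.Random(1)
y = [1] * 178 + [0] * 333
X = [rng.gauss(1.0 if label == 1 else 0.0, 1.0) for label in y]

fold_aucs = cross_validate(X, y, fit_direction, auc, k=5)
print(f"mean AUC {statistics.mean(fold_aucs):.3f}, SD {statistics.stdev(fold_aucs):.3f}")
```

The mean and sample standard deviation of the per-fold AUCs correspond to the summaries reported above. In practice, scikit-learn's `StratifiedKFold` and `cross_val_score(..., scoring="roc_auc")` cover the same workflow for real models.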

Test Set Performance Evaluation

On a separate test set of 103 individuals, XGBoost achieved the best discrimination, with an AUC of 0.879 and accuracy of 0.825. Its other metrics were:

- Precision: 0.844

- Recall: 0.675

- F1 Score: 0.750
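F1 is the harmonic mean of precision and recall, so the three figures can be cross-checked: 2 × 0.844 × 0.675 / (0.844 + 0.675) ≈ 0.750. The sketch below derives all four test-set metrics from confusion-matrix counts. The counts are hypothetical: the study does not publish its confusion matrix, and these were picked only because they are one combination consistent with the reported accuracy, precision, recall, and F1 for 103 cases.

```python
def classification_metrics(tp, fp, fn, tn):
    """Threshold-dependent metrics computed from confusion-matrix counts."""
    precision = tp / (tp + fp)  # share of predicted CCS cases that truly developed CCS
    recall = tp / (tp + fn)     # sensitivity: share of true CCS cases that were flagged
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),  # harmonic mean
    }

# Hypothetical counts for 103 test cases (40 with CCS, 63 without); not numbers from the study.
metrics = classification_metrics(tp=27, fp=5, fn=13, tn=58)
print({name: round(value, 3) for name, value in metrics.items()})
# {'accuracy': 0.825, 'precision': 0.844, 'recall': 0.675, 'f1': 0.75}
```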

Random Forest was a close second, with an AUC of 0.866 and similar precision and recall. SVM and Logistic Regression also discriminated well, with AUCs of 0.853 and 0.818, respectively. The Deep Neural Network trailed the other models, suggesting it may need further tuning.

Model Calibration Performance

Calibration, the agreement between predicted probabilities and observed outcome rates, was assessed with the Hosmer-Lemeshow goodness-of-fit test and the Brier score:

- SVM: Best calibration, with a Hosmer-Lemeshow p-value of 0.246 (no evidence of lack of fit) and the lowest Brier score (0.126).

- Random Forest: Also demonstrated acceptable calibration (p = 0.153).

- XGBoost and Deep Neural Network: Both were poorly calibrated (p < 0.001) and had higher Brier scores; for XGBoost, this contrasts with its strong discrimination.

Calibration curves reflected these findings: SVM and Random Forest predictions closely tracked observed outcomes, while XGBoost and the Deep Neural Network deviated from the line of perfect calibration.
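Both calibration measures are straightforward to compute. The sketch below is a minimal pure-Python version under stated assumptions: the Brier score is the mean squared error of the predicted probabilities, and the Hosmer-Lemeshow statistic follows one common variant (ten equal-size groups of sorted predicted risk, compared against a chi-square distribution with groups − 2 = 8 degrees of freedom). The study's exact grouping is not described. The data are synthetic: one set of probabilities is calibrated by construction, and a second is shifted upward by 0.25.

```python
import math
import random

def brier_score(labels, probs):
    """Mean squared difference between predicted probability and the 0/1 outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)

def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability P(X > x); this closed form needs an even df."""
    if df % 2:
        raise ValueError("closed form requires an even number of degrees of freedom")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i!
        total += term
    return math.exp(-half) * total

def hosmer_lemeshow(labels, probs, groups=10):
    """Hosmer-Lemeshow statistic and p-value using equal-size groups of sorted predicted risk."""
    pairs = sorted(zip(probs, labels), key=lambda pair: pair[0])
    n = len(pairs)
    stat = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        size = len(chunk)
        expected = sum(p for p, _ in chunk)  # predicted number of events in the group
        observed = sum(y for _, y in chunk)  # actual number of events in the group
        stat += (observed - expected) ** 2 / (expected * (1 - expected / size))
    return stat, chi2_sf_even_df(stat, groups - 2)

# Synthetic data: outcomes are drawn from `probs`, so `probs` is calibrated by construction.
rng = random.Random(7)
probs = [rng.uniform(0.05, 0.95) for _ in range(500)]
labels = [1 if rng.random() < p else 0 for p in probs]
shifted = [min(0.99, p + 0.25) for p in probs]  # systematically too high

for name, pr in [("calibrated", probs), ("shifted +0.25", shifted)]:
    stat, p_value = hosmer_lemeshow(labels, pr)
    print(f"{name}: Brier {brier_score(labels, pr):.3f}, HL {stat:.1f}, p {p_value:.3g}")
```

A high Hosmer-Lemeshow p-value means no detectable lack of fit rather than proof of good calibration, and the test's power depends on sample size. The closed-form chi-square tail used here works only for an even number of degrees of freedom; `scipy.stats.chi2.sf` handles the general case.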

Decision Curve Analysis Findings

Decision curve analysis showed that all models yielded a higher net benefit than the default "treat-all" and "treat-none" strategies across clinically relevant threshold probabilities (0.1–0.8). Notably:

- SVM and Random Forest: Delivered the highest net benefits across thresholds of 0.1–0.6.

- XGBoost: Added only a marginal benefit at low thresholds (<0.15), and its net benefit declined at higher thresholds.
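Net benefit, the quantity plotted in a decision curve, is conventionally defined as NB(p_t) = TP/n − (FP/n) × p_t / (1 − p_t), where p_t is the threshold probability; "treat all" classifies the whole cohort as positive, and "treat none" always has NB = 0. Assuming the study used this standard definition, a minimal sketch with hypothetical predictions looks like this:

```python
def net_benefit(labels, probs, threshold):
    """Net benefit of treating every patient whose predicted risk is >= threshold."""
    n = len(labels)
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    # False positives are penalised by the odds of the threshold probability.
    return tp / n - fp / n * threshold / (1 - threshold)

def net_benefit_treat_all(labels, threshold):
    """Net benefit of treating everyone; 'treat none' is always 0."""
    prevalence = sum(labels) / len(labels)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)

# Hypothetical predictions for 10 patients (3 with the outcome).
labels = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
probs = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.2, 0.1, 0.1, 0.05]
for t in (0.1, 0.2, 0.3, 0.4, 0.5, 0.6):
    print(f"threshold {t:.1f}: model {net_benefit(labels, probs, t):+.3f}, "
          f"treat all {net_benefit_treat_all(labels, t):+.3f}, treat none +0.000")
```

Treat-all net benefit falls to zero when the threshold equals the outcome prevalence (0.348 in this cohort) and is negative above it, which is why a model must beat both reference strategies to be useful.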

Threshold Optimization Analysis

Because of the moderate class imbalance (34.8% of patients developed CCS), several threshold-selection strategies were compared:

- SVM with a Youden-optimized threshold (0.576) balanced sensitivity and specificity, reaching an accuracy of 87.4%.

- XGBoost, with a threshold chosen to penalize false negatives three times as heavily as false positives (3:1), achieved high sensitivity at the expense of specificity.

- Random Forest retained the highest specificity at the default threshold of 0.50, which may make it better suited to confirmatory testing.
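The Youden and cost-weighted rules can be sketched as a search over candidate cut-offs. Youden's J is sensitivity + specificity − 1; the cost rule minimizes 3 × FN + 1 × FP. How the study implemented its 3:1 penalty is not stated, so this cost-weighted reading is an assumption, and the toy labels and probabilities are hypothetical.

```python
def confusion(labels, probs, t):
    """(tp, fp, fn, tn) when cases with predicted risk >= t are called positive."""
    tp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 0)
    fn = sum(1 for p, y in zip(probs, labels) if p < t and y == 1)
    tn = sum(1 for p, y in zip(probs, labels) if p < t and y == 0)
    return tp, fp, fn, tn

def youden_threshold(labels, probs):
    """Candidate threshold maximising J = sensitivity + specificity - 1."""
    def j(t):
        tp, fp, fn, tn = confusion(labels, probs, t)
        return tp / (tp + fn) + tn / (tn + fp) - 1
    return max(sorted(set(probs)), key=j)

def cost_threshold(labels, probs, fn_cost=3.0, fp_cost=1.0):
    """Candidate threshold minimising fn_cost * FN + fp_cost * FP (3:1 by default)."""
    def cost(t):
        _, fp, fn, _ = confusion(labels, probs, t)
        return fn_cost * fn + fp_cost * fp
    return min(sorted(set(probs)), key=cost)

# Hypothetical toy data: 4 positives, 6 negatives.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
probs = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1, 0.05, 0.02]
print(youden_threshold(labels, probs))  # 0.7
print(cost_threshold(labels, probs))    # 0.3
```

On these toy data, the cost rule picks the lower cut-off, trading specificity for sensitivity, which mirrors the XGBoost pattern described above. For well-calibrated probabilities, decision theory gives the cost-minimizing threshold directly as fp_cost / (fp_cost + fn_cost) = 1 / (1 + 3) = 0.25.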

Feature Importance Analysis

SHAP (SHapley Additive exPlanations) analysis identified the key predictors of CCS risk:

- Top Predictors: D-dimer was the most important predictor, followed by ACEI/ARB (angiotensin-converting enzyme inhibitor or angiotensin II receptor blocker) use, HbA1c, and CRP.

- Other Influential Factors: Age, NIHSS score, and severe carotid artery stenosis also contributed substantially to the predictions.

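The SHAP analysis showed that higher D-dimer, CRP, and HbA1c values pushed predictions toward higher CCS risk. Because SHAP explains model predictions rather than causal effects, these markers are best read as potential targets for early risk identification and intervention.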
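SHAP values are Shapley values from cooperative game theory applied to a single prediction: each feature receives its average marginal contribution over all orders in which features could be added. The sketch below computes them exactly by enumerating feature subsets, which is feasible only for a handful of features. Assumptions: the model is a hypothetical log-odds function of standardized D-dimer, CRP, and HbA1c with made-up weights and one interaction term, and "absent" features are set to a single baseline of zero, whereas the `shap` library uses optimized algorithms (such as `TreeExplainer` for tree ensembles) and usually averages over a background dataset.

```python
import math
from itertools import combinations

def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction; 'absent' features take their baseline values."""
    n = len(x)

    def value(subset):
        # Features in `subset` take the patient's values; the rest keep the baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for s in combinations(others, size):
                total += weight * (value(set(s) | {i}) - value(set(s)))
        phi.append(total)
    return phi

def model(z):
    """Hypothetical risk model on the log-odds scale; weights are made up for illustration."""
    d_dimer, crp, hba1c = z
    return -2.0 + 0.8 * d_dimer + 0.5 * crp + 0.3 * hba1c + 0.2 * d_dimer * crp

x = [1.5, 1.0, 0.5]         # one hypothetical patient (standardized values)
baseline = [0.0, 0.0, 0.0]  # reference values used for "absent" features
phi = shapley_values(model, x, baseline)
print([round(v, 3) for v in phi])  # [1.35, 0.65, 0.15]
```

The values sum to f(x) − f(baseline) (the efficiency property), and the interaction term's contribution (0.3) is split equally between D-dimer and CRP.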

Taken together, the cohort characteristics, model performance, and clinical variables give a clearer picture of CCS risk. Understanding how these elements interact matters for research and is a step toward better outcomes for patients at risk of the condition.