The Football Transformer (FoT): A Next-Gen Framework for Football Video Analysis

Overview

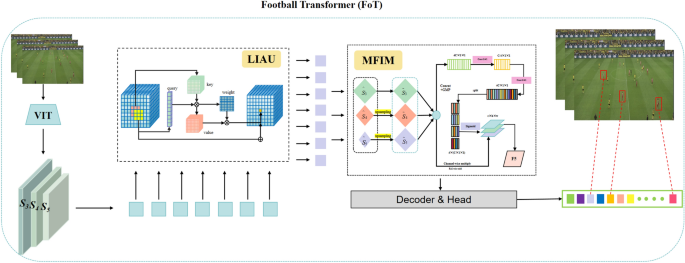

The proposed Football Transformer (FoT) is an end-to-end object detection framework built for football video. It is designed to deliver both high accuracy and real-time performance on fast-paced match footage. As illustrated in Figure 1, FoT employs a vision transformer-based backbone to extract hierarchical feature maps (S_3, S_4, S_5) at different spatial resolutions. These features carry the visual cues needed to detect the objects of interest in a football scene, from the ball to distant players.

The framework is structured into several key modules, including a Local Interaction Aggregation Unit (LIAU) and Multi-Scale Feature Interaction Module (MFIM), which process these multi-scale features. The LIAU utilizes a lightweight self-attention mechanism with a shifted window approach, allowing for cross-region interaction while maintaining computational efficiency. The refined features then undergo further processing in the MFIM, culminating in predictions made by a Transformer decoder and a detection head. This cohesive architecture balances global context modeling with localized detail preservation, making FoT adept at addressing the spatial and detection challenges common in football scenes.

Backbone Feature Extraction Network

The first stage of the FoT architecture is the backbone feature extraction network. It takes the input RGB image, denoted (\textbf{I} \in \mathbb{R}^{3 \times H \times W}), and extracts hierarchical feature maps at multiple spatial resolutions. Using a vision transformer, it produces ( \textbf{F}_\text{backbone} = \{S_3, S_4, S_5\} ), a set of low-, mid-, and high-level representations. Each feature map plays a distinct role:

- Low-Level Features ((S_3)): Capture edge and texture details necessary for detecting small objects.

- Mid-Level Features ((S_4)): Encapsulate a balanced representation essential for semantic understanding.

- High-Level Features ((S_5)): Provide global patterns and context, useful for larger objects.

Shallow features ((S_1) and (S_2)) are discarded due to their limited semantic value, ensuring efficient resource use while maintaining essential details for downstream tasks.
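To make the multi-scale layout concrete, the following minimal sketch computes the shapes of the three retained feature maps for a given input size. The strides (8, 16, 32) and channel widths (192, 384, 768) are assumptions chosen for illustration, following a common convention for hierarchical backbones; the text above does not state the values FoT uses, and `pyramid_shapes` is a hypothetical helper.

```python
def pyramid_shapes(height, width, strides=(8, 16, 32), channels=(192, 384, 768)):
    """Return {name: (C, H', W')} for the retained levels S_3, S_4, S_5.

    The strides and channel widths are illustrative assumptions,
    not values taken from the FoT description.
    """
    shapes = {}
    for level, (stride, ch) in enumerate(zip(strides, channels), start=3):
        # Ceiling division so partially covered border cells still get a feature.
        shapes[f"S_{level}"] = (ch, -(-height // stride), -(-width // stride))
    return shapes


shapes = pyramid_shapes(640, 640)
for name, shape in shapes.items():
    print(name, shape)
```

Under these assumptions, (S_3) keeps the finest grid and is therefore the level best suited to small objects, while (S_5) is the coarsest.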

Local Interaction Aggregation Unit (LIAU)

The LIAU is a central component of the FoT architecture. Its primary objective is to model spatial dependencies efficiently within localized regions of the feature maps. To achieve this, the LIAU divides each input feature map into non-overlapping windows (for example, (3 \times 3)) and applies self-attention within each window. For a fixed window size, this reduces the attention cost from quadratic to linear in the number of spatial positions.

A key aspect of the LIAU is its shifted window strategy, which lets successive layers exchange information across window boundaries. This broader interaction improves the model’s ability to identify small targets (like a football or distant players) in high-resolution scenes. Through residual connections, the LIAU output retains the original positional information alongside the aggregated context, enriching the feature maps for further processing.

Input and Representation

The LIAU begins by partitioning the input feature map ( \textbf{F} \in \mathbb{R}^{C \times H \times W} ) into local windows in which self-attention is computed. With (N = H \cdot W) spatial positions, restricting attention to a fixed neighborhood of size (K \times K) reduces the cost of global attention from (\mathscr{O}(N^2)) to a more manageable (\mathscr{O}(N \cdot K^2)).
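The partitioning step and the resulting cost reduction can be sketched in NumPy as follows. This is an illustrative sketch, not the FoT implementation: the attention uses no learned query/key/value projections, the feature map is assumed to be divisible by the window size, and the function names are hypothetical.

```python
import numpy as np


def window_partition(feat, k):
    """Split a (C, H, W) map into non-overlapping k x k windows.

    Returns an array of shape (num_windows, k*k, C), one token sequence
    per window. Assumes H and W are divisible by k (no padding here).
    """
    c, h, w = feat.shape
    assert h % k == 0 and w % k == 0
    x = feat.reshape(c, h // k, k, w // k, k)
    x = x.transpose(1, 3, 2, 4, 0)  # (H/k, W/k, k, k, C)
    return x.reshape(-1, k * k, c)


def window_self_attention(tokens):
    """Softmax self-attention inside each window (no learned projections)."""
    d = tokens.shape[-1]
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(d)  # (nW, k*k, k*k)
    scores = scores - scores.max(axis=-1, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ tokens


feat = np.random.default_rng(0).standard_normal((8, 12, 12))
windows = window_partition(feat, k=3)
out = window_self_attention(windows)

n, k = 12 * 12, 3
global_pairs = n * n        # token pairs scored by global attention
window_pairs = n * k * k    # token pairs scored by windowed attention
print(windows.shape, global_pairs, window_pairs)  # (16, 9, 8) 20736 1296
```

For this 12 x 12 map with 3 x 3 windows, windowed attention scores 16 times fewer token pairs than global attention, and the gap widens as the feature map grows.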

Shifted Window Interaction

One limitation of a naive window partition is the lack of interaction among neighboring windows. The LIAU addresses this with a shifted window approach that alternates the partitioning scheme based on layer parity. In even layers, the partition starts from the corner of the feature map; in odd layers, the window grid is shifted so that the new windows straddle the previous boundaries, allowing features from previously isolated regions to be aggregated together. These alternating interactions strengthen the model’s capacity to capture spatial relationships across regions.
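The effect of alternating the partition can be illustrated by computing which window each position falls into. The sketch below assumes a cyclic shift of half the window size in odd layers, as popularized by the Swin Transformer; the text does not specify the shift FoT uses, and a window size of 4 is chosen only so that the half-window shift is easy to see. The helper names are hypothetical.

```python
import numpy as np


def window_ids(h, w, k, shift=0):
    """Return an (h, w) array with the window index of every position.

    A positive shift offsets the window grid cyclically. The attention mask
    that a full implementation would apply to wrapped-around positions is
    omitted from this sketch.
    """
    rows = (np.arange(h) + shift) % h // k
    cols = (np.arange(w) + shift) % w // k
    return rows[:, None] * (w // k) + cols[None, :]


def ids_for_layer(layer, h, w, k):
    # Even layers: grid anchored at the corner. Odd layers: shifted by k // 2.
    shift = 0 if layer % 2 == 0 else k // 2
    return window_ids(h, w, k, shift)


even = ids_for_layer(0, 8, 8, 4)
odd = ids_for_layer(1, 8, 8, 4)

# Two horizontally adjacent positions on either side of a window boundary.
a, b = (0, 3), (0, 4)
print(even[a] == even[b])  # False: separated in the even layer
print(odd[a] == odd[b])    # True: share a window in the odd layer
```

Stacking an even and an odd layer therefore lets information flow between positions that a single fixed partition would keep apart.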

Multi-Scale Feature Interaction Module (MFIM)

The Multi-Scale Feature Interaction Module (MFIM) bridges the semantic gap between resolutions. It integrates the hierarchical feature maps produced by the LIAU modules through spatial alignment and upsampling. Each feature map is resized to match the spatial dimensions of the shallowest (highest-resolution) feature map, which makes concatenation and pooling possible. A series of (1 \times 1) convolutions then generates channel-wise weights that recalibrate the fused features through element-wise multiplication, yielding a unified representation that combines global context with local detail.
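One way to picture the fusion described above is the NumPy sketch below. Several details are assumptions made for illustration rather than taken from the text: nearest-neighbour upsampling, equal channel counts at every level, a global average pool before the gating convolution, and a sigmoid to squash the channel weights. The function names are hypothetical.

```python
import numpy as np


def upsample_nearest(feat, factor):
    """Nearest-neighbour upsampling of a (C, H, W) map by an integer factor."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)


def conv1x1(feat, weight):
    """1x1 convolution: a per-position linear map over channels.

    weight has shape (C_out, C_in).
    """
    return np.einsum("oc,chw->ohw", weight, feat)


def mfim_fuse(s3, s4, s5, w_reduce, w_gate):
    # Align every level to the S_3 grid, then concatenate along channels.
    fused = np.concatenate(
        [s3, upsample_nearest(s4, 2), upsample_nearest(s5, 4)], axis=0
    )
    fused = conv1x1(fused, w_reduce)                    # mix the stacked channels
    pooled = fused.mean(axis=(1, 2), keepdims=True)     # (C, 1, 1) channel summary
    gate = 1.0 / (1.0 + np.exp(-conv1x1(pooled, w_gate)))  # weights in (0, 1)
    return fused * gate                                 # element-wise recalibration


rng = np.random.default_rng(0)
c = 4
s3 = rng.standard_normal((c, 16, 16))
s4 = rng.standard_normal((c, 8, 8))
s5 = rng.standard_normal((c, 4, 4))
w_reduce = rng.standard_normal((c, 3 * c)) * 0.1
w_gate = rng.standard_normal((c, c)) * 0.1

fused_out = mfim_fuse(s3, s4, s5, w_reduce, w_gate)
print(fused_out.shape)  # (4, 16, 16)
```

The output keeps the resolution of (S_3), so fine detail needed for small objects survives the fusion while the gate reweights channels using scene-level statistics.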

Transformer Decoder

Once the MFIM outputs a fused feature map, it is fed into the Transformer Decoder. The decoder turns this input into predictions of object classes and bounding boxes using a fixed set of learnable queries. The flattened feature map, supplemented with positional encoding, serves as the memory that the queries attend to, and the queries are iteratively updated through a stack of self-attention and cross-attention blocks.
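The query-based decoding loop can be sketched as follows. This is a simplified illustration in the style of DETR-like decoders, not the FoT implementation: it omits learned projections, multi-head splitting, layer normalization, and feed-forward layers, and the positional encoding, prediction heads, and all sizes are random stand-ins introduced here as assumptions.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    """Scaled dot-product attention for 2-D arrays (tokens, channels)."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def decoder_layer(queries, memory, pos):
    # Self-attention: queries exchange information with each other.
    queries = queries + attention(queries, queries, queries)
    # Cross-attention: queries read from the flattened feature map.
    # Adding pos to the keys only is an assumption of this sketch.
    queries = queries + attention(queries, memory + pos, memory)
    return queries


rng = np.random.default_rng(0)
c, h, w, num_queries, num_classes = 16, 5, 5, 10, 4

fused = rng.standard_normal((c, h, w))          # stand-in for the MFIM output
memory = fused.reshape(c, h * w).T              # flatten to (H*W, C) tokens
pos = rng.standard_normal((h * w, c)) * 0.1     # stand-in positional encoding
queries = rng.standard_normal((num_queries, c))  # learnable in a real model

for _ in range(3):                               # three stacked decoder layers
    queries = decoder_layer(queries, memory, pos)

w_cls = rng.standard_normal((c, num_classes)) * 0.1
w_box = rng.standard_normal((c, 4)) * 0.1
class_logits = queries @ w_cls                          # (num_queries, num_classes)
boxes = 1.0 / (1.0 + np.exp(-(queries @ w_box)))        # normalized (cx, cy, w, h)
print(class_logits.shape, boxes.shape)  # (10, 4) (10, 4)
```

Each query ends up as one candidate detection, so the number of queries caps the number of objects the model can report per frame.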

Optimization Objective

FoT is trained with an end-to-end optimization strategy. The objective combines classification and localization losses, which are computed after predictions have been matched with ground-truth instances during training. Weighting these loss terms balances object classification against the spatial accuracy of the bounding box predictions, allowing the model to refine its detection capabilities iteratively.
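A minimal sketch of matching followed by a combined loss is shown below. It assumes a one-to-one assignment that minimizes a weighted sum of a cross-entropy term and an L1 box term, as in set-prediction detectors; the text does not name the specific losses or weights FoT uses, so `w_cls`, `w_box`, and the brute-force search are illustrative choices. The loss for unmatched predictions (the "no object" term) is omitted.

```python
from itertools import permutations
import math


def l1(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def pair_cost(pred, gt, w_cls=1.0, w_box=5.0):
    """Cost of assigning one prediction to one ground-truth object.

    The weights are hypothetical, not values from the FoT description.
    """
    cls_cost = -math.log(pred["probs"][gt["label"]])  # cross-entropy term
    return w_cls * cls_cost + w_box * l1(pred["box"], gt["box"])


def match_and_loss(preds, gts):
    """Brute-force one-to-one matching that minimizes the total cost.

    Fine for a handful of objects; real systems use the Hungarian algorithm.
    """
    def total(perm):
        return sum(pair_cost(preds[p], g) for p, g in zip(perm, gts))

    best = min(permutations(range(len(preds)), len(gts)), key=total)
    return best, total(best) / max(len(gts), 1)


preds = [
    {"probs": [0.1, 0.9], "box": [0.5, 0.5, 0.2, 0.2]},    # looks like class 1
    {"probs": [0.8, 0.2], "box": [0.1, 0.1, 0.05, 0.05]},  # looks like class 0
    {"probs": [0.5, 0.5], "box": [0.9, 0.9, 0.1, 0.1]},    # spare prediction
]
gts = [
    {"label": 0, "box": [0.1, 0.1, 0.05, 0.05]},
    {"label": 1, "box": [0.5, 0.5, 0.2, 0.2]},
]

best, loss = match_and_loss(preds, gts)
print(best)  # (1, 0): ground truth 0 -> prediction 1, ground truth 1 -> prediction 0
```

Because the boxes of the matched pairs coincide exactly in this toy example, the remaining loss comes only from the classification term.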

Taken together, these components give the Football Transformer (FoT) a robust approach to object detection in complex football scenarios. Its architectural choices target performance while underlining the importance of multi-scale feature extraction and efficient attention in modern computer vision applications.