Advanced Predictive Modeling in Heart Failure Management

Input Variables

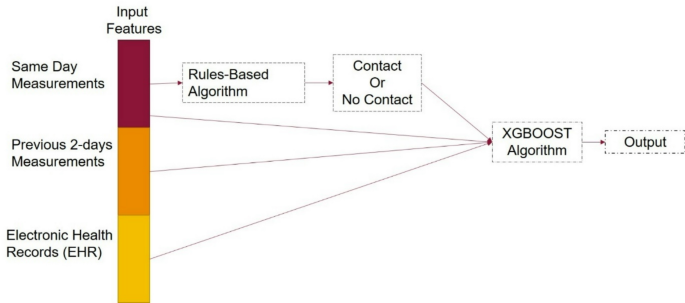

In the evolving landscape of healthcare technology, predictive algorithms play a pivotal role, especially in managing chronic conditions like heart failure (HF). One approach is a rules-based algorithm that monitors key vital parameters. The algorithm uses morning measurements of blood pressure, weight change, heart rate, and symptoms to generate alerts that prompt clinical action.

To strengthen the input, morning measurements from the previous two days are combined with the same-day readings, so each record covers three consecutive days of measurements. Laboratory investigations for each patient are also included to support a comprehensive clinical assessment. These cover a wide range of parameters, such as complete blood count (CBC), lipid profile, serum electrolytes, renal function tests (including serum urea and creatinine), and B-type natriuretic peptide (BNP).
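As a rough illustration of the three-day window described above, the sketch below builds one feature row per day from the same-day reading and the two preceding mornings. The field names (`weight_kg`, `heart_rate`) and the `_lag1`/`_lag2` suffixes are assumptions made for this example, not the study's variable names. Days without enough history are left as `None`.

```python
from typing import Dict, List, Optional

Reading = Dict[str, float]

def three_day_features(readings: List[Reading]) -> List[Dict[str, Optional[float]]]:
    """Build one row per day from the same-day and two previous morning readings.

    `readings` must hold consecutive days, oldest first.
    """
    rows = []
    for i, today in enumerate(readings):
        row: Dict[str, Optional[float]] = dict(today)
        for lag in (1, 2):
            # Look back `lag` days; None when the history does not reach that far.
            prev = readings[i - lag] if i - lag >= 0 else None
            for name in today:
                row[f"{name}_lag{lag}"] = prev.get(name) if prev else None
        rows.append(row)
    return rows

# Hypothetical morning readings for three consecutive days.
readings = [
    {"weight_kg": 80.0, "heart_rate": 72.0},
    {"weight_kg": 80.6, "heart_rate": 75.0},
    {"weight_kg": 81.9, "heart_rate": 81.0},
]
rows = three_day_features(readings)
```

Only the third row has a complete three-day window here; the first two carry `None` in their lag columns.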

Furthermore, the algorithm considers the patient’s medication history, co-morbid conditions, and smoking history. The integration of these input variables provides a holistic view of the patient’s health status. The complete set of these variables can be found in Table 1, presenting an organized structure for efficient analysis.

Outcome Measure

The rules-based algorithm executed by Medly generates alerts based on patients’ vital signs. Alerts are graded on a scale from 1 to 8, with 1 indicating normal status and 8 signaling a high-level emergency that prompts immediate action such as dialing 911.

Alerts numbered 1 to 3 are classified as “No Contact,” indicating that no immediate intervention is needed from healthcare providers. Alerts 4 to 8 signify increasing levels of warning that require a response, such as an adjustment to diuretic treatment or direct communication with the care team. For ease of understanding, we collapse the eight levels into two categories: “Contact” or “No Contact.” This split helps healthcare professionals prioritize patient care efficiently.
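The collapse from eight alert levels to two categories can be sketched as a single function. The function name is hypothetical; the 1 to 3 and 4 to 8 boundaries come from the description above.

```python
def collapse_alert(level: int) -> str:
    """Map an alert level (1-8) to the binary 'Contact' / 'No Contact' category."""
    if not 1 <= level <= 8:
        raise ValueError(f"alert level must be between 1 and 8, got {level}")
    # Levels 1-3 need no provider contact; levels 4-8 require a response.
    return "No Contact" if level <= 3 else "Contact"

categories = [collapse_alert(level) for level in range(1, 9)]
```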

Clinical experts were engaged to manually evaluate these alerts against actual outcomes, classifying them as true positives, true negatives, false positives, and false negatives. Here are the definitions:

- True Positive: An identified “Contact” alert that led to necessary changes in treatment or care escalations.

- True Negative: An absence of a “Contact” alert with no deterioration in the patient’s condition.

- False Positive: A “Contact” alert issued inappropriately, where no intervention was warranted.

- False Negative: A failure to issue a “Contact” alert despite the patient experiencing clinical deterioration.
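To make the four definitions concrete, the sketch below tallies them from two parallel lists and derives the standard summary metrics. The inputs are assumptions for illustration: `alerted` marks whether a “Contact” alert was issued, and `needed` marks the clinicians' judgment that intervention was warranted. The sample values are invented.

```python
from typing import Dict, Sequence

def confusion_counts(alerted: Sequence[bool], needed: Sequence[bool]) -> Dict[str, int]:
    """Count TP/TN/FP/FN for 'Contact' alerts against the clinical judgment."""
    if len(alerted) != len(needed):
        raise ValueError("alerted and needed must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for a, n in zip(alerted, needed):
        if a and n:
            counts["TP"] += 1      # alert issued, intervention was needed
        elif a and not n:
            counts["FP"] += 1      # alert issued, no intervention warranted
        elif not a and n:
            counts["FN"] += 1      # no alert, but the patient deteriorated
        else:
            counts["TN"] += 1      # no alert, no deterioration
    return counts

def summary_metrics(c: Dict[str, int]) -> Dict[str, float]:
    """Sensitivity, specificity, PPV, NPV, and accuracy from confusion counts."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else float("nan")
    tp, tn, fp, fn = c["TP"], c["TN"], c["FP"], c["FN"]
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
    }

# Invented example: six alerts reviewed by clinicians.
alerted = [True, True, False, False, True, False]
needed = [True, False, False, True, True, False]
counts = confusion_counts(alerted, needed)
metrics = summary_metrics(counts)
```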

HF Decompensation Episodes Estimation

Model Development

For predicting heart failure decompensation episodes, a predictive model is built with the XGBoost framework, a gradient-boosted decision-tree ensemble method. The model is trained with a binary classification objective.

The strength of XGBoost lies in its parallel tree boosting, which makes training fast on a wide range of problems. It has become a popular choice for healthcare classification and regression tasks because of its versatility and precision. The implementation uses the XGBoost Python package (version 1.4.2), and hyperparameters are tuned with a randomized search over a parameter grid.

Key hyperparameters include tree depth, learning rate, column subsampling ratio, and class weighting to manage data imbalance. Candidates are evaluated with five-fold stratified cross-validation, using the area under the receiver operating characteristic curve (AUC) as the selection metric.
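The two mechanical parts of this tuning step, drawing random candidates and assigning stratified folds, can be sketched with the standard library alone. The parameter names (`max_depth`, `learning_rate`, `colsample_bytree`, `scale_pos_weight`) are XGBoost's own. The ranges and helper functions are assumptions for illustration, since the text does not give the search space. Scoring each candidate by mean AUC across folds is omitted.

```python
import random
from typing import Dict, List, Sequence

# Hypothetical search space; the study's actual ranges are not stated.
SEARCH_SPACE = {
    "max_depth": lambda rng: rng.randint(2, 10),
    "learning_rate": lambda rng: 10 ** rng.uniform(-3, -0.5),  # log-uniform draw
    "colsample_bytree": lambda rng: rng.uniform(0.5, 1.0),
    "scale_pos_weight": lambda rng: rng.choice([1.0, 2.0, 5.0, 10.0]),
}

def sample_candidates(n: int, seed: int = 0) -> List[Dict[str, float]]:
    """Draw n random hyperparameter settings from SEARCH_SPACE."""
    rng = random.Random(seed)
    return [{name: draw(rng) for name, draw in SEARCH_SPACE.items()} for _ in range(n)]

def stratified_folds(labels: Sequence[int], k: int = 5, seed: int = 0) -> List[int]:
    """Assign each sample a fold id in [0, k), spreading every class evenly."""
    rng = random.Random(seed)
    folds = [0] * len(labels)
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        # Deal the shuffled members of this class round-robin across folds.
        for pos, i in enumerate(idx):
            folds[i] = pos % k
    return folds

candidates = sample_candidates(20)
labels = [0] * 50 + [1] * 5          # invented 10:1 imbalance
folds = stratified_folds(labels, k=5)
```

Stratification matters under class imbalance: without it, a fold could end up with no positive cases, and AUC would be undefined for that fold.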

Following the tuning phase, the model is trained with the chosen parameter set while performance is monitored on a reserved validation set. Training uses early stopping: it halts once the validation metric has failed to improve for a specified patience period, which mitigates overfitting.
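The early-stopping rule can be sketched independently of any training library. The function below assumes a higher-is-better validation metric such as AUC. The function name and the score sequence are invented for illustration.

```python
from typing import Sequence, Tuple

def early_stopping_round(val_scores: Sequence[float], patience: int) -> Tuple[int, int]:
    """Return (best_round, stop_round) for a higher-is-better validation metric.

    Training stops at the first round that is `patience` rounds past the best
    round without any improvement; otherwise it runs to the last round.
    """
    if not val_scores:
        raise ValueError("val_scores must not be empty")
    best_score = float("-inf")
    best_round = -1
    for rnd, score in enumerate(val_scores):
        if score > best_score:
            best_score, best_round = score, rnd
        elif rnd - best_round >= patience:
            return best_round, rnd
    return best_round, len(val_scores) - 1

# Invented validation AUC per boosting round.
val_auc = [0.70, 0.74, 0.77, 0.78, 0.775, 0.779, 0.778, 0.776]
best_round, stop_round = early_stopping_round(val_auc, patience=3)
```

In this example the best score occurs at round 3 and training stops at round 6, after three rounds without improvement. The model from the best round is the one kept.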

Missing values were left to XGBoost’s built-in handling of missing entries, so gaps in the data did not obstruct model training. Continuous variables were standardized to place them on a common scale, and categorical variables were one-hot encoded. Combining patient-reported measures with historical vitals data is intended to improve predictive accuracy.
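A minimal sketch of these two preprocessing steps follows, using only the standard library. Missing entries are represented as `None` and passed through untouched; in practice they would be handed to XGBoost as NaN, which it treats as missing by default. The smoking-status categories and heart-rate values are invented.

```python
from statistics import mean, pstdev
from typing import Dict, List, Optional, Sequence

def standardize(values: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Z-score a continuous column, leaving missing entries (None) as they are."""
    present = [v for v in values if v is not None]
    mu, sigma = mean(present), pstdev(present)
    if sigma == 0:
        sigma = 1.0  # constant column: avoid division by zero
    return [None if v is None else (v - mu) / sigma for v in values]

def one_hot(values: Sequence[Optional[str]]) -> List[Dict[str, int]]:
    """One-hot encode a categorical column; a missing value maps to all zeros."""
    cats = sorted({v for v in values if v is not None})
    return [{f"is_{c}": int(v == c) for c in cats} for v in values]

heart_rate_z = standardize([60.0, None, 80.0, 100.0])
smoking = one_hot(["never", "current", "former", None])
```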

Validation and Evaluation

The dataset comprised records from 342 patients, with daily measurements spanning 1 to 3 years. The data were partitioned 80:20 into training and test sets, with no overlap between them to ensure a fair evaluation. The training set contained 52,932 incidents and the test set 11,161.

Notably, the ratio of “No Contact” to “Contact” cases in the training dataset was 10:1. To reduce this imbalance, random subsampling was used to bring the two classes closer to parity. Performance is reported as sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and accuracy. The decision threshold was selected with reference to the true positive rate of the Medly rules-based algorithm.
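The sketch below illustrates both steps under stated assumptions. It assumes that subsampling drops “No Contact” cases at random until a target ratio is reached, and that the threshold is the highest cut-off whose sensitivity still meets a target true positive rate. The text does not spell out either procedure, and all names and numbers here are illustrative.

```python
import random
from typing import List, Sequence

def undersample(labels: Sequence[int], ratio: float = 1.0, seed: int = 0) -> List[int]:
    """Indices of all positives plus at most ratio * n_pos randomly kept negatives."""
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    keep = min(len(neg), int(round(ratio * len(pos))))
    return sorted(pos + rng.sample(neg, keep))

def threshold_for_sensitivity(scores: Sequence[float], labels: Sequence[int],
                              target: float) -> float:
    """Highest threshold t such that predicting positive when score >= t
    reaches a sensitivity of at least `target`."""
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one positive label")
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        if tp / n_pos >= target:
            return t
    raise ValueError("target sensitivity cannot be reached")

# Invented 10:1 imbalance: twenty 'No Contact' (0) and two 'Contact' (1) cases.
labels = [0] * 20 + [1] * 2
kept = undersample(labels, ratio=1.0)

# Invented model scores and labels for threshold selection.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
truth = [1, 0, 1, 1, 0, 0]
threshold = threshold_for_sensitivity(scores, truth, target=0.65)
```

Fixing the sensitivity first makes the comparison with the rules-based alerts direct: at an equal true positive rate, any difference in false positives reflects the models themselves.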

Interpretability Analysis

To enhance the interpretability of the XGBoost model, feature importance analyses were conducted through various methods, notably using SHAP (SHapley Additive exPlanations) values. These analyses elucidate which factors significantly influence predictions regarding decompensation episodes and help rectify the false positives identified in the rules-based approach.

SHAP Summary

SHAP values quantify each feature’s contribution to an individual prediction, giving a clearer understanding of model outcomes. In the SHAP summary plot, each point represents one sample. The plot shows the direction of each feature’s influence on the prediction and relates that influence to the feature’s value.
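The quantity behind SHAP can be illustrated with a brute-force Shapley computation on a toy model. This is not how the SHAP library handles tree ensembles, which use a much faster tree-specific algorithm. The risk function, feature names, and baseline are all invented for this example.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

def shapley_values(predict: Callable[[List[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values of predict(x) relative to predict(baseline).

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)

    def value(coalition: set) -> float:
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def toy_risk(f: List[float]) -> float:
    """Invented risk score with one interaction term (not the study's model)."""
    weight_gain, heart_rate, symptoms = f
    return 0.5 * weight_gain + 0.01 * heart_rate + 0.3 * weight_gain * symptoms

x = [2.0, 90.0, 1.0]          # hypothetical patient-day
baseline = [0.0, 70.0, 0.0]   # hypothetical reference patient
phi = shapley_values(toy_risk, x, baseline)
```

The contributions sum to the difference between the prediction for `x` and the prediction for the baseline. The interaction term is shared equally between weight gain and symptoms.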

Ablation Studies

Further inquiry into feature influence included a sequence of ablation studies where various combinations of input features were analyzed for their impact on model performance. This strategy identified critical sets of features that contributed to the model’s efficacy in reducing false positive rates.
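One common form of ablation study is leave-one-group-out: retrain and score the model with each feature group removed in turn. The harness below sketches that loop. The group names, feature names, and `stub_evaluate` are hypothetical; the stub only stands in for a real retrain-and-score step so that the example runs. The text does not say which feature combinations the study tested.

```python
from typing import Callable, Dict, List

def ablation_study(feature_groups: Dict[str, List[str]],
                   evaluate: Callable[[List[str]], float]) -> Dict[str, float]:
    """Score the full feature set, then each variant with one group removed."""
    all_features = [f for group in feature_groups.values() for f in group]
    results = {"all features": evaluate(all_features)}
    for name, group in feature_groups.items():
        kept = [f for f in all_features if f not in group]
        results[f"without {name}"] = evaluate(kept)
    return results

# Hypothetical grouping of input variables.
feature_groups = {
    "vitals": ["weight_change", "heart_rate", "systolic_bp"],
    "labs": ["bnp", "creatinine"],
    "history": ["smoker", "diabetes"],
}

def stub_evaluate(features: List[str]) -> float:
    # Stand-in only: returns the feature count, NOT a real performance metric.
    # Replace with a function that retrains the model and returns e.g. its
    # false positive rate at the chosen threshold.
    return float(len(features))

results = ablation_study(feature_groups, stub_evaluate)
```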

Performance Analysis of EHR Data

The performance of the Medly rules-based algorithm and the XGBoost classifier was compared across continuous EHR variables. The comparison offered insight into how false positives from the two models trend with these variables, providing a richer perspective on how data-driven approaches can improve clinical decision-making.

Through the comprehensive evaluation of these methodologies, the potential for augmenting clinical practice through sophisticated predictive modeling becomes evident, paving the way for more proactive healthcare solutions.