Performance Assessment of Feature Representation Techniques in MRI-based Tumor Classification

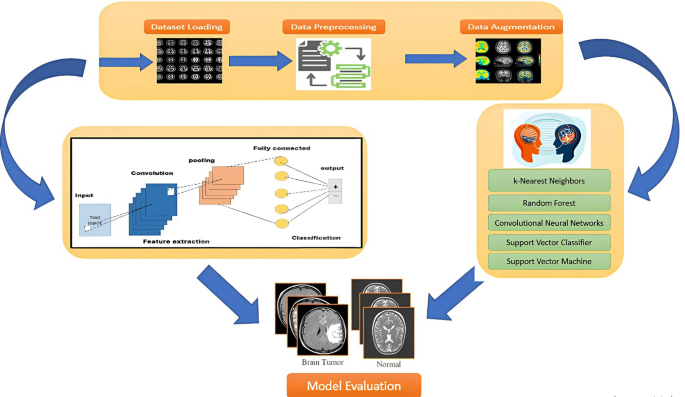

In the evolving landscape of medical imaging, accurately detecting and classifying tumors in magnetic resonance imaging (MRI) scans is crucial for timely intervention and improved patient outcomes. This article assesses the performance of several feature representation techniques, namely Convolutional Neural Networks (CNN), Discrete Wavelet Transform (DWT), Fast Fourier Transform (FFT), Gabor filters, Gray Level Run Length Matrix (GLRLM), and Local Binary Patterns (LBP), in combination with different machine learning and deep learning classifiers.

Addressing Class Imbalance

Class imbalance, particularly between tumor and non-tumor cases, is a significant challenge in large medical imaging datasets. To address it, we apply class weighting during training. This approach assigns larger loss penalties to errors on the minority class (non-tumor), so the classifier cannot achieve a low loss simply by favoring the far more numerous tumor cases. Because accuracy alone can be misleading on imbalanced data, we report precision and the F1-score alongside the standard metrics of accuracy and specificity; together they give a more nuanced picture of model performance.
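As a minimal sketch of the class weighting and metrics described above, assuming a binary task with tumor as the positive label `1`: the weighting uses the common "balanced" heuristic (each class weighted by n_samples / (n_classes × class count)), which the article does not specify, and the helper names are hypothetical.

```python
import numpy as np


def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * n_samples_in_class)."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, specificity, precision and F1 for a binary task."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    # Confusion-matrix counts relative to the positive (tumor) label
    tp = np.sum((y_true == positive) & (y_pred == positive))
    tn = np.sum((y_true != positive) & (y_pred != positive))
    fp = np.sum((y_true != positive) & (y_pred == positive))
    fn = np.sum((y_true == positive) & (y_pred != positive))

    def safe_div(a, b):
        # Return 0.0 instead of dividing by zero for empty denominators
        return float(a) / float(b) if b else 0.0

    precision = safe_div(tp, tp + fp)
    sensitivity = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, len(y_true)),
        "sensitivity": sensitivity,
        "specificity": safe_div(tn, tn + fp),
        "precision": precision,
        "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
    }
```

The returned weight dictionary can be passed as the `class_weight` argument of scikit-learn classifiers such as `SVC`, so that misclassified minority samples contribute more to the loss.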

CNN Feature Space

CNNs can serve both as feature extractors and as classifiers. In the feature-extraction setting, a CNN pre-trained on ImageNet is used to extract deep features from MRI images. Its convolutional layers are kept frozen (not fine-tuned), and the resulting feature vectors are fed into classical classifiers such as Support Vector Machines (SVM), K-Nearest Neighbors (KNN), and Probabilistic Neural Networks (PNN). This fixed-feature approach is used mainly to save training time and computational resources, while also evaluating how well transfer learning combines with classical methods.
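The PNN named above is less familiar than SVM or KNN, so here is a minimal NumPy sketch of a classical Parzen-window PNN. It assumes the frozen-CNN feature vectors are already available as rows of `X` (the extraction step is not shown), equal class priors, and a hypothetical smoothing width `sigma`; it is not the article's implementation.

```python
import numpy as np


class ProbabilisticNeuralNetwork:
    """Parzen-window PNN over fixed feature vectors (equal class priors assumed)."""

    def __init__(self, sigma=1.0):
        self.sigma = sigma  # hypothetical Gaussian kernel width

    def fit(self, X, y):
        # A PNN simply stores the training patterns; there is no iterative training
        self.X_ = np.asarray(X, dtype=float)
        self.y_ = np.asarray(y)
        self.classes_ = np.unique(self.y_)
        return self

    def predict_proba(self, X):
        X = np.asarray(X, dtype=float)
        # Pattern layer: Gaussian kernel between every query and every stored pattern
        sq_dist = ((X[:, None, :] - self.X_[None, :, :]) ** 2).sum(axis=2)
        kernel = np.exp(-sq_dist / (2.0 * self.sigma ** 2))
        # Summation layer: average kernel response per class
        scores = np.stack(
            [kernel[:, self.y_ == c].mean(axis=1) for c in self.classes_], axis=1
        )
        # Normalise to class-membership probabilities (guard against all-zero rows)
        totals = scores.sum(axis=1, keepdims=True)
        totals[totals == 0] = 1.0
        return scores / totals

    def predict(self, X):
        # Output layer: choose the class with the largest estimated density
        return self.classes_[np.argmax(self.predict_proba(X), axis=1)]
```

Because the PNN stores every training vector, prediction cost grows with the training set size, which is one reason compact CNN feature vectors suit it better than raw pixels.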

When used end to end, a CNN learns features and performs classification simultaneously: the convolutional layers act as adaptive feature extractors, and their outputs pass through fully connected layers that produce the class predictions. The results in Table 2 show that SVC (the SVM classifier) outperformed the other classifiers in the CNN feature space, reaching 96.6% accuracy and 98% specificity. SVC also kept precision and specificity balanced, whereas Random Forest (RF) and CNN produced reliable positive predictions but with less balanced sensitivity.

Discrete Wavelet Transform (DWT) Feature Space

In the DWT feature space, SVC again led, achieving 95.9% accuracy and 97.8% specificity (Table 3). CNN and RF also classified well, both reaching 100% precision, and KNN reached a sensitivity of 93%, indicating that it identified most true positives. Because the DWT provides a multi-resolution analysis, it captures both frequency and spatial information. However, the choice of wavelet function and decomposition level strongly affects the results, so applying this technique effectively requires expertise.
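To make the multi-resolution idea concrete, the sketch below implements a 2-D Haar DWT by hand and summarizes each sub-band with simple statistics. The Haar wavelet, the two decomposition levels, and the mean-absolute/energy statistics are illustrative assumptions; the article does not say which wavelet or features it used. In practice a library such as PyWavelets offers many more wavelet families.

```python
import numpy as np


def haar_dwt2(image):
    """One level of the orthonormal 2-D Haar DWT.

    Returns (approximation, horizontal detail, vertical detail, diagonal detail).
    """
    x = np.asarray(image, dtype=float)
    # Crop to even dimensions so samples pair up cleanly
    x = x[: x.shape[0] // 2 * 2, : x.shape[1] // 2 * 2]
    # Filter along each row (pairs of columns): low-pass = sum, high-pass = difference
    lo = (x[:, 0::2] + x[:, 1::2]) / np.sqrt(2)
    hi = (x[:, 0::2] - x[:, 1::2]) / np.sqrt(2)
    # Filter along each column (pairs of rows)
    approx = (lo[0::2, :] + lo[1::2, :]) / np.sqrt(2)
    h_detail = (lo[0::2, :] - lo[1::2, :]) / np.sqrt(2)
    v_detail = (hi[0::2, :] + hi[1::2, :]) / np.sqrt(2)
    d_detail = (hi[0::2, :] - hi[1::2, :]) / np.sqrt(2)
    return approx, h_detail, v_detail, d_detail


def dwt_features(image, levels=2):
    """Mean absolute value and energy of every detail band, plus the final approximation."""
    feats = []
    approx = np.asarray(image, dtype=float)
    for _ in range(levels):
        # Each level re-decomposes the previous approximation at half the resolution
        approx, h, v, d = haar_dwt2(approx)
        for band in (h, v, d):
            feats += [np.abs(band).mean(), np.mean(band ** 2)]
    feats += [np.abs(approx).mean(), np.mean(approx ** 2)]
    return np.array(feats)
```

Changing `levels` or swapping the Haar filters for another wavelet changes the feature vector, which is the sensitivity to wavelet choice and decomposition level noted above.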

FFT Feature Space

The FFT feature space also yielded strong results, particularly with SVC, which achieved 97.4% specificity and 95.2% accuracy (Table 4). CNN maintained a precision of 93%, while KNN detected true positives well, with a sensitivity of 97%. RF, in contrast, combined high sensitivity with low specificity, indicating that its predictions were skewed by the class imbalance. FFT is computationally efficient, comparable to DWT; however, its magnitude spectrum carries little spatial information, which may limit its effectiveness for localized features.
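A minimal sketch of FFT-based features, assuming (this is not stated in the article) that each image is summarized by the log-energy of its centered magnitude spectrum in concentric frequency bands. Keeping only magnitudes discards the phase, which is where spatial position is encoded, illustrating the loss of spatial information noted above.

```python
import numpy as np


def fft_band_features(image, n_bands=4):
    """Log-energy of the centred magnitude spectrum in concentric radial bands."""
    x = np.asarray(image, dtype=float)
    x = x - x.mean()  # remove the DC component so it does not dominate the lowest band
    # Magnitude only: the phase (spatial position information) is discarded here
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(x)))
    rows, cols = spectrum.shape
    yy, xx = np.indices(spectrum.shape)
    # Normalised radial frequency: 0 at the centre (DC), about 1 at the edge midpoints
    radius = np.hypot((yy - rows // 2) / (rows / 2), (xx - cols // 2) / (cols / 2))
    edges = np.linspace(0.0, radius.max(), n_bands + 1)
    feats = []
    for i in range(n_bands):
        # Half-open bands, with the last band closed so every frequency is counted once
        if i == n_bands - 1:
            upper = radius <= edges[i + 1]
        else:
            upper = radius < edges[i + 1]
        mask = (radius >= edges[i]) & upper
        feats.append(np.log1p(np.sum(spectrum[mask] ** 2)))
    return np.array(feats)
```

Translating a lesion within the image changes only the spectrum's phase, so these features stay the same: useful for texture, but blind to where a structure sits.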

Gabor Feature Space

The Gabor representation yields moderate results. SVC again performed best, with 93.6% accuracy and 95.4% specificity (Table 5). CNN achieved a precision of 90%, but KNN and PNN had lower accuracies, suggesting that Gabor features lack the discriminative power of the alternative techniques. Gabor filters excel at extracting texture and edge information but are computationally intensive because they must be applied at multiple scales and orientations. Moreover, they capture local structure well but tend to miss global patterns.
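The sketch below builds a small bank of real-valued Gabor kernels and summarizes each filter response by its mean and standard deviation. The wavelengths, four orientations, aspect ratio `gamma=0.5`, and the `sigma = 0.56 * wavelength` rule (roughly a one-octave bandwidth) are illustrative assumptions, not the article's settings. The nested loop over scales and orientations shows where the computational cost comes from: every pair requires a full-image convolution.

```python
import numpy as np
from scipy.signal import fftconvolve


def gabor_kernel(sigma, theta, wavelength, gamma=0.5, psi=0.0, size=None):
    """Real part of a 2-D Gabor kernel: Gaussian envelope times an oriented cosine."""
    if size is None:
        size = int(np.ceil(3 * sigma))  # half-width covering about 3 sigma
    y, x = np.mgrid[-size:size + 1, -size:size + 1].astype(float)
    # Rotate coordinates so the carrier oscillates along direction theta
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength + psi)
    kernel = envelope * carrier
    return kernel - kernel.mean()  # zero-mean so flat regions give no response


def gabor_features(image, wavelengths=(4, 8), n_orientations=4):
    """Mean and std of the response magnitude for each (scale, orientation) pair."""
    x = np.asarray(image, dtype=float)
    feats = []
    for lam in wavelengths:
        for k in range(n_orientations):
            theta = k * np.pi / n_orientations
            kern = gabor_kernel(sigma=0.56 * lam, theta=theta, wavelength=lam)
            # One full-image convolution per filter in the bank
            response = np.abs(fftconvolve(x, kern, mode="same"))
            feats += [response.mean(), response.std()]
    return np.array(feats)
```

With two scales and four orientations the bank already needs eight convolutions per image; each filter only sees a neighbourhood of a few sigma, which is why global patterns are missed.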

GLRLM Feature Space

GLRLM-based features performed robustly, with SVC achieving 94.8% accuracy and 96.6% specificity (Table 6). KNN showed a good balance between recall and precision, and both CNN and RF made accurate positive predictions. Despite some variation across classifiers, GLRLM captures texture information effectively, although it is limited in representing spatial relationships in images.
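For reference, a minimal GLRLM sketch for horizontal (0°) runs, with three classical run-length statistics (short-run emphasis, long-run emphasis, run-length non-uniformity). The quantization to 8 grey levels and the single direction are simplifying assumptions; fuller implementations usually accumulate runs in four directions (0°, 45°, 90°, 135°). Because only runs along single lines are counted, the matrix says little about how regions relate spatially.

```python
import numpy as np


def glrlm_horizontal(image, levels=8):
    """Grey-level run-length matrix for horizontal runs.

    P[g, r - 1] counts runs of length r at quantised grey level g.
    """
    x = np.asarray(image, dtype=float)
    # Quantise intensities into `levels` equal-width bins
    lo, hi = x.min(), x.max()
    if hi == lo:
        q = np.zeros(x.shape, dtype=int)
    else:
        q = np.floor((x - lo) / (hi - lo) * levels).astype(int)
        q = np.clip(q, 0, levels - 1)
    P = np.zeros((levels, x.shape[1]), dtype=int)
    for row in q:
        run_val, run_len = row[0], 1
        for v in row[1:]:
            if v == run_val:
                run_len += 1
            else:
                P[run_val, run_len - 1] += 1
                run_val, run_len = v, 1
        P[run_val, run_len - 1] += 1  # close the final run in the row
    return P


def run_length_features(P):
    """Short-run emphasis, long-run emphasis and run-length non-uniformity."""
    P = P.astype(float)
    n_runs = P.sum()
    r = np.arange(1, P.shape[1] + 1)  # run lengths 1..max, broadcast across columns
    sre = (P / r ** 2).sum() / n_runs
    lre = (P * r ** 2).sum() / n_runs
    rln = (P.sum(axis=0) ** 2).sum() / n_runs
    return np.array([sre, lre, rln])
```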

LBP Feature Space

LBP features produced the best outcomes among the feature spaces compared, with SVC achieving 98.06% accuracy (Table 7) and balanced sensitivity and specificity. CNN and RF followed with 97.8% and 95% accuracy, respectively. This strong performance reflects LBP's ability to capture local texture patterns while remaining computationally efficient and simple to implement.
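The simplicity of LBP is easy to see in code. A minimal sketch of the basic 8-neighbour, radius-1 operator and its 256-bin histogram follows; the neighbour ordering and the use of the plain (not rotation-invariant, not "uniform") variant are assumptions, since the article does not specify its LBP configuration.

```python
import numpy as np


def lbp_8_1(image):
    """Basic 3x3 LBP: threshold the 8 neighbours against the centre pixel."""
    x = np.asarray(image, dtype=float)
    center = x[1:-1, 1:-1]
    # Neighbour offsets in clockwise order, starting at the top-left pixel
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    rows, cols = x.shape
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = x[1 + dy: rows - 1 + dy, 1 + dx: cols - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre
        codes |= (neighbour >= center).astype(np.int64) << bit
    return codes


def lbp_histogram(image):
    """Normalised 256-bin histogram of LBP codes, used as the feature vector."""
    codes = lbp_8_1(image)
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()
```

The whole operator is eight comparisons per pixel plus a histogram, which is why LBP is cheap compared with a multi-filter Gabor bank. scikit-image's `local_binary_pattern` provides configurable variants if rotation invariance is needed.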

Statistical Analysis of Feature Representation Methods

To assess the contribution of each feature representation scheme, we computed chi-square statistics and the corresponding p-values (Table 8). CNN, DWT, and LBP contributed significantly to classification accuracy (p < 0.05), whereas FFT, GLRLM, and Gabor did not (p > 0.05), suggesting that these techniques contribute less to overall model performance.
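The article does not describe how the contingency tables behind Table 8 were built. Assuming each test is run on a table of counts (for example, correct versus incorrect predictions under two configurations, a hypothetical setup), the Pearson chi-square statistic and its p-value can be computed as sketched below, without Yates' continuity correction. None of the counts one would pass in come from the article.

```python
import numpy as np
from scipy.stats import chi2


def chi_square_test(table):
    """Pearson chi-square test of independence for an r x c table of counts."""
    observed = np.asarray(table, dtype=float)
    row_totals = observed.sum(axis=1, keepdims=True)
    col_totals = observed.sum(axis=0, keepdims=True)
    # Expected counts if the row and column variables were independent
    expected = row_totals * col_totals / observed.sum()
    statistic = float(((observed - expected) ** 2 / expected).sum())
    dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)
    # Upper-tail probability of the chi-square distribution with `dof` degrees of freedom
    p_value = float(chi2.sf(statistic, dof))
    return statistic, p_value, dof
```

A p-value below 0.05 rejects independence at the 5% level, which is the threshold used to separate the significant from the non-significant schemes above.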

Performance Metrics on Large Training Datasets

On larger datasets, LBP feature representation generally achieved the highest success rates. On a dataset of 7,023 MRI images, with 5,723 used for training and 1,311 for testing, LBP again proved superior. With LBP features, CNN achieved 98.9% accuracy, surpassing SVC's 96.7% (Table 9). The CNN's balanced sensitivity and specificity indicate that it handles the imbalanced data well.

SHAP Analysis for Interpretability

Using SHAP analysis (Fig. 2), we identified the most influential LBP features, which correspond to high-contrast texture and edge patterns typically associated with various types of brain tumors. These findings support the model's clinical interpretability, which is essential for applications in medical diagnostics.

Individual Class Accuracy

Table 10 reports the accuracy for each individual tumor class across the different datasets for the CNN and SVC classifiers. This per-class evaluation highlights how the strengths of each method vary with the data distribution.
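Per-class accuracy is commonly computed as the fraction of each true class that is predicted correctly (per-class recall). The sketch below assumes that interpretation, which the article does not state; labels can be any hashable values, and the function name is hypothetical.

```python
import numpy as np


def per_class_accuracy(y_true, y_pred, labels):
    """Fraction of samples of each true class that were predicted correctly."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    result = {}
    for label in labels:
        mask = y_true == label
        # NaN marks a class with no samples in this evaluation set
        result[label] = float(np.mean(y_pred[mask] == label)) if mask.any() else float("nan")
    return result
```

Reporting this per class exposes weaknesses that a single overall accuracy can hide when one tumor class dominates the test set.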

Performance in Resource-Constrained Environments

Compared with contemporary transformer-based models, our proposed model was further optimized to reduce computational cost and resource requirements. Its lower floating-point operation count (FLOPs) and memory use make it feasible to deploy in resource-constrained settings such as rural healthcare.
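The article reports reductions in FLOPs without giving formulas. As a rough sketch under common conventions (one multiply-add counted as 2 FLOPs; biases, normalization, activations and softmax ignored), the hypothetical helpers below estimate the cost of a convolutional layer and of a standard transformer encoder block, whose attention term grows quadratically with the number of tokens. They are not the article's measurement method.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_flops(c_in, c_out, kernel, h_out, w_out):
    """Approximate FLOPs of a square-kernel 2-D convolution (2 FLOPs per multiply-add)."""
    return 2 * c_in * kernel * kernel * c_out * h_out * w_out


def transformer_block_flops(n_tokens, d_model, mlp_ratio=4):
    """Approximate FLOPs of one encoder block: projections, attention and MLP."""
    # Q, K, V projections (3 * d_model outputs) plus the output projection
    projections = 2 * n_tokens * d_model * (3 * d_model) + 2 * n_tokens * d_model * d_model
    # Q K^T scores plus the attention-weighted sum of V: quadratic in n_tokens
    attention = 2 * n_tokens * n_tokens * d_model * 2
    # Two-layer MLP that expands to mlp_ratio * d_model and projects back
    mlp = 2 * 2 * n_tokens * d_model * (mlp_ratio * d_model)
    return projections + attention + mlp
```

For example, `conv2d_flops(3, 64, 3, 224, 224)` gives 173,408,256 FLOPs for a 3×3, 64-filter layer on a 224×224 RGB input. The quadratic attention term is why transformer cost rises quickly with image resolution (more tokens).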

Comparative Analysis of Existing Models with Proposed Models

The study's novelty lies in combining diverse algorithms and feature representation strategies, offering a well-rounded approach to MRI tumor classification. Previous studies in the literature reported lower accuracy rates, whereas our proposed model achieved 98.9% accuracy, reinforcing the advantages of combining LBP features with a CNN classifier.

Summary of Findings

Through a detailed performance assessment, we have shown significant differences in how feature extraction methods interact with machine learning and deep learning classifiers in MRI-based tumor detection. These insights can inform the development of reliable diagnostic systems that improve classification accuracy and patient outcomes in real-world medical imaging.